- The paper demonstrates that fine-tuned BERT models outperform traditional NLP methods in MBTI classification from social media text.

- It employs both BERT and DistilBERT variants with a fine-tuning approach and 10-fold cross-validation on multilingual datasets.

- Experimental results reveal higher F1 scores in MBTI trait classification, highlighting the effectiveness of context-aware embeddings.

Myers-Briggs Personality Classification from Social Media Text Using Pre-Trained LLMs

This paper presents a method for Myers-Briggs Type Indicator (MBTI) personality classification by leveraging the power of Bidirectional Encoder Representations from Transformers (BERT) models for analyzing textual data from social media platforms. The research focuses on improving the efficacy of personality detection over traditional techniques and validating these methods across multiple languages.

Introduction

Personality classification involves understanding and predicting human behavior through text analysis. The Myers-Briggs Type Indicator (MBTI), less commonly applied compared to the Big Five model, is one such framework for personality profiling. This paper explores the application of advanced NLP models to the task of classifying MBTI types based on written social media content. The BERT model is employed here due to its ability to process contextual language representations, an improvement over bag-of-words or static embeddings such as Word2Vec.

Methodology

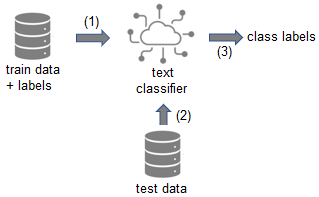

The research employs a fine-tuning approach for pre-trained BERT models to conduct MBTI personality classification. The BERT model is supplemented with a DistilBert variant for efficiency, using 32-token sequences and a relatively reduced dimensional space via 512-neuron dense layers followed by dropout and output layers.

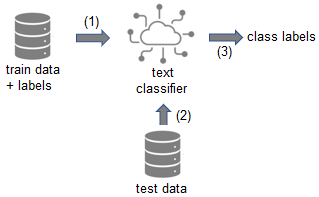

Figure 1: Overview of experiment pipeline used for training and testing MBTI classifiers.

A comparative framework is established, including baseline models using word n-gram logistic regression, and an LSTM model with static word embeddings. The datasets utilized include the MBTI9k Reddit corpus for English and the TwiSty corpus for other languages like German, Spanish, French, Italian, Dutch, and Portuguese, focusing on 10-fold cross-validation for robustness.

Experimental Results

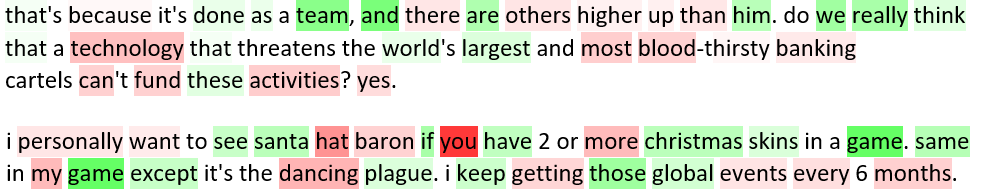

The results from the experiments notably indicate that BERT-based methods consistently outperform traditional text classification models, achieving higher F1 scores across various language datasets for MBTI classification. In particular, tasks such as distinguishing between Extraversion versus Introversion (EI) and other MBTI binary categories yielded better performance metrics, thus supporting the hypothesis on the superiority of context-aware embeddings.

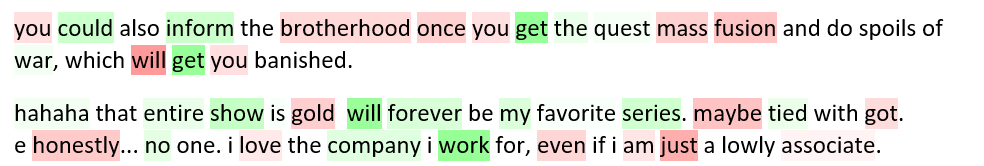

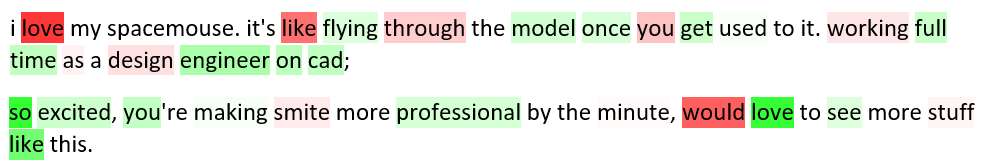

Figure 2: Extraversion and Introversion feature correlations showcasing word importance in classification.

Furthermore, the paper highlights that such improvements vary minimally across task-specific classification performance but maintain a general trend of superiority over static methods like Logistic Regression with TF-IDF.

Comparative Analysis

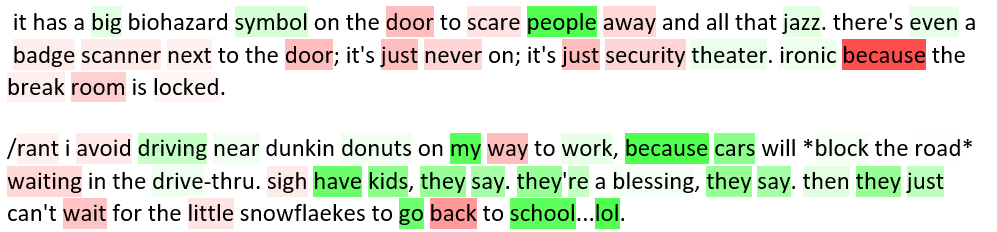

The BERT model achieved notable improvements over previous results [Gjurković, Šnajder 2018] with the MBTI9k dataset, and comparisons showed similar trends in the TwiSty corpus. Across tasks and languages, BERT yielded higher precision and recall, underlining its effectiveness in diverse linguistic contexts, predominantly due to its sophisticated word and sentence embeddings.

Implications and Future Directions

The findings suggest that employing BERT for MBTI classification could significantly impact personality-based applications, particularly in industry domains requiring nuanced human-computer interaction. The approach shows promise for expansion into broader domains and finer granularity with other transformer-based models and multi-task learning frameworks.

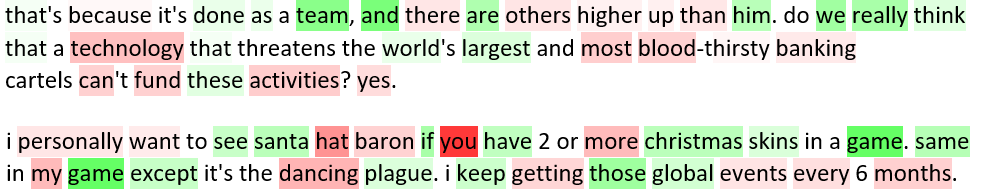

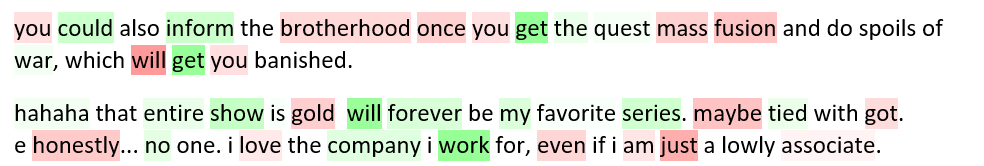

Figure 3: Feature analysis for Intuition vs. Sensing classification indicating word significance in predictions.

Challenges include adapting these methods to corpora with less existing training data or exploring domain adaption strategies like adversarial learning for cross-domain scalability. Continuous exploration of advanced models like RoBERTa, XLNet, and GPT variants for personality profiling remains a crucial future consideration.

Conclusion

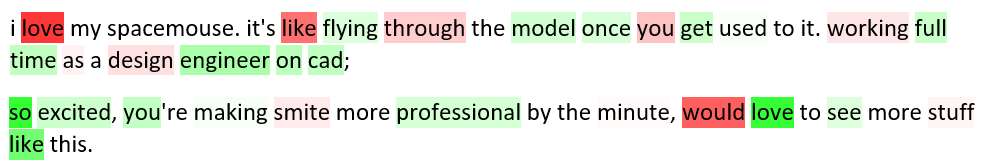

This paper demonstrates the effectiveness of leveraging BERT models for automated personality classification from social media text, achieving marked improvements over conventional text-based approaches. It steers the conversation towards more contextually aware models, advocating for further exploration into deployment across varied linguistic and application domains.

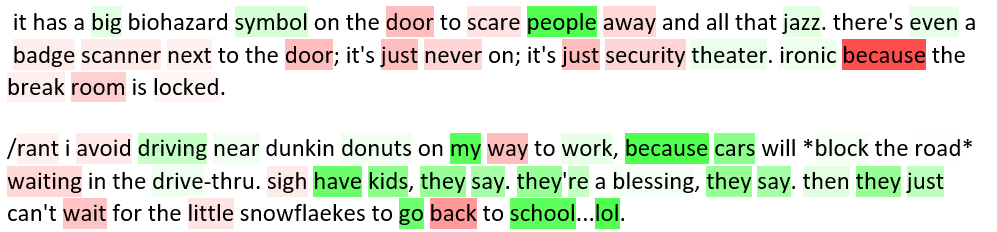

Figure 4: Distribution and correlation of Thinking vs. Feeling traits within datasets.

Though advanced, these methods hint at greater potential yet to be fully realized, especially in specialized and underrepresented linguistic applications, making them pivotal for future AI-driven personality assessment tasks.

Figure 5: Example features illustrating Perceiving vs. Judging categorizations derived from textual analysis.

The paper underscores the need for further technical advancements to handle nuances in personality typing and expands on the potential utility of NLP in psychological and social domains.