Learning the policy for mixed electric platoon control of automated and human-driven vehicles at signalized intersection: a random search approach (2206.12052v1)

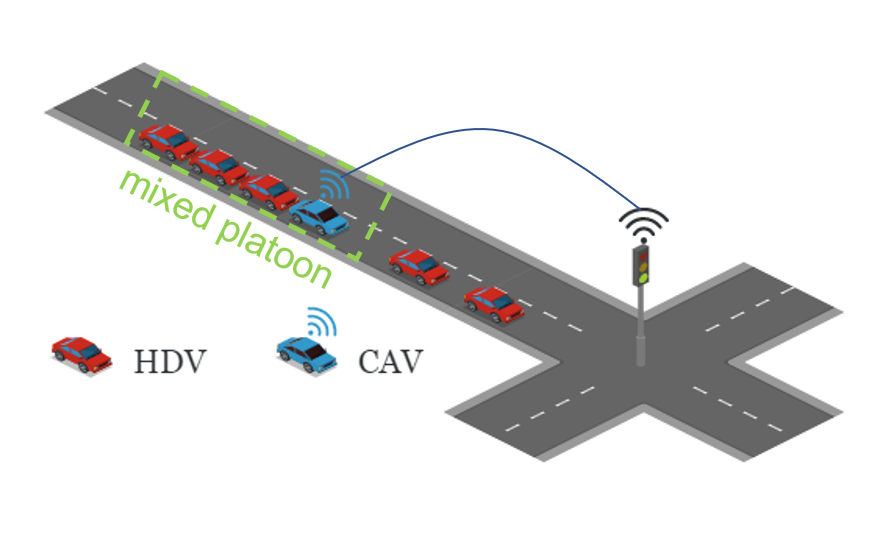

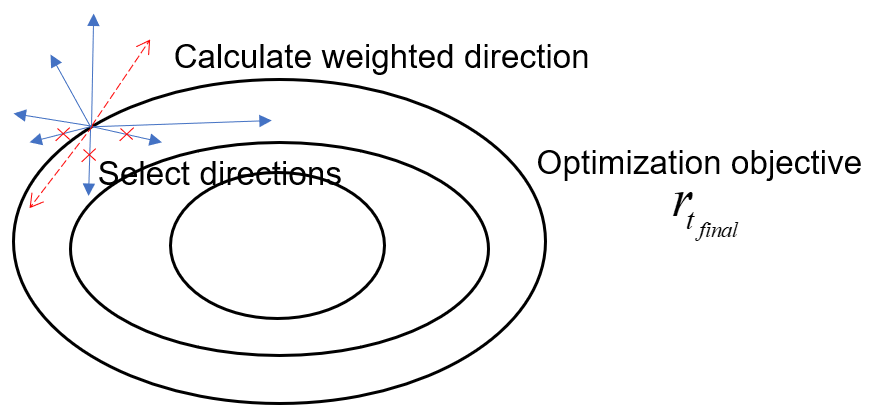

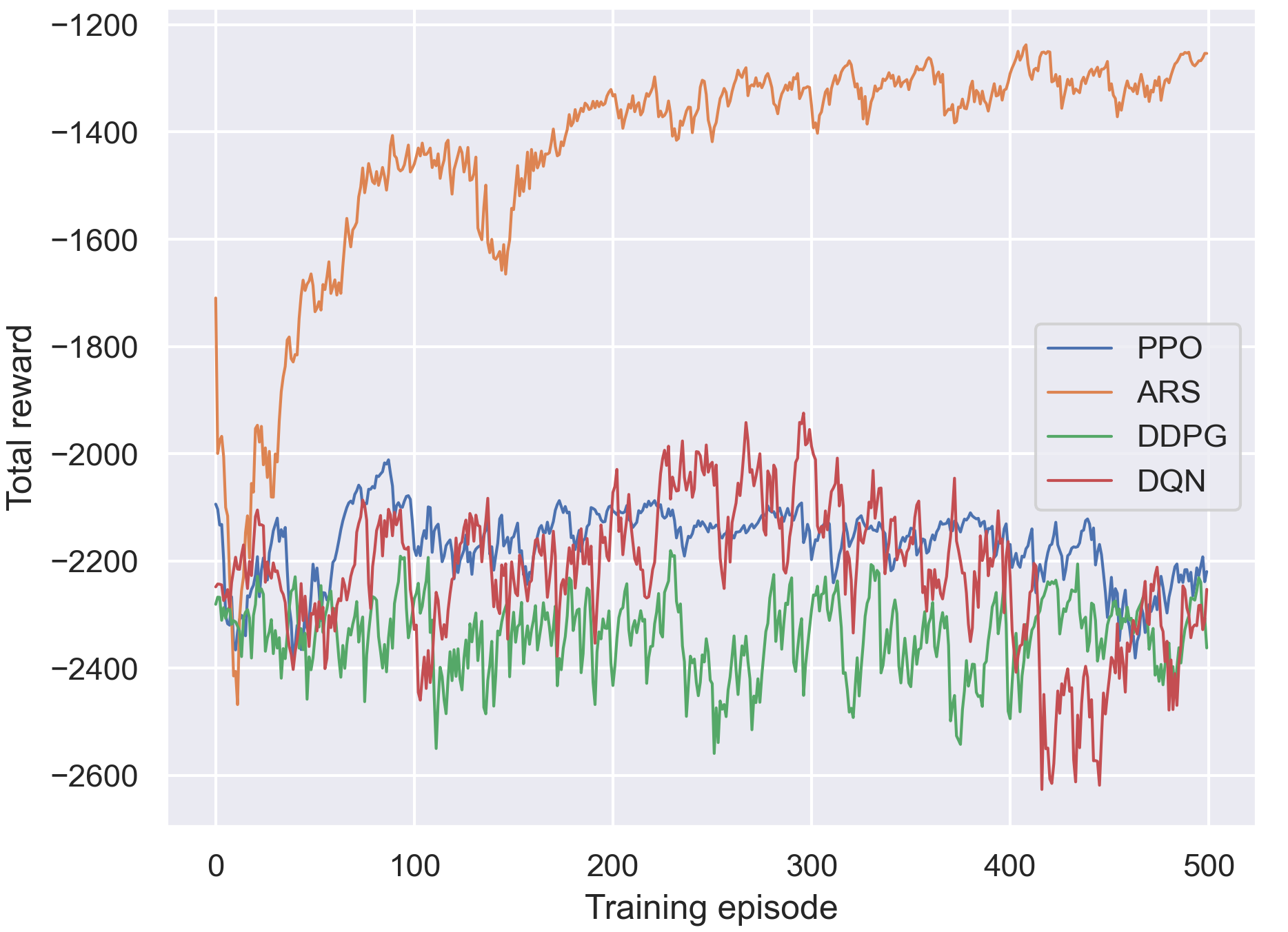

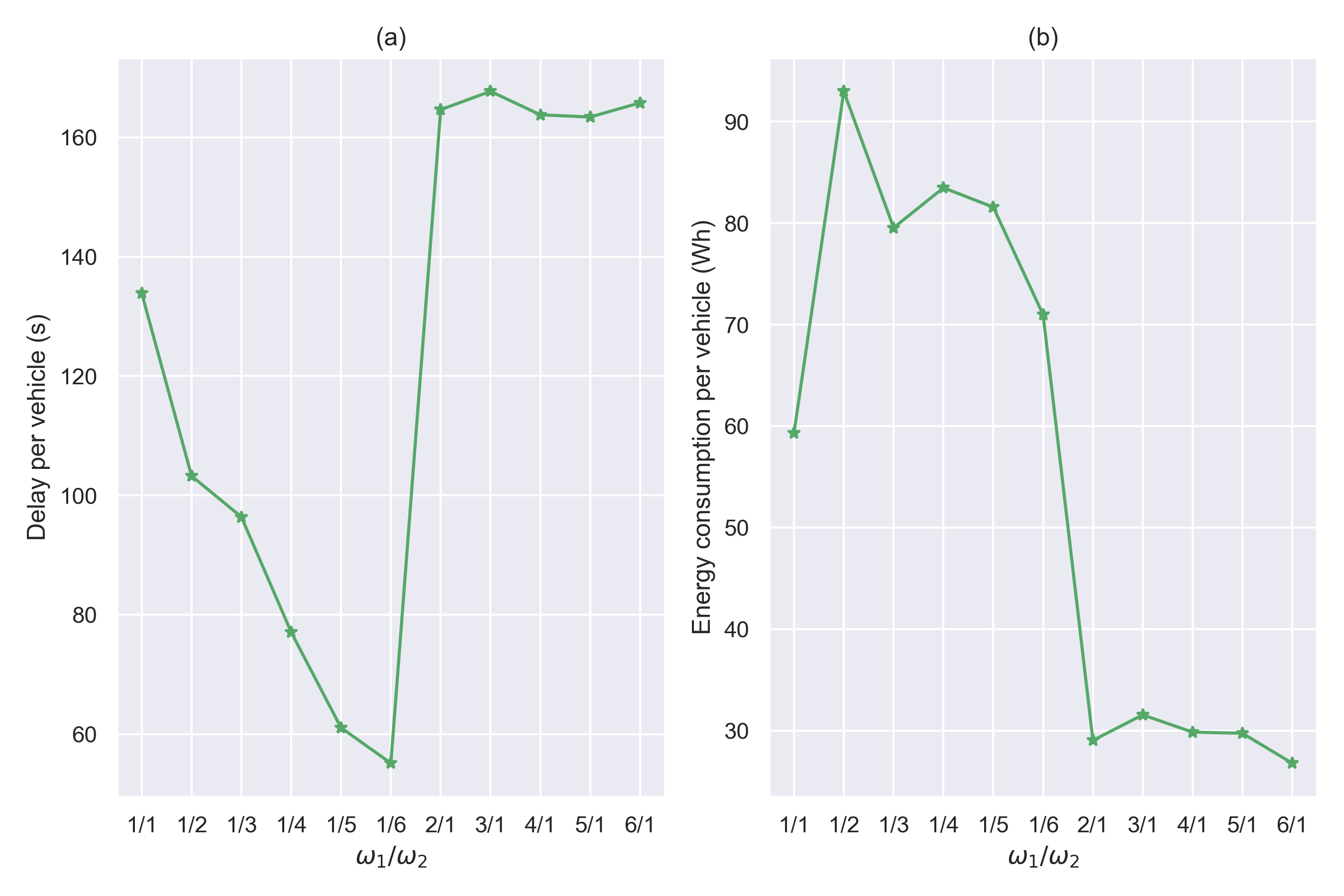

Abstract: The upgrading and updating of vehicles have accelerated in the past decades. Driven by the need for environmental friendliness and intelligence, electric vehicles (EVs) and connected and automated vehicles (CAVs) have become new components of transportation systems. This paper develops a reinforcement learning framework to implement adaptive control for an electric platoon composed of CAVs and human-driven vehicles (HDVs) at a signalized intersection. Firstly, a Markov Decision Process (MDP) model is proposed to describe the decision process of the mixed platoon. A novel state representation and reward function are designed for the model to consider the behavior of the whole platoon. Secondly, in order to deal with the delayed reward, an Augmented Random Search (ARS) algorithm is proposed. The control policy learned by the agent can guide the longitudinal motion of the CAV, which serves as the leader of the platoon. Finally, a series of simulations are carried out in the SUMO simulation suite. Compared with several state-of-the-art (SOTA) reinforcement learning approaches, the proposed method can obtain a higher reward. Meanwhile, the simulation results demonstrate the effectiveness of the delayed reward, which is designed to outperform a distributed reward mechanism. The sensitivity analysis reveals that, compared with normal car-following behavior, energy can be saved to different extents (39.27%-82.51%) by adjusting the relative importance of the optimization goals. On the premise that travel delay is not sacrificed, the proposed control method can save up to 53.64% of electric energy.
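To make the role of random search with a delayed reward more concrete, the following is a minimal sketch of a basic ARS-style update on a toy problem. It is not the paper's implementation: the environment in `rollout`, the two-parameter linear policy, and all names and hyperparameters (`ars_train`, `n_dirs`, `top_b`, `step`, `noise`) are assumptions chosen for illustration. The toy episode returns a single scalar only at its end, which stands in for a delayed reward that trades off a terminal error against accumulated control effort.

```python
import random
import statistics


def rollout(theta, horizon=20):
    """Hypothetical toy episode: drive x from 1.0 toward 0 with little effort.

    The return is computed once, at the end of the episode (delayed reward).
    """
    x, effort = 1.0, 0.0
    for _ in range(horizon):
        a = theta[0] * x + theta[1]      # linear policy (assumed form)
        a = max(-1.0, min(1.0, a))       # clip the action to [-1, 1]
        x += 0.1 * a                     # simple integrator dynamics
        effort += a * a                  # stand-in for energy use
    return -(x * x) - 0.01 * effort


def ars_train(n_iters=200, n_dirs=8, top_b=4, step=0.05, noise=0.1, seed=0):
    """Basic random search with top-direction selection and reward scaling."""
    rng = random.Random(seed)
    theta = [0.0, 0.0]
    for _ in range(n_iters):
        samples = []
        for _ in range(n_dirs):
            # Sample a random direction and evaluate both perturbed policies.
            delta = [rng.gauss(0.0, 1.0) for _ in theta]
            r_plus = rollout([t + noise * d for t, d in zip(theta, delta)])
            r_minus = rollout([t - noise * d for t, d in zip(theta, delta)])
            samples.append((r_plus, r_minus, delta))
        # Keep the directions whose better rollout scored highest.
        samples.sort(key=lambda s: max(s[0], s[1]), reverse=True)
        best = samples[:top_b]
        # Scale the step by the spread of the rewards that were kept.
        rewards = [r for s in best for r in s[:2]]
        sigma = statistics.pstdev(rewards) or 1.0
        for i in range(len(theta)):
            grad = sum((rp - rm) * d[i] for rp, rm, d in best)
            theta[i] += step / (top_b * sigma) * grad
    return theta


if __name__ == "__main__":
    learned = ars_train()
    print("initial return:", rollout([0.0, 0.0]))
    print("learned return:", rollout(learned))
```

Because the update only needs one scalar return per rollout, it does not depend on when within the episode the reward is issued, which is one reason a search of this kind is a natural fit for delayed rewards. In the paper's setting the rollout would be a SUMO simulation of the platoon rather than this toy integrator.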
