- The paper introduces a neuro-symbolic framework that integrates deep neural networks with Probabilistic Soft Logic for joint inference and learning.

- It utilizes energy-based models and Łukasiewicz logic to enhance prediction accuracy, achieving up to 93.9% accuracy on MNIST addition in low-data scenarios.

- The framework is also efficient, achieving a 40× speedup over other NeSy approaches on large-scale tasks such as citation network node classification.

NeuPSL: Neural Probabilistic Soft Logic

Introduction

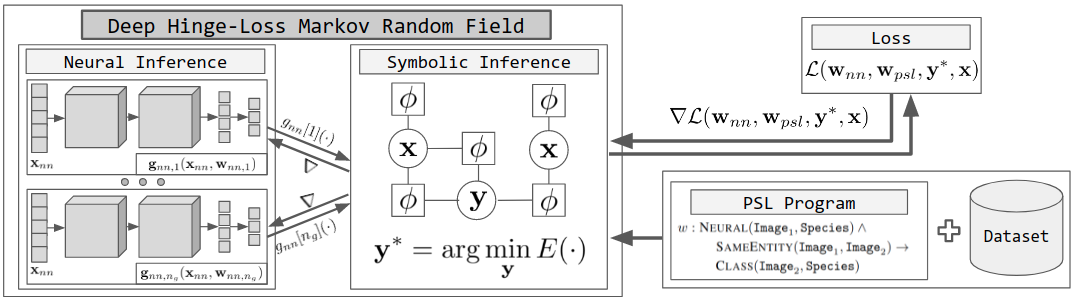

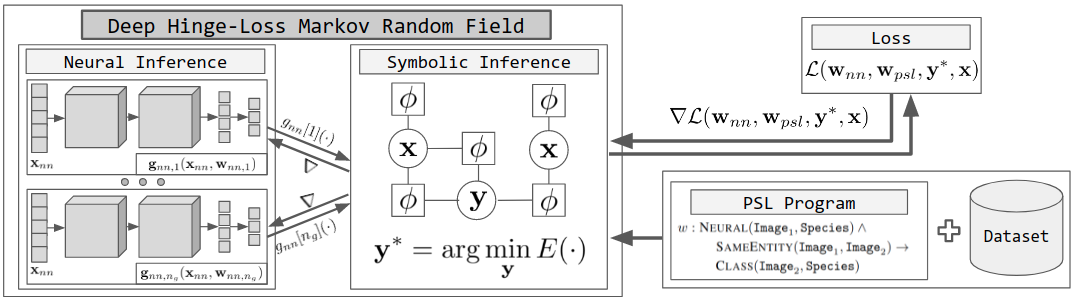

The paper introduces "NeuPSL: Neural Probabilistic Soft Logic," a neuro-symbolic (NeSy) framework that integrates deep neural networks with symbolic reasoning to perform joint inference and learning. The authors combine the perceptual strengths of neural networks with the scalable inference of symbolic logic. NeuPSL extends Probabilistic Soft Logic (PSL) by using neural networks as predicates, so that neural and symbolic parameters can be learned together and inference runs over both at once.

Neuro-Symbolic Energy-Based Models (NeSy-EBMs)

NeSy-EBMs provide the mathematical foundation for NeuPSL. These models measure the compatibility between observed and target variables with an energy function parameterized by both neural and symbolic parameters. Inference means finding the lowest-energy assignment of the target variables, while learning means adjusting the parameters so that correct solutions receive low energy. The framework is general: it specifies how a range of existing NeSy approaches can be expressed as NeSy-EBMs.
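
The inference and learning definitions above can be made concrete with a toy example. The sketch below is illustrative only: it assumes a small discrete target space that can be enumerated, an energy built from a neural term (one minus the network's probability for each chosen value) plus weighted symbolic penalties, and a perceptron-style energy loss as one example of an energy-based learning objective. The names `energy`, `infer`, and `energy_loss` are hypothetical and are not taken from NeuPSL.

```python
from itertools import product

def energy(y, neural_scores, symbolic_potentials, w_sy):
    """Toy energy E(y; x, w_nn, w_sy): lower means y fits the input better.

    neural_scores[i][v] stands in for a network's probability that target i
    takes value v; symbolic_potentials map an assignment y to a penalty >= 0."""
    neural_term = sum(1.0 - neural_scores[i][v] for i, v in enumerate(y))
    symbolic_term = sum(w * phi(y) for w, phi in zip(w_sy, symbolic_potentials))
    return neural_term + symbolic_term

def infer(domains, neural_scores, symbolic_potentials, w_sy):
    """Inference: brute-force search for the lowest-energy assignment."""
    return min(product(*domains),
               key=lambda y: energy(y, neural_scores, symbolic_potentials, w_sy))

def energy_loss(y_true, domains, neural_scores, symbolic_potentials, w_sy):
    """Perceptron-style energy loss: E(y_true) - min_y E(y).
    It is zero when the labeled assignment already has the lowest energy."""
    y_hat = infer(domains, neural_scores, symbolic_potentials, w_sy)
    return (energy(y_true, neural_scores, symbolic_potentials, w_sy)
            - energy(y_hat, neural_scores, symbolic_potentials, w_sy))

# Two binary targets; the network alone prefers (1, 0).
domains = [(0, 1), (0, 1)]
neural_scores = [{0: 0.3, 1: 0.7}, {0: 0.6, 1: 0.4}]
# One symbolic potential that penalizes disagreement between the targets.
symbolic_potentials = [lambda y: float(y[0] != y[1])]

print(infer(domains, neural_scores, symbolic_potentials, [0.0]))  # (1, 0)
print(infer(domains, neural_scores, symbolic_potentials, [2.0]))  # (1, 1)
```

With the symbolic weight at zero, the neural term alone decides. With a weight of 2.0, the consistency penalty shifts the minimum-energy state to (1, 1), which shows how symbolic parameters reshape the energy landscape that inference searches.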

Implementation of NeuPSL

NeuPSL uses a hybrid architecture that combines neural networks with symbolic potentials. The symbolic potentials are instantiated from PSL rules relaxed with Łukasiewicz logic, and neural network outputs enter these rules as ground atoms. This lets NeuPSL use joint inference and learning to improve predictions, particularly in low-data scenarios.
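
To illustrate the Łukasiewicz relaxation, the sketch below follows the standard PSL construction: the body of a ground rule is combined with the Łukasiewicz t-norm, the rule's distance to satisfaction is max(0, body - head), and a weighted (optionally squared) hinge of that distance becomes the potential. The citation rule and the truth values are hypothetical examples, not rules from the paper; `Label(a, "ML")` plays the role of a neural output grounded as an atom.

```python
def luk_and(*truths):
    """Łukasiewicz t-norm: max(0, sum of truths - (n - 1))."""
    return max(0.0, sum(truths) - (len(truths) - 1))

def distance_to_satisfaction(body, head):
    """A rule body -> head is satisfied when luk_and(body) <= head;
    this returns how far it is from satisfaction."""
    return max(0.0, luk_and(*body) - head)

def hinge_potential(weight, body, head, squared=False):
    """Weighted (optionally squared) hinge-loss potential for one ground rule."""
    d = distance_to_satisfaction(body, head)
    return weight * (d ** 2 if squared else d)

# Hypothetical ground rule: Cites(a, b) & Label(a, "ML") -> Label(b, "ML"),
# where Label(a, "ML") = 0.9 would come from a neural classifier.
cites_ab, label_a, label_b = 1.0, 0.9, 0.2
print(hinge_potential(2.0, [cites_ab, label_a], label_b))                # about 1.4
print(hinge_potential(2.0, [cites_ab, label_a], label_b, squared=True))  # about 0.98
```

Because each potential is a convex hinge in the truth values, minimizing their weighted sum over the unobserved atoms is a convex problem, which is what makes this form of inference scale.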

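```python
def neupsl_inference(neural_model, psl_rules, input_data):
    # 1. Neural perception: predict soft truth values for the neural predicates.
    neural_outputs = neural_model.predict(input_data)
    # 2. Build the symbolic model (PSLModel is an illustrative interface, not the real PSL API).
    psl_model = PSLModel(rules=psl_rules)
    # 3. Ground the neural predictions as atoms in the PSL model.
    psl_model.set_neural_output(neural_outputs)
    # 4. Joint inference: find the minimum-energy assignment to the target atoms.
    inferred_values = psl_model.infer()
    return inferred_values
```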

Experimental Evaluation

NeuPSL is evaluated on several tasks, including MNIST addition and citation network node classification, where it outperforms existing NeSy methods and neural baselines. The results show that NeuPSL exploits structural relationships in the data, yielding notable accuracy gains and faster inference.

Figure 1: Overview of the NeuPSL inference and learning pipeline.

The evaluation results highlight NeuPSL's efficiency on joint inference tasks and its gains in low-data settings. On MNIST addition, NeuPSL achieves up to 93.9% accuracy, outperforming traditional neural models. On large-scale problems such as citation network node classification, NeuPSL also achieves a 40× speedup over other NeSy approaches.
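
A simplified sketch of why the symbolic addition rule helps in MNIST addition follows. It assumes a purely neural energy of 1 - p per digit and enforces the rule "sum = d1 + d2" as a hard constraint; the function `mnist_addition_infer` and the probabilities are hypothetical, and this is not the paper's exact NeuPSL model.

```python
def mnist_addition_infer(p1, p2, target_sum=None):
    """Return (d1, d2, d1 + d2) with the lowest energy (1 - p1[d1]) + (1 - p2[d2]).

    p1, p2: 10 digit probabilities per image, standing in for classifier outputs.
    If target_sum is given, the addition rule d1 + d2 == target_sum is enforced
    as a hard constraint, so only consistent digit pairs are considered."""
    best = None
    for d1 in range(10):
        for d2 in range(10):
            if target_sum is not None and d1 + d2 != target_sum:
                continue  # violates the addition constraint
            e = (1.0 - p1[d1]) + (1.0 - p2[d2])
            if best is None or e < best[0]:
                best = (e, d1, d2)
    if best is None:
        raise ValueError("no digit pair sums to target_sum")
    _, d1, d2 = best
    return d1, d2, d1 + d2

# The classifier favors 3 for the first image (8 is its runner-up) and 5 for the second.
p1 = [0.0125] * 10
p1[3], p1[8] = 0.6, 0.3
p2 = [0.1 / 9] * 10
p2[5] = 0.9

print(mnist_addition_infer(p1, p2))      # (3, 5, 8)
print(mnist_addition_infer(p1, p2, 13))  # (8, 5, 13)
```

Observing only the sum 13 overrides the classifier's first guess for the first image. In a learning setting, such a constraint-corrected assignment is the kind of signal that can supervise a digit classifier without per-digit labels, which is one reason structure helps when labeled data is scarce.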

Conclusion and Future Work

The introduction of NeuPSL marks a significant advance in integrating neural networks with symbolic reasoning for scalable joint inference and learning. Future work may explore alternative learning objectives, the balance between traditional and energy-based losses, and applications to new domains. Such work could further extend the reach of neuro-symbolic methods to complex reasoning tasks.