- The paper proposes a novel framework using sensemaking theory to contextualize AI explanations through social and cognitive lenses.

- It introduces Sensible AI with seamful design, adversarial auditing, and continuous monitoring to improve system reliability.

- The study demonstrates the framework’s application in healthcare, emphasizing enhanced trust and practical decision support in complex settings.

Interpretability and Explainability Through Sensemaking Theory

Introduction

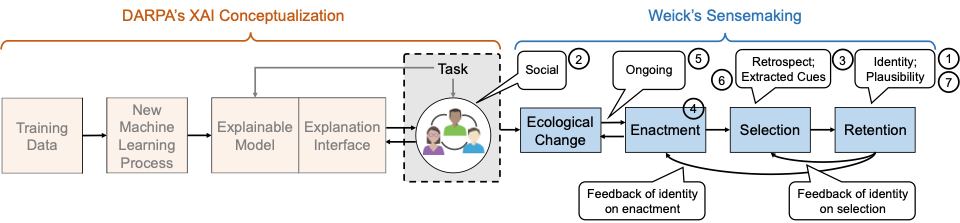

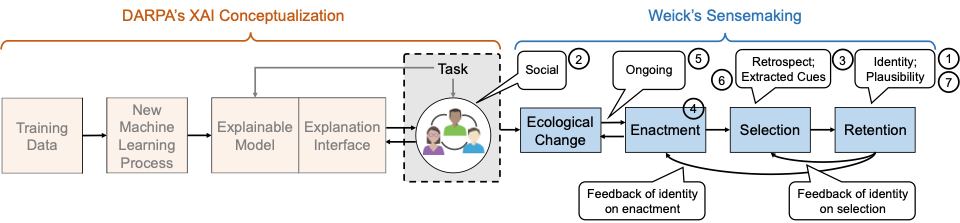

The paper proposes a novel framework for interpretability in machine learning systems, rooted in Karl Weick's sensemaking theory. Traditional approaches to interpretability emphasize producing clear, understandable explanations for model outputs. However, such explanations often lack context about who they are intended for and how those stakeholders think. By focusing on sensemaking, the paper advocates a shift towards explanations that account for the social and cognitive contexts of the people engaging with AI systems.

Framework for Sensemaking in AI

The authors identify several properties from Weick's sensemaking framework that are key in evaluating human understanding of machine learning models:

- Identity Construction: Whether an explanation aligns with or challenges a person's individual and collective identities strongly influences how readily they absorb it.

- Social Context: Explanations vary in their effectiveness depending on the socio-cultural environments of the users.

- Retrospective Sensemaking: Effective explanations must allow for reflective cognition, enabling users to situate new information within existing cognitive schemas.

These properties collectively suggest that interpretability should engage with the nuanced and dynamic processes by which people make sense of AI systems, rather than merely focusing on producing technically accurate or simplified explanations.

Figure 1: Left: DARPA's conceptualization of Explainable AI, adapted from [gunning2019darpa].

Application of Sensemaking Theory

To exemplify these points, consider the application of machine learning in a healthcare setting, specifically for diagnostic support. While traditional interpretability frameworks emphasize the clarity of explanations (e.g., a decision tree output), a sensemaking-informed approach might identify additional contextual factors such as the doctor's past diagnostic experiences or institutional policies that influence decision acceptance.

Users’ cognitive and emotional states, social dynamics among medical professionals, and broader organizational structures all play roles in how effectively a given explanation fosters understanding or aids in decision-making processes.

Designing Sensible AI

The paper introduces "Sensible AI", which draws extensively on principles observed in High-Reliability Organizations (HROs) such as healthcare systems and nuclear power plants. By bringing a similar mindset to AI systems, Sensible AI integrates human-centered insights to address the cognitive and contextual needs of its users:

- Seamful Design: Embracing complexity and user control to facilitate deeper understanding and skepticism about model predictions.

- Adversarial Design: Engaging diverse teams in the auditing and validation of AI systems’ outputs to ensure multi-faceted scrutiny and cognitive diversity.

- Continuous Monitoring: Regularly updating models with new data and feedback to maintain operational relevance and accuracy.
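
As one illustration of the continuous-monitoring principle above, the following is a minimal sketch, not the paper's implementation. It assumes that users (for example, clinicians) confirm or correct each prediction, and it flags the model for review when recent agreement drops. The class name `FeedbackMonitor`, its parameters, and the threshold values are all hypothetical.

```python
from collections import deque


class FeedbackMonitor:
    """Tracks how often recent predictions matched user-confirmed outcomes.

    Hypothetical helper for illustration; names and defaults are assumptions.
    """

    def __init__(self, window_size=50, alert_threshold=0.8):
        # Keep only the most recent `window_size` cases; older ones drop off.
        self._matches = deque(maxlen=window_size)
        # Minimum acceptable share of predictions confirmed by users.
        self.alert_threshold = alert_threshold

    def record(self, predicted, confirmed):
        """Store whether a prediction agreed with the user's confirmed outcome."""
        self._matches.append(predicted == confirmed)

    def agreement_rate(self):
        """Return the share of recent predictions users agreed with, or None if empty."""
        if not self._matches:
            return None
        return sum(self._matches) / len(self._matches)

    def needs_review(self):
        """Return True when recent agreement has fallen below the threshold."""
        rate = self.agreement_rate()
        return rate is not None and rate < self.alert_threshold


# Usage: two of the four recent predictions were confirmed by the user.
monitor = FeedbackMonitor(window_size=4, alert_threshold=0.75)
cases = [("flu", "flu"), ("flu", "cold"), ("cold", "cold"), ("flu", "cold")]
for predicted, confirmed in cases:
    monitor.record(predicted, confirmed)

print(monitor.agreement_rate())  # 0.5
print(monitor.needs_review())    # True
```

A sliding window is used here so that the signal reflects current conditions rather than the model's lifetime average. What happens after a review is triggered (retraining, auditing by a diverse team, or surfacing the drop to users) is left open, since the source does not specify it.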

Implications and Challenges

The paper posits that adopting a sensemaking orientation in AI design can not only support comprehensibility and usability but also align with broader social and ethical imperatives. However, implementing Sensible AI raises challenges, such as balancing users' cognitive load against the richness of the information provided and ensuring that design complexity does not hinder practical deployment or user engagement.

Conclusion

Integrating sensemaking into AI interpretability suggests a paradigm in which the focus shifts from purely technical solutions to ones that consider human cognitive and social contexts. Though challenging, this approach has the potential to fundamentally improve how stakeholders interact with and trust machine learning systems. By applying organizational and cognitive theories to AI design, the paper argues for a future where machine learning systems are not just understandable, but sensible in their real-world applications.