- The paper introduces a joint LSTM+CNN model that achieves an R² greater than 0.9 in predicting shear stress and failure times in lab experiments.

- The study employs autoregressive models (LSTM, TCN, and Transformer networks) to forecast periodic fault stress variations.

- The work highlights the potential and challenges of applying lab-based deep learning methods to real-world earthquake prediction through enhanced seismic data analysis.

Deep Learning for Laboratory Earthquake Prediction and Autoregressive Forecasting of Fault Zone Stress

Introduction

The paper "Deep Learning for Laboratory Earthquake Prediction and Autoregressive Forecasting of Fault Zone Stress" investigates whether deep learning (DL) architectures can improve the prediction and forecasting of laboratory earthquakes. Controlled laboratory experiments allow precise recording and analysis of seismic signals and serve as an analog for real-world seismic activity. The study extends previous machine learning (ML) approaches with DL models tailored to two tasks: earthquake prediction and fault zone stress forecasting.

Experiments and Data Collection

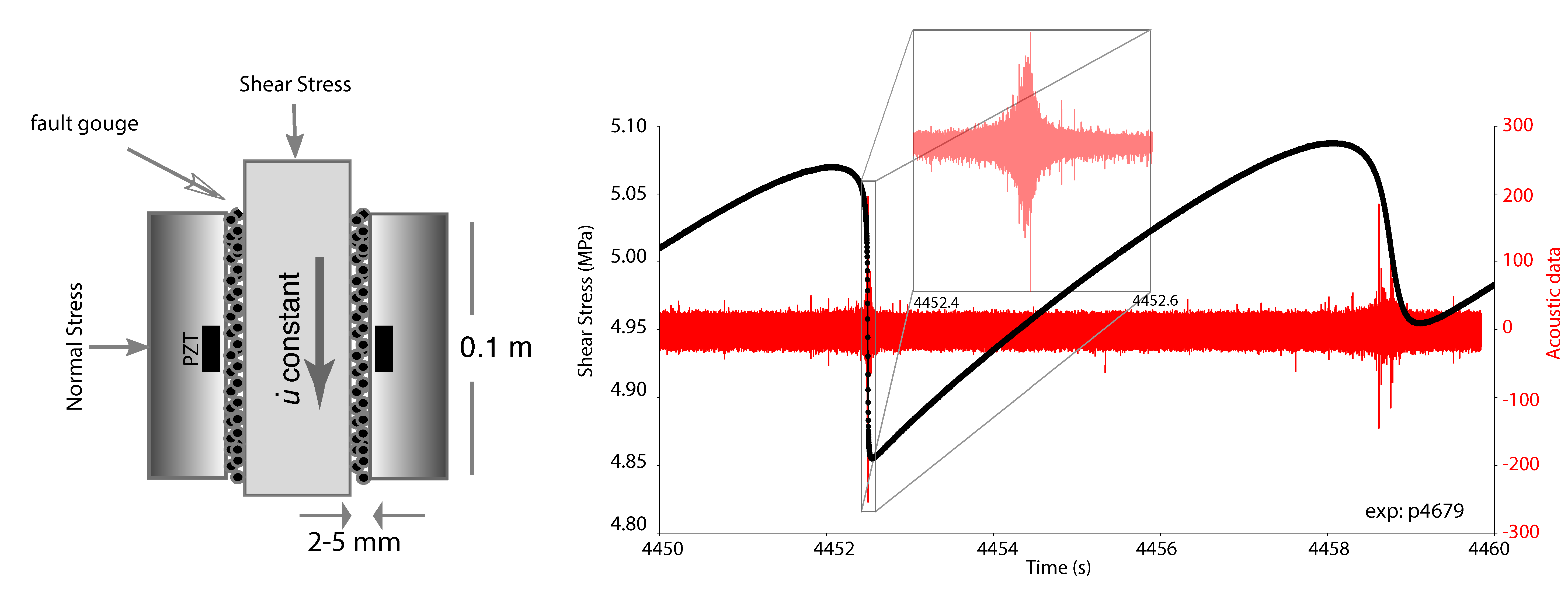

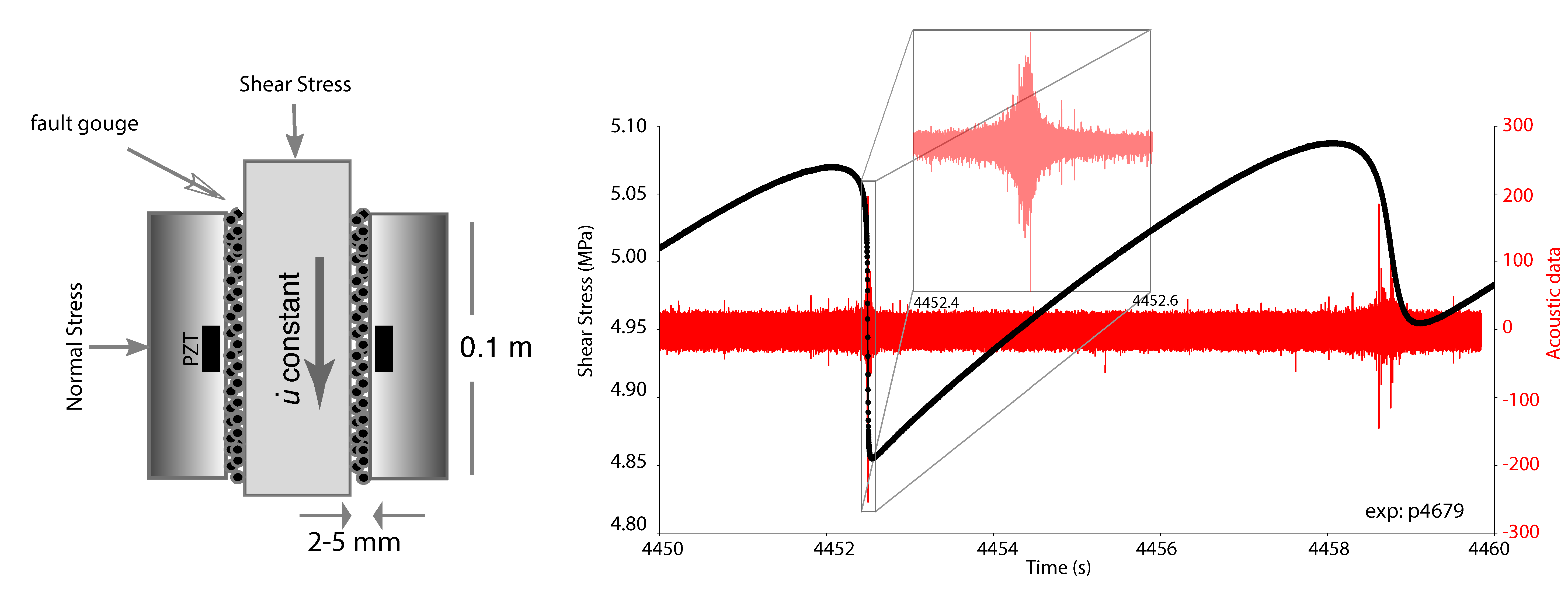

The research uses the double direct shear (DDS) configuration in a lab setting to simulate faults subjected to various stress conditions. The fault zones, composed of granular materials such as glass beads and quartz powder, are sheared, and the resulting acoustic emissions (AE) are recorded with piezoelectric transducers (Figure 1).

Figure 1: Schematic of the DDS experimental setup with recorded shear stress and AE data.

Methodology

Deep Learning Models

The paper introduces several DL architectures for predicting and forecasting seismic activity:

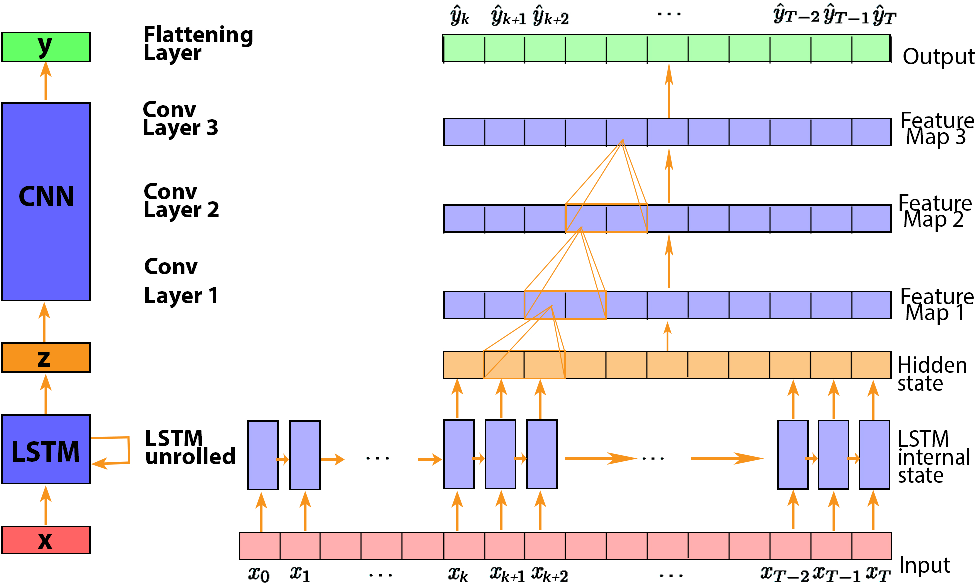

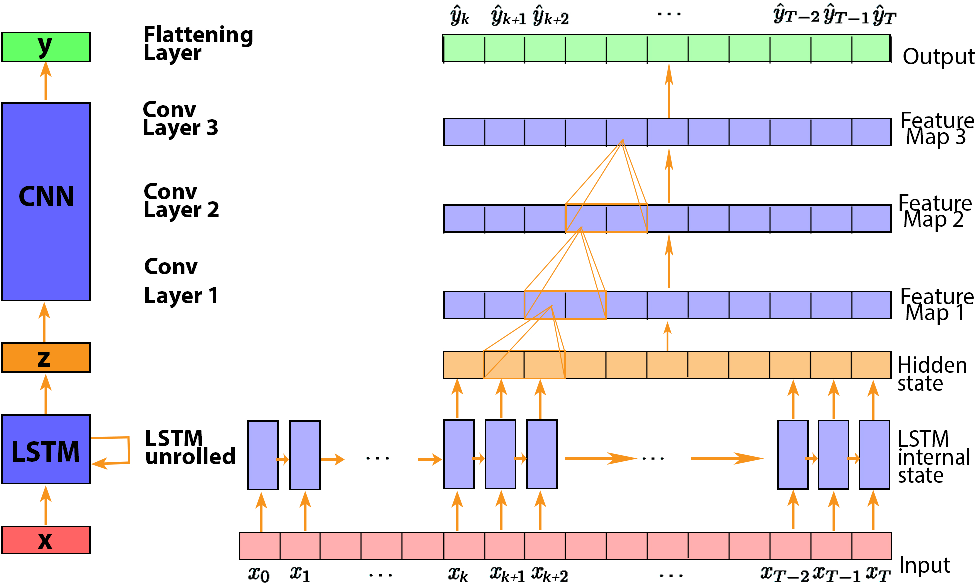

- Prediction Models: Use Long Short-Term Memory (LSTM) networks combined with Convolutional Neural Networks (CNNs) to predict the current shear stress and the times to the start and end of failure (TTsF and TTeF) from AE data (Figure 2).

Figure 2: Schematic LSTM and CNN architecture for seismic prediction.
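A time-to-failure target of the kind named above can be illustrated with a minimal sketch. It assumes the failure times have already been picked from the shear stress record; the function name `time_to_failure` and the example values are hypothetical and are not taken from the paper. Passing start-of-failure times would give a TTsF-style label, and passing end-of-failure times a TTeF-style label.

```python
import bisect

def time_to_failure(sample_times, failure_times):
    """Return, for each sample time, the time remaining until the next failure.

    Samples that fall after the last known failure get None, because no
    label can be defined for them.
    """
    failure_times = sorted(failure_times)
    labels = []
    for t in sample_times:
        # Index of the first failure occurring at or after time t.
        idx = bisect.bisect_left(failure_times, t)
        if idx < len(failure_times):
            labels.append(failure_times[idx] - t)
        else:
            labels.append(None)
    return labels

# Hypothetical sample and failure times in seconds, for illustration only.
sample_times = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
failure_times = [2.5, 5.0]
labels = time_to_failure(sample_times, failure_times)
print(labels)
```

The resulting label is a sawtooth: it counts down to zero at each failure and resets for the next cycle.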

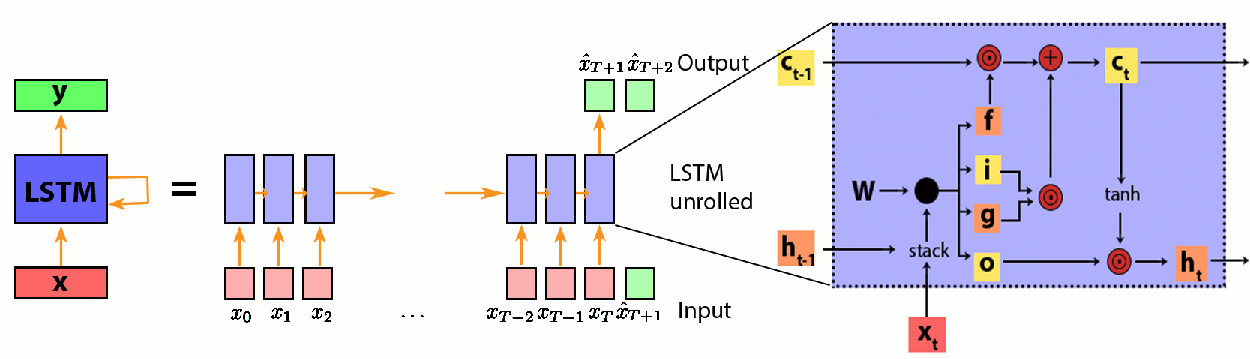

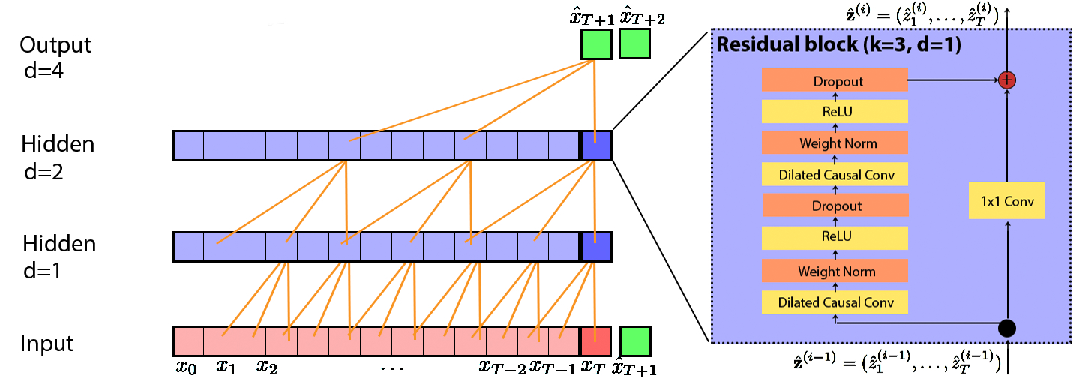

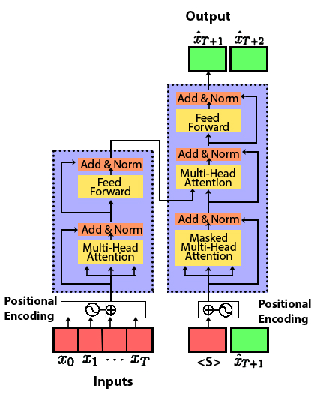

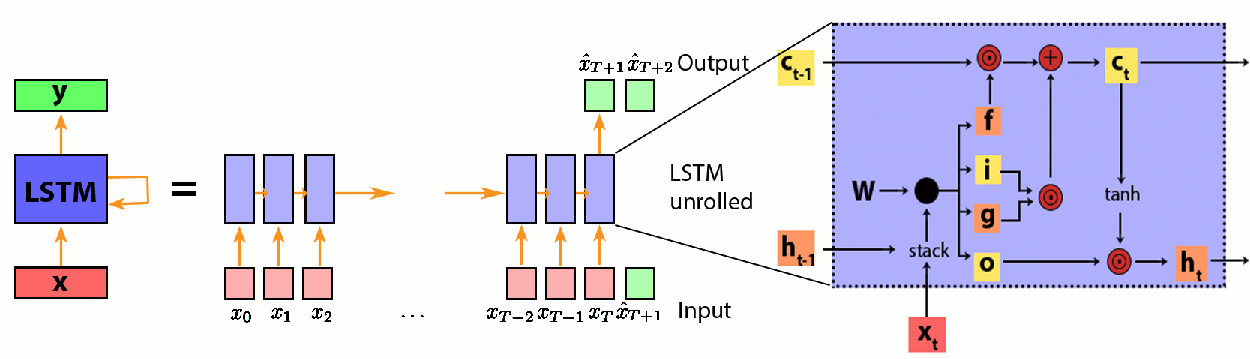

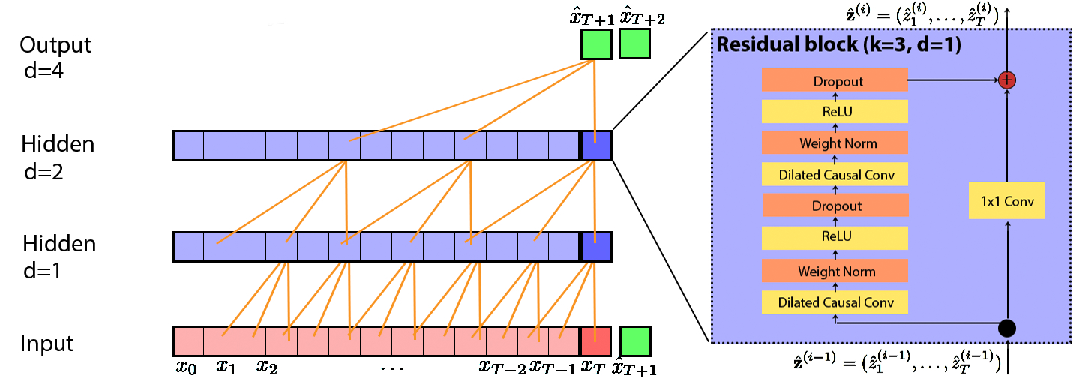

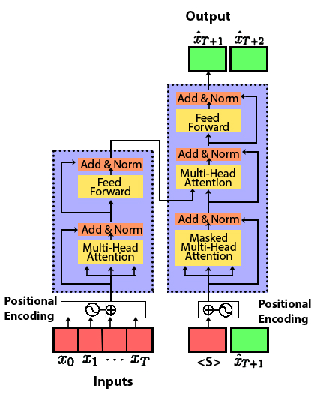

- Autoregressive (AR) Models for Forecasting: Deploy LSTM, Temporal Convolutional Networks (TCN), and Transformer Networks (TF) to forecast future stress states in a sequence modeling framework (Figure 3).

Figure 3: TF architecture utilized in autoregressive stress forecasting.
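The sequence modeling setup can be sketched in two parts: slicing a stress record into (past, future) training pairs, and rolling a one-step predictor forward by feeding its outputs back as inputs. The sketch below is an illustration under stated assumptions, not the paper's implementation. The names `make_windows`, `rollout`, and `sine_recurrence` are hypothetical, and the predictor is a stand-in for a trained LSTM, TCN, or Transformer: it uses the exact recurrence of a pure sinusoid so that the example runs without any training.

```python
import math

def make_windows(series, input_len, horizon):
    """Split a series into (past window, future targets) training pairs."""
    pairs = []
    for start in range(len(series) - input_len - horizon + 1):
        past = series[start:start + input_len]
        future = series[start + input_len:start + input_len + horizon]
        pairs.append((past, future))
    return pairs

def rollout(predict_next, history, steps, input_len):
    """Forecast `steps` values, feeding each prediction back as input."""
    buffer = list(history)
    forecast = []
    for _ in range(steps):
        next_value = predict_next(buffer[-input_len:])
        forecast.append(next_value)
        buffer.append(next_value)  # the model now conditions on its own output
    return forecast

# Stand-in predictor (assumption): a sinusoid sampled with angular step OMEGA
# satisfies x[t+1] = 2*cos(OMEGA)*x[t] - x[t-1]. A trained network would
# replace this function.
OMEGA = 2 * math.pi / 50

def sine_recurrence(window):
    return 2 * math.cos(OMEGA) * window[-1] - window[-2]

# Hypothetical periodic "stress" signal, for illustration only.
stress = [math.sin(OMEGA * t) for t in range(200)]
pairs = make_windows(stress[:100], input_len=20, horizon=5)
forecast = rollout(sine_recurrence, stress[:100], steps=100, input_len=20)
max_error = max(abs(f - s) for f, s in zip(forecast, stress[100:]))
print(len(pairs), max_error)
```

Because every forecast step depends on earlier forecasts, errors from an imperfect model compound as the horizon grows, which is why long-horizon performance is the harder test for autoregressive models.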

Model Training and Evaluation

The models were trained on AE data and tested on separate data subsets to ensure robust evaluation. The study tunes hyperparameters, such as the memory length of the LSTMs, to improve performance. A root mean squared error (RMSE) loss function is used for model optimization, and performance is reported with RMSE and the coefficient of determination (R²).
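The two metrics follow their standard definitions, RMSE as the square root of the mean squared residual and R² as one minus the ratio of residual to total sum of squares. A minimal sketch is given below; the function names and the example values are hypothetical and only illustrate the arithmetic.

```python
import math

def rmse(y_true, y_pred):
    """Root mean squared error between two equal-length sequences."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))

def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("R^2 is undefined for a constant target")
    return 1.0 - ss_res / ss_tot

# Hypothetical shear stress values (arbitrary units), for illustration only.
measured = [2.0, 2.5, 3.0, 3.5, 2.2]
predicted = [2.1, 2.4, 3.1, 3.3, 2.4]
print(rmse(measured, predicted), r2_score(measured, predicted))
```

An R² of 1 means a perfect fit, while a model that always predicts the mean of the target scores 0, so a reported R² above 0.9 indicates that most of the variance in the target is explained.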

Results

Prediction Models

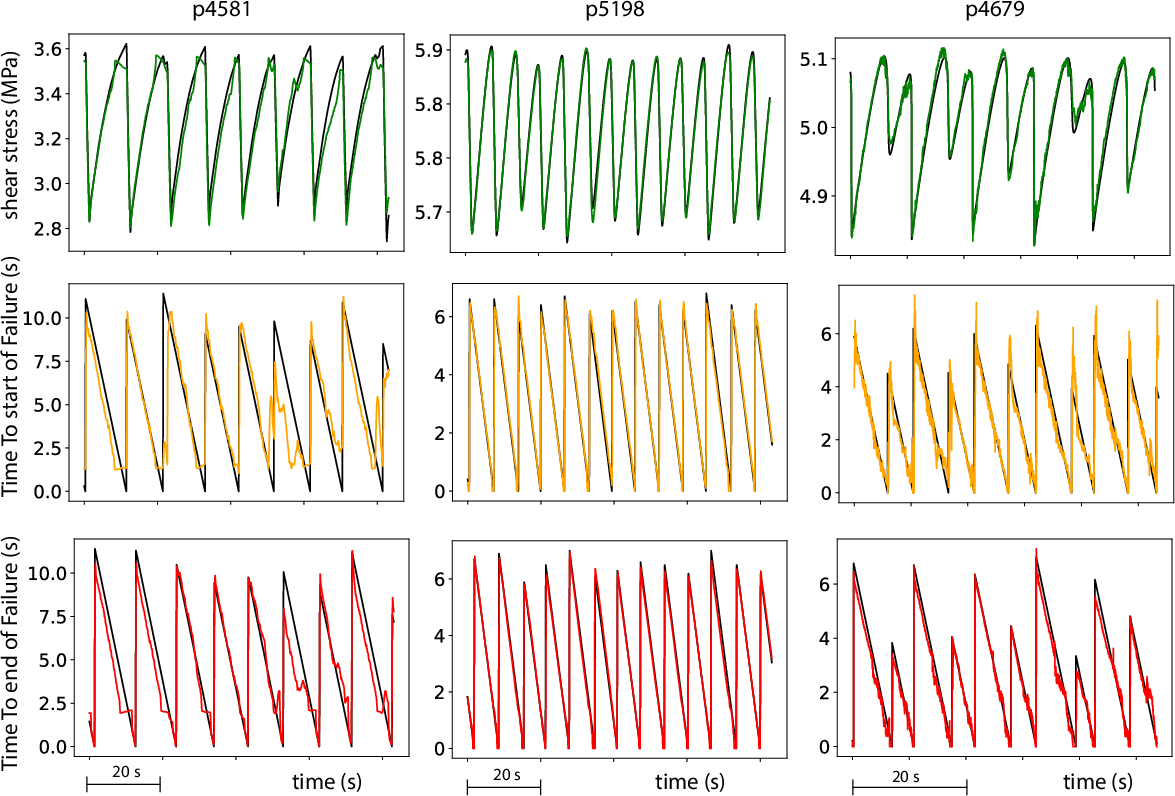

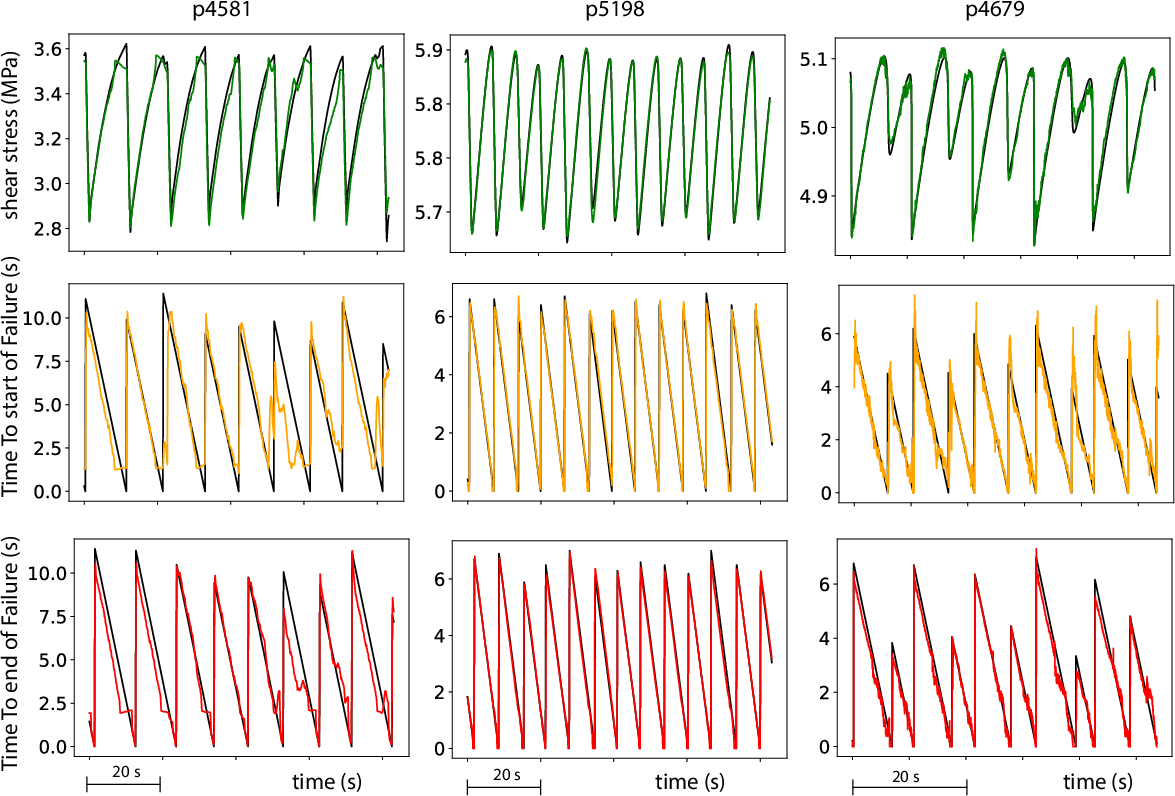

The joint LSTM+CNN model accurately predicted shear stress and failure times, yielding an R² greater than 0.9 across multiple experiments. Predictions were particularly effective when preseismic creep data were incorporated, which allowed the model to anticipate changes in stress state more accurately (Figure 4).

Figure 4: Performance of LSTM+CNN model in predicting shear stress and failure times.

Autoregressive Forecasting Models

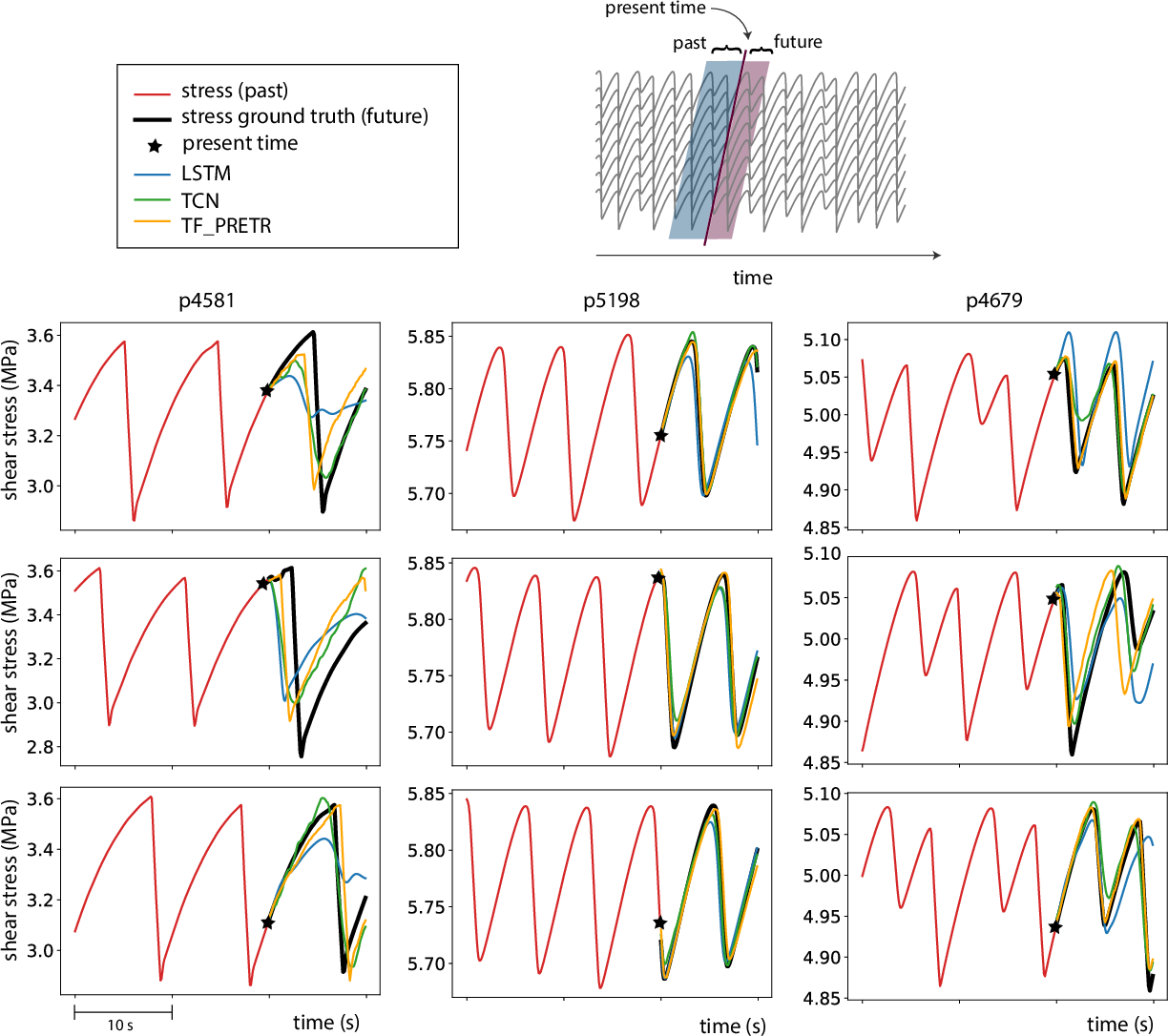

The autoregressive models provided insights into the temporal dynamics of fault stress:

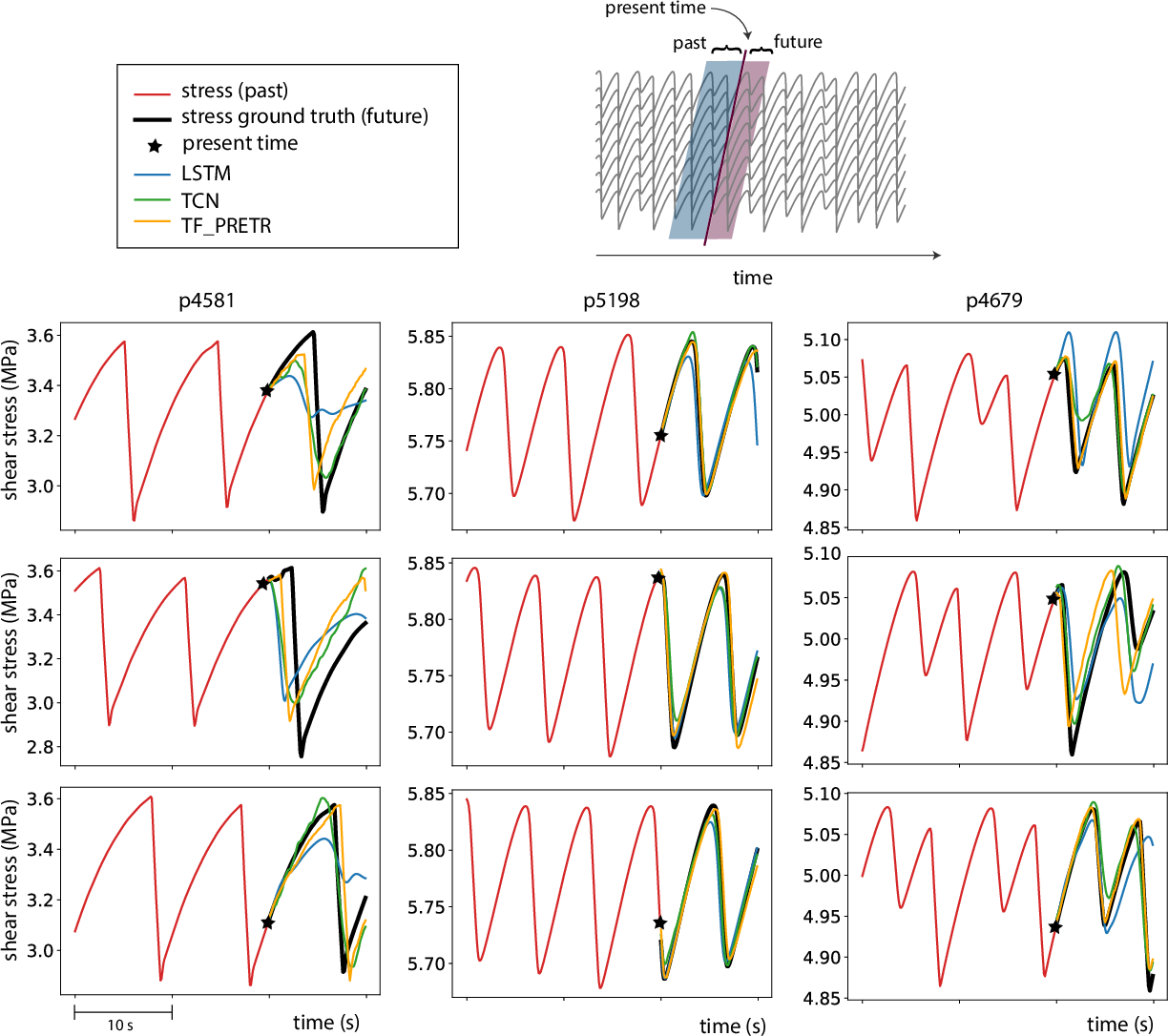

- LSTM Performance: Encountered challenges with longer sequence lengths due to inherent memory limitations, leading to less accurate forecasts.

- TCN Performance: Despite its simplicity, the TCN generalized better with fewer parameters and maintained stable predictions by capturing periodic stress variations effectively.

- TF Performance: Although requiring substantial data for training, the Transformer Network captured complex dependencies within seismic data, especially with pre-training on synthetic sine waves to mimic oscillatory behavior (Figure 5).

Figure 5: TF model's ability to forecast complex seismic cycles with pre-training.
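The idea of pre-training on synthetic sine waves can be illustrated by generating such a dataset. The sketch below is a minimal example under assumptions: the function name `synthetic_sine_batch`, the amplitude, period, and phase ranges, and the series length are all hypothetical choices, not values reported in the paper.

```python
import math
import random

def synthetic_sine_batch(n_series, length, seed=0):
    """Generate sine waves with random amplitude, period, and phase.

    A fixed seed makes the batch reproducible. All parameter ranges are
    illustrative assumptions.
    """
    rng = random.Random(seed)
    batch = []
    for _ in range(n_series):
        amplitude = rng.uniform(0.5, 1.5)
        period = rng.uniform(20.0, 80.0)  # in samples
        phase = rng.uniform(0.0, 2 * math.pi)
        wave = [
            amplitude * math.sin(2 * math.pi * t / period + phase)
            for t in range(length)
        ]
        batch.append(wave)
    return batch

pretrain_set = synthetic_sine_batch(n_series=4, length=200)
print(len(pretrain_set), len(pretrain_set[0]))
```

A model pre-trained on such series would then be fine-tuned on the measured stress data, so that it starts from a prior for oscillatory behavior rather than from random weights.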

Discussion

The study demonstrates that DL models can effectively predict lab-scale seismic events, providing foundational insights for extending these methods to natural earthquake prediction. The application of AR models highlights their potential in forecasting fault dynamics beyond isolated events, offering avenues for real-time stress monitoring.

However, whether these methods transfer to tectonic faults depends on data availability and on how faithfully lab experiments reproduce natural conditions. Future research should focus on integrating real-world seismograms and exploring transfer learning strategies to bridge the gap between laboratory and natural environments.

Conclusion

This paper uses deep learning both to predict and to autoregressively forecast seismic activity in laboratory settings. By improving on existing ML frameworks with DL architectures, the work lays a foundation for further exploration of automated earthquake forecasting systems. It may also provide insight into the deterministic nature of seismic cycles and advance real-time hazard prediction methods.

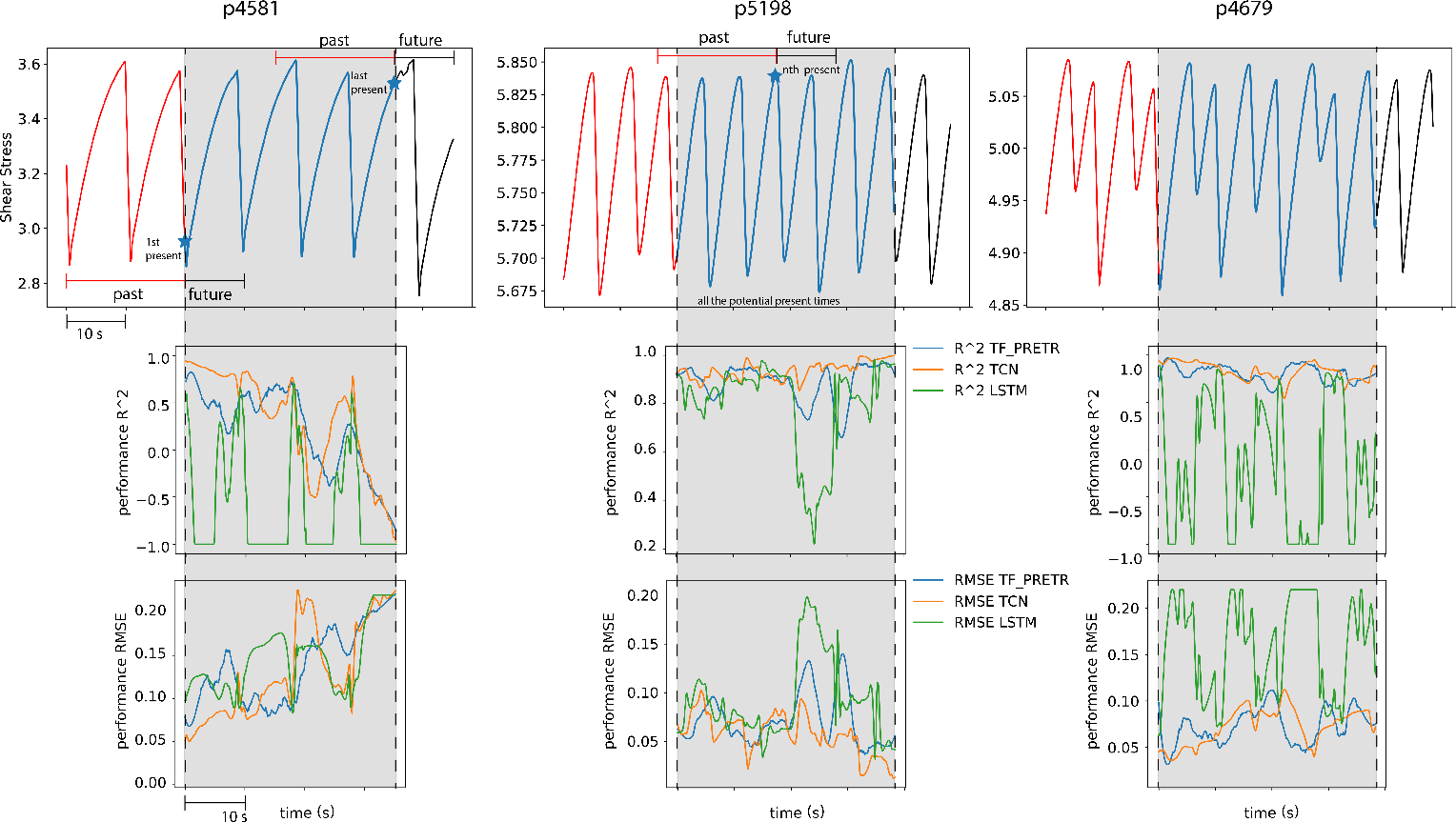

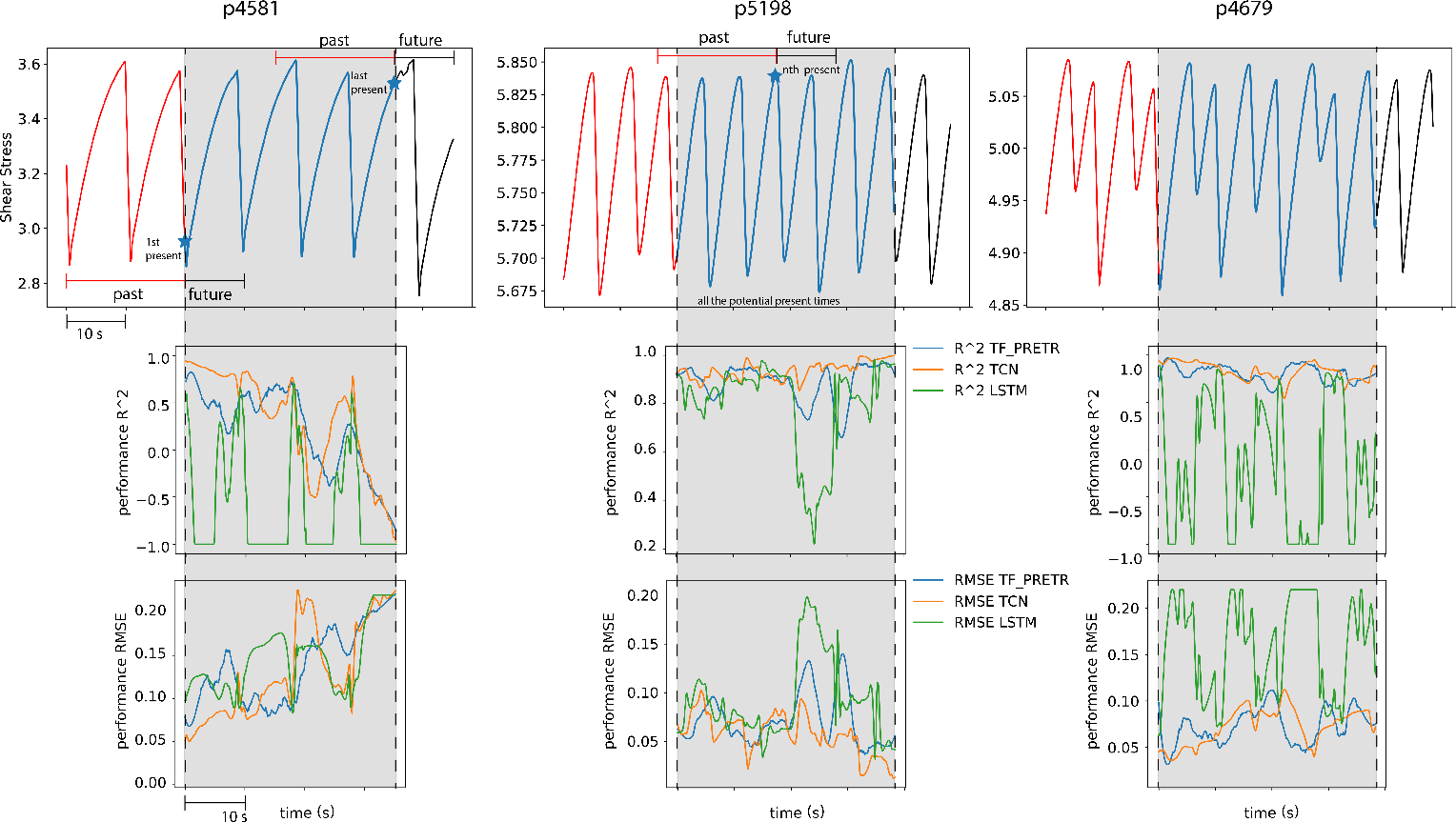

Figure 6: Evolution of AR model performance with extended forecast horizons.