- The paper shows that only 27% of self-reported Node.js programmers met the technical criteria, highlighting limitations of self-reported data.

- The study employs a two-phase recruitment process with a pre-screening survey and video calls to assess practical programming skills.

- The report recommends enhanced validation measures and transparent communication to improve recruitment accuracy in software engineering studies.

Software Engineering User Study Recruitment on Prolific: An Experience Report

Introduction

The paper "Software Engineering User Study Recruitment on Prolific: An Experience Report" addresses the challenge of recruiting participants with specific programming skills through Prolific, an online platform for recruiting research participants. Prolific offers access to a large participant pool, but self-reported skills can be inaccurate. This becomes a problem when a study, particularly in software engineering, needs technically skilled participants. The report shares insights and methods drawn from recruiting Node.js programmers and stresses the need for validation mechanisms that go beyond self-reporting.

Methodology

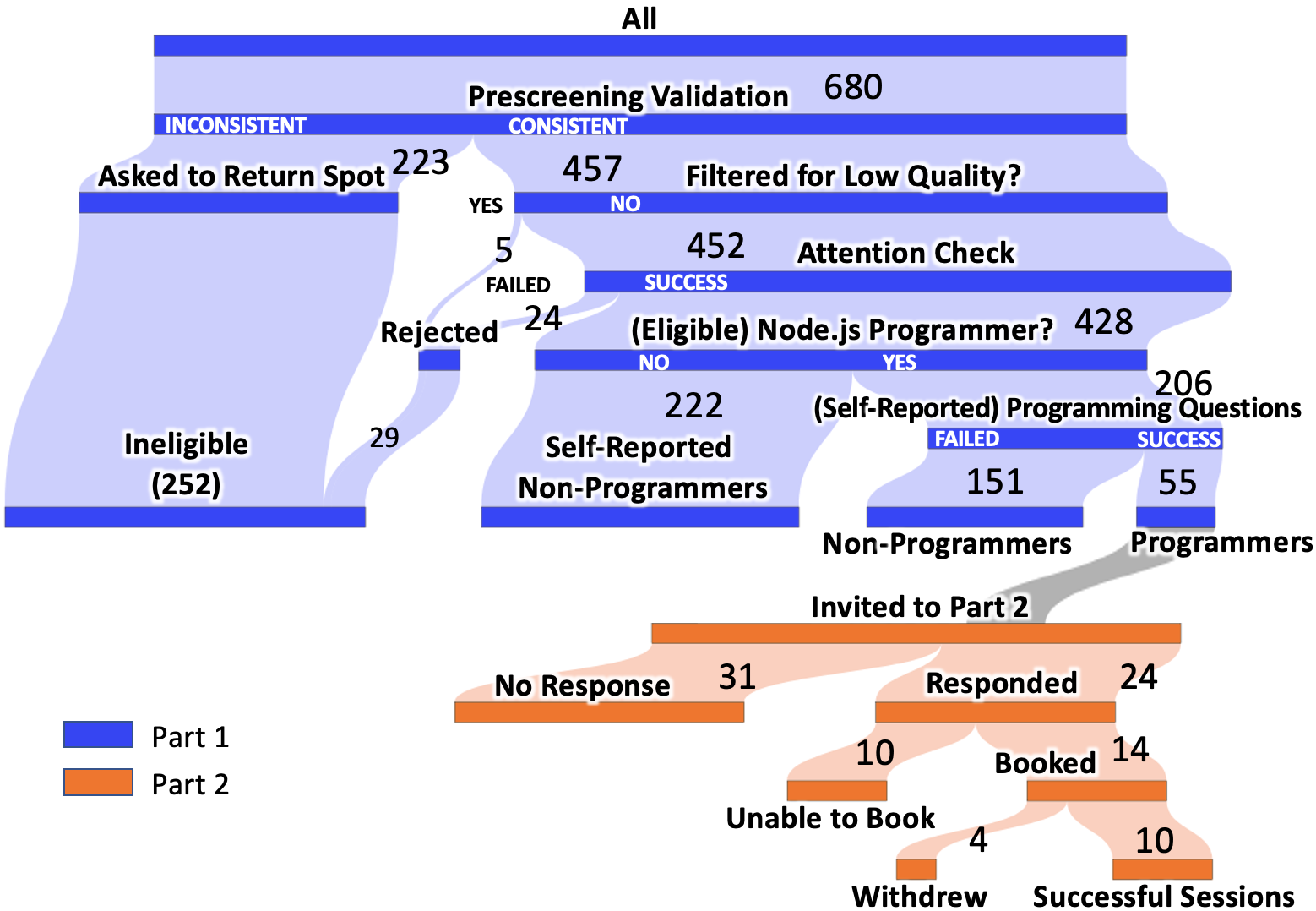

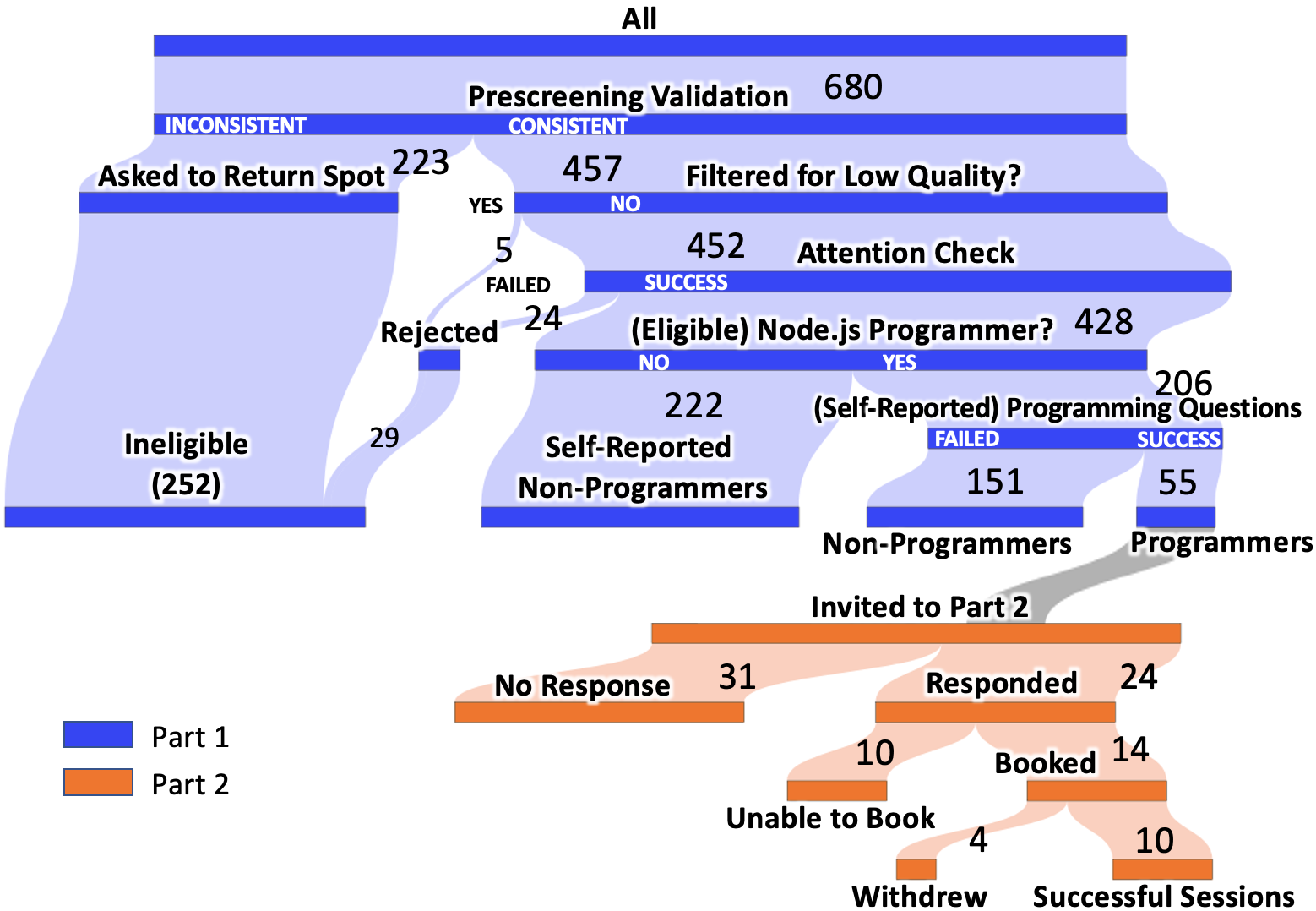

The authors ran a two-part study: a pre-screening survey followed by a video-call study. The pre-screening survey drew 680 responses. Of these, 55 came from candidates who met the eligibility criteria, and 10 of those candidates participated in the complete study.
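The Python sketch below computes the yield at each stage of the funnel from the three counts reported above. The stage labels and the `stage_yields` helper are illustrative names of our own, not part of the study.

```python
# Counts reported for each stage of the recruitment funnel.
FUNNEL = [
    ("pre-screening responses", 680),
    ("eligible candidates", 55),
    ("completed full study", 10),
]


def stage_yields(funnel):
    """Return (stage, count, share of previous stage, share of first stage)."""
    first = funnel[0][1]
    prev = first
    rows = []
    for name, count in funnel:
        rows.append((name, count, count / prev, count / first))
        prev = count
    return rows


for name, count, step, overall in stage_yields(FUNNEL):
    print(f"{name:<25} {count:>4}  step={step:6.1%}  overall={overall:6.1%}")
```

Roughly 8% of responses survived screening, and about 1.5% of the initial pool completed the study.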

The pre-screening survey had three sections:

- checks that validated participants' Prolific prescreener responses;
- demographic questions;
- technical questions gauging Node.js proficiency.

Participants were filtered on their answers to programming questions adapted from existing literature. These questions tested whether participants could perform basic programming tasks and demonstrate competence in executing Node.js code.

Figure 1: Diagram of participant recruitment process.
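The filtering described above can be sketched as two checks applied in sequence: a consistency check against the prescreener, then a programming-question score. This Python sketch is illustrative only. It assumes the survey re-asks the prescreener question, and the question IDs, answer key, and pass threshold are hypothetical placeholders rather than the study's actual instrument.

```python
from dataclasses import dataclass, field

# Hypothetical answer key and threshold: placeholders, not the study's questions.
ANSWER_KEY = {"q1": "b", "q2": "d", "q3": "a"}
MIN_CORRECT = 3


@dataclass
class Response:
    participant_id: str
    prescreener_programmer: bool  # answer recorded by the platform's prescreener
    survey_programmer: bool       # assumed: same question re-asked in the survey
    answers: dict = field(default_factory=dict)


def is_consistent(r: Response) -> bool:
    """Validation check: the survey answer must match the prescreener answer."""
    return r.prescreener_programmer == r.survey_programmer


def n_correct(r: Response) -> int:
    """Count programming questions answered correctly."""
    return sum(r.answers.get(q) == key for q, key in ANSWER_KEY.items())


def is_eligible(r: Response) -> bool:
    """Eligible only if consistent, self-identified, and passing the questions."""
    return is_consistent(r) and r.survey_programmer and n_correct(r) >= MIN_CORRECT


responses = [
    Response("p1", True, True, {"q1": "b", "q2": "d", "q3": "a"}),   # passes
    Response("p2", True, False, {"q1": "b", "q2": "d", "q3": "a"}),  # inconsistent
    Response("p3", True, True, {"q1": "a", "q2": "d", "q3": "a"}),   # 2 of 3 correct
]
eligible = [r.participant_id for r in responses if is_eligible(r)]
print(eligible)  # ['p1']
```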

Results

The findings underscore how inadequate prescreening is when it relies solely on self-reported abilities. Notably, 33% of participants gave inconsistent responses. Only 27% of self-reported Node.js programmers passed the programming questions: of 206 supposed Node.js programmers, just 55 were deemed eligible. This gap between self-reported and actual programming skill shows the need for robust measures to reduce inaccuracies in future participant recruitment.
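The 27% figure follows directly from the two counts above. This plain-arithmetic Python check assumes nothing beyond the reported numbers. It also shows how many self-reported programmers would have been admitted on self-report alone despite not being deemed eligible.

```python
SELF_REPORTED = 206  # participants who self-reported as Node.js programmers
ELIGIBLE = 55        # of those, participants deemed eligible after screening

pass_rate = ELIGIBLE / SELF_REPORTED
not_eligible = SELF_REPORTED - ELIGIBLE

print(f"pass rate: {pass_rate:.1%}")    # pass rate: 26.7%
print(f"not eligible: {not_eligible}")  # not eligible: 151
```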

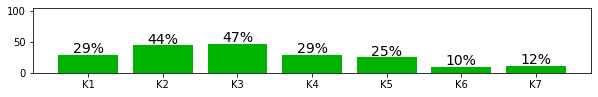

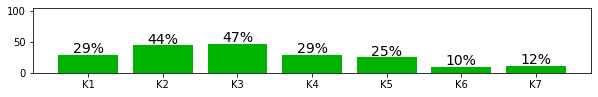

Figure 2: Percentage of correct answers (non-programmers).

The non-programmer group's results show that even non-programmers occasionally answered programming questions correctly. This suggests that the questions differed in how well they separated programmers from non-programmers. Questions therefore need careful design if they are to identify genuine programmers accurately.
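One way to act on this finding is to compare, question by question, how often self-reported programmers and non-programmers answer correctly, and then flag questions that non-programmers get right too often. The Python sketch below does this with a simple difference in proportions. The counts, question IDs, and the 20% cut-off are hypothetical placeholders, not the study's data.

```python
# Hypothetical per-question counts as (correct, total) for each group.
# Illustrative numbers only; they are not data from the study.
RESULTS = {
    "q1": {"self_reported_programmers": (50, 55), "non_programmers": (40, 100)},
    "q2": {"self_reported_programmers": (48, 55), "non_programmers": (8, 100)},
    "q3": {"self_reported_programmers": (45, 55), "non_programmers": (5, 100)},
}
MAX_NON_PROGRAMMER_RATE = 0.20  # hypothetical cut-off for a "guessable" question


def proportion(counts):
    """Share of correct answers from a (correct, total) pair."""
    correct, total = counts
    return correct / total


def review_questions(results, max_np_rate=MAX_NON_PROGRAMMER_RATE):
    """Return, per question, the accuracy gap between groups and a guessability flag."""
    report = {}
    for question, groups in results.items():
        prog = proportion(groups["self_reported_programmers"])
        non_prog = proportion(groups["non_programmers"])
        report[question] = {
            "gap": prog - non_prog,  # larger gap = better separation of the groups
            "guessable": non_prog > max_np_rate,
        }
    return report


for question, row in review_questions(RESULTS).items():
    status = "FLAG" if row["guessable"] else "ok"
    print(f"{question}: gap={row['gap']:.2f} {status}")
```

With these placeholder counts, only `q1` is flagged: 40% of non-programmers answer it correctly. A question like that adds little screening value.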

Recommendations

Key recommendations provided by the authors include:

- Utilizing enhanced validation techniques beyond self-reporting, such as practical skill assessment questions, when recruiting participants with specific programming skills.

- Implementing prescreener validation checks, since a significant proportion of participants gave inconsistent responses.

- Running pilot studies to gauge how many non-programmers enter the participant pool, and adjusting recruitment procedures accordingly.

- Transparently communicating study requirements, including specific skill expectations, to potential participants to minimize study withdrawal rates.

These strategies aim to improve precision in recruitment processes and ensure the reliability of participant data, thus conserving valuable researcher resources.
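A pilot's measured rates can feed directly into planning how many pre-screening responses to collect. The sketch below shows one hypothetical way to do this: divide the target sample size by the expected end-to-end yield. The `responses_needed` helper and the target of 20 participants are illustrative assumptions. The example rates reuse the counts reported above only as stand-ins for pilot estimates. `Fraction` keeps the arithmetic exact so that rounding up is not thrown off by floating-point error.

```python
import math
from fractions import Fraction


def responses_needed(target, eligible_rate, completion_rate):
    """Pre-screening responses needed to expect `target` completed participants.

    eligible_rate: share of pre-screening responses that pass screening.
    completion_rate: share of eligible candidates who complete the main study.
    """
    for rate in (eligible_rate, completion_rate):
        if not 0 < rate <= 1:
            raise ValueError("rates must lie in (0, 1]")
    yield_rate = Fraction(eligible_rate) * Fraction(completion_rate)
    return math.ceil(target / yield_rate)


# Stand-in "pilot" estimates taken from the reported counts; a real pilot
# would supply its own numbers.
eligible_rate = Fraction(55, 680)
completion_rate = Fraction(10, 55)
print(responses_needed(20, eligible_rate, completion_rate))  # 1360
```

Multiplying the two rates assumes that the stages are independent and that pilot rates carry over to the full recruitment. A pilot can only approximate both.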

Conclusion

The reported experience shows that recruiting programmers for software engineering studies through platforms like Prolific poses substantial challenges. The main causes are reliance on self-reported data and the limitations of prescreening. The authors advocate making detailed programming assessments and prescreener validation integral parts of recruitment for technical studies. This approach improves the quality of recruited participants. It also makes research more efficient by admitting fewer unqualified individuals. Future work should focus on more sophisticated verification systems, possibly built into recruitment platforms themselves, to streamline participant selection and make studies more effective.