- The paper shows that AI explanations significantly improve UX evaluators' accuracy in identifying usability issues.

- It employs a 2x2 mixed-methods design with a Wizard-of-Oz AI Assistant to assess the effects of explanation and synchronization.

- Findings reveal that synchronous interfaces and tailored explanations boost evaluators' engagement and satisfaction.

Human-AI Collaboration for UX Evaluation: Effects of Explanation and Synchronization

This essay discusses the study titled "Human-AI Collaboration for UX Evaluation: Effects of Explanation and Synchronization" (arXiv:2112.12387). The research examines the effects of two design factors, explanations and synchronization, within AI-assisted user experience (UX) evaluation. The authors develop an AI tool called AI Assistant and investigate how these factors influence the performance and perceptions of UX evaluators tasked with analyzing usability test videos.

Introduction

Usability testing is critical in UX development, enabling evaluators to identify user experience issues during the interface and system development lifecycle [mcdonald2012exploring]. While AI has shown promise in assisting such tasks, the optimal design for effective collaboration between UX evaluators and AI remains under-explored. The paper examines two specific design factors from the literature on human-human and human-AI collaboration: explanations provided by AI and the temporal synchronization of interactions between users and AI.

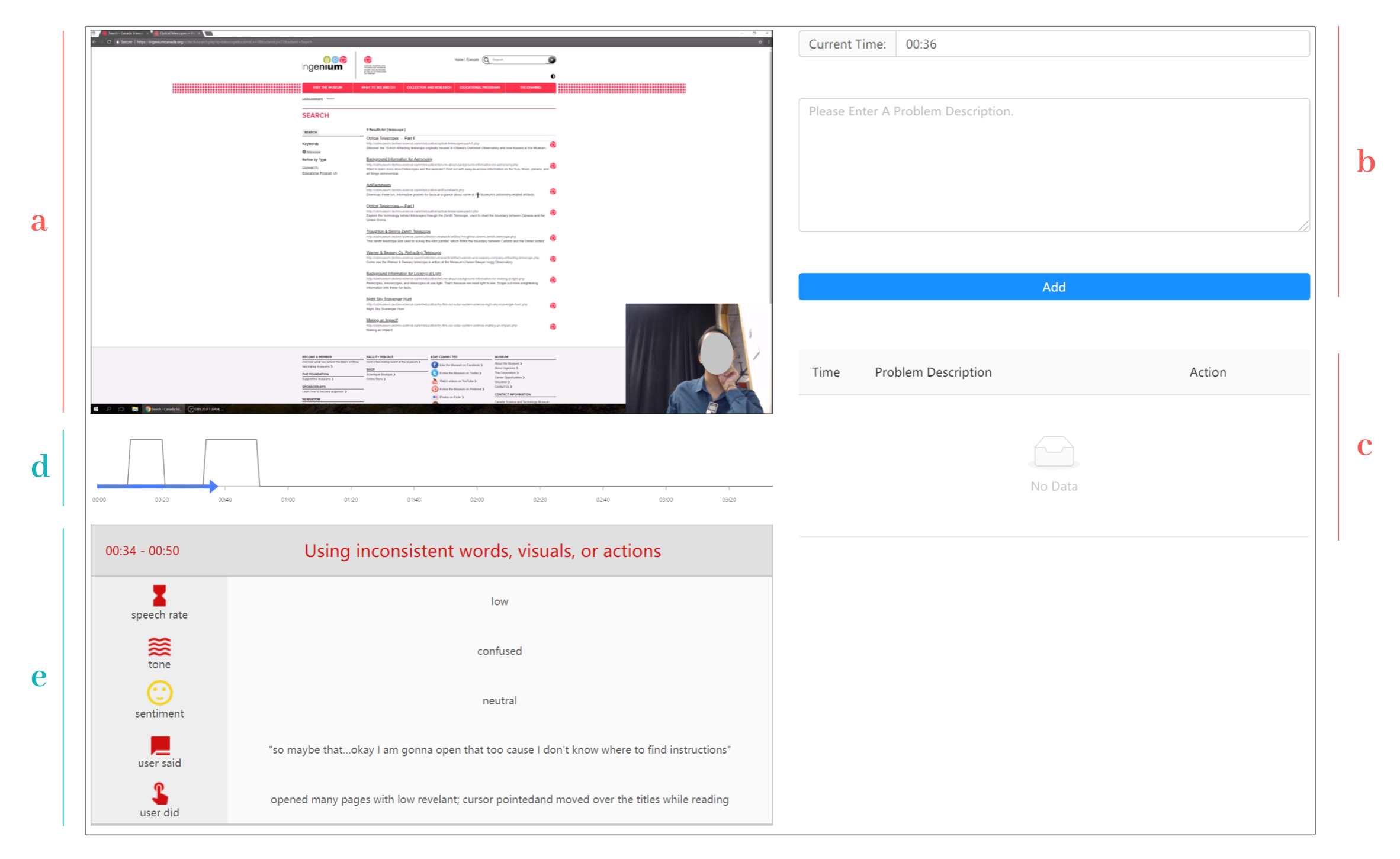

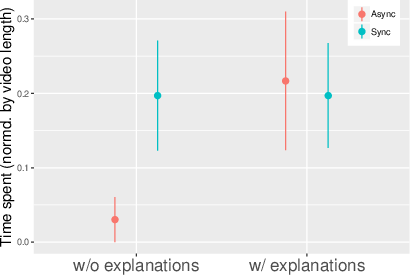

Figure 1: The user interface of AI Assistant that presents the AI-suggested problems synchronously with explanations: (a) video player; (b) annotation panel; (c) identified problem table; (d) timeline of the AI-suggested problems; (e) explanations of the AI-suggested problems.

To this end, the authors developed AI Assistant, simulating an AI through a Wizard-of-Oz (WoZ) approach to provide reliable suggestions. This tool was designed with four user interfaces (UIs) to explore two factors: explanations (with/without) and synchronization (synchronous/asynchronous). A study was conducted with 24 UX evaluators using AI Assistant to identify UX problems from usability test videos.

Methodology

Design of AI Assistant

AI Assistant was iteratively designed to aid UX evaluators by suggesting potential UX problems within usability test videos. The tool features a video player, an annotation panel, a problem table, an AI-suggested problems timeline, and, when applicable, an explanations panel. The WoZ approach simulated an AI capable of detecting UX problems with reasonable performance, facilitating a controlled study environment.

Experimental Design and Procedure

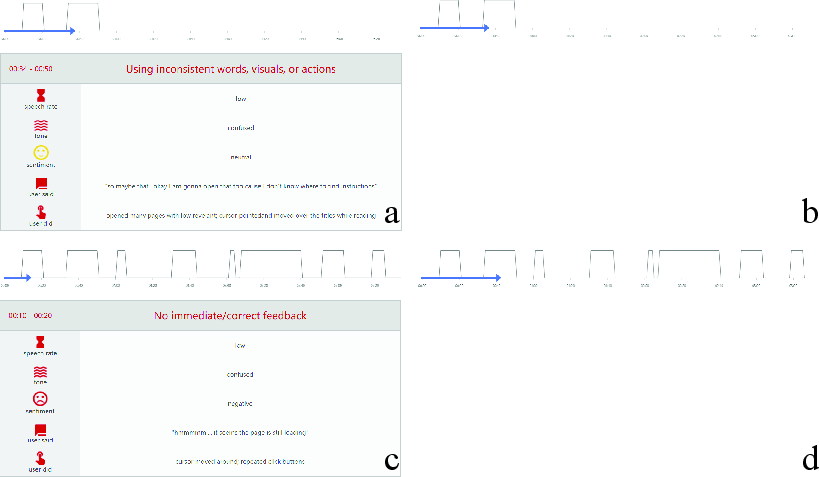

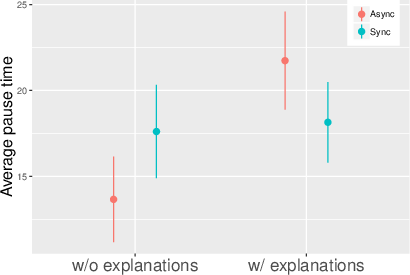

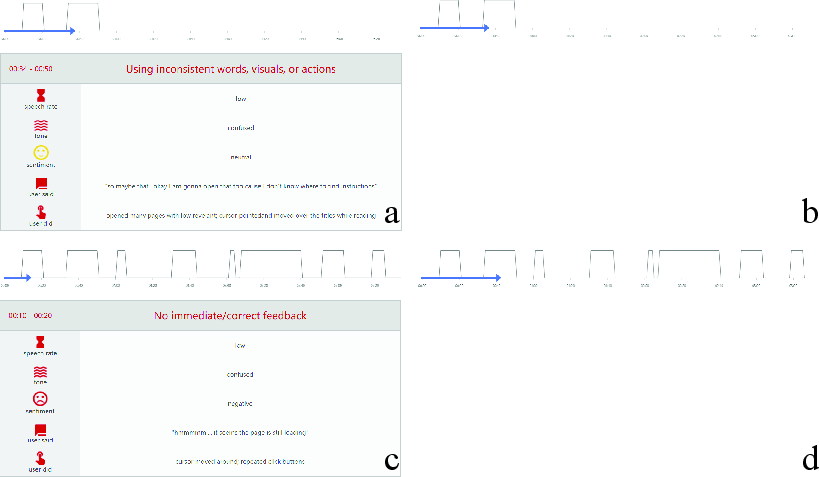

The mixed-methods study used a 2-by-2 mixed factorial design, with explanations as a between-subjects factor and synchronization as a within-subjects factor. Each participant was assigned to either the explanation or the no-explanation condition and completed tasks using both the synchronous and the asynchronous UI (Figure 2).

Figure 2: Comparison of the four different versions of AI Assistant.
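The assignment logic of such a design can be sketched in a few lines of Python. This is a minimal illustration, not the authors' procedure: the even 12/12 split across explanation conditions and the counterbalanced order of the synchronous and asynchronous tasks are assumptions, and all names (`assign_conditions`, `EXPLANATION_LEVELS`, `SYNC_ORDERS`) are hypothetical.

```python
# Between-subjects factor: each participant sees only one level.
EXPLANATION_LEVELS = ["with_explanations", "without_explanations"]

# Within-subjects factor: each participant sees both levels.
# Alternating the order is an assumed counterbalancing scheme,
# not something the summary above confirms.
SYNC_ORDERS = [
    ("synchronous", "asynchronous"),
    ("asynchronous", "synchronous"),
]


def assign_conditions(n_participants):
    """Return one assignment record per participant."""
    assignments = []
    for index in range(n_participants):
        assignments.append(
            {
                "participant": index + 1,
                # Alternate the between-subjects level across participants.
                "explanation": EXPLANATION_LEVELS[index % 2],
                # Alternate task order within each explanation group.
                "task_order": SYNC_ORDERS[(index // 2) % 2],
            }
        )
    return assignments


plan = assign_conditions(24)
for record in plan[:4]:
    print(record)
```

Under these assumptions, each of the four UI versions in Figure 2 is used by half of the participants, and each explanation group is split evenly between the two task orders.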

Each study session consisted of pre-task training, two formal tasks, and a post-task interview. Participants were experienced UX evaluators with expertise in UX research and usability testing. The study collected task performance data, behavioral patterns during tool interaction, and subjective perceptions via questionnaires and interviews.

Results

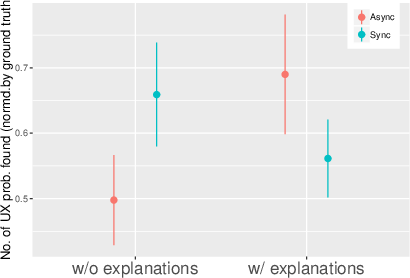

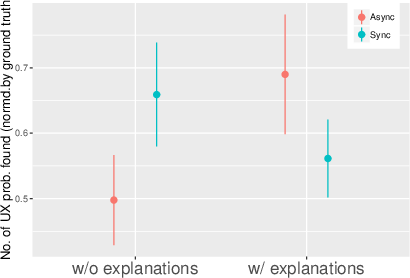

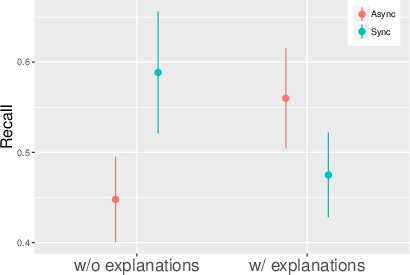

Quantitative analysis reveals significant interactions between the provision of explanations and synchronization, with explanations improving the UX evaluators' understanding of AI processes and enhancing their problem detection accuracy. Evaluators who received explanations also identified more of the problems that the AI had missed (its false negatives), suggesting that their evaluation remained robust to the AI's omissions (Figure 3).

Figure 3: Two-way interaction between explanations and synchronization on the number of UX problems found, demonstrating the impact on Recall.
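To make the Recall measure and the notion of AI false negatives concrete, the following Python sketch computes both from sets of problem identifiers. It is an illustration under assumptions: the function names and the example identifiers are hypothetical, and the paper's actual scoring procedure may differ.

```python
def precision_recall(found, ground_truth):
    """Precision and recall of an evaluator's findings against a reference set."""
    found, ground_truth = set(found), set(ground_truth)
    true_positives = found & ground_truth
    precision = len(true_positives) / len(found) if found else 0.0
    recall = len(true_positives) / len(ground_truth) if ground_truth else 0.0
    return precision, recall


def recovered_ai_misses(found, ground_truth, ai_suggested):
    """Real problems the evaluator found that the AI did not suggest."""
    ai_false_negatives = set(ground_truth) - set(ai_suggested)
    return set(found) & ai_false_negatives


# Hypothetical identifiers, for illustration only (not study data).
ground_truth = {"p1", "p2", "p3", "p4"}   # reference UX problems in a video
ai_suggested = {"p1", "p2", "x9"}         # AI misses p3 and p4; x9 is a false alarm
evaluator_found = {"p1", "p3", "x9"}      # evaluator accepts x9 but also catches p3

precision, recall = precision_recall(evaluator_found, ground_truth)
print(precision, recall)
print(recovered_ai_misses(evaluator_found, ground_truth, ai_suggested))
```

In this toy case the evaluator reaches a recall of 0.5 and recovers one problem, `p3`, that the AI failed to flag.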

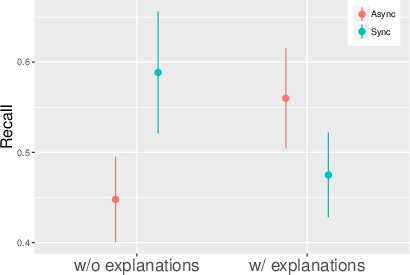

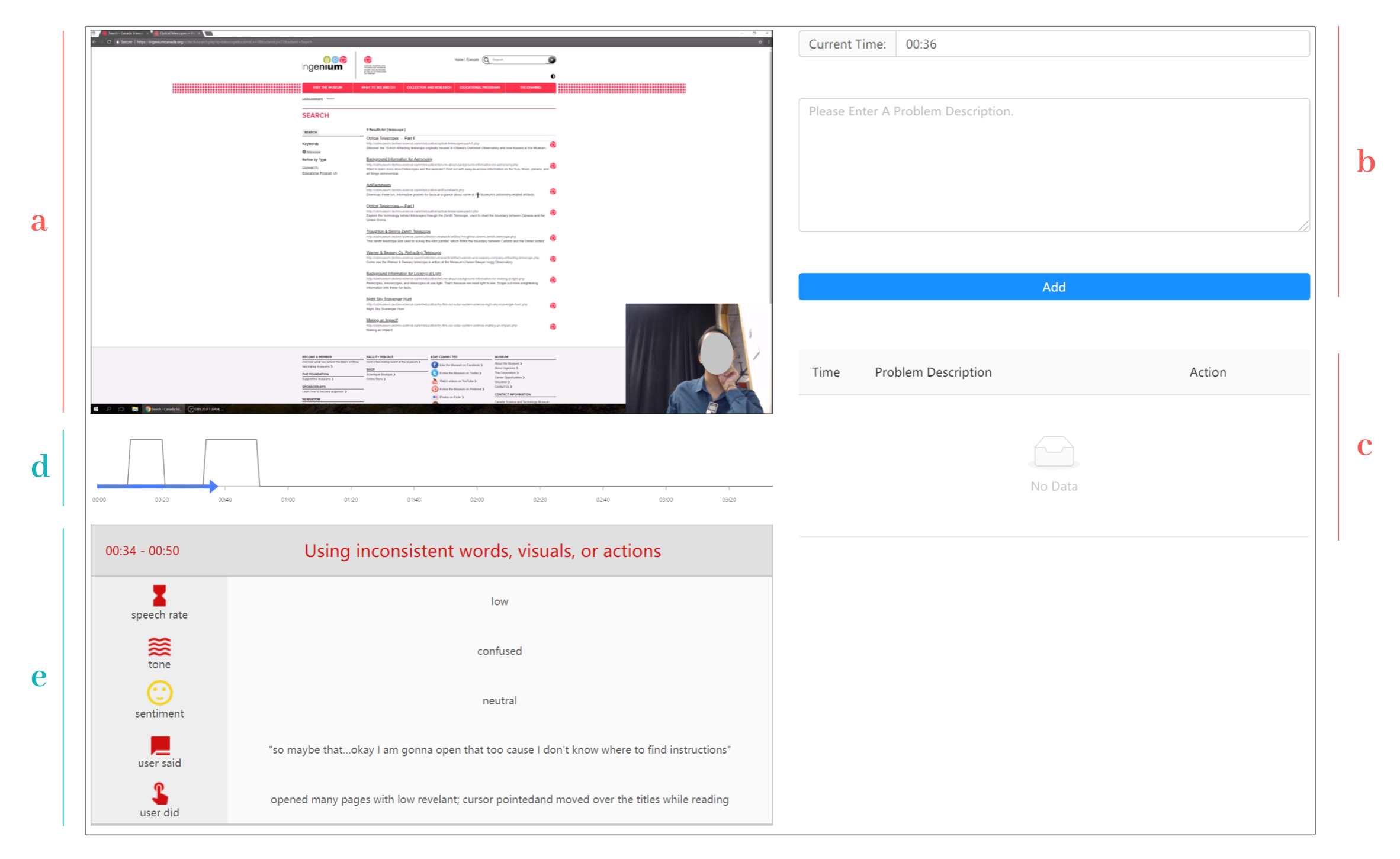

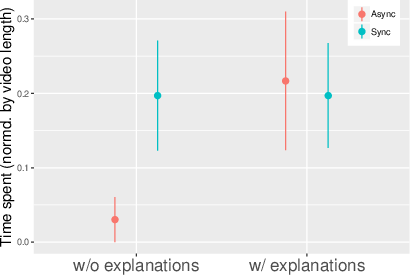

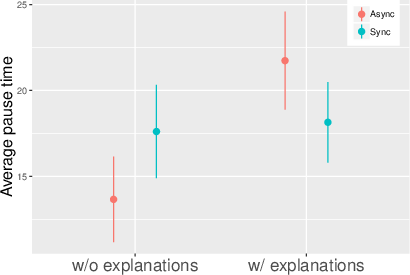

Behaviorally, the synchronous UI without explanations led to higher evaluator engagement, reflected in more time spent on analysis and more active interaction with the video. Synchronous presentation was preferred because it reduced the bias and cognitive overload associated with asynchronous presentation (Figure 4).

Figure 4: Two-way interaction between explanations and synchronization on the time spent on analysis (normalized by video length).
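The normalization mentioned in the caption can be read as a simple ratio of analysis time to video length. The sketch below assumes both quantities are measured in seconds; the function name and the example values are hypothetical and do not come from the study.

```python
def normalized_analysis_time(time_spent_s, video_length_s):
    """Analysis time expressed as a multiple of the video's length."""
    if video_length_s <= 0:
        raise ValueError("video_length_s must be positive")
    return time_spent_s / video_length_s


# Illustrative values only: 900 s spent analyzing a 600 s video.
ratio = normalized_analysis_time(900, 600)
print(ratio)
```

A ratio above 1 means the evaluator spent longer on the analysis than the video's running time, for instance by pausing or rewinding, which makes sessions on videos of different lengths comparable.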

Subjective Perceptions

Participants reported enhanced satisfaction and understanding when AI explanations were present. The ability to cross-verify AI-suggested problems with personal judgments was highly valued. While the effect on trust was not statistically significant, participants' attitudes indicate potential trust improvements linked to better AI understanding.

Implications for Human-AI Collaboration

The study suggests that AI, by offering justifications and timely insights, can serve as a valuable partner in UX evaluations rather than as a stand-in for human expertise. While AI explanations facilitate understanding and task efficiency, synchronization enhances user engagement and preserves human agency. Design strategies should ensure AI explanations are nuanced, offering flexibility for personalized evaluator needs. Future work may focus on adaptive AI systems that leverage user feedback for learning and customization, potentially minimizing the limitations of current WoZ simulations.

Lastly, whether these findings carry over to other domains involving high-stakes decisions remains an open question: the "junior colleague" metaphor for the AI may not transfer directly to settings where agency and user trust in AI are viewed differently.

Conclusion

This paper demonstrates the nuanced impacts of AI explanations and synchronization on enhancing UX evaluators' performance and engagement in analyzing usability test videos. By carefully designing AI tools that balance intelligent assistance with human analytical skills, future AI systems can be optimized for more effective and efficient human-AI collaboration, with potential implications across UX evaluation and other domains requiring nuanced judgment and decision-making.