- The paper introduces Latent Diffusion Models (LDMs) that decouple perceptual compression from generative modeling for efficient high-resolution image synthesis.

- It employs a time-conditional UNet in latent space to achieve faster convergence and superior FID scores compared to traditional pixel-based diffusion models.

- The approach supports multi-modal conditioning, delivering state-of-the-art results in text-to-image synthesis, super-resolution, and inpainting tasks.

High-Resolution Image Synthesis with Latent Diffusion Models

Introduction and Motivation

The paper introduces Latent Diffusion Models (LDMs), a framework for high-resolution image synthesis that leverages diffusion models in the latent space of pretrained autoencoders. Traditional diffusion models (DMs) operate in pixel space, which incurs substantial computational costs for both training and inference due to the high dimensionality and the need to model imperceptible details. LDMs address this by decoupling perceptual compression from generative modeling: an autoencoder first compresses images into a lower-dimensional latent space that preserves perceptual fidelity, and a diffusion model is then trained in this space. This approach enables efficient training and sampling, democratizing access to high-quality generative models.

Methodology

Perceptual Compression via Autoencoders

LDMs begin with a perceptual compression stage using an autoencoder trained with a combination of perceptual and adversarial losses. The encoder E maps an image x∈RH×W×3 to a latent z=E(x), and the decoder D reconstructs x from z. The latent space is regularized either via a KL penalty (VAE-style) or vector quantization (VQGAN-style), with the latter often yielding better scaling properties for downstream generative modeling. The compression factor f (downsampling ratio) is a critical hyperparameter, with f=4 or f=8 providing a favorable trade-off between efficiency and reconstruction fidelity.

(Figure 1)

Figure 1: Perceptual and semantic compression: LDMs suppress semantically meaningless information, enabling efficient training and inference in latent space.

Latent Diffusion Modeling

The diffusion model is trained in the latent space using a time-conditional UNet backbone. The objective is a denoising score-matching loss:

LDM:=EE(x),ϵ∼N(0,1),t[∥ϵ−ϵθ(zt,t)∥22]

where zt is a noisy version of the latent z. This formulation allows the model to focus on semantic content rather than pixel-level noise, resulting in faster convergence and reduced compute requirements.

Conditioning Mechanisms

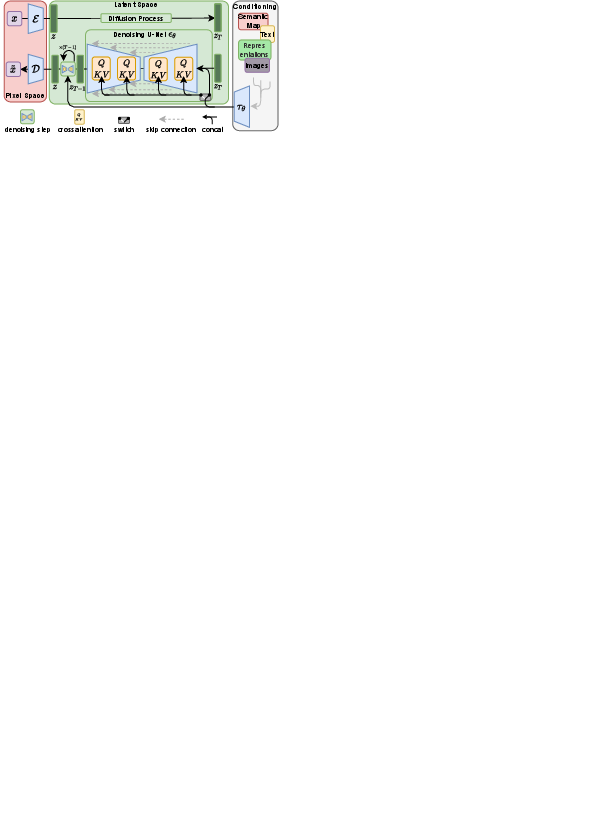

LDMs generalize to conditional generation tasks by augmenting the UNet with cross-attention layers. Conditioning inputs y (e.g., text, semantic maps, bounding boxes) are encoded via domain-specific encoders (e.g., transformers for text) and injected into the UNet via cross-attention. This enables flexible multi-modal conditioning and supports tasks such as text-to-image, layout-to-image, and semantic synthesis.

Figure 2: Conditioning LDMs via concatenation or cross-attention enables flexible multi-modal generation.

Experimental Results

Efficiency and Compression Trade-offs

Extensive experiments demonstrate that LDMs with moderate compression (f=4 or f=8) achieve superior sample quality and throughput compared to pixel-based DMs and highly compressed AR models. For example, on ImageNet, LDM-4 achieves a 38-point FID improvement over pixel-based DMs after 2M training steps, with a 2.7× speedup in sampling.

(Figure 3)

Figure 3: FID and IS on LSUN-Churches vs training iterations, showing rapid convergence for LDMs with moderate compression.

Unconditional and Conditional Generation

LDMs set new state-of-the-art FID scores for unconditional synthesis on CelebA-HQ (FID=5.11) and competitive results on FFHQ, LSUN-Churches, and LSUN-Bedrooms. Precision and recall metrics confirm improved mode coverage over GANs. For class-conditional ImageNet generation, LDMs outperform previous DMs (ADM) with fewer parameters and lower compute.

(Figure 4)

Figure 4: LDM samples on CelebA-HQ, FFHQ, LSUN-Churches, LSUN-Beds, and ImageNet, demonstrating high-fidelity synthesis.

Text-to-Image and Layout-to-Image Synthesis

The cross-attention mechanism enables LDMs to excel in text-to-image synthesis. On MS-COCO, LDM-KL-8-G achieves FID=12.61 and IS=26.62, matching or surpassing recent AR and DM baselines with significantly fewer parameters. Layout-to-image models trained on OpenImages and COCO outperform prior works in FID and qualitative diversity.

(Figure 5)

Figure 5: Text-to-image samples for user-defined prompts, illustrating semantic alignment and visual diversity.

Super-Resolution and Inpainting

LDMs are effective for super-resolution and inpainting tasks. On ImageNet 64→256 super-resolution, LDM-SR achieves FID=2.4, outperforming SR3 in FID while maintaining competitive IS. Inpainting experiments on Places show LDMs surpass LaMa and CoModGAN in FID, with user studies confirming human preference for LDM outputs.

(Figure 6)

Figure 6: Super-resolution comparison: LDM-SR renders realistic textures and generalizes to arbitrary inputs.

Implementation Considerations

- Autoencoder Training: Use perceptual and adversarial losses; regularize latent space with KL or VQ. For f=4, latent dimensionality is 64×64×3 for 256×256 images.

- Diffusion Model: UNet backbone with time embedding; train in latent space using reweighted denoising loss. For conditional tasks, inject conditioning via cross-attention.

- Sampling: DDIM or DDPM samplers; 50–200 steps suffice for high-quality synthesis in latent space.

- Compute: LDMs require 1–2 orders of magnitude less compute than pixel-based DMs or AR models. Training on a single A100 GPU is feasible for 2562 images.

- Scaling: Convolutional sampling enables generalization to resolutions >2562 (e.g., 10242) for spatially conditioned tasks.

Limitations and Future Directions

While LDMs dramatically reduce computational requirements, sequential sampling remains slower than GANs. Reconstruction fidelity of the autoencoder can be a bottleneck for tasks requiring pixel-level accuracy. Further research is needed on scaling to even higher resolutions, improving autoencoder architectures, and exploring new conditioning modalities. Societal impacts include democratization of generative modeling but also risks of misuse and data leakage.

Conclusion

Latent Diffusion Models represent a significant advance in efficient, high-fidelity image synthesis. By decoupling perceptual compression from generative modeling and leveraging cross-attention for flexible conditioning, LDMs achieve state-of-the-art results across unconditional, conditional, super-resolution, and inpainting tasks, all while drastically reducing computational requirements. This framework opens new avenues for scalable, accessible generative modeling in both research and real-world applications.