GFlowNet Foundations (2111.09266v4)

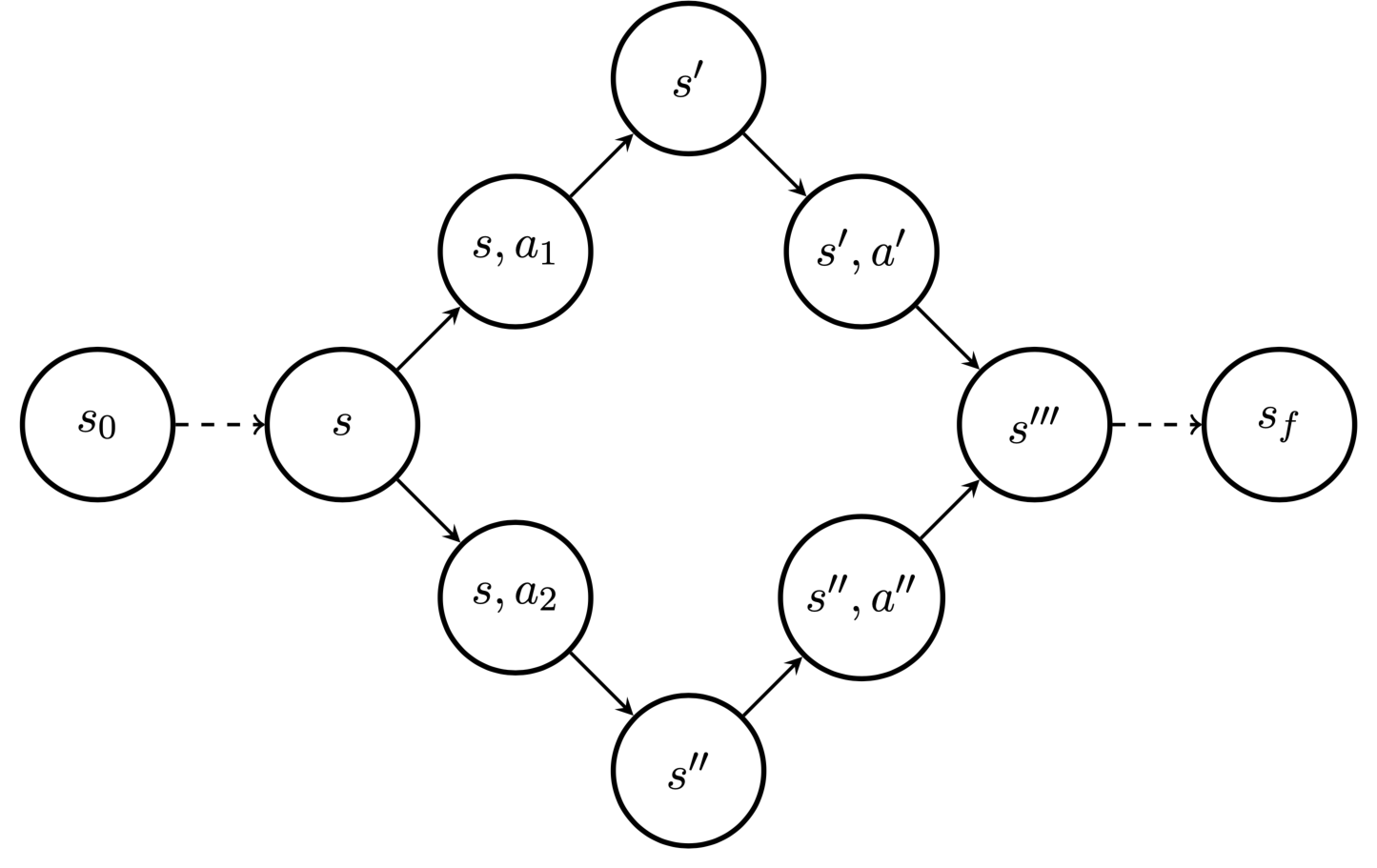

Abstract: Generative Flow Networks (GFlowNets) have been introduced as a method to sample a diverse set of candidates in an active learning context, with a training objective that makes them approximately sample in proportion to a given reward function. In this paper, we show a number of additional theoretical properties of GFlowNets. They can be used to estimate joint probability distributions and the corresponding marginal distributions where some variables are unspecified and, of particular interest, can represent distributions over composite objects like sets and graphs. GFlowNets amortize the work typically done by computationally expensive MCMC methods in a single but trained generative pass. They could also be used to estimate partition functions and free energies, conditional probabilities of supersets (supergraphs) given a subset (subgraph), as well as marginal distributions over all supersets (supergraphs) of a given set (graph). We introduce variations enabling the estimation of entropy and mutual information, sampling from a Pareto frontier, connections to reward-maximizing policies, and extensions to stochastic environments, continuous actions and modular energy functions.
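The claim that a GFlowNet samples "in proportion to a given reward function" and can estimate a partition function can be illustrated on a toy problem. The following is a minimal sketch, not the paper's implementation, and everything specific in it is an assumption: objects are subsets of {0, 1, 2} built by adding one element at a time, any subset may terminate, the reward `reward(s) = 1 + sum(s)` is hypothetical, and the flows are computed exactly with a uniform backward policy instead of being learned by a neural network.

```python
from itertools import combinations

ELEMENTS = (0, 1, 2)  # hypothetical ground set for the toy example


def reward(s):
    # Hypothetical positive reward; not taken from the paper.
    return 1.0 + sum(s)


def all_states():
    # Every subset of ELEMENTS is a state, and every state may terminate.
    return [frozenset(c)
            for k in range(len(ELEMENTS) + 1)
            for c in combinations(ELEMENTS, k)]


def children(s):
    # One construction step: add a single missing element.
    return [s | {e} for e in ELEMENTS if e not in s]


def state_flows():
    # Assumed uniform backward policy: each of the len(c) parents of a child c
    # receives an equal share flow[c] / len(c) of the child's flow.
    # Flow conservation: flow[s] = reward(s) + sum of outgoing edge flows.
    flow = {}
    for s in sorted(all_states(), key=len, reverse=True):
        flow[s] = reward(s) + sum(flow[c] / len(c) for c in children(s))
    return flow


def terminating_probs(flow):
    # Forward policy induced by the flows:
    #   P(terminate | s) = reward(s) / flow[s]
    #   P(s -> c | s)    = (flow[c] / len(c)) / flow[s]
    # reach[s] is the probability that a trajectory visits s.
    reach = {s: 0.0 for s in flow}
    reach[frozenset()] = 1.0
    probs = {}
    for s in sorted(flow, key=len):
        probs[s] = reach[s] * reward(s) / flow[s]
        for c in children(s):
            reach[c] += reach[s] * flow[c] / (len(c) * flow[s])
    return probs


flow = state_flows()
Z = flow[frozenset()]  # flow through the initial (empty) state
probs = terminating_probs(flow)
```

In this sketch the flow through the empty set, `Z`, equals the sum of all rewards (the partition function of the toy problem), and `probs[s]` matches `reward(s) / Z` for every subset, so a single forward pass through the policy yields reward-proportional samples without running a Markov chain.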

