- The paper presents a hybrid architecture combining heuristic and NN-based classifiers that enables real-time gesture recognition on mobile devices.

- Enhancements to MediaPipe Hands improve 3D keypoint tracking, increasing mAP from 66.5 to 71.3 on a validation set with diverse hand poses.

- The system leverages GPU acceleration via OpenGL/WebGL to achieve robust, low-latency performance at 30fps for dynamic human-computer interaction.

On-device Real-time Hand Gesture Recognition

Introduction

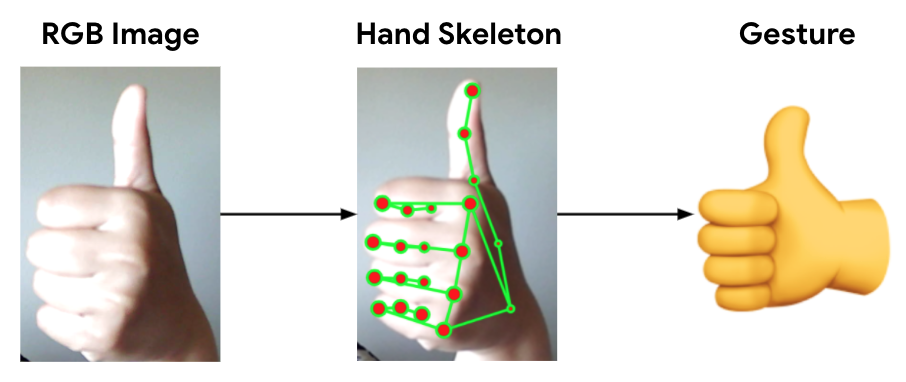

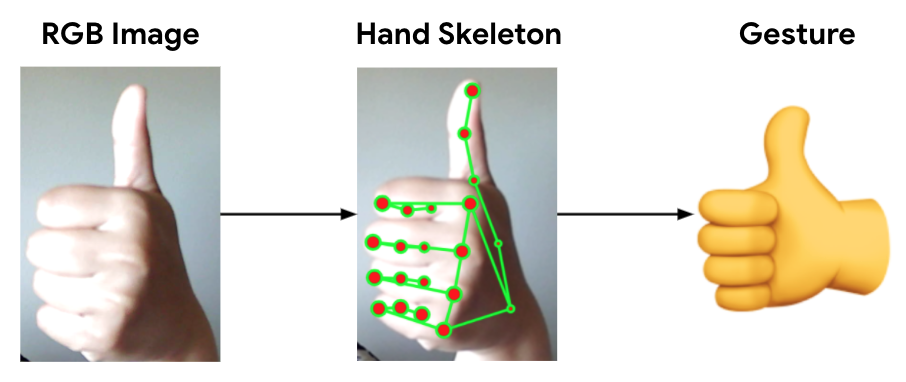

The paper presents a system for on-device, real-time hand gesture recognition (HGR) that identifies predefined static gestures using a single RGB camera. The system aims to facilitate human-computer interaction by using MediaPipe Hands to predict 3D skeleton keypoints and then classifying gestures with heuristic-based and neural network (NN)-based classifiers. It runs in real time at 30fps on common mobile devices.

Architecture

The proposed HGR system consists of two components: a hand skeleton tracker and a gesture classifier. The hand skeleton tracker is an improved version of MediaPipe Hands whose enhancements increase keypoint accuracy and enable 3D keypoint estimation in a world metric space. To reduce computational load, the gesture classifier runs only when a hand is detected.

Figure 1: Our hand gesture recognition system.
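The two-stage control flow described above, where the classifier is skipped on frames without a hand, can be sketched as follows. This is a minimal illustration under stated assumptions: `recognize_frame`, `track_hand` and `classify_gesture` are hypothetical names (not MediaPipe APIs), and the tracker is assumed to return `None` when no hand is found.

```python
from typing import Callable, Optional, Sequence, Tuple

# Hypothetical alias: 21 (x, y, z) keypoints from a hand skeleton tracker.
Landmarks = Sequence[Tuple[float, float, float]]


def recognize_frame(
    frame: object,
    track_hand: Callable[[object], Optional[Landmarks]],
    classify_gesture: Callable[[Landmarks], str],
) -> Optional[str]:
    """Run the tracker, then the classifier only if a hand was found.

    Both callables are hypothetical stand-ins. Skipping the classifier
    on hand-free frames is what saves computation.
    """
    landmarks = track_hand(frame)
    if landmarks is None:
        return None  # No hand detected: classification is not run at all.
    return classify_gesture(landmarks)
```

Any concrete tracker or classifier can be plugged in, since the function only depends on the two callables.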

Hand Skeleton Tracker

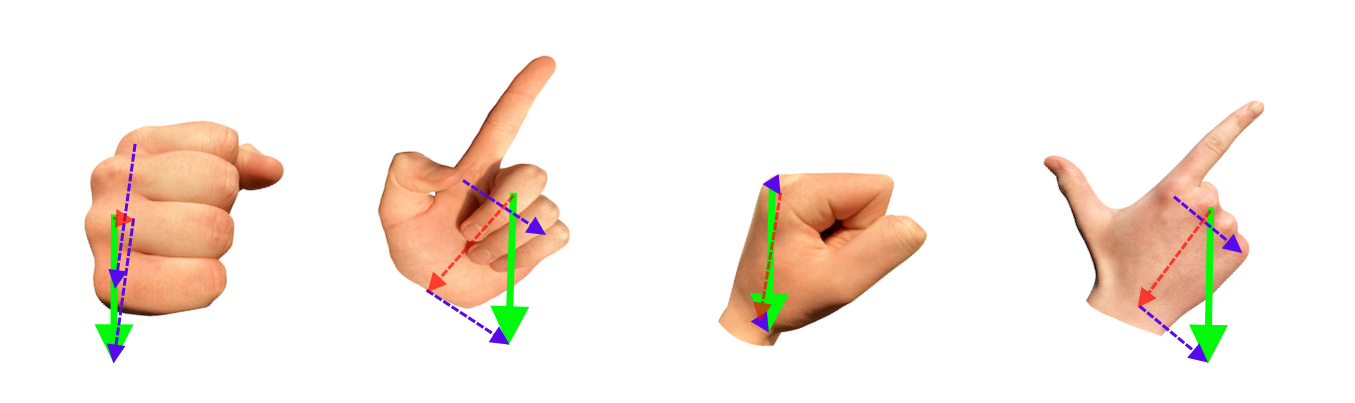

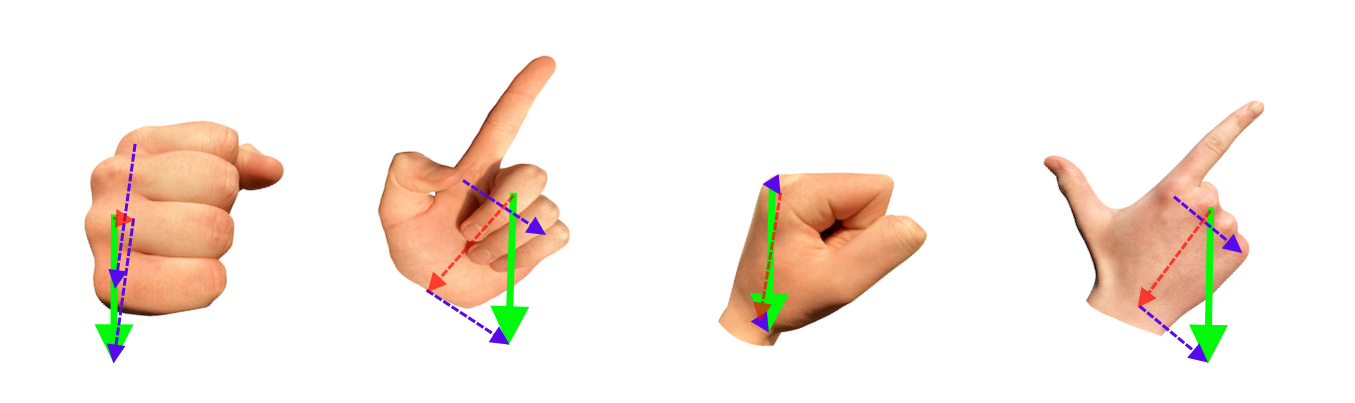

Enhancements to MediaPipe Hands make hand keypoint estimation more robust, which is crucial for the downstream gesture classification. In particular, the updated tracker fixes problems in rotation and scale estimation that previously made tracking unstable in frontal views. It does so by defining virtual keypoints and deriving the hand rotation angle from the sum of two vectors (Figure 2). These changes improve tracking accuracy in complex poses: mean average precision (mAP) on a validation dataset with varied hand poses rises from 66.5 to 71.3.

Figure 2: Hand rotation angle derived from the sum of two vectors: index to pinky base knuckle (in green) and middle base knuckle to wrist (in red).
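The vector-sum rotation estimate in Figure 2 can be sketched as below. This assumes the standard 21-landmark MediaPipe Hands indexing (wrist = 0, index base knuckle = 5, middle base knuckle = 9, pinky base knuckle = 17) and 2D image coordinates. Returning the raw `atan2` angle of the summed vector, with no canonical offset and no virtual keypoints, is our simplification rather than the paper's exact formula; `hand_rotation_angle` is a hypothetical name.

```python
import math

# 21-landmark MediaPipe Hands indices used by the rotation estimate.
WRIST, INDEX_MCP, MIDDLE_MCP, PINKY_MCP = 0, 5, 9, 17


def hand_rotation_angle(landmarks):
    """Angle (radians, in [-pi, pi]) of the summed vector from Figure 2.

    landmarks: sequence of 21 points; only x and y are used. No canonical
    offset is applied, so the value is meaningful relative to other frames.
    """
    ix, iy = landmarks[INDEX_MCP][:2]
    px, py = landmarks[PINKY_MCP][:2]
    mx, my = landmarks[MIDDLE_MCP][:2]
    wx, wy = landmarks[WRIST][:2]
    # Vector 1 (green): index base knuckle -> pinky base knuckle.
    v1 = (px - ix, py - iy)
    # Vector 2 (red): middle base knuckle -> wrist.
    v2 = (wx - mx, wy - my)
    # The estimate is the direction of the sum of the two vectors.
    sx, sy = v1[0] + v2[0], v1[1] + v2[1]
    return math.atan2(sy, sx)
```

Because the estimate sums a vector across the palm with one along it, it does not rely on a single bone direction, and rotating the whole hand by an angle shifts the result by the same angle.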

Heuristics Gesture Classifier

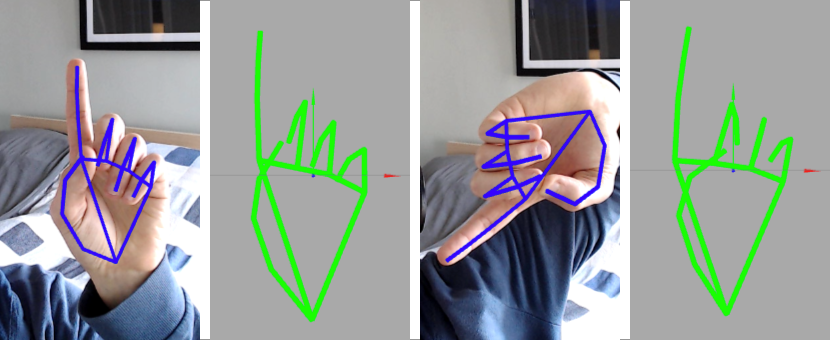

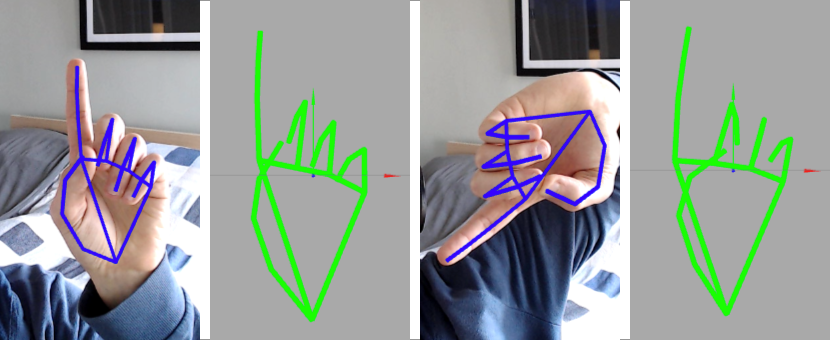

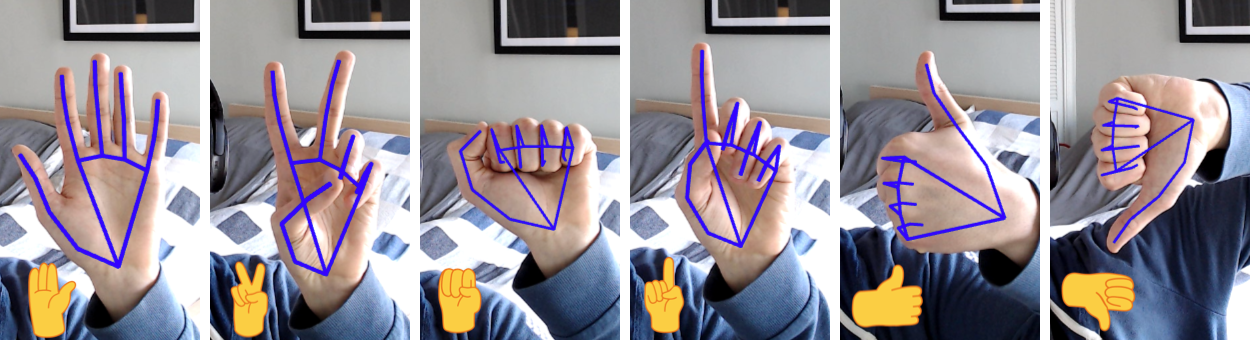

Building on the hand skeleton tracker, a heuristic-based classifier recognizes a predefined set of static gestures. It evaluates angles between 3D keypoints to determine the state of each finger, so that each gesture can be defined as a simple logical expression over finger states. To make classification more stable and consistent, extrinsic palm pose features are removed and only intrinsic hand angles are used.

Figure 3: 3D hand keypoints decoupled from the palm pose during the preprocessing stage. The blue hand skeleton is based on the 2D hand keypoints. The green hand skeleton is based on the preprocessed 3D hand keypoints.
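One plausible way to decouple 3D keypoints from the palm pose is to express them in a wrist-centred palm frame, as sketched below. This is an assumption, not the paper's documented preprocessing: the frame construction (y-axis from wrist to middle base knuckle, palm normal from the index-to-pinky knuckle vector), the scale normalization by palm length, and the name `to_palm_frame` are all illustrative. Landmark indices follow the 21-point MediaPipe Hands layout.

```python
import math

WRIST, INDEX_MCP, MIDDLE_MCP, PINKY_MCP = 0, 5, 9, 17


def _sub(a, b):
    return tuple(ai - bi for ai, bi in zip(a, b))


def _dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _normalize(a):
    n = math.sqrt(_dot(a, a))
    return tuple(ai / n for ai in a)


def to_palm_frame(landmarks):
    """Express 21 (x, y, z) keypoints in a wrist-centred palm frame.

    Translation, rotation and overall scale of the palm are removed, so the
    output depends only on the hand's intrinsic shape.
    """
    origin = landmarks[WRIST]
    wrist_to_middle = _sub(landmarks[MIDDLE_MCP], origin)
    palm_length = math.sqrt(_dot(wrist_to_middle, wrist_to_middle))
    y_axis = _normalize(wrist_to_middle)
    across = _sub(landmarks[PINKY_MCP], landmarks[INDEX_MCP])
    z_axis = _normalize(_cross(across, y_axis))  # palm normal
    x_axis = _cross(y_axis, z_axis)              # completes a right-handed frame
    result = []
    for p in landmarks:
        d = _sub(p, origin)
        result.append((_dot(d, x_axis) / palm_length,
                       _dot(d, y_axis) / palm_length,
                       _dot(d, z_axis) / palm_length))
    return result
```

Any rigid rotation, translation, or uniform scaling of the input hand yields the same output, which is the property the heuristic classifier relies on.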

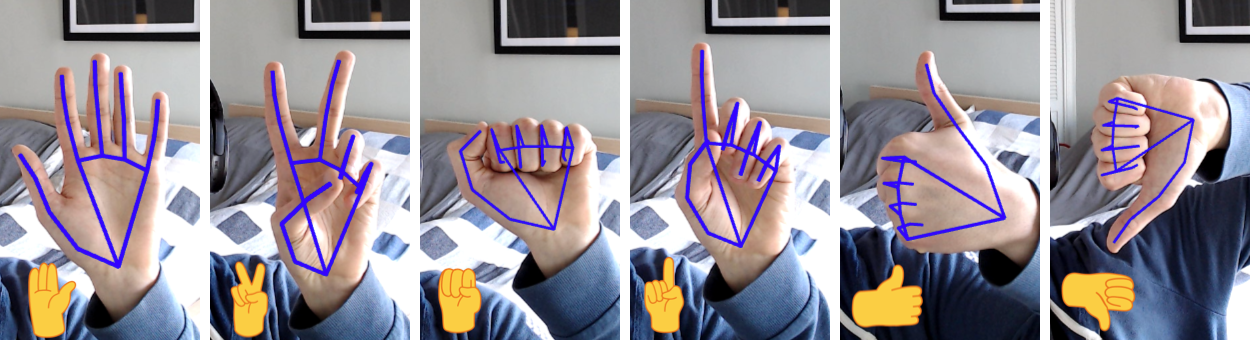

Figure 4: Visualization of gestures supported by the heuristic-based classifier. Left-to-right: OpenPalm, Victory, ClosedFist, PointingUp, ThumbUp, ThumbDown.
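The finger-state logic described above can be sketched as follows. Everything specific here is hypothetical: the binary straight/bent state, the 160° threshold, the rule set, and the function names. The thumb is omitted, so only OpenPalm, Victory and ClosedFist from Figure 4 are covered; PointingUp, ThumbUp and ThumbDown would also need thumb state and hand direction. Keypoint indices follow the 21-point MediaPipe Hands layout.

```python
import math
from typing import Optional

# Keypoint indices per finger in the 21-landmark MediaPipe Hands layout:
# (base knuckle/MCP, PIP, DIP, tip). The thumb is omitted in this sketch.
FINGERS = {
    "index": (5, 6, 7, 8),
    "middle": (9, 10, 11, 12),
    "ring": (13, 14, 15, 16),
    "pinky": (17, 18, 19, 20),
}

STRAIGHT_DEG = 160.0  # Hypothetical threshold; not taken from the paper.


def joint_angle(a, b, c):
    """Angle in degrees at joint b between the bones b->a and b->c."""
    ba = [ai - bi for ai, bi in zip(a, b)]
    bc = [ci - bi for ci, bi in zip(c, b)]
    dot = sum(x * y for x, y in zip(ba, bc))
    norm = math.sqrt(sum(x * x for x in ba)) * math.sqrt(sum(x * x for x in bc))
    cos_angle = max(-1.0, min(1.0, dot / norm))  # guard against rounding
    return math.degrees(math.acos(cos_angle))


def finger_is_straight(landmarks, finger):
    """A finger counts as straight if both its PIP and DIP joints are nearly flat."""
    mcp, pip, dip, tip = FINGERS[finger]
    return (joint_angle(landmarks[mcp], landmarks[pip], landmarks[dip]) > STRAIGHT_DEG
            and joint_angle(landmarks[pip], landmarks[dip], landmarks[tip]) > STRAIGHT_DEG)


def classify_static_gesture(landmarks) -> Optional[str]:
    """Map finger states to a gesture via simple logical rules."""
    s = {finger: finger_is_straight(landmarks, finger) for finger in FINGERS}
    if all(s.values()):
        return "OpenPalm"
    if s["index"] and s["middle"] and not s["ring"] and not s["pinky"]:
        return "Victory"
    if not any(s.values()):
        return "ClosedFist"
    return None  # no rule matched
```

Because joint angles depend only on relative keypoint positions, these rules are unaffected by where the palm is or how it is oriented.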

Neural Network Gesture Classifier

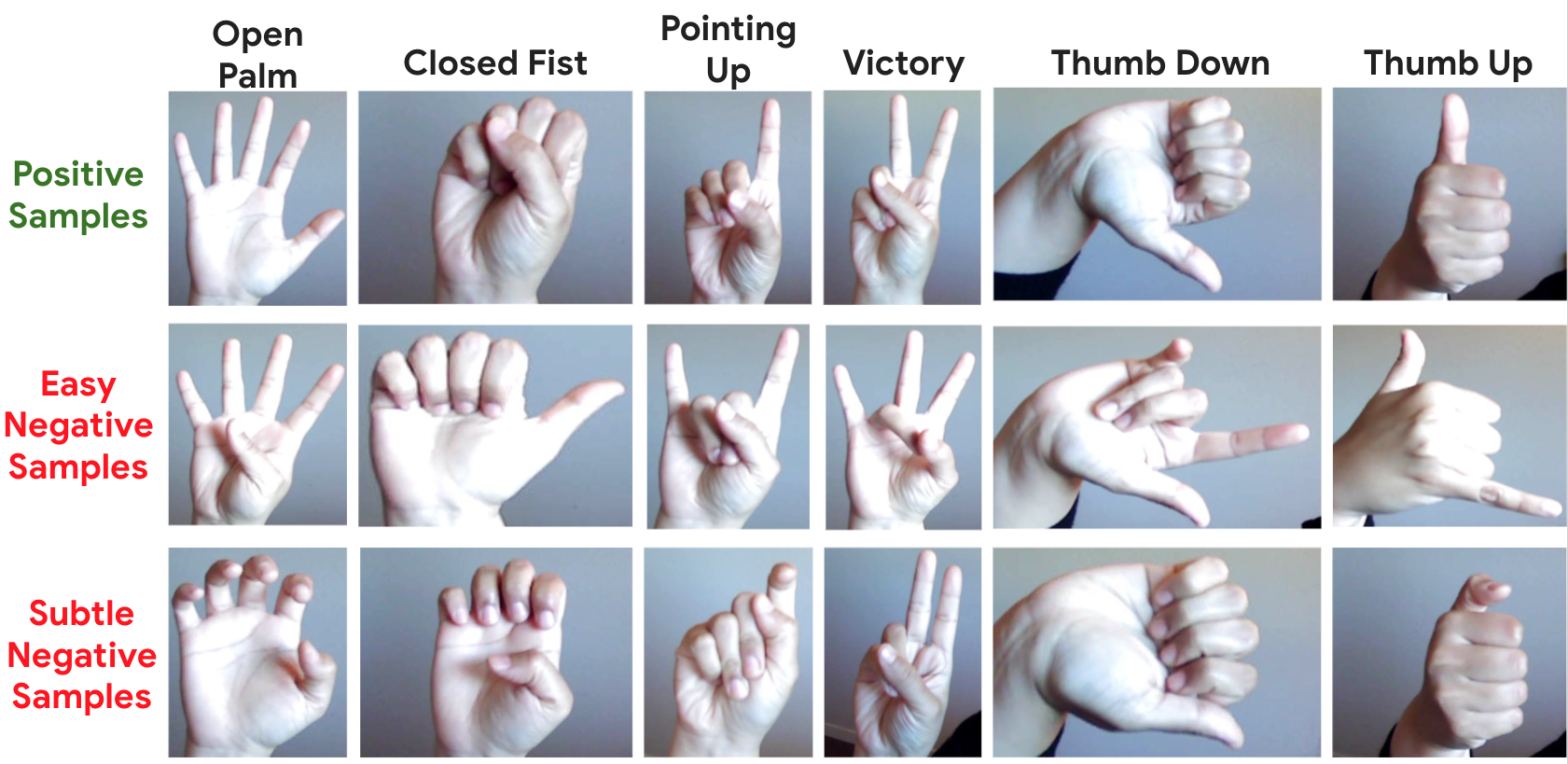

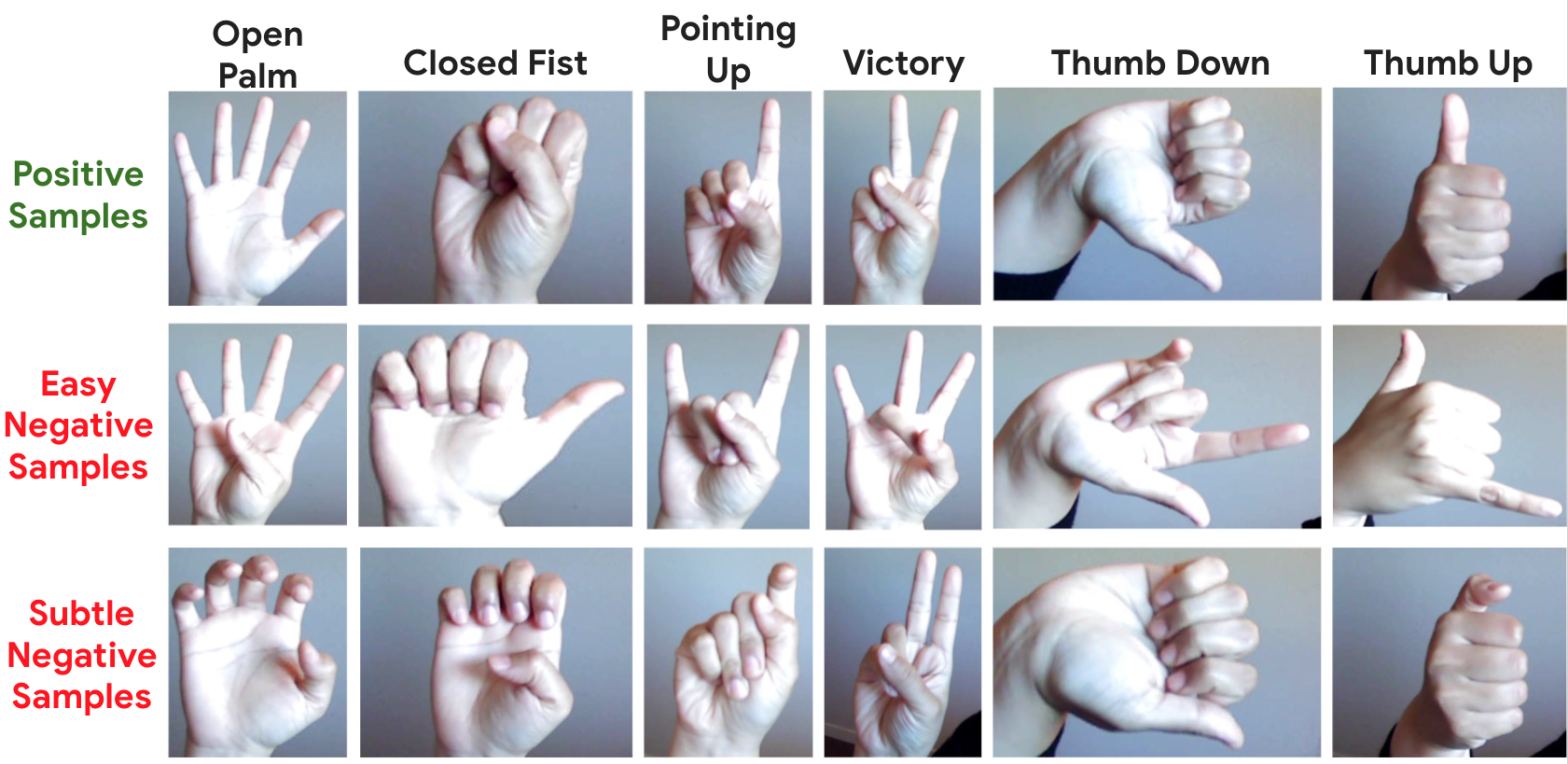

The NN-based gesture classifier is trained on an extensive dataset and outperforms the heuristic method, achieving 87.9% recall at a 1% false positive rate. Its architecture consists of three fully connected layers that take both intrinsic and extrinsic features as input. Training uses focal loss to address class imbalance, which is common in real-world datasets where negative samples far outnumber positive ones.

Figure 5: Examples of true positive samples for gesture classes, easy samples for Negative hand shapes, and subtle variations of hand shapes that should not be confused with the gesture class.
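Focal loss down-weights well-classified examples so that training concentrates on hard ones. A per-example multi-class version, FL = -α_t (1 - p_t)^γ log(p_t) with p_t the softmax probability of the true class, is sketched below in plain Python. The default γ = 2 is the value commonly used with focal loss, not a setting confirmed by this paper, and the optional per-class weights `alpha` are hypothetical. The three-layer network itself is not shown.

```python
import math
from typing import Optional, Sequence


def softmax(logits: Sequence[float]) -> list:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def focal_loss(logits: Sequence[float], target: int, gamma: float = 2.0,
               alpha: Optional[Sequence[float]] = None) -> float:
    """Multi-class focal loss for a single example.

    With gamma = 0 and no alpha this reduces to ordinary cross-entropy.
    """
    p_t = softmax(logits)[target]
    a_t = 1.0 if alpha is None else alpha[target]
    return -a_t * (1.0 - p_t) ** gamma * math.log(p_t)
```

The modulating factor (1 - p_t)^γ shrinks the loss of confidently correct predictions much more than that of hard ones, which keeps the many easy negatives from dominating the gradient.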

Integrating the proposed HGR system into the MediaPipe framework lets it manage computational resources by regulating how often detection and tracking run. GPU acceleration through OpenGL and WebGL enables real-time performance across a range of devices and applications. This adaptability is crucial in the resource-limited environments common to mobile applications.
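Regulating stage frequencies can be sketched as a small per-frame scheduler. This is a hypothetical illustration, not MediaPipe's implementation: `StageScheduler`, `detect_every` and `classify_every` are invented names with arbitrary defaults, and re-running detection whenever tracking is lost follows the common detect-then-track pattern rather than a detail stated in the paper.

```python
from dataclasses import dataclass, field
from typing import Tuple


@dataclass
class StageScheduler:
    """Hypothetical scheduler deciding which stages run on each frame."""
    detect_every: int = 10   # force detection at least this often (illustrative)
    classify_every: int = 2  # classify every N-th frame, reuse the label otherwise
    _frame: int = field(default=0, init=False)

    def __post_init__(self) -> None:
        if self.detect_every < 1 or self.classify_every < 1:
            raise ValueError("intervals must be positive")

    def step(self, tracking_lost: bool) -> Tuple[bool, bool]:
        """Return (run_detection, run_classifier) for the current frame."""
        i = self._frame
        self._frame += 1
        run_detection = tracking_lost or i % self.detect_every == 0
        run_classifier = i % self.classify_every == 0
        return run_detection, run_classifier
```

Larger intervals trade responsiveness for lower compute, which is the kind of knob that matters on resource-limited mobile devices.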

Applications and Implications

The HGR system is relevant to a range of human-computer interaction applications, such as virtual desktops, robotic interfaces, and gaming systems. Because all processing runs in real time on the mobile device itself, the system is well suited for adoption in consumer electronics, making gesture-based controls more accessible and versatile.

Conclusion

The "On-device Real-time Hand Gesture Recognition" system classifies gestures from a single RGB camera, and its real-time deployment has been validated on mobile platforms. Its dual-classifier design, which pairs a heuristic classifier with an NN-based one, offers a flexible and robust solution for interactive HCI tasks and a basis for future work on gesture-based interaction. Integration within the MediaPipe framework demonstrates an efficient, scalable deployment path for AI-driven HCI technologies.