MobileViT: Light-weight, General-purpose, and Mobile-friendly Vision Transformer

(arXiv:2110.02178)

Abstract

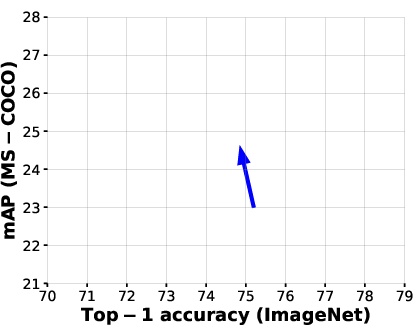

Light-weight convolutional neural networks (CNNs) are the de-facto for mobile vision tasks. Their spatial inductive biases allow them to learn representations with fewer parameters across different vision tasks. However, these networks are spatially local. To learn global representations, self-attention-based vision transformers (ViTs) have been adopted. Unlike CNNs, ViTs are heavy-weight. In this paper, we ask the following question: is it possible to combine the strengths of CNNs and ViTs to build a light-weight and low latency network for mobile vision tasks? Towards this end, we introduce MobileViT, a light-weight and general-purpose vision transformer for mobile devices. MobileViT presents a different perspective for the global processing of information with transformers, i.e., transformers as convolutions. Our results show that MobileViT significantly outperforms CNN- and ViT-based networks across different tasks and datasets. On the ImageNet-1k dataset, MobileViT achieves top-1 accuracy of 78.4% with about 6 million parameters, which is 3.2% and 6.2% more accurate than MobileNetv3 (CNN-based) and DeIT (ViT-based) for a similar number of parameters. On the MS-COCO object detection task, MobileViT is 5.7% more accurate than MobileNetv3 for a similar number of parameters. Our source code is open-source and available at: https://github.com/apple/ml-cvnets

Overview

- MobileViT combines the strengths of Convolutional Neural Networks (CNNs) and Vision Transformers (ViTs) to develop a light-weight, low-latency network for mobile vision tasks.

- The MobileViT block integrates local feature extraction using convolutions with global dependencies handled by transformers, achieving a balance of robust performance and efficiency.

- Empirical results show MobileViT outperforms previous state-of-the-art models like MobileNetv3 and DeIT on datasets such as ImageNet-1k and MS-COCO, with easier training processes and less reliance on extensive data augmentation.

Insights on MobileViT: Light-weight, General-purpose, and Mobile-friendly Vision Transformer

The paper "MobileViT: Light-weight, General-purpose, and Mobile-friendly Vision Transformer", authored by Sachin Mehta and Mohammad Rastegari, introduces MobileViT, an architecture that integrates the benefits of Convolutional Neural Networks (CNNs) and Vision Transformers (ViTs) for mobile vision tasks. This paper is particularly relevant for researchers interested in efficient model design for resource-constrained environments.

Summary of Contributions

- Combination of CNNs and ViTs: The authors address a critical question: Is it possible to combine the strengths of CNNs and ViTs to build a light-weight, low-latency network for mobile vision tasks? Their answer is MobileViT, a hybrid architecture that integrates the spatial inductive biases of CNNs with the global representation learning capabilities of ViTs.

- Architecture Design: MobileViT models incorporate a novel MobileViT block that performs global processing using transformers while retaining local inductive biases provided by convolutions. Specifically, convolutions are used for local feature extraction, and transformers handle the global dependencies, thus facilitating the learning of robust and high-performing representations while remaining light-weight.

- Performance Comparison: The paper reports strong numerical results for MobileViT. On the ImageNet-1k dataset, MobileViT achieves a top-1 accuracy of 78.4% with around 6 million parameters. For a similar parameter count, this is 3.2 percentage points higher than MobileNetv3 (CNN-based) and 6.2 percentage points higher than DeIT (ViT-based). Additionally, for object detection on the MS-COCO dataset, MobileViT is 5.7% more accurate than MobileNetv3 for a similar number of parameters.

- Ease of Optimization: Unlike traditional ViT models, which require extensive data augmentation and are sensitive to hyper-parameters like L2 regularization, MobileViT models use simple training recipes, making them easy to train and less dependent on these augmentations.
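The division of labour in the MobileViT block (convolutions for local features, transformers for global dependencies) can be illustrated with a minimal sketch. It follows the paper's description of unfolding a feature map into non-overlapping patches, mixing information across patches at each pixel position, and folding the result back. It is not the authors' implementation: the function names `unfold`, `fold`, and `mix_across_patches` are hypothetical, the map has a single channel, the convolutions around this step are omitted, and a plain average across patches stands in for the transformer.

```python
def _index(i, j, ph, pw, n_cols):
    """Map pixel (i, j) to (position inside its patch, patch index)."""
    p = (i % ph) * pw + (j % pw)
    n = (i // ph) * n_cols + (j // pw)
    return p, n


def unfold(feature_map, ph, pw):
    """Split an H x W map into non-overlapping ph x pw patches.

    Returns x where x[p][n] is the value at pixel position p of patch n.
    """
    H, W = len(feature_map), len(feature_map[0])
    assert H % ph == 0 and W % pw == 0, "sketch assumes divisible sizes"
    n_cols = W // pw
    x = [[0.0] * ((H // ph) * n_cols) for _ in range(ph * pw)]
    for i in range(H):
        for j in range(W):
            p, n = _index(i, j, ph, pw, n_cols)
            x[p][n] = feature_map[i][j]
    return x


def fold(x, H, W, ph, pw):
    """Inverse of unfold: rebuild the H x W map from x[p][n]."""
    n_cols = W // pw
    out = [[0.0] * W for _ in range(H)]
    for i in range(H):
        for j in range(W):
            p, n = _index(i, j, ph, pw, n_cols)
            out[i][j] = x[p][n]
    return out


def mix_across_patches(x):
    """ASSUMPTION: stand-in for the transformer, not self-attention.

    Each value is replaced by the mean over all patches at the same
    pixel position, so every output depends on every patch.
    """
    return [[sum(row) / len(row)] * len(row) for row in x]


# Usage: a 4 x 4 map split into four 2 x 2 patches.
fm = [[4 * i + j for j in range(4)] for i in range(4)]
x = unfold(fm, 2, 2)                    # x[0] is [0, 2, 8, 10]
restored = fold(x, 4, 4, 2, 2)          # identical to fm
globally_mixed = fold(mix_across_patches(x), 4, 4, 2, 2)
```

In this sketch each row of `x` collects the same pixel position from every patch, so whatever operates on a row sees the whole image at patch granularity. In the real block a transformer plays that role, and the convolutions before it supply the local context within each patch.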

Practical and Theoretical Implications

The practical implications of MobileViT are substantial. By bringing together the best attributes of CNNs and ViTs, MobileViT makes it feasible to deploy high-performing visual recognition models on mobile devices, where computational and memory resources are limited. The authors’ contribution lies in demonstrating that transformers can be scaled down effectively to work within the constrained parameter budgets typical of mobile devices, without significant performance trade-offs.

Theoretically, the blending of local and global processing within the MobileViT block offers a new perspective on neural network design. CNNs are known for their ability to capture local patterns via convolutions, but they struggle with global dependencies; ViTs capture global information well but require large datasets and extensive training. MobileViT leverages the strengths of both architectures, potentially inspiring future research to explore other ways of blending different network paradigms.
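The locality of convolutions mentioned above can be made concrete with a small calculation. The sketch below is not from the paper; it uses the standard receptive-field formula for a stack of stride-1, undilated k x k convolutions, and the helper names `receptive_field` and `layers_to_span` are hypothetical. Real mobile CNNs also use strides and pooling, which enlarge the receptive field faster than this simplified case suggests.

```python
def receptive_field(num_layers, kernel_size=3):
    """Width in pixels seen by one output unit after stacking
    `num_layers` stride-1, undilated convolutions."""
    return 1 + num_layers * (kernel_size - 1)


def layers_to_span(extent, kernel_size=3):
    """Smallest number of stacked layers whose receptive field
    is at least `extent` pixels wide."""
    layers = 0
    while receptive_field(layers, kernel_size) < extent:
        layers += 1
    return layers


print(receptive_field(1))   # 3
print(receptive_field(3))   # 7
print(layers_to_span(32))   # 16
```

Under these assumptions, sixteen 3 x 3 layers are needed before one output unit spans a 32-pixel-wide map, whereas a single self-attention layer relates every token to every other token. This is the trade-off between local and global processing that the paragraph above describes.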

Future Directions

Future work could extend MobileViT in several directions:

- Hardware Acceleration: The implementation and optimization of dedicated hardware kernels for the MobileViT block could further enhance inference speed on mobile devices, bridging the gap with CNN-based models.

- Broader Applications: Exploring the applicability of MobileViT to other computer vision tasks like instance segmentation, depth estimation, or video processing could prove useful.

- Scalability and Adaptation: Future work could investigate how MobileViT can be adapted to different model scales, from tiny models suitable for the smallest edge devices to larger models that sit at the boundary between mobile and desktop capabilities.

Contrasts and Unanswered Questions

Contrary to the general perception that ViTs are bulky and less efficient, MobileViT shows that with careful architectural design, transformers can be competitive in low-resource settings. However, some questions remain open, such as how well the MobileViT architecture generalizes across different domains without extensive augmentations, and whether further gains can be achieved by integrating different transformation techniques.

In conclusion, the MobileViT paper makes the case that hybrid architectures are both useful and feasible for mobile vision applications. By combining convolutions for local features with transformers for global context, MobileViT offers a versatile and efficient architecture for resource-constrained environments.