- The paper introduces a novel low-rank adaptation (LoRA) method to update large language models efficiently by injecting trainable low-rank matrices.

- It significantly reduces the number of trainable parameters, making the process scalable and suitable for resource-constrained environments.

- Empirical evaluations across models like GPT-3 and RoBERTa show that LoRA achieves competitive or superior performance compared to full fine-tuning.

LoRA: Low-Rank Adaptation of LLMs

The paper "LoRA: Low-Rank Adaptation of LLMs" introduces an approach to adapting large pre-trained language models to specific downstream tasks. The technique, called Low-Rank Adaptation (LoRA), offers an efficient and scalable way to improve these models on a target task without retraining their full parameter sets.

Motivation and Concept

As pre-trained models grow (GPT-3 has 175 billion parameters), full fine-tuning, which updates every parameter, becomes resource-intensive and often impractical. LoRA addresses this by freezing the pre-trained weights and injecting trainable rank decomposition matrices into each layer of the Transformer. Only these small matrices are trained, which sharply reduces the number of trainable parameters and makes training and deployment cheaper.
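To make the parameter savings concrete, here is a small arithmetic sketch for a single weight matrix. It assumes a dense update for a d x k matrix is replaced by two factors of shapes d x r and r x k; the helper name `lora_param_counts` and the example shapes (a square 12288 x 12288 projection with rank 4) are illustrative choices, not figures taken from this summary.

```python
def lora_param_counts(d: int, k: int, r: int) -> tuple[int, int]:
    """Return (full, lora) trainable-parameter counts for one d x k weight matrix."""
    full = d * k        # a dense update trains every entry of the matrix
    lora = r * (d + k)  # B is d x r and A is r x k
    return full, lora


# Illustrative shapes only: one square projection matrix, rank 4.
full, lora = lora_param_counts(d=12288, k=12288, r=4)
print(full, lora, full // lora)  # 150994944 98304 1536
```

For these shapes the low-rank factors hold roughly 1/1536 of the parameters of the dense update, and the ratio grows as r shrinks relative to d and k.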

Implementation Details

LoRA modifies dense layers within a neural network by constraining their updates to two learnable matrices $A$ and $B$, which represent the weight change as a low-rank decomposition $\Delta W = BA$. Here $B \in \mathbb{R}^{d \times r}$ and $A \in \mathbb{R}^{r \times k}$, where $r \ll \min(d, k)$, allowing for efficient adaptation:

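```python
def lora_forward(x, W_0, A, B, alpha=16):
    # A has shape (r, k), so A.shape[0] is the rank r; the update is scaled by alpha / r.
    Delta_W = (B @ A) * (alpha / A.shape[0])
    # Frozen pre-trained path plus the low-rank update path.
    return W_0 @ x + Delta_W @ x
```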

LoRA therefore leaves the architecture almost unchanged and adds no inference latency: after training, the product $BA$ can be merged into $W_0$, so the deployed layer is again a single dense matrix.
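The merge step can be checked numerically. The sketch below uses NumPy with small, arbitrary shapes and random stand-ins for the trained factors (none of these values come from the paper); it assumes the same `alpha / r` scaling as the forward pass above and compares the output of the unmerged adapter with that of a single merged weight.

```python
import numpy as np

rng = np.random.default_rng(0)
d, k, r, alpha = 6, 5, 2, 16   # small illustrative shapes, not from the paper

W_0 = rng.normal(size=(d, k))  # frozen pre-trained weight
B = rng.normal(size=(d, r))    # random stand-ins for the trained low-rank factors
A = rng.normal(size=(r, k))
x = rng.normal(size=(k,))

scale = alpha / r

# Adapter kept separate: the low-rank path is evaluated alongside W_0.
y_unmerged = W_0 @ x + scale * (B @ (A @ x))

# Adapter folded into the weight once: a single matrix product at inference time.
W_merged = W_0 + scale * (B @ A)
y_merged = W_merged @ x

print(np.allclose(y_unmerged, y_merged))  # True
```

Because the merged matrix has the same shape as the original weight, switching tasks amounts to subtracting one scaled product and adding another.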

Practical Benefits and Trade-offs

The primary advantage of LoRA is resource efficiency: it lowers the GPU memory needed for training and the storage needed per task-specific model, which suits deployments with limited infrastructure. This is especially useful when a service switches frequently among many task-specific models, since only the small matrices A and B need to be swapped while the frozen base weights are shared. The main trade-off is that once A and B are merged into the base weights, inputs for different tasks cannot be batched in a single forward pass. Batching across tasks requires leaving the adapters unmerged and selecting them per sample, which gives up the merged layer's latency advantage.
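One way to batch inputs from different tasks is to keep the adapters unmerged and pick each sample's factors by task index. The following NumPy sketch illustrates that idea under stated assumptions: the names `A_bank`, `B_bank`, `task_ids`, and `batched_lora_forward` are hypothetical, the factors are random stand-ins, and this is not presented as the authors' implementation.

```python
import numpy as np

rng = np.random.default_rng(0)
d, k, r, alpha = 6, 5, 2, 16   # illustrative shapes
n_tasks, batch = 3, 4

W_0 = rng.normal(size=(d, k))              # shared frozen weight
A_bank = rng.normal(size=(n_tasks, r, k))  # one (A, B) pair per task (hypothetical storage)
B_bank = rng.normal(size=(n_tasks, d, r))

X = rng.normal(size=(batch, k))            # one input row per sample
task_ids = np.array([0, 2, 1, 2])          # task index of each sample


def batched_lora_forward(X, task_ids):
    """Apply the shared weight plus a per-sample low-rank update."""
    A = A_bank[task_ids]                     # (batch, r, k)
    B = B_bank[task_ids]                     # (batch, d, r)
    low = np.einsum("brk,bk->br", A, X)      # project each sample down to rank r
    delta = np.einsum("bdr,br->bd", B, low)  # project back up to dimension d
    return X @ W_0.T + (alpha / r) * delta


Y = batched_lora_forward(X, task_ids)
print(Y.shape)  # (4, 6)
```

The shared product with `W_0` is still computed once for the whole batch; only the two small per-sample projections differ between tasks.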

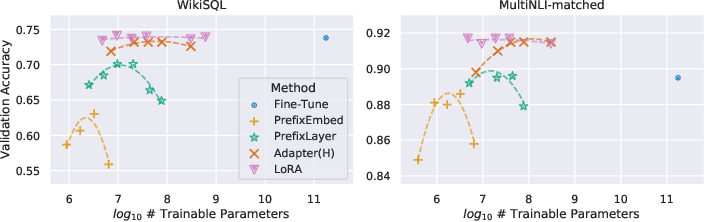

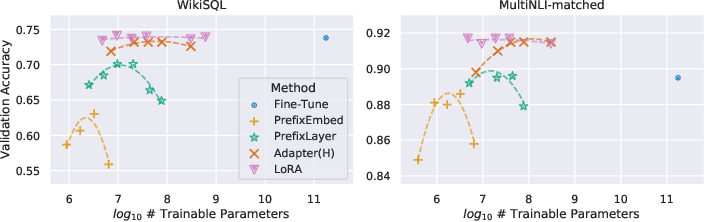

Figure 1: GPT-3 175B validation accuracy vs. number of trainable parameters of several adaptation methods on WikiSQL and MNLI-matched. LoRA exhibits better scalability and task performance.

Experiments across RoBERTa, DeBERTa, GPT-2, and GPT-3 on a range of tasks show LoRA matching or exceeding the performance of full fine-tuning. The analysis of rank further suggests that very small ranks are often sufficient: subspace similarity measurements indicate that the most important directions learned at a low rank are also present among those learned at a higher rank, so the low-rank update already captures the essential task-specific information.

Theoretical Insights and Limitations

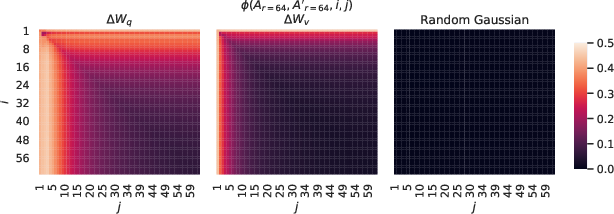

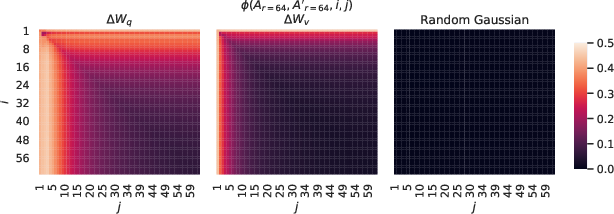

LoRA rests on the hypothesis that the weight updates needed to adapt a large language model have low intrinsic rank. Subspace analysis supports this hypothesis: the directions captured by the low-rank matrices cover the aspects that matter for task-specific adaptation. The technique nevertheless relies on heuristics to choose which weight matrices to adapt, which leaves room for further research.

Figure 2: Left and Middle: Normalized subspace similarity between the column vectors of $A_{r=64}$ from multiple random seeds, confirming consistent low-rank capture across variations.
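A normalized subspace similarity of this kind can be sketched as follows. The code assumes the measure is the squared Frobenius norm of the overlap between the top-i and top-j right-singular vectors of two adapter matrices, divided by min(i, j), so that it lies in [0, 1]. The function name `subspace_similarity` and the random test matrices are illustrative assumptions, not the paper's code or data.

```python
import numpy as np


def subspace_similarity(A1, A2, i, j):
    """Overlap between the top-i and top-j right-singular subspaces, in [0, 1]."""
    # Rows of Vh are right-singular vectors, sorted by decreasing singular value.
    _, _, Vh1 = np.linalg.svd(A1, full_matrices=False)
    _, _, Vh2 = np.linalg.svd(A2, full_matrices=False)
    U1 = Vh1[:i].T  # (k, i) orthonormal basis of the first subspace
    U2 = Vh2[:j].T  # (k, j) orthonormal basis of the second subspace
    return float(np.linalg.norm(U1.T @ U2, "fro") ** 2 / min(i, j))


rng = np.random.default_rng(0)
A_small = rng.normal(size=(8, 32))   # stand-in for an adapter trained with r = 8
A_large = rng.normal(size=(64, 32))  # stand-in for an adapter trained with r = 64

same = subspace_similarity(A_small, A_small, 4, 4)   # identical subspaces
cross = subspace_similarity(A_small, A_large, 4, 4)  # unrelated random matrices
print(same, cross)
```

A value near 1 means one subspace is essentially contained in the other, while a value near 0 means the two share almost no directions. Sweeping i and j over a grid gives a heatmap of the kind the figure caption describes.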

Conclusion and Future Directions

LoRA is a robust alternative to conventional fine-tuning, offering large gains in parameter efficiency and lower resource demands. Its results invite further work on combining it with other adaptation techniques, on principled rank selection, and on applying it beyond the settings studied so far.

The development of LoRA notably contributes to easing the deployment of effective NLP systems in resource-constrained environments, aligning with contemporary challenges in scalability and sustainability in machine learning.