- The paper presents DSAN, a deep learning model that aligns subdomain distributions (the same class in the source and target domains) using Local Maximum Mean Discrepancy (LMMD), preserving fine-grained category information.

- DSAN converges faster and reaches higher accuracy than adversarial domain adaptation methods on benchmarks such as ImageCLEF-DA and Office-31.

- Its simple implementation and small number of hyperparameters make training efficient and reduce computational cost.

Deep Subdomain Adaptation Network for Image Classification

The paper "Deep Subdomain Adaptation Network for Image Classification" presents a non-adversarial approach to domain adaptation that aligns the distributions of relevant subdomains (the same class in the source and target domains) rather than only the global domain distributions. By capturing fine-grained information for each category, the method improves the performance of neural networks on unlabeled target data.

Introduction to Domain Adaptation

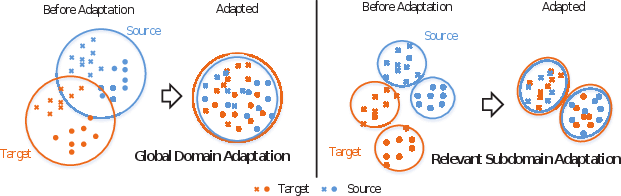

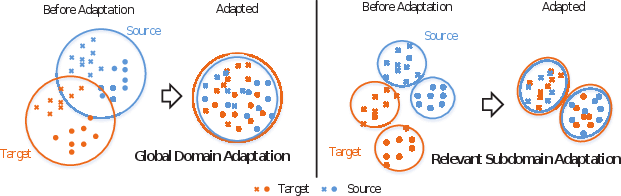

Domain adaptation aims to improve a model on a target domain that lacks labeled data by transferring knowledge from a related source domain with rich labeled data. Traditional global domain adaptation methods align the global distributions of the source and target domains. However, global alignment does not guarantee that samples of the same class are aligned across domains: target samples near class boundaries can be mixed with samples of other classes, and fine-grained category-specific information is lost (Figure 1).

Figure 1: (left) Global domain adaptation might lose some fine-grained information, (right) while relevant subdomain adaptation can exploit the local affinity to capture the fine-grained information for each category.

Deep Subdomain Adaptation Network (DSAN)

DSAN aligns the distributions of relevant subdomains, that is, the same class in the source and target domains, using Local Maximum Mean Discrepancy (LMMD). Unlike previous subdomain adaptation methods that rely on adversarial training, with multiple loss terms and slow convergence, DSAN is simple to implement and converges quickly, because LMMD is minimized directly by backpropagation. The alignment is added to a standard deep network as a loss term on the activations of selected layers (Figure 2), so fine-grained subdomain information is captured without extra adversarial components.
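For reference, the LMMD criterion can be written as below, as we understand it from the paper. C is the number of classes, φ maps activations into a reproducing kernel Hilbert space with kernel k, and w^{c} weights each sample's membership in class c: the one-hot ground-truth label y^s for source samples and the predicted probabilities ŷ^t for target samples. The last line is the empirical form on the layer-l activations z^{sl} and z^{tl}, expanded with the kernel trick.

```latex
\begin{aligned}
\hat d_{\mathcal H}(p,q) &= \frac{1}{C}\sum_{c=1}^{C}\Big\|\sum_{x_i^s\in\mathcal D_s} w_i^{sc}\,\phi(x_i^s)-\sum_{x_j^t\in\mathcal D_t} w_j^{tc}\,\phi(x_j^t)\Big\|_{\mathcal H}^2,\\
w_i^{c} &= \frac{y_{ic}}{\sum_{(x_j,y_j)\in\mathcal D} y_{jc}},\\
\hat d_l(p,q) &= \frac{1}{C}\sum_{c=1}^{C}\Big[\sum_{i=1}^{n_s}\sum_{j=1}^{n_s} w_i^{sc}w_j^{sc}\,k(z_i^{sl},z_j^{sl})
 + \sum_{i=1}^{n_t}\sum_{j=1}^{n_t} w_i^{tc}w_j^{tc}\,k(z_i^{tl},z_j^{tl})
 - 2\sum_{i=1}^{n_s}\sum_{j=1}^{n_t} w_i^{sc}w_j^{tc}\,k(z_i^{sl},z_j^{tl})\Big].
\end{aligned}
```

With C = 1 and uniform weights w_i = 1/n, this reduces to the ordinary (global) MMD, which is why LMMD can be read as a class-weighted, per-subdomain MMD.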

Figure 2: (left) The architecture of the Deep Subdomain Adaptation Network. DSAN reduces the discrepancy between the relevant subdomain distributions of the activations in the layers L by minimizing LMMD. (right) The LMMD module takes four inputs: the activations z^{sl} and z^{tl} for l ∈ L, the source ground-truth labels y^s, and the predicted target labels ŷ^t.
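To make the four inputs concrete, here is a minimal NumPy sketch of the LMMD computation for one layer. It assumes a single Gaussian kernel with a fixed bandwidth `sigma` (a simplification; the paper's exact kernel setup is not reproduced), and it skips classes that have no weight in either batch, which is our own assumption for handling empty classes. The helper names `gaussian_kernel`, `class_weights`, `lmmd`, and `global_mmd` are hypothetical. A real model would compute this in a differentiable framework so the loss can be backpropagated.

```python
import numpy as np


def gaussian_kernel(a, b, sigma=1.0):
    """Pairwise RBF kernel k(a_i, b_j) = exp(-||a_i - b_j||^2 / (2 sigma^2))."""
    sq = (np.sum(a ** 2, axis=1)[:, None]
          + np.sum(b ** 2, axis=1)[None, :]
          - 2.0 * a @ b.T)
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * sigma ** 2))


def class_weights(y):
    """w_i^c = y_ic / sum_j y_jc (column-normalised); empty classes get 0."""
    mass = y.sum(axis=0, keepdims=True)
    return np.divide(y, mass, out=np.zeros_like(y, dtype=float), where=mass > 0)


def lmmd(zs, zt, ys_onehot, yt_prob, sigma=1.0):
    """LMMD between source activations zs (n_s, d) and target activations zt (n_t, d).

    ys_onehot: (n_s, C) ground-truth one-hot labels of the source batch.
    yt_prob:   (n_t, C) predicted class probabilities of the target batch.
    """
    ws, wt = class_weights(ys_onehot), class_weights(yt_prob)
    kss = gaussian_kernel(zs, zs, sigma)
    ktt = gaussian_kernel(zt, zt, sigma)
    kst = gaussian_kernel(zs, zt, sigma)
    num_classes = ys_onehot.shape[1]
    total = 0.0
    for c in range(num_classes):
        a, b = ws[:, c], wt[:, c]
        if a.sum() == 0 or b.sum() == 0:
            continue  # assumption: skip classes absent from either batch
        # Squared RKHS distance between the weighted class means, via the kernel trick.
        total += a @ kss @ a + b @ ktt @ b - 2.0 * a @ kst @ b
    return total / num_classes


def global_mmd(zs, zt, sigma=1.0):
    """Ordinary (biased) MMD^2: the C = 1 case with uniform weights."""
    return lmmd(zs, zt, np.ones((len(zs), 1)), np.ones((len(zt), 1)), sigma)


if __name__ == "__main__":
    # Toy 1-D features: both domains have the same marginal distribution,
    # but class 0 sits at -1 in the source and at +1 in the target.
    zs = np.array([[-1.0], [-1.0], [1.0], [1.0]])
    zt = np.array([[1.0], [1.0], [-1.0], [-1.0]])
    labels = np.eye(2)[[0, 0, 1, 1]]  # one-hot; reused as target "predictions"
    print("global MMD:", global_mmd(zs, zt))
    print("LMMD:      ", lmmd(zs, zt, labels, labels))
```

In the toy example the global MMD is essentially zero because the marginals match, while LMMD is about 1.73 (2 − 2e^{−2} for σ = 1) because every class is misplaced. This is the failure of global alignment that Figure 1 illustrates.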

Experimental Evaluation

The paper evaluates DSAN on ImageCLEF-DA, Office-31, Office-Home, and digit classification tasks, and reports improvements over existing methods. DSAN consistently outperforms adversarial subdomain adaptation approaches in both accuracy and convergence speed, highlighting the practical advantages of a non-adversarial framework.

Theoretical Analysis and Visualization

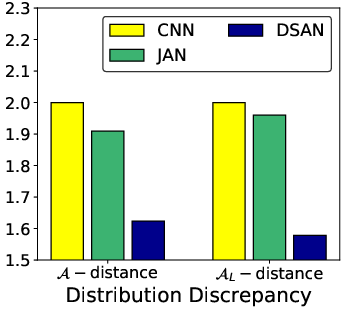

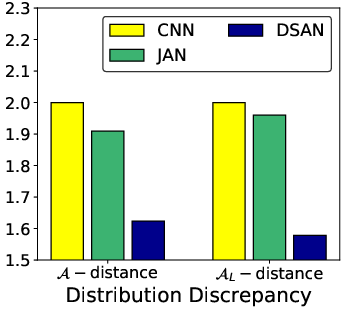

The effectiveness of DSAN is further supported by a theoretical analysis and by the AL-distance, which the paper proposes to measure the discrepancy between relevant subdomains alongside the standard A-distance (Figure 3c). t-SNE visualizations of the learned representations show that LMMD aligns source and target samples within each subdomain (Figure 3a, 3b).
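The A-distance in Figure 3c is commonly estimated with the "proxy A-distance": train a classifier to distinguish source features from target features and convert its held-out error ε into d_A = 2(1 − 2ε). The sketch below assumes a plain logistic-regression domain classifier trained by gradient descent (a stand-in; linear SVMs are also common), and `proxy_a_distance` is a hypothetical helper. It covers only the global A-distance; the paper's AL-distance is not reproduced here.

```python
import numpy as np


def proxy_a_distance(fs, ft, epochs=500, lr=0.1, seed=0):
    """Proxy A-distance d_A = 2 (1 - 2 * err) from a linear domain classifier.

    fs: (n_s, d) source features; ft: (n_t, d) target features.
    """
    rng = np.random.default_rng(seed)
    x = np.vstack([fs, ft])
    y = np.concatenate([np.zeros(len(fs)), np.ones(len(ft))])  # 0 = source, 1 = target
    idx = rng.permutation(len(x))
    x, y = x[idx], y[idx]
    split = len(x) // 2
    xtr, ytr, xte, yte = x[:split], y[:split], x[split:], y[split:]

    # Standardise with training statistics only.
    mu, sd = xtr.mean(axis=0), xtr.std(axis=0) + 1e-8
    xtr, xte = (xtr - mu) / sd, (xte - mu) / sd

    # Logistic regression fitted by full-batch gradient descent on the log loss.
    w, b = np.zeros(x.shape[1]), 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(xtr @ w + b)))
        grad = p - ytr
        w -= lr * xtr.T @ grad / len(ytr)
        b -= lr * grad.mean()

    # Held-out domain-classification error -> proxy A-distance.
    pred = (xte @ w + b) > 0
    err = np.mean(pred != yte.astype(bool))
    return 2.0 * (1.0 - 2.0 * err)


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    print("shifted domains:", proxy_a_distance(rng.normal(0, 1, (500, 2)), rng.normal(3, 1, (500, 2))))
    print("same domain:    ", proxy_a_distance(rng.normal(0, 1, (500, 2)), rng.normal(0, 1, (500, 2))))
```

A value near 2 means the domains are easy to tell apart; a value near 0 means the features are domain-invariant, which is the direction a lower A-distance in Figure 3c indicates.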

Figure 3: (a) and (b) are t-SNE visualizations of the representations learned by JAN and DSAN, respectively, on task A → W. Red points are source samples and blue points are target samples. (c) compares the A-distance and AL-distance on task A → W.

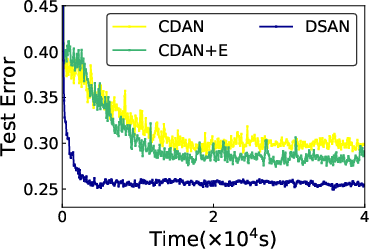

Convergence and Implementation

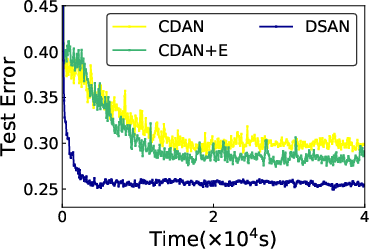

Because DSAN is simple to implement and has few hyperparameters, it converges faster and demands fewer computational resources than adversarial methods. The convergence analysis on task D → A (Office-31), measured against both iterations and training time, supports this (Figure 4).
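The small hyperparameter count shows up in the training objective: a source classification loss plus λ times the LMMD terms of the adapted layers, so the trade-off λ (together with the kernel settings) is the main knob. The sketch below assumes the progressive schedule λ = 2/(1 + exp(−γθ)) − 1 popularized by DANN, with training progress θ ∈ [0, 1] and γ = 10; whether DSAN uses exactly this schedule is an assumption, and `lambda_schedule` and `total_loss` are hypothetical helper names.

```python
import math


def lambda_schedule(progress, gamma=10.0):
    """Ramp the adaptation weight from 0 toward 1 as training progresses.

    progress: fraction of training completed, in [0, 1] (assumed schedule).
    """
    return 2.0 / (1.0 + math.exp(-gamma * progress)) - 1.0


def total_loss(cls_loss, lmmd_per_layer, progress, gamma=10.0):
    """Source classification loss plus weighted LMMD summed over adapted layers."""
    lam = lambda_schedule(progress, gamma)
    return cls_loss + lam * sum(lmmd_per_layer)


if __name__ == "__main__":
    for p in (0.0, 0.1, 0.5, 1.0):
        print(f"progress={p:.1f}  lambda={lambda_schedule(p):.4f}  "
              f"loss={total_loss(0.8, [0.3], p):.4f}")
```

One reason to ramp λ up from zero is that LMMD uses the target predictions ŷ^t as class weights, and those predictions are unreliable early in training.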

Figure 4: Convergence analysis on task D → A (Office-31): (a) convergence with respect to iterations and (b) convergence with respect to training time.

Conclusion

DSAN represents a meaningful advance in domain adaptation. The study shows that LMMD-based subdomain adaptation is viable and efficient, offering a scalable, non-adversarial approach to improving transfer learning. By aligning subdomains rather than whole domains, DSAN improves both accuracy and convergence speed across diverse datasets, and it paves the way for further work on non-adversarial domain adaptation.