- The paper presents a novel method, NeRFactor, that recovers an object's shape and spatially-varying reflectance, along with the unknown illumination, from multi-view images captured under a single unknown lighting condition.

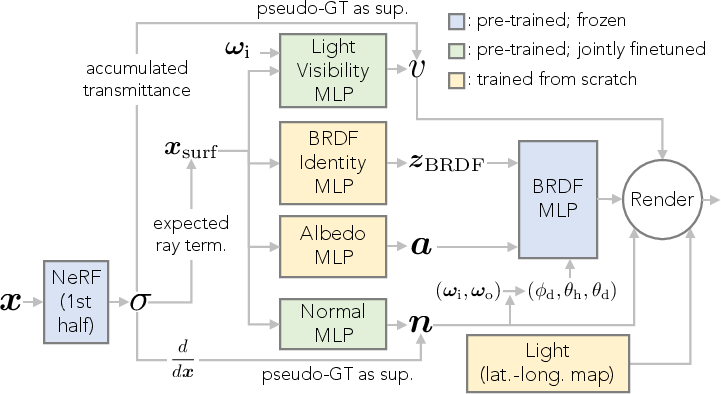

- Starting from a surface initialized with NeRF's geometry, it uses MLPs to predict surface normals, light visibility, albedo, and the parameters of a learned BRDF at each surface point.

- The approach enables high-quality free-viewpoint relighting and material editing, outperforming baselines in benchmark comparisons, while ablation studies confirm the contribution of each component.

Neural Factorization of Shape and Reflectance Under an Unknown Illumination

Introduction to NeRFactor

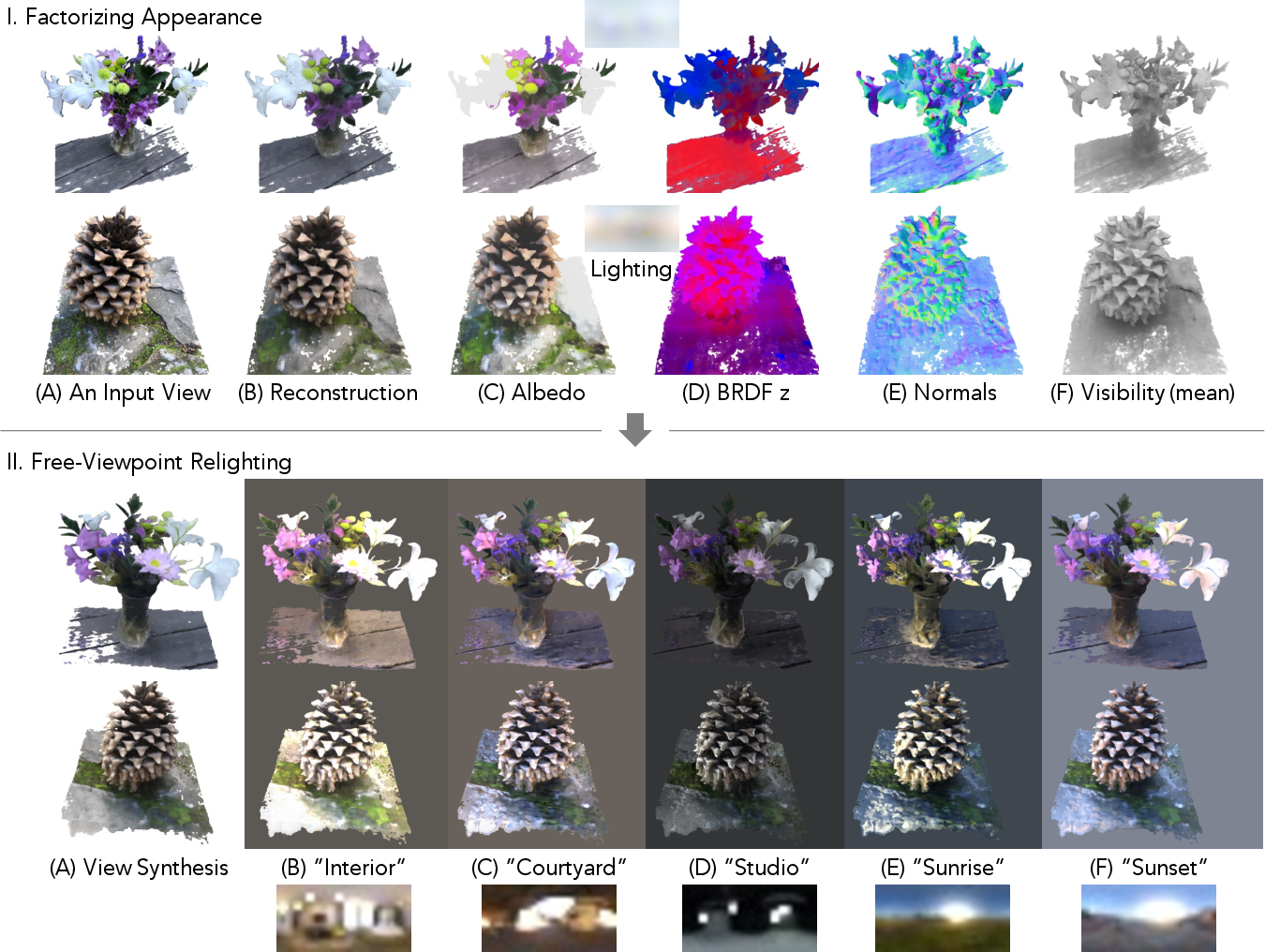

NeRFactor recovers the shape and spatially-varying reflectance of an object from multi-view images captured under a single unknown lighting condition. Starting from a Neural Radiance Field (NeRF), it distills the volumetric geometry into a surface representation, then jointly refines that geometry while solving for spatially-varying reflectance and environment lighting. The recovered factors make it possible to render the object from novel viewpoints under arbitrary lighting, enabling applications such as free-viewpoint relighting and material editing.

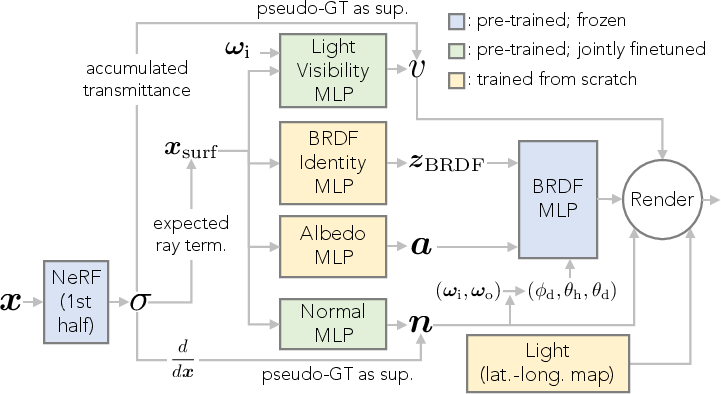

Figure 1: Model. NeRFactor leverages NeRF's $\sigma$-volume as an initialization to predict, for each surface location $\bm{x}_\text{surf}$, the surface normal, light visibility, albedo, and BRDF.

Method Overview

Geometry Initialization and Optimization

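NeRFactor uses the geometry estimated by NeRF as a coarse initialization and refines it during the joint optimization of shape and reflectance. NeRF represents geometry as a volumetric density field, which is expensive to use for relighting: shading a single point requires marching through the volume toward every light direction to determine its visibility. NeRFactor avoids this by extracting a surface from NeRF (the expected termination point of each camera ray) and using MLPs to predict, at each surface point, the surface normal, light visibility, and reflectance.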
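Both the surface location and the light visibility can be read off NeRF's density field with standard volume-rendering quadrature. The following is a minimal sketch, assuming densities `sigmas` have already been sampled at increasing distances `ts` along a ray. The function names are illustrative, not taken from any NeRFactor code base, and normalizing the expected depth by the total weight is an assumption of this sketch.

```python
import math


def transmittance_weights(sigmas, ts):
    """Volume-rendering weights w_i = T_i * alpha_i for samples along one ray.

    sigmas: densities sampled at distances ts (same length, ts increasing).
    Returns (weights, transmittance remaining after the last sample).
    """
    weights = []
    T = 1.0  # transmittance accumulated before the current sample
    for i, sigma in enumerate(sigmas):
        # Interval length; the last sample reuses the previous spacing.
        delta = ts[i + 1] - ts[i] if i + 1 < len(ts) else ts[i] - ts[i - 1]
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this interval
        weights.append(T * alpha)
        T *= 1.0 - alpha
    return weights, T


def expected_surface_point(origin, direction, sigmas, ts):
    """Expected ray-termination point, used here as the surface location x_surf."""
    weights, _ = transmittance_weights(sigmas, ts)
    total = sum(weights)
    # Assumption of this sketch: normalize by the total weight so the depth
    # stays inside the sampled range even if the ray is not fully absorbed.
    depth = sum(w * t for w, t in zip(weights, ts)) / max(total, 1e-10)
    return tuple(o + depth * d for o, d in zip(origin, direction))


def light_visibility(sigmas_toward_light, ts):
    """Fraction of light reaching a surface point: the transmittance left after
    marching from x_surf toward the light through the density field."""
    _, T = transmittance_weights(sigmas_toward_light, ts)
    return T
```

`light_visibility` has to be evaluated for every light direction at every surface point, which is exactly the cost that makes the raw volume expensive for relighting and a learned predictor of visibility attractive.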

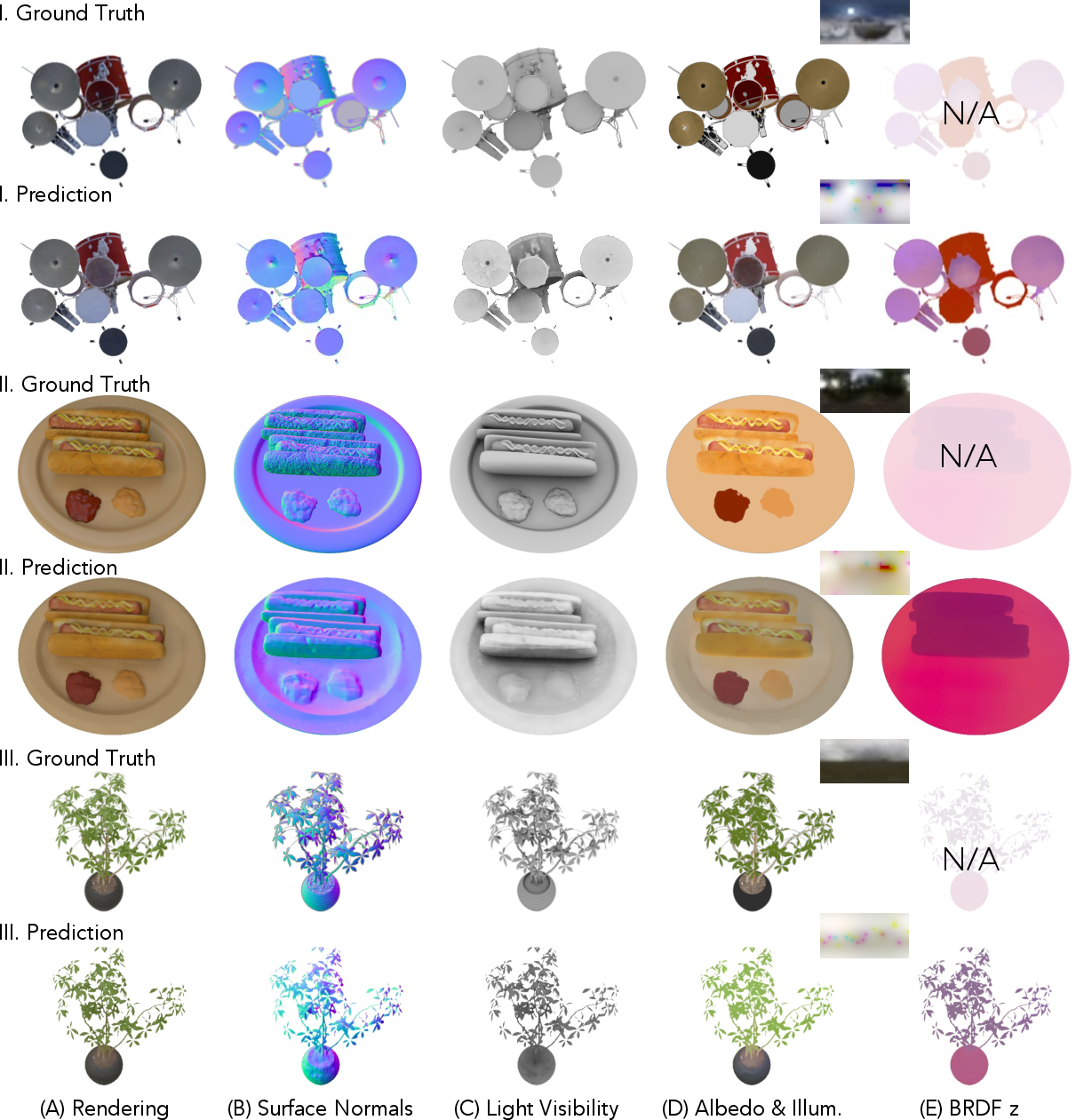

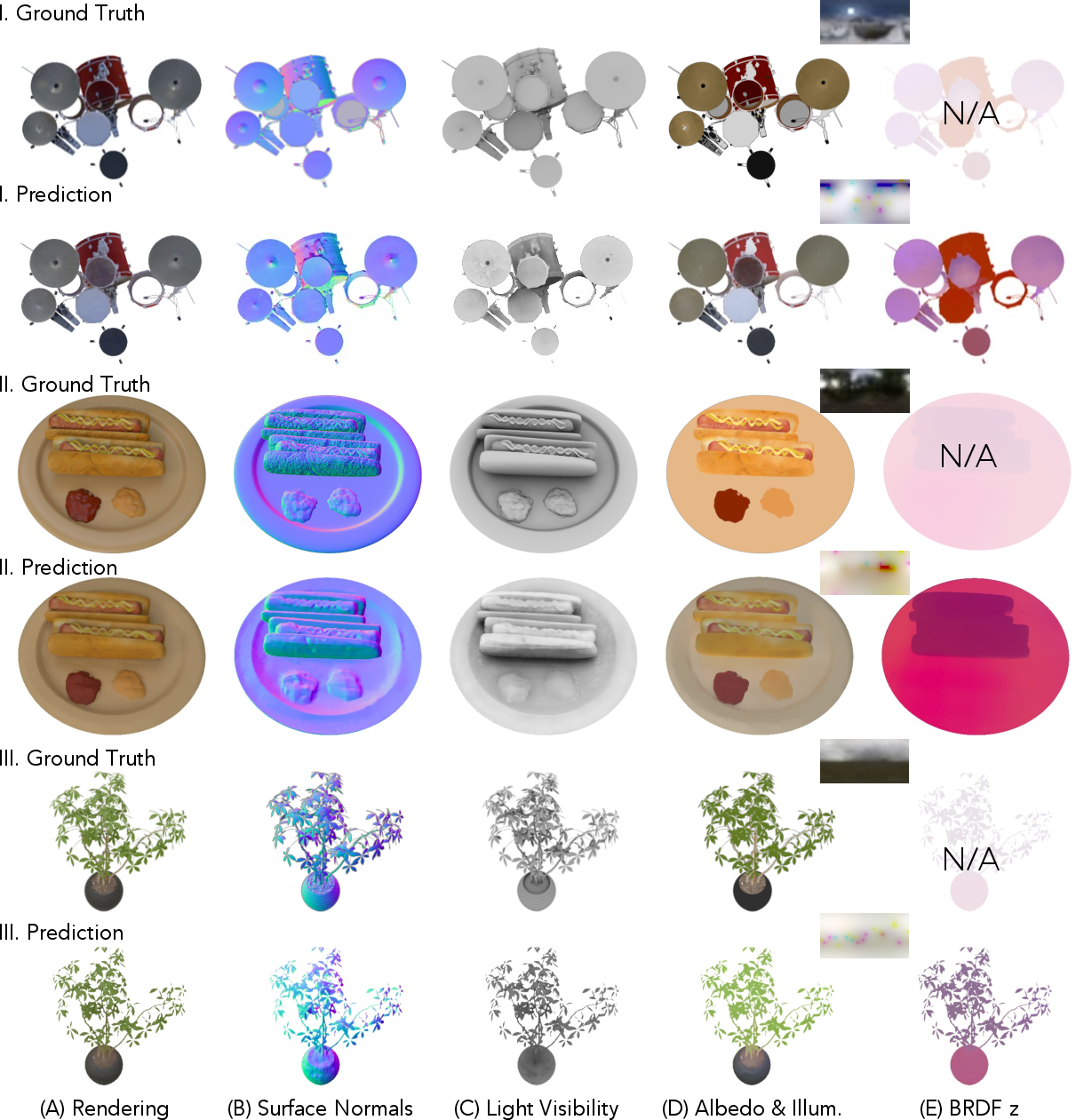

Figure 2: NeRFactor recovers high-quality geometry that improves on NeRF's initial estimate.

Albedo and BRDF Estimation

NeRFactor models reflectance as the sum of a diffuse component, determined by a spatially-varying albedo, and a specular component given by a learned spatially-varying Bidirectional Reflectance Distribution Function (BRDF). The BRDF model is trained beforehand on a collection of measured real-world BRDFs, so it acts as a data-driven prior that keeps the recovered reflectance plausible even though no ground-truth reflectance is available during optimization.
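The diffuse-plus-specular split can be sketched as follows. The learned BRDF itself is not reproduced here: as a hypothetical stand-in, a normalized Blinn-Phong lobe is used, whose two parameters `z = (ks, shininess)` play the role of the per-point latent code that a pretrained BRDF network would decode. All vectors are unit-length 3-tuples, and the function names are illustrative.

```python
import math


def normalize(v):
    """Scale a 3-tuple to unit length (the zero vector is returned unchanged)."""
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v) if n > 0 else v


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def specular_standin(z, n, wi, wo):
    """Hypothetical stand-in for the learned specular BRDF.

    Instead of decoding a latent code with a network, z = (ks, shininess)
    directly parameterizes a Blinn-Phong lobe with approximate normalization.
    """
    ks, shininess = z
    h = normalize(tuple(a + b for a, b in zip(wi, wo)))  # half vector
    return ks * (shininess + 8.0) / (8.0 * math.pi) * max(dot(n, h), 0.0) ** shininess


def brdf(albedo, z, n, wi, wo):
    """Per-channel BRDF: Lambertian term albedo / pi plus the specular term."""
    spec = specular_standin(z, n, wi, wo)
    return tuple(a / math.pi + spec for a in albedo)
```

Replacing `specular_standin` with a network conditioned on a latent code would leave the rest of the shading unchanged. Both terms are symmetric in `wi` and `wo`, as a physically based BRDF should be.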

Figure 3: Joint optimization of shape, reflectance, and lighting in challenging scenes.

Lighting Representation

NeRFactor represents lighting as a high-dynamic-range (HDR) light probe in latitude-longitude format. This pixel-based representation can express high-frequency lighting, which is needed to synthesize realistic hard shadows under diverse lighting conditions.
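With a latitude-longitude probe, the lighting integral becomes a finite sum over probe pixels, each weighted by its solid angle. Below is a minimal sketch, assuming a probe whose row 0 is the +z pole and scalar (single-channel) radiance. `latlong_directions`, `shade`, and the callable interface for probe, BRDF, and visibility are hypothetical names chosen for illustration.

```python
import math


def latlong_directions(height, width):
    """Unit direction and solid angle of every pixel in a latitude-longitude probe.

    Assumed convention: row 0 touches the +z pole, the polar angle theta grows
    with the row index, and the azimuth phi grows with the column index.
    """
    pixels = []
    for r in range(height):
        theta0, theta1 = math.pi * r / height, math.pi * (r + 1) / height
        theta = 0.5 * (theta0 + theta1)  # pixel-center polar angle
        # Exact solid angle of one pixel in this row.
        d_omega = (2.0 * math.pi / width) * (math.cos(theta0) - math.cos(theta1))
        for c in range(width):
            phi = 2.0 * math.pi * (c + 0.5) / width
            direction = (math.sin(theta) * math.cos(phi),
                         math.sin(theta) * math.sin(phi),
                         math.cos(theta))
            pixels.append((direction, d_omega))
    return pixels


def shade(normal, probe, brdf, visibility, height, width):
    """Outgoing radiance as a sum over probe pixels:
    sum_i brdf(w_i) * L(w_i) * v(w_i) * max(n . w_i, 0) * d_omega_i.

    probe, brdf, and visibility are callables taking a unit direction.
    """
    total = 0.0
    for w, d_omega in latlong_directions(height, width):
        cos_term = sum(a * b for a, b in zip(normal, w))
        if cos_term <= 0.0:
            continue  # light arriving from below the surface contributes nothing
        total += brdf(w) * probe(w) * visibility(w) * cos_term * d_omega
    return total
```

Relighting in this model amounts to calling `shade` again with a different `probe` while the normal, visibility, and BRDF stay fixed. As a sanity check, a constant white probe, full visibility, and a Lambertian BRDF of albedo 0.5 on a 16 x 32 probe give a value close to the analytic answer of 0.5.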

Application and Results

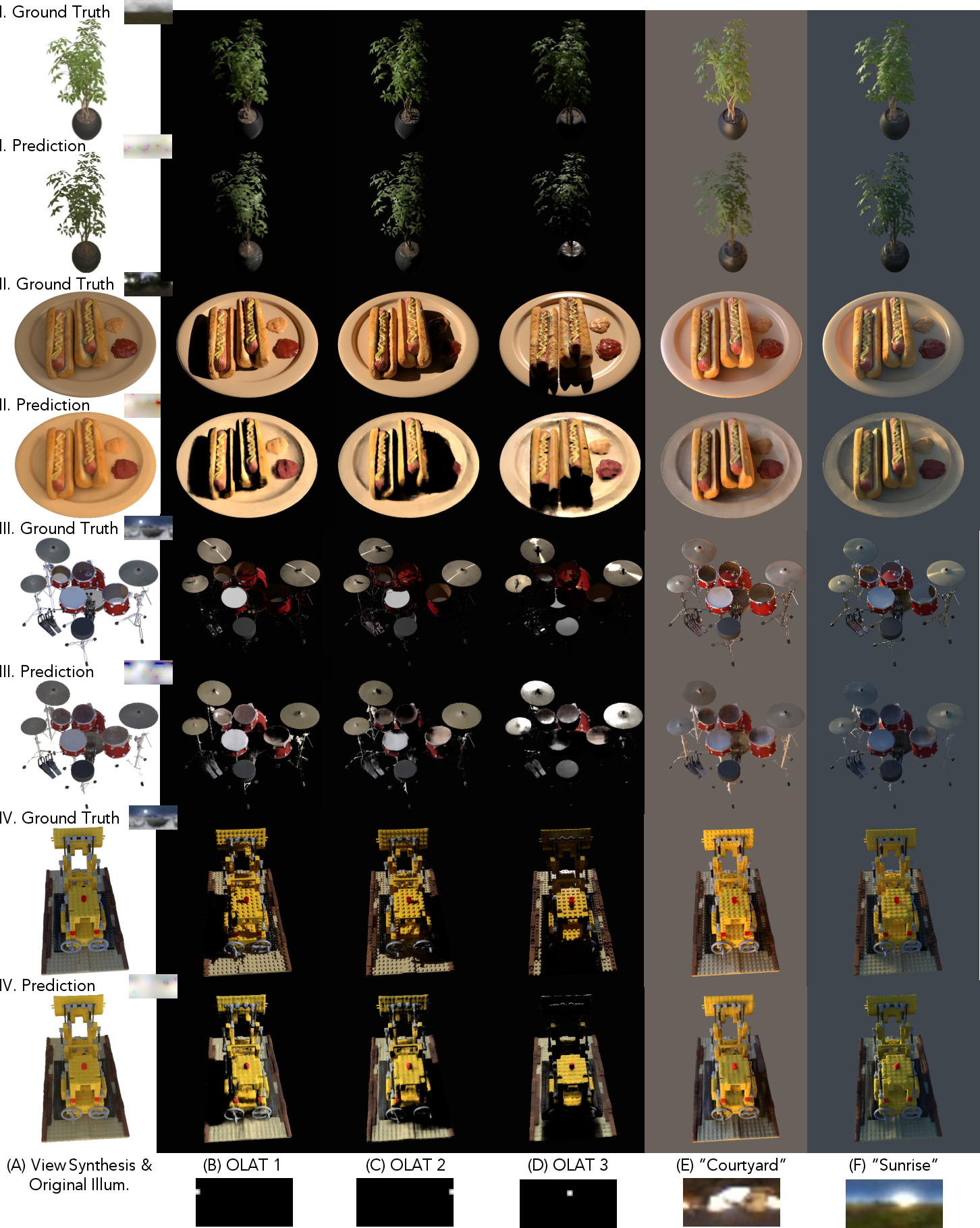

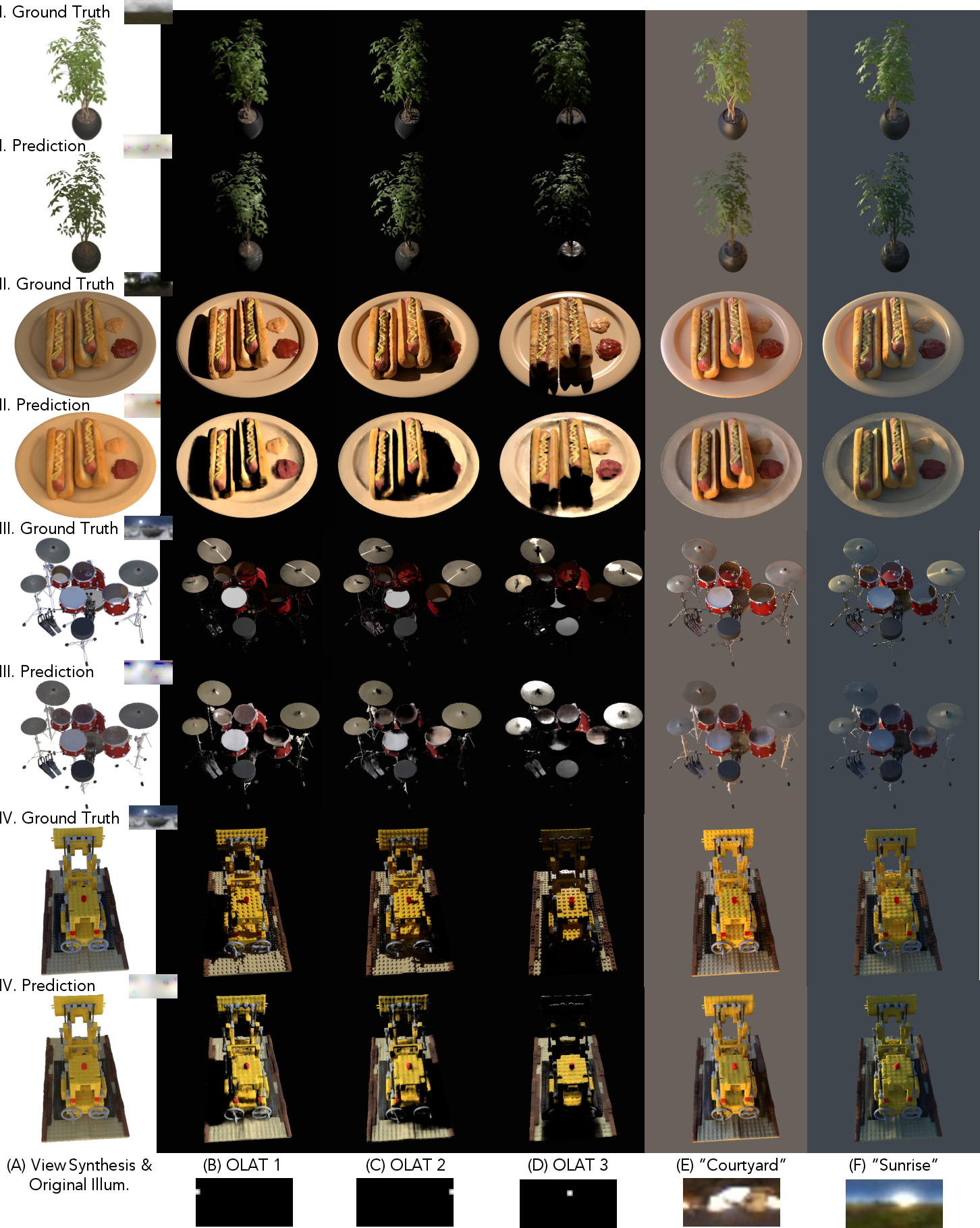

Free-Viewpoint Relighting

NeRFactor can relight objects under a wide range of lighting conditions, including point lights and complex light probes. It synthesizes specular highlights and cast shadows that closely resemble the ground-truth renderings.

Figure 4: Free-viewpoint relighting demonstrates realistic shadows and specular effects.

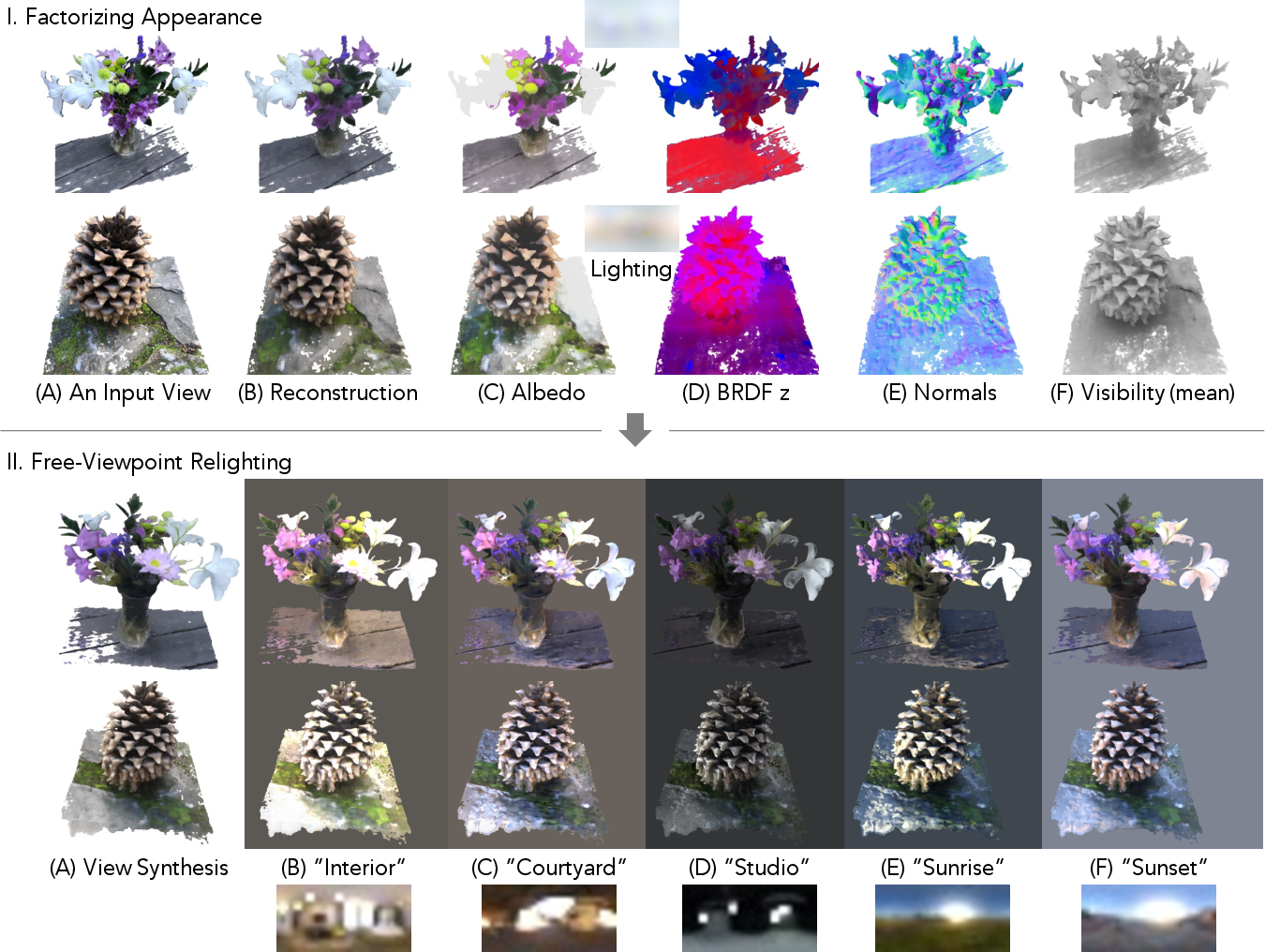

Real-World Scene Factorization

NeRFactor also works on real-world captures, factorizing their appearance into the components needed to relight the object under arbitrary lighting.

Figure 5: Results of real-world captures exhibit consistent relighting capabilities in practical scenarios.

Evaluation and Comparisons

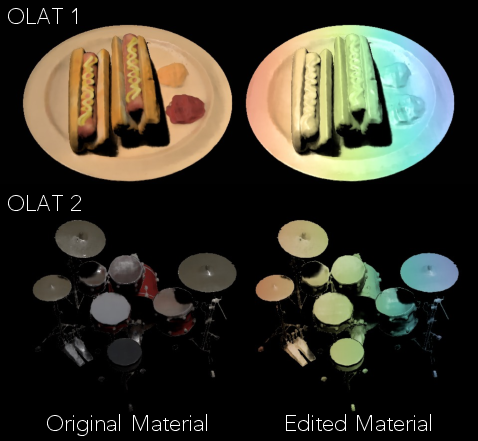

NeRFactor outperforms baseline methods on appearance factorization and relighting. Ablation studies show the contribution of each model component and highlight the advantage of the learned BRDF over analytical BRDF models.

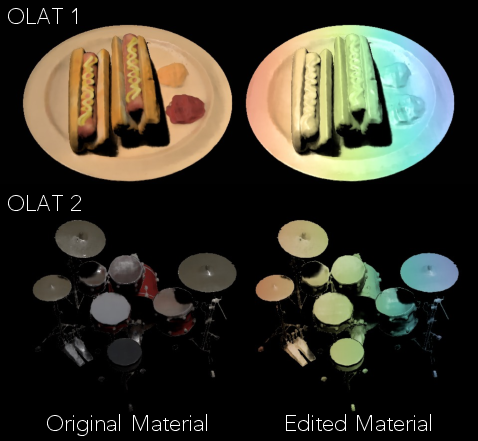

Figure 6: Material editing and relighting demonstrate versatility in changing object appearance.

Conclusion

NeRFactor advances inverse rendering by recovering plausible 3D models, including geometry and reflectance, from images captured under a single unknown illumination. Smoothness constraints and the learned BRDF prior considerably stabilize the optimization, yielding high-quality geometry and reflectance for relighting and editing. Future work could use higher-resolution lighting for higher-fidelity results and extend the model to indirect illumination.
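The exact form of the smoothness constraints is not spelled out here. One common form, assumed in this sketch, penalizes the L1 change in an MLP's output when its input point is jittered by small Gaussian noise; `smoothness_penalty` and its `eps` parameter are hypothetical, and any callable from a 3D point to a tuple of outputs can stand in for the MLP.

```python
import random


def smoothness_penalty(f, points, eps=0.01, rng=None):
    """Mean L1 difference between f at each point and at a randomly jittered copy.

    f maps a 3D point to a tuple of outputs (for example, a predicted normal or
    albedo). Penalizing this difference encourages f to vary smoothly over the surface.
    """
    if rng is None:
        rng = random.Random(0)  # fixed seed so the penalty is reproducible
    total = 0.0
    for p in points:
        q = tuple(c + rng.gauss(0.0, eps) for c in p)  # jittered copy of p
        total += sum(abs(a - b) for a, b in zip(f(p), f(q)))
    return total / len(points)
```

A constant function incurs no penalty, and a function that changes faster across space incurs a larger one, which is the behavior such a regularizer is meant to reward and discourage.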

This work helps bridge the gap between casual capture and the creation of detailed 3D assets for computer graphics applications, paving the way for robust and efficient real-world use.