- The paper presents a novel transition-based approach that integrates an action-pointer mechanism with a Transformer for effective AMR parsing.

- It employs incremental graph embedding and specialized attention layers to capture evolving graph states and long-range dependencies.

- Results on the AMR 1.0, 2.0, and 3.0 datasets show improved Smatch scores over previous transition-based parsers, with silver data and ensembling yielding further gains.

The paper "AMR Parsing with Action-Pointer Transformer" proposes a novel transition-based approach to Abstract Meaning Representation (AMR) parsing that integrates an action-pointer mechanism into a Transformer. AMR parsing is the task of converting a sentence into a semantic graph. It is challenging because there is no explicit alignment between sentence tokens and graph nodes.

Methodology Overview

Transition-Based AMR Parsing

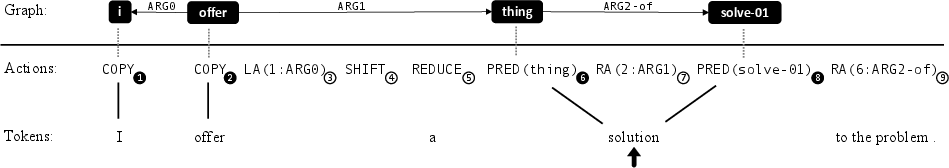

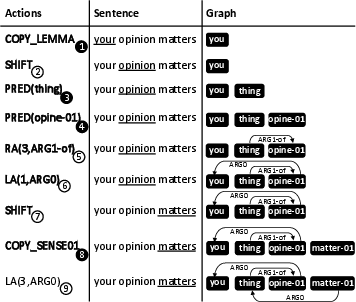

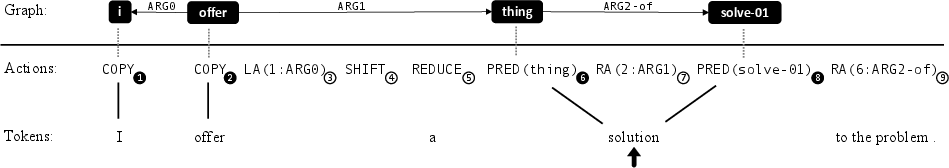

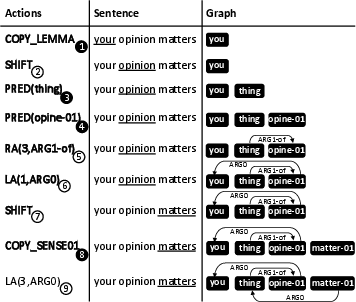

The proposed approach builds an AMR graph through a sequence of transition actions. Unlike methods that predict node labels and edges directly, it uses the action sequence both as the parser's control mechanism and as a representation of the graph. A cursor moves over the sentence tokens from left to right, and the actions issued at each cursor position predict the graph's nodes and edges.
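A toy transition system makes the cursor-plus-actions idea concrete. This is a minimal sketch, not the paper's action set: the action names `SHIFT`, `NODE`, `LA` and `RA` and the arc-direction convention are hypothetical simplifications. Each node is identified by the index of the action that created it, so an edge action only needs to name an earlier action index.

```python
from dataclasses import dataclass, field


@dataclass
class ParserState:
    """Toy transition state: a left-to-right token cursor plus a graph whose
    nodes are identified by the index of the action that created them."""
    tokens: list
    cursor: int = 0
    actions: list = field(default_factory=list)
    nodes: dict = field(default_factory=dict)   # action index -> node label
    edges: list = field(default_factory=list)   # (head idx, relation, dependent idx)

    def apply(self, action):
        idx = len(self.actions)
        self.actions.append(action)
        kind = action[0]
        if kind == "SHIFT":
            # Advance the cursor to the next source token.
            self.cursor += 1
        elif kind == "NODE":
            # Create a node; its identity is this action's index.
            self.nodes[idx] = action[1]
        elif kind in ("LA", "RA"):
            _, target, rel = action
            if target not in self.nodes:
                raise ValueError("edges may only point to node-creating actions")
            newest = max(self.nodes)  # most recently created node
            if kind == "LA":
                # Hypothetical convention: the newest node is the head.
                self.edges.append((newest, rel, target))
            else:
                # RA: the pointed-to earlier node is the head.
                self.edges.append((target, rel, newest))
        else:
            raise ValueError(f"unknown action {kind!r}")


tokens = ["I", "offer", "a", "solution", "to", "the", "problem"]
actions = [
    ("NODE", "i"),            # action 0: node for "I"
    ("SHIFT",),               # action 1
    ("NODE", "offer-01"),     # action 2
    ("LA", 0, "ARG0"),        # action 3: offer-01 :ARG0 i
    ("SHIFT",),               # action 4: "a" creates no node
    ("SHIFT",),               # action 5
    ("NODE", "solution"),     # action 6
    ("RA", 2, "ARG1"),        # action 7: offer-01 :ARG1 solution
]
state = ParserState(tokens)
for a in actions:
    state.apply(a)
print(state.tokens[state.cursor], state.edges)
```

The partial parse loosely mirrors the Figure 1 sentence: the cursor ends on "solution", and both edges refer to nodes purely by action index.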

Action-Pointer Mechanism

The key innovation is the action-pointer mechanism: to create an edge, the model points explicitly to the earlier action that created the target node, which lets it connect nodes that are far apart. The mechanism is built on a standard Transformer, modified so that some attention heads are masked to encode the parser state and the partially built graph.
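One way a masked head can carry parser state is to restrict a cross-attention head so that each decoder step sees only the source token under the cursor. The sketch below assumes exactly that single restriction; the paper's actual assignment of masks to heads is not reproduced here, and in a full model such a mask would apply to only some heads while the others stay unmasked.

```python
import numpy as np


def cursor_mask(cursor_positions, src_len):
    """Boolean mask of shape (tgt_len, src_len): decoder step t may attend
    only to the source token under the cursor at that step."""
    cursor_positions = np.asarray(cursor_positions)
    mask = np.zeros((len(cursor_positions), src_len), dtype=bool)
    mask[np.arange(len(cursor_positions)), cursor_positions] = True
    return mask


def masked_attention(q, k, v, mask):
    """Single-head scaled dot-product attention; masked-out scores become -inf.
    Every row of `mask` must allow at least one position."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores = np.where(mask, scores, -np.inf)
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights


rng = np.random.default_rng(0)
cursor = [0, 0, 1, 1, 1, 2, 3, 3]        # cursor position at each of 8 decoder steps
q = rng.normal(size=(len(cursor), 4))    # decoder queries
k = rng.normal(size=(7, 4))              # encoder keys for 7 source tokens
v = rng.normal(size=(7, 4))              # encoder values
out, weights = masked_attention(q, k, v, cursor_mask(cursor, 7))
```

With one allowed position per row, the head's output at each step is simply the encoding of the cursor token, which is how the head exposes "where the parser is" to the decoder.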

Figure 1: Source tokens, target actions and AMR graph for the sentence "I offer a solution to the problem" (partially parsed). The black arrow marks the current token cursor position. The circles contain action indices; black circles indicate node-creating actions, which are the only actions available for edge attachment.

The model adapts the encoder-decoder Transformer and adds a pointer network that generates the edge-attaching actions. Because edges are expressed as pointers to previous actions, graph generation is flexible and can accommodate diverse graph structures, while attention encodes both the sentence and the evolving graph state.
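The pointer step can be sketched as attention from the current decoder state over the states of previous actions, masked so that only node-creating actions (the black circles in Figure 1) are valid targets. This is an illustrative sketch with hypothetical names and random states; it assumes the relation label is predicted separately and scores pointers with a plain scaled dot product.

```python
import numpy as np


def action_pointer(query, prev_states, is_node_action):
    """Probability of pointing to each previous action.

    query:          (d,) decoder state at the current step
    prev_states:    (t, d) decoder states of the t previous actions
    is_node_action: (t,) bool, True where the action created a node
    """
    scores = prev_states @ query / np.sqrt(query.shape[0])
    scores = np.where(is_node_action, scores, -np.inf)  # only nodes are valid targets
    scores = scores - scores.max()
    probs = np.exp(scores)
    return probs / probs.sum()


rng = np.random.default_rng(1)
# Previous actions 0..6 from a partial parse; actions 0, 2 and 6 created nodes.
is_node = np.array([True, False, True, False, False, False, True])
prev_states = rng.normal(size=(7, 8))
query = rng.normal(size=8)
probs = action_pointer(query, prev_states, is_node)
target = int(np.argmax(probs))  # action index the new edge would attach to
```

Masking before the softmax guarantees zero probability on non-node actions, so the pointer can never propose an edge to an action that did not create a node.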

Incremental Graph Embedding

To bring graph information into the Transformer, the approach computes a step-wise incremental graph embedding. Attention layers are restricted so that each graph node exchanges information with its neighbors, and stacking such layers encodes progressively larger neighborhoods. Because the embedding is updated as actions are taken, the model has an up-to-date view of the graph constructed so far at every step.
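The effect of neighbor-restricted attention can be seen with a simple mask over action positions. This is a minimal sketch under stated assumptions: edges are treated as undirected, relation labels are ignored, the attention layer has no learned projections, and the mask is just rebuilt from whatever edges exist at the current step rather than following the paper's exact incremental update.

```python
import numpy as np


def neighbor_mask(num_positions, edges):
    """mask[i, j] is True when position i may attend to position j.

    Every position attends to itself (so no softmax row is empty); node
    positions also attend to their neighbors in the partial graph.
    Edge direction and relation labels are ignored in this sketch."""
    mask = np.eye(num_positions, dtype=bool)
    for head, _rel, dep in edges:
        mask[head, dep] = True
        mask[dep, head] = True
    return mask


def masked_self_attention(x, mask):
    """One attention layer (no learned projections) restricted by `mask`."""
    scores = x @ x.T / np.sqrt(x.shape[-1])
    scores = np.where(mask, scores, -np.inf)
    scores = scores - scores.max(axis=-1, keepdims=True)
    w = np.exp(scores)
    w = w / w.sum(axis=-1, keepdims=True)
    return w @ x


def receptive_field(mask, num_layers):
    """reach[i, j] is True if position j can influence output i after stacking layers."""
    reach = np.eye(len(mask), dtype=bool)
    for _ in range(num_layers):
        reach = (reach.astype(int) @ mask.astype(int)) > 0
    return reach


# Partial graph: nodes at action positions 0 (i), 2 (offer-01), 6 (solution).
edges = [(2, "ARG0", 0), (2, "ARG1", 6)]
mask = neighbor_mask(8, edges)
one_hop = np.flatnonzero(receptive_field(mask, 1)[0])   # node 0 after one layer
two_hop = np.flatnonzero(receptive_field(mask, 2)[0])   # node 0 after two layers
```

After one layer, node 0 sees only itself and its neighbor at position 2; a second layer extends its view to position 6, which is the "expanding neighborhood" effect of stacking.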

Results and Evaluation

On AMR 1.0, 2.0, and 3.0, the proposed model improves Smatch scores significantly over previous transition-based approaches. Adding silver data and ensembling improves accuracy further, reaching scores competitive with leading graph-based parsers without requiring graph re-categorization.
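Smatch scores a predicted graph as the F1 over AMR triples under the variable mapping that maximizes the number of matching triples. The official tool searches for that mapping with hill-climbing; the sketch below is not that tool. It uses exhaustive search (feasible only for tiny graphs), omits the TOP triple, and assumes variable names never coincide with concept strings.

```python
from itertools import permutations


def variables(triples):
    """Variables are the sources of instance triples, e.g. ("instance", "a", "offer-01")."""
    return [s for r, s, _ in triples if r == "instance"]


def smatch_f1(test, gold):
    """Exhaustive-search Smatch for tiny graphs: best F1 over variable mappings."""
    test_vars, gold_vars = variables(test), variables(gold)
    gold_set = set(gold)
    # Test variables mapped to None are unmapped and can never match.
    candidates = gold_vars + [None] * len(test_vars)
    best = 0
    for image in permutations(candidates, len(test_vars)):
        mapping = dict(zip(test_vars, image))
        # Constants (concepts) are not in the mapping and stay unchanged.
        mapped = {(r, mapping.get(s, s), mapping.get(t, t)) for r, s, t in test}
        best = max(best, len(mapped & gold_set))
    return 2 * best / (len(test) + len(gold))


gold = [("instance", "a", "offer-01"), ("instance", "b", "i"),
        ("instance", "c", "solution"), ("ARG0", "a", "b"), ("ARG1", "a", "c")]
test = [("instance", "x", "offer-01"), ("instance", "y", "i"),
        ("instance", "z", "solution"), ("ARG0", "x", "y"), ("ARG2", "x", "z")]
print(smatch_f1(test, gold))  # 4 of 5 triples match under x->a, y->b, z->c
```

Precision is matches / len(test) and recall is matches / len(gold), so their harmonic mean reduces to 2 * matches / (len(test) + len(gold)), which here is 0.8.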

Figure 2: Step-by-step actions on the sentence "your opinion matters". A single word creates the subgraph thing :ARG1-of opine-01, and all of its nodes remain available for attachment. The cursor is at the underlined words (after each action).
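Because nodes are created while the cursor sits on a token, each node inherits a token alignment, and several nodes can share one word. The sketch assumes a simplified, hypothetical action set in which each node of the subgraph is created by its own `NODE` action while the cursor stays put; the paper's actual subgraph actions may differ. Each node keeps its own action index, so each remains individually attachable.

```python
from collections import defaultdict


def cursor_alignments(tokens, actions):
    """Align each node-creating action to the token under the cursor when it fires.

    Because the cursor only moves left to right, the alignment is monotonic;
    several nodes may share one token (a single-word subgraph)."""
    cursor = 0
    aligned = defaultdict(list)  # token index -> [(action index, node label)]
    for idx, action in enumerate(actions):
        if action[0] == "SHIFT":
            cursor += 1
        elif action[0] == "NODE":
            aligned[cursor].append((idx, action[1]))
        # Any other action (e.g. an edge) neither moves the cursor nor creates a node.
    return dict(aligned)


tokens = ["your", "opinion", "matters"]
actions = [("NODE", "you"), ("SHIFT",), ("NODE", "thing"),
           ("NODE", "opine-01"), ("SHIFT",), ("NODE", "matter-01")]
aligned = cursor_alignments(tokens, actions)
print(aligned)
```

Here "opinion" is aligned to both thing (action 2) and opine-01 (action 3), matching the two-node subgraph in the caption.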

Effectiveness of Subcomponents

Ablation studies show that both the monotonic alignment between actions and source tokens and the incremental graph embedding, which injects structural information into the model's predictions, contribute to parsing accuracy.

Conclusion

By introducing an action-pointer mechanism into a Transformer, the paper opens the way to more expressive AMR parsers. The approach exploits the Transformer's flexibility to handle AMR's intricate graph structures, managing long-range dependencies and words aligned to multiple nodes. Future work could integrate pre-trained models such as BART to further improve AMR parsing.