- The paper introduces Cbr-kbqa, a neuro-symbolic model that leverages case-based reasoning to generate logical forms for complex natural language queries over knowledge bases.

- It uses a three-stage retrieve, reuse, and revise process to handle novel combinations of KB relations, improving accuracy on the CWQ dataset by 11% over the previous state of the art.

- Because the design is nonparametric, errors can be fixed by adding new cases to the case memory, so the model can be debugged and extended without full re-training.

Case-based Reasoning for Natural Language Queries over Knowledge Bases

The paper "Case-Based Reasoning for Natural Language Queries over Knowledge Bases" introduces a neuro-symbolic approach called Cbr-kbqa, which applies case-based reasoning (CBR) to question answering (QA) over large knowledge bases (KBs). The approach combines a nonparametric case memory with a parametric model to generate logical forms that answer complex queries.

Overview of Cbr-kbqa

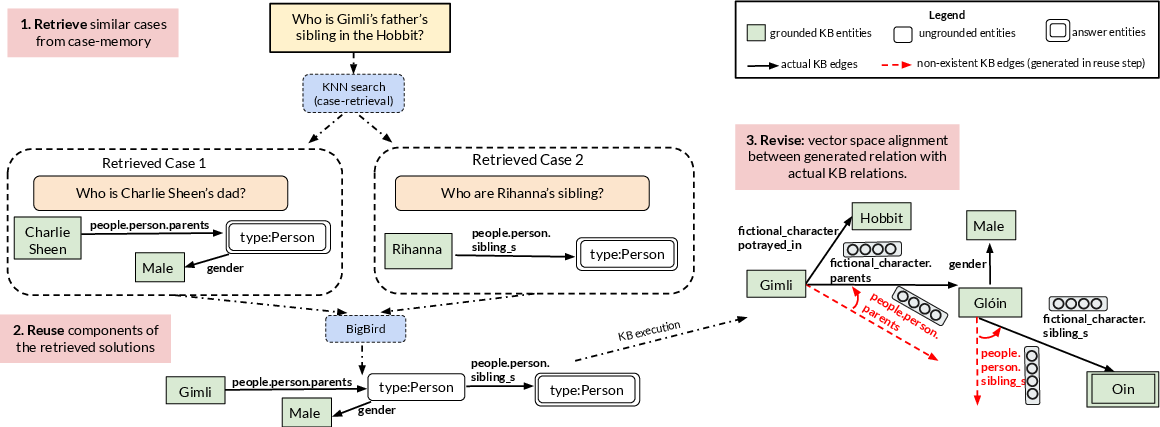

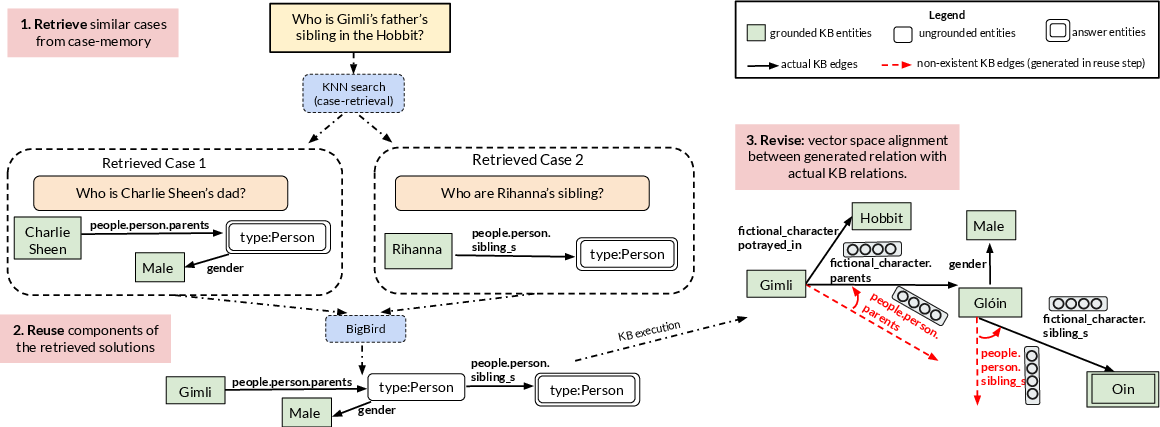

Cbr-kbqa integrates symbolic and neural components to answer natural language queries over knowledge bases. The core idea is to retrieve similar cases (past queries paired with their logical forms) from a case memory and then adapt their solutions into a logical form (LF) for the new query. This lets the model handle queries that involve novel combinations of KB relations. The model comprises three primary modules:

- Retrieve Module: Uses dense passage retrieval techniques to find similar queries. A RoBERTa-base retriever is fine-tuned so that it favors queries expressing similar relations, even when they mention different entities.

- Reuse Module: Uses a sparse-attention transformer (BigBird) so that the query and several retrieved cases can be processed efficiently as one long input. This seq2seq module generates an intermediate logical form by reusing components of the retrieved cases. During training, a regularization term minimizes the divergence between the outputs generated with and without the cases.

- Revise Module: Targets spurious relations in the generated logical forms by aligning them with relations that exist in the KB, using pre-trained relation embeddings such as TransE. This makes more of the generated logical forms executable while leaving their overall structure unchanged.
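The regularized reuse objective described above can be sketched numerically. This is a minimal NumPy sketch under stated assumptions: the summary only says the extra term minimizes the divergence between outputs produced with and without cases, so the use of KL divergence, its direction, the weight `lam`, and the helper names `reuse_loss` and `kl_divergence` are illustrative choices rather than the paper's code. Random logits stand in for the BigBird decoder's outputs.

```python
import numpy as np


def softmax(logits):
    """Numerically stable softmax over the last (vocabulary) axis."""
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) summed over the vocabulary, averaged over decoding steps."""
    return float(np.mean(np.sum(p * (np.log(p + eps) - np.log(q + eps)), axis=-1)))


def reuse_loss(logits_with_cases, logits_query_only, target_ids, lam=1.0):
    """Hypothetical reuse objective (assumption, not the paper's exact loss):
    NLL of the gold LF tokens given query + cases, plus lam * KL between the
    with-cases and query-only output distributions."""
    p_with = softmax(logits_with_cases)      # shape (steps, vocab)
    p_without = softmax(logits_query_only)   # shape (steps, vocab)
    steps = np.arange(len(target_ids))
    nll = float(-np.mean(np.log(p_with[steps, target_ids] + 1e-12)))
    return nll + lam * kl_divergence(p_with, p_without)


rng = np.random.default_rng(0)
logits_with = rng.normal(size=(4, 10))   # 4 decoding steps, toy vocabulary of 10 tokens
target_ids = np.array([1, 3, 5, 7])      # toy gold LF token ids
loss_same = reuse_loss(logits_with, logits_with, target_ids)
loss_diff = reuse_loss(logits_with, logits_with + rng.normal(size=(4, 10)), target_ids)
print(loss_same, loss_diff)
```

When both passes produce identical distributions the penalty vanishes and only the NLL remains; any disagreement between the with-cases and query-only passes increases the loss.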

Figure 1: Cbr-kbqa derives the logical form (LF) for a new query from the LFs of other retrieved queries from the case-memory. However, the derived LF might not execute because of missing edges in the KB. The revise step aligns any such missing edges (relations) with existing semantically-similar edges in the KB.
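The revise step in Figure 1 amounts to a nearest-neighbour lookup in relation-embedding space. The sketch below assumes that the candidate replacements are the relations actually attached to the query entity in the KB and that similarity is measured with cosine similarity. The relation names and the 3-dimensional vectors are hypothetical stand-ins for pre-trained TransE embeddings, and `revise_relations` is an illustrative helper, not the paper's implementation.

```python
import numpy as np


def cosine(u, v):
    """Cosine similarity between two dense vectors."""
    return float(np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v)))


def revise_relations(lf_relations, entity_relations, rel_emb):
    """Replace each relation missing from the entity's KB edges with the most
    similar existing edge; relations that already exist are kept unchanged."""
    revised = []
    for rel in lf_relations:
        if rel in entity_relations:
            revised.append(rel)
            continue
        best = max(entity_relations, key=lambda cand: cosine(rel_emb[rel], rel_emb[cand]))
        revised.append(best)
    return revised


# Hypothetical toy embeddings (stand-ins for TransE relation vectors).
rel_emb = {
    "people.person.birthplace": np.array([0.9, 0.1, 0.0]),      # generated, but no such edge
    "people.person.place_of_birth": np.array([1.0, 0.0, 0.1]),
    "people.person.nationality": np.array([0.0, 1.0, 0.2]),
}
entity_relations = {"people.person.place_of_birth", "people.person.nationality"}

print(revise_relations(["people.person.birthplace", "people.person.nationality"],
                       entity_relations, rel_emb))
# ['people.person.place_of_birth', 'people.person.nationality']
```

Only the relation labels are swapped, so the structure of the logical form is preserved, matching the description of the revise module.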

Experimental Evaluation

Cbr-kbqa was evaluated on several challenging KBQA benchmarks: WebQuestionsSP, ComplexWebQuestions (CWQ), and Compositional Freebase Questions (CFQ). The results show that it outperforms both weakly supervised models and large pre-trained models such as T5-11B. On CWQ, for instance, it improves accuracy by 11% over the previous state of the art, reflecting its ability to handle novel relation combinations and to generalize beyond its training examples.

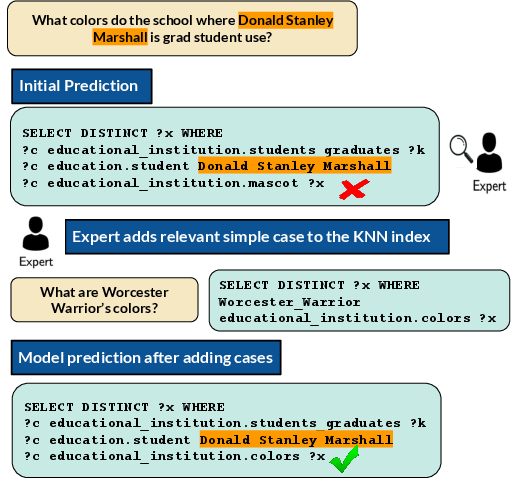

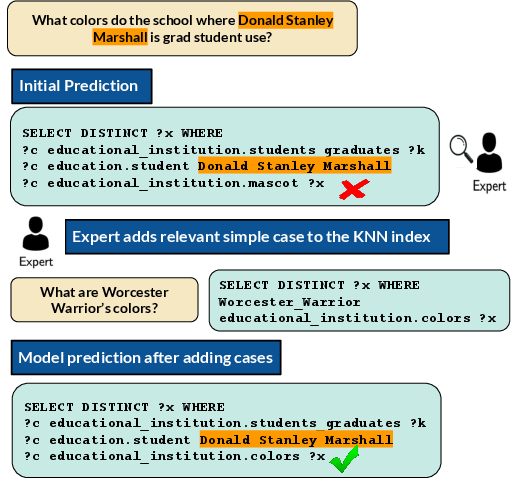

The revise step notably improved performance by aligning relations in the generated logical forms with existing KB relations through relation embeddings. In human-in-the-loop experiments, the model also adapted to unseen relations without re-training, which is promising for real-world applications where KBs evolve frequently.

Robustness and Controllability

A key feature of Cbr-kbqa is its nonparametric design, which makes it easy to add new cases to the case memory. This enables quick adaptation to new relations and debugging of erroneous predictions: adding a few relevant cases can correct a mistaken prediction without full re-training, which also sidesteps the catastrophic forgetting that re-training neural models can cause.

Figure 2: An expert point-fixes a model prediction by adding a simple case to the KNN index. The initial prediction was incorrect because no query with the relation (educational_institution.colors) was present in the training set. Cbr-kbqa retrieves the new case from the KNN index and fixes the erroneous prediction without requiring any re-training.
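The point-fix in Figure 2 works because the case memory is simply an index searched by nearest neighbours. The minimal sketch below illustrates this with a hypothetical `CaseMemory` class. A bag-of-words cosine similarity stands in for the fine-tuned RoBERTa-base retriever, and the logical-form strings use a made-up notation rather than real SPARQL or Freebase queries.

```python
import math
from collections import Counter


def embed(text):
    """Stand-in encoder: bag-of-words counts (Cbr-kbqa uses a fine-tuned RoBERTa-base retriever)."""
    return Counter(text.lower().replace("?", "").split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class CaseMemory:
    """Hypothetical nonparametric case memory of (question, logical form) pairs."""

    def __init__(self):
        self.cases = []  # list of (embedding, question, logical_form)

    def add_case(self, question, logical_form):
        # Adding a case only appends to the memory; no model parameters change.
        self.cases.append((embed(question), question, logical_form))

    def retrieve(self, query, k=1):
        # Linear-scan k-nearest-neighbour search by cosine similarity.
        q = embed(query)
        ranked = sorted(self.cases, key=lambda case: cosine(q, case[0]), reverse=True)
        return [(question, lf) for _, question, lf in ranked[:k]]


memory = CaseMemory()
memory.add_case("what is the mascot of harvard university", "educational_institution.mascot(harvard)")
memory.add_case("where was barack obama born", "person.place_of_birth(barack_obama)")

query = "what are the colors of yale university"
before = memory.retrieve(query)[0][1]  # "educational_institution.mascot(harvard)": wrong relation

# An expert point-fixes the model by adding one case that uses the missing relation.
memory.add_case("what are the colors of mit", "educational_institution.colors(mit)")
after = memory.retrieve(query)[0][1]   # "educational_institution.colors(mit)"
print(before, after, sep="\n")
```

Because `add_case` only appends to a list, the fix takes effect on the next retrieval and no weights are updated. A realistic deployment would replace the linear scan with an approximate nearest-neighbour index.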

Conclusion

Cbr-kbqa presents a neuro-symbolic approach to knowledge base question answering. By building on a structured case-based reasoning framework, it addresses the difficulty existing neural models have with complex, multi-relation queries. Its adaptability and efficiency make it a promising candidate for deployment in settings where knowledge bases change frequently. Future work could reduce the dependence on logical-form supervision, explore end-to-end learning strategies, and incorporate additional implicit contextual signals for more robust question answering.