- The paper demonstrates that USCL, by integrating semi-supervised contrastive learning with the US-4 dataset, significantly enhances ultrasound diagnostic feature learning.

- The method employs an innovative sample pair generation process from video data to avoid similarity conflicts and improve instance-level discrimination.

- USCL outperforms traditional ImageNet-pretrained models by about 10% in accuracy, achieving over 94% fine-tuning accuracy on the POCUS dataset.

USCL: Pretraining Deep Ultrasound Image Diagnosis Model through Video Contrastive Representation Learning

Introduction

The paper introduces a novel approach for pretraining deep learning models specifically for ultrasound (US) medical image diagnosis, addressing the significant domain gap between natural images and US images. Traditional approaches that fine-tune models pretrained on datasets like ImageNet suffer from this domain discrepancy. The proposed method, Ultrasound Contrastive Learning (USCL), employs a semi-supervised contrastive learning framework to mitigate these effects, leveraging a newly constructed US-specific dataset, US-4. The paper's primary focus is on enhancing the feature learning process by aligning it more closely with the intrinsic characteristics of US data.

US-4 Dataset

The US-4 dataset is structured to provide a robust foundation for training models on US video data, addressing the common challenge of data scarcity in medical imaging. It comprises over 23,000 images extracted from 1,051 videos covering two anatomic regions (lung and liver). Images are sampled from each video at intervals chosen to retain informational content while limiting redundancy between neighboring frames, so that each video forms a rich semantic cluster suited to contrastive learning.
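The interval-based sampling described above can be illustrated with a minimal sketch. It assumes plain uniform spacing across the video; the summary does not state how the intervals were actually chosen, and the function name `sample_frame_indices` and its parameters are hypothetical.

```python
def sample_frame_indices(num_frames: int, num_samples: int) -> list[int]:
    """Pick roughly evenly spaced frame indices from a video.

    Assumption: uniform spacing. The real US-4 sampling rule may differ.
    """
    if num_frames <= 0 or num_samples <= 0:
        return []
    if num_samples >= num_frames:
        # Not enough frames to skip any: keep them all.
        return list(range(num_frames))
    if num_samples == 1:
        # A single sample is taken from the middle of the video.
        return [num_frames // 2]
    # Integer arithmetic keeps the result deterministic and always
    # includes the first (0) and last (num_frames - 1) frame.
    last = num_frames - 1
    return [(i * last) // (num_samples - 1) for i in range(num_samples)]


indices = sample_frame_indices(100, 5)
print(indices)
```

For a 100-frame video and five samples this yields `[0, 24, 49, 74, 99]`: wider spacing drops near-duplicate neighboring frames while still covering the whole clip.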

Methodology

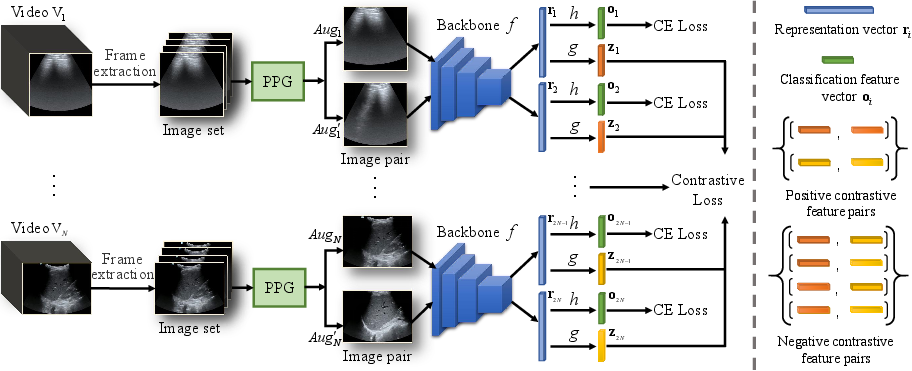

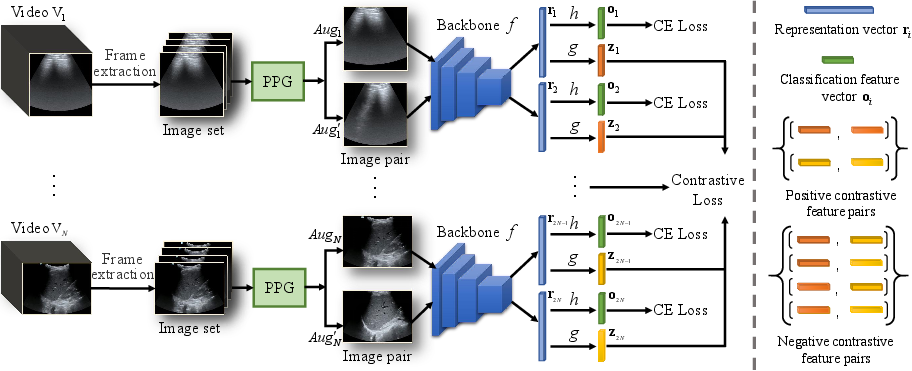

The methodology section details the USCL framework, centering on how it uses sample pair generation to enhance contrastive learning. This involves techniques to avoid high similarity within negative pairs, a common pitfall in traditional methods.

Figure 1: System framework of the proposed USCL, which consists of sample pair generation and semi-supervised contrastive learning. Sample pair generation avoids similarity conflict, and the system combines label supervision with contrastive learning.

Sample Pair Generation

USCL's success is partly due to its Sample Pair Generation (SPG) process. Unlike conventional approaches that build a positive pair by augmenting a single frame, SPG treats each video as a natural semantic cluster. It interpolates between frames sampled from the same video to form positive pairs and draws negative pairs from different videos. This enriches the set of features and keeps negative pairs meaningfully distinct, which addresses the similarity conflict that arises when near-identical frames of one video are treated as negatives.
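A minimal sketch of this idea follows. It assumes a mixup-style convex combination in which the middle frame of a video is blended with the first and last frames to form two views. The choice of frames, the uniform weight distribution, and the names `interpolate`, `make_positive_pair`, and `min_anchor_weight` are illustrative assumptions, not details confirmed by this summary.

```python
import random

# A flattened grayscale image; a stand-in for a real image tensor.
Frame = list[float]


def interpolate(a: Frame, b: Frame, weight: float) -> Frame:
    """Return weight * a + (1 - weight) * b, element by element."""
    return [weight * x + (1.0 - weight) * y for x, y in zip(a, b)]


def make_positive_pair(
    frames: list[Frame],
    rng: random.Random,
    min_anchor_weight: float = 0.5,
) -> tuple[Frame, Frame]:
    """Build two views of one video from three of its frames.

    Assumption: the middle frame acts as an anchor and always receives
    at least `min_anchor_weight` of the blend.
    """
    if len(frames) < 3:
        raise ValueError("need at least three frames per video")
    first, middle, last = frames[0], frames[len(frames) // 2], frames[-1]
    w1 = rng.uniform(min_anchor_weight, 1.0)
    w2 = rng.uniform(min_anchor_weight, 1.0)
    return interpolate(middle, first, w1), interpolate(middle, last, w2)


def make_batch(
    videos: dict[str, list[Frame]], seed: int = 0
) -> dict[str, tuple[Frame, Frame]]:
    """One positive pair per video; views of different videos are negatives."""
    rng = random.Random(seed)
    return {vid: make_positive_pair(frames, rng) for vid, frames in videos.items()}


videos = {
    "video_a": [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]],
    "video_b": [[9.0, 9.0], [8.0, 8.0], [7.0, 7.0]],
}
batch = make_batch(videos)
```

Because each video contributes exactly one positive pair, two frames of the same video never end up on opposite sides of a negative pair, which is the conflict the text describes.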

USCL Framework

This framework combines a supervised classification loss with a contrastive loss, thereby enhancing model robustness. The architecture has two modules: a backbone for feature extraction and a projection head for representation mapping. Training on both objectives sharpens category-level discrimination through label supervision and instance-level discrimination through contrastive learning, with each objective reinforcing the other.
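To make the combined objective concrete, the sketch below adds a cross-entropy term to an NT-Xent-style contrastive term. The exact loss form, the temperature, and the weighting used by USCL are not given in this summary, so `temperature`, `supervision_weight`, and all function names here are assumptions for illustration only.

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def contrastive_loss(views_a, views_b, temperature=0.5):
    """NT-Xent-style loss over projection-head outputs.

    views_a[i] and views_b[i] form a positive pair; every other
    embedding in the batch serves as a negative.
    """
    embeddings = views_a + views_b
    n = len(views_a)
    total = 0.0
    for i in range(2 * n):
        j = (i + n) % (2 * n)  # index of the positive partner
        positive = cosine(embeddings[i], embeddings[j]) / temperature
        logits = [
            cosine(embeddings[i], embeddings[k]) / temperature
            for k in range(2 * n)
            if k != i
        ]
        total += math.log(sum(math.exp(x) for x in logits)) - positive
    return total / (2 * n)


def cross_entropy(logits: list[float], label: int) -> float:
    """Softmax cross-entropy for one sample, computed stably."""
    peak = max(logits)
    log_sum = peak + math.log(sum(math.exp(x - peak) for x in logits))
    return log_sum - logits[label]


def combined_loss(views_a, views_b, class_logits, labels,
                  temperature=0.5, supervision_weight=0.2):
    """Contrastive term plus a weighted supervised term (weight is assumed)."""
    contrastive = contrastive_loss(views_a, views_b, temperature)
    supervised = sum(
        cross_entropy(lg, y) for lg, y in zip(class_logits, labels)
    ) / len(labels)
    return contrastive + supervision_weight * supervised


aligned = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
swapped = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
print(aligned < swapped)
```

The contrastive term is lower when the two views of each video agree than when they are mismatched, while the cross-entropy term pulls samples of the same category together. That pairing of instance-level and category-level signals is what the section describes.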

Experimental Evaluation

USCL showed substantial improvements over ImageNet-pretrained and other state-of-the-art methods on downstream tasks such as classification on the Point-of-Care Ultrasound (POCUS) dataset. The comparison highlights USCL's superior feature learning, with large gains observed in both classification accuracy and segmentation precision.

USCL-pretrained models attained a fine-tuning accuracy exceeding 94% on the POCUS dataset, a 10% improvement over their ImageNet-pretrained counterparts. This performance also underscores USCL's capacity to better focus model attention on clinically relevant features within the ultrasound data, a critical attribute for real-world diagnostic applications.

Conclusion

The introduction of USCL, along with the US-4 dataset, marks significant progress in the domain-specific pretraining of deep neural networks for ultrasound image analysis. This approach exemplifies how targeted adaptation of contrastive learning techniques can bridge domain gaps, leading to improved diagnostic accuracy. Future directions will involve expanding the dataset's scope and exploring additional anatomical regions to further enhance USCL's generalizability. Such expansions are pivotal in scaling this model to broader clinical applications, potentially transforming ultrasound-based diagnostics.