- The paper introduces nonleaky, single-spike temporal-coded neurons for SNNs, simplifying training with clearer input-output relationships.

- It employs the NSL-KDD and AWID datasets to demonstrate over 99% classification accuracy, surpassing traditional DNN and machine-learning methods.

- The study outlines an efficient algorithmic blueprint for SNN implementation, paving the way for real-time network intrusion detection in cybersecurity.

Spiking Neural Networks with Single-Spike Temporal-Coded Neurons for Network Intrusion Detection

Introduction

This paper explores the application of Spiking Neural Networks (SNNs) to network intrusion detection and argues that SNNs built from single-spike temporal-coded neurons are a promising alternative to conventional Deep Neural Networks (DNNs). By analyzing the complexity of different neuron models, the paper argues that nonleaky neurons have simpler input-output relationships than leaky neurons, which makes SNNs easier to train and improves their performance. Two datasets, NSL-KDD and AWID, are used to benchmark these SNNs, which outperform several DNN variants and traditional machine-learning models.

Neuron Model Complexity Analysis

The paper derives input-output expressions for leaky and nonleaky neurons under various spike coding schemes. It shows that leaky neurons often have overly nonlinear responses that complicate training, whereas nonleaky neurons have simpler responses that are better suited to gradient-based learning.

Figure 2: Attack distribution in the NSL-KDD Dataset.

This preference for nonleaky neurons is justified by formulating the neuron's spiking behavior mathematically for two input spike waveforms: a step and an exponentially decaying pulse. These formulations yield closed-form neuron response models that lead directly to practical training procedures for SNNs.
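As a worked illustration of why the nonleaky case stays tractable, the derivation below solves for the output spike time under both waveforms. It is a sketch under assumptions of our own, not the paper's notation: a nonleaky integrate-and-fire neuron with firing threshold θ, input spike times t_i with weights w_i, a unit time constant for the exponential pulse, and a causal set C containing exactly the inputs that arrive before the output spike (so the solution is valid only if t_out falls after the last input in C and before the next input).

```latex
% Step waveform: input i injects a constant current w_i from t_i onward
V(t) = \sum_{i \in C} w_i \,(t - t_i) = \theta
\quad\Longrightarrow\quad
t_{\mathrm{out}} = \frac{\theta + \sum_{i \in C} w_i t_i}{\sum_{i \in C} w_i},
\qquad \sum_{i \in C} w_i > 0

% Exponentially decaying waveform: input i injects w_i e^{-(t - t_i)} from t_i onward
V(t) = \sum_{i \in C} w_i \left(1 - e^{-(t - t_i)}\right),
\qquad z = e^{t},\quad z_i = e^{t_i}

V = \sum_{i \in C} w_i \left(1 - \frac{z_i}{z}\right) = \theta
\quad\Longrightarrow\quad
z_{\mathrm{out}} = \frac{\sum_{i \in C} w_i z_i}{\sum_{i \in C} w_i - \theta},
\qquad \sum_{i \in C} w_i > \theta
```

In both cases the output time is a ratio of sums that are linear in the (possibly exponentiated) input times, so it is easy to differentiate. The exponential case is the same ratio that the forward-pass algorithm under Implementation Details evaluates. A leaky membrane adds a second time constant, and the threshold-crossing equation then generally loses this simple form, which is consistent with the paper's argument.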

Dataset Utilization and Preprocessing

The NSL-KDD and AWID datasets, both widely used in network security research, serve as the experimental testbeds for the SNNs. NSL-KDD, a refined version of the KDD Cup 1999 dataset with redundant records removed, comes with predefined KDDTrain+ and KDDTest+ subsets. Removing duplicates keeps repeated records from biasing training and inflating test scores, which makes the evaluation more reliable.

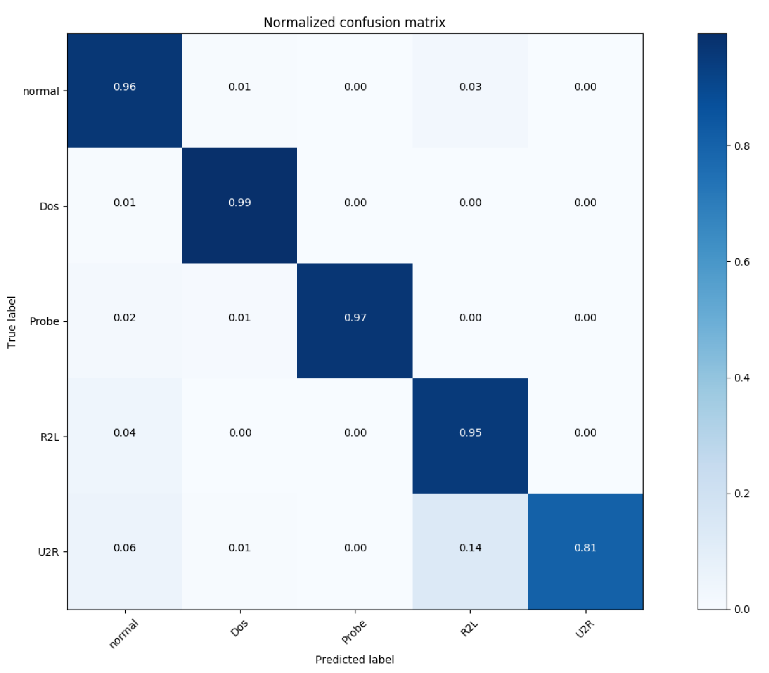

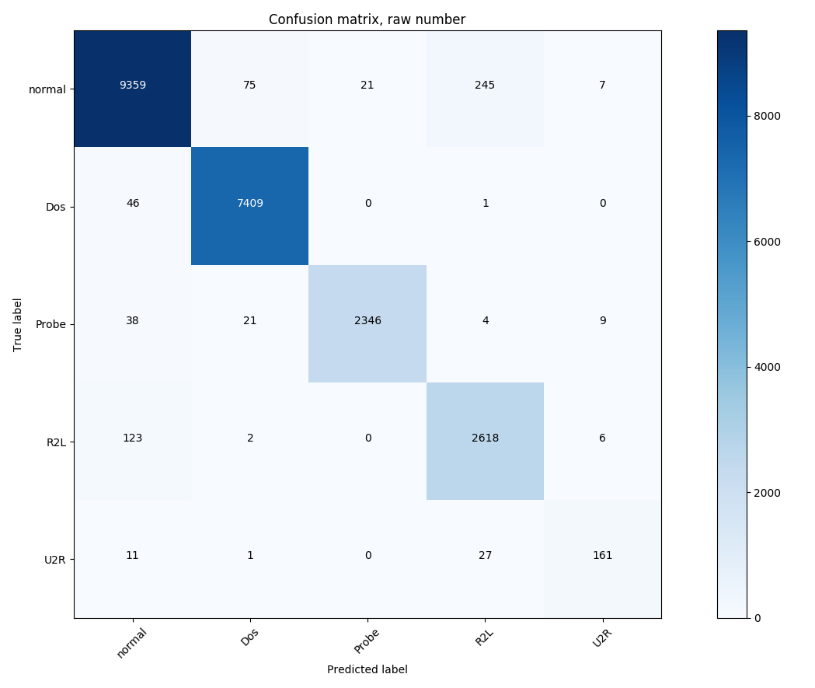

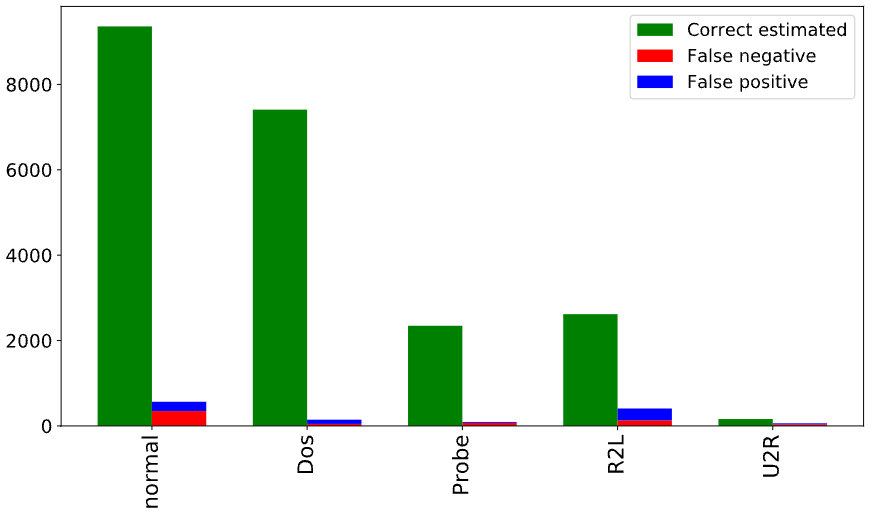

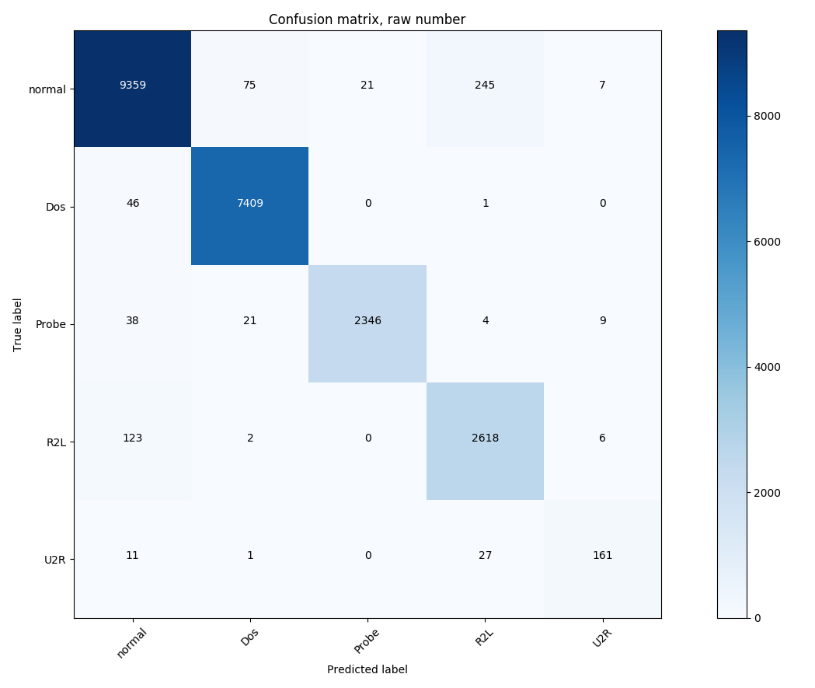

Figure 3: NSL-KDD "Original" dataset: Classification confusion matrix expressed as number of data records.

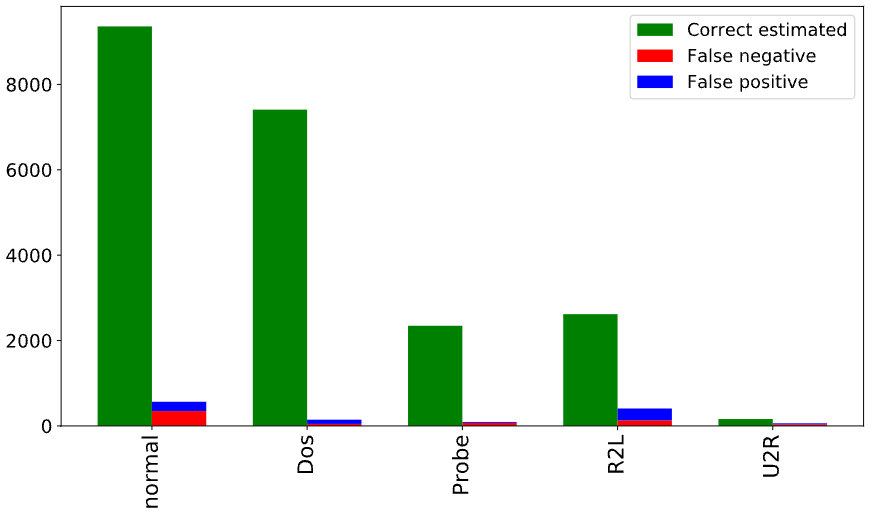

Figure 4: NSL-KDD "Original" dataset: Test result for each class.

The AWID dataset provides real-world WiFi network traffic and is valuable here because it covers a diverse mix of normal and intrusion records. For both datasets, preprocessing expands the feature set, which improves model effectiveness by exposing patterns relevant to intrusion detection.
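A common way to expand the feature set of such records, assumed here rather than taken from the paper, is to one-hot encode categorical fields (NSL-KDD, for instance, has the symbolic fields `protocol_type`, `service`, and `flag`) and min-max scale the numeric ones. The sketch below does that and then maps each scaled feature to a single input spike time, with larger values spiking earlier. The helpers `one_hot`, `min_max_scale`, and `encode_spike_times`, the constant `t_max`, and the toy records are all hypothetical.

```python
import numpy as np

def one_hot(column, categories):
    # Hypothetical helper: expand one categorical column into len(categories) binary columns.
    index = {c: k for k, c in enumerate(categories)}
    out = np.zeros((len(column), len(categories)))
    for row, value in enumerate(column):
        out[row, index[value]] = 1.0
    return out

def min_max_scale(x):
    # Scale each numeric column to [0, 1]; constant columns map to 0.
    x = np.asarray(x, dtype=float)
    lo, hi = x.min(axis=0), x.max(axis=0)
    span = np.where(hi > lo, hi - lo, 1.0)
    return (x - lo) / span

def encode_spike_times(features, t_max=1.0):
    # Assumed temporal code: value 1 spikes at t = 0, value 0 spikes at t = t_max.
    # Returns z = exp(t), the variable used by the closed-form spike-time solution.
    t = t_max * (1.0 - np.asarray(features, dtype=float))
    return np.exp(t)

# Toy records (made up): one categorical field and two numeric fields.
protocols = ["tcp", "udp", "tcp"]
numeric = [[0.0, 200.0], [5.0, 100.0], [10.0, 100.0]]

x = np.hstack([one_hot(protocols, ["tcp", "udp", "icmp"]), min_max_scale(numeric)])
z_in = encode_spike_times(x)
print(x.shape)  # 3 records, 3 one-hot columns + 2 scaled numeric columns
```

In practice the scaling statistics would be computed on the training split (for NSL-KDD, KDDTrain+) and reused unchanged on the test split.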

Implementation Details

The paper provides a detailed blueprint for implementing SNNs with nonleaky neurons. The proposed architecture specifies the layer configuration and the input data vector for each dataset. An algorithm is presented that computes each neuron's forward pass efficiently using a differentiable closed-form solution for its output spike time:

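```python
import numpy as np

def forward_pass_nonleaky_snn(z, w, threshold=1.0):
    # z: input spike times in the z-domain (z_i = exp(t_i)); w: synaptic weights.
    # threshold: firing threshold (default of 1.0 is an assumption).
    z, w = np.asarray(z, dtype=float), np.asarray(w, dtype=float)
    i_sorted = np.argsort(z)                    # process inputs in order of arrival
    z_sorted, w_sorted = z[i_sorted], w[i_sorted]
    z_cumsum = np.cumsum(z_sorted * w_sorted)   # sum of w_i * z_i over the first k inputs
    w_cumsum = np.cumsum(w_sorted) - threshold  # sum of w_i over the first k inputs, minus threshold
    z_next = np.append(z_sorted[1:], np.inf)    # arrival of the next input (none after the last)
    with np.errstate(divide="ignore", invalid="ignore"):
        z_candidate = z_cumsum / w_cumsum       # candidate output spike time per causal set
    # A candidate is valid if the denominator is positive and it lies between
    # the last included input and the next one.
    valid = (w_cumsum > 0) & (z_candidate >= z_sorted) & (z_candidate < z_next)
    idx = np.flatnonzero(valid)
    return z_candidate[idx[0]] if idx.size else np.inf  # inf: the neuron never fires
```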

Because the output spike time is a differentiable closed-form function of the input spike times and weights, SNNs can be trained much like DNNs, using standard backpropagation adapted to spike-time-based neurons.
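To see why backpropagation applies, differentiate the exponential-waveform solution z_out = Σ_{i∈C} w_i z_i / (W − θ), where W = Σ_{i∈C} w_i: for inputs in the causal set C, ∂z_out/∂z_i = w_i / (W − θ) and ∂z_out/∂w_i = (z_i − z_out) / (W − θ), and both are zero for inputs outside C. The sketch below checks these expressions against finite differences. The names `spike_time` and `spike_time_grads`, the threshold θ = 1, and the toy inputs are hypothetical; the gradients are valid only away from the points where the causal set changes.

```python
import numpy as np

def spike_time(z, w, threshold=1.0):
    # Closed-form output spike time (z-domain) of a nonleaky neuron with
    # exponentially decaying input currents. Returns (z_out, causal_mask).
    order = np.argsort(z)
    z_s, w_s = z[order], w[order]
    num = np.cumsum(w_s * z_s)
    den = np.cumsum(w_s) - threshold
    z_next = np.append(z_s[1:], np.inf)
    for k in range(len(z_s)):
        if den[k] > 0:
            cand = num[k] / den[k]
            if z_s[k] <= cand < z_next[k]:
                mask = np.zeros(len(z), dtype=bool)
                mask[order[: k + 1]] = True  # inputs that arrived before the output spike
                return cand, mask
    return np.inf, np.zeros(len(z), dtype=bool)  # neuron never fires

def spike_time_grads(z, w, threshold=1.0):
    # Analytic gradients of z_out; only inputs in the causal set contribute.
    z_out, mask = spike_time(z, w, threshold)
    denom = w[mask].sum() - threshold
    dz = np.where(mask, w / denom, 0.0)
    dw = np.where(mask, (z - z_out) / denom, 0.0)
    return z_out, dz, dw

# Usage: compare the analytic weight gradients with forward finite differences.
z = np.exp(np.array([0.1, 0.3, 0.9, 1.5]))  # input spike times t_i mapped to z_i = exp(t_i)
w = np.array([0.8, 0.7, 0.4, -0.2])         # made-up weights
z_out, dz, dw = spike_time_grads(z, w)
eps = 1e-6
fd_w = [(spike_time(z, w + eps * np.eye(4)[i])[0] - z_out) / eps for i in range(4)]
print(np.log(z_out), dw, np.array(fd_w))
```

With these inputs the first two spikes are not enough to fire before the third arrives, so the causal set is the first three inputs and the fourth input receives zero gradient.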

Experimental Results and Discussion

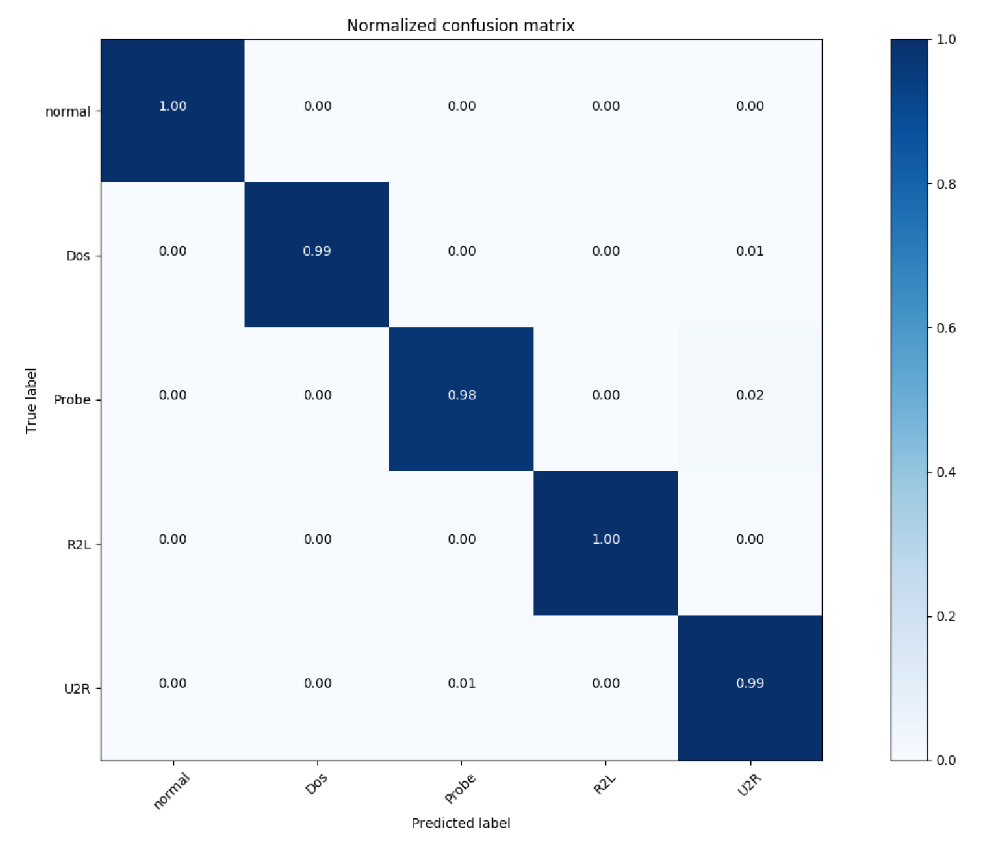

Across both datasets, the SNN models consistently outperform the DNN baselines. Their classification accuracy reaches 99.31% on NSL-KDD and 99.84% on AWID, surpassing machine-learning algorithms such as SVM, KNN, and ensembles like Gradient Tree Boosting.

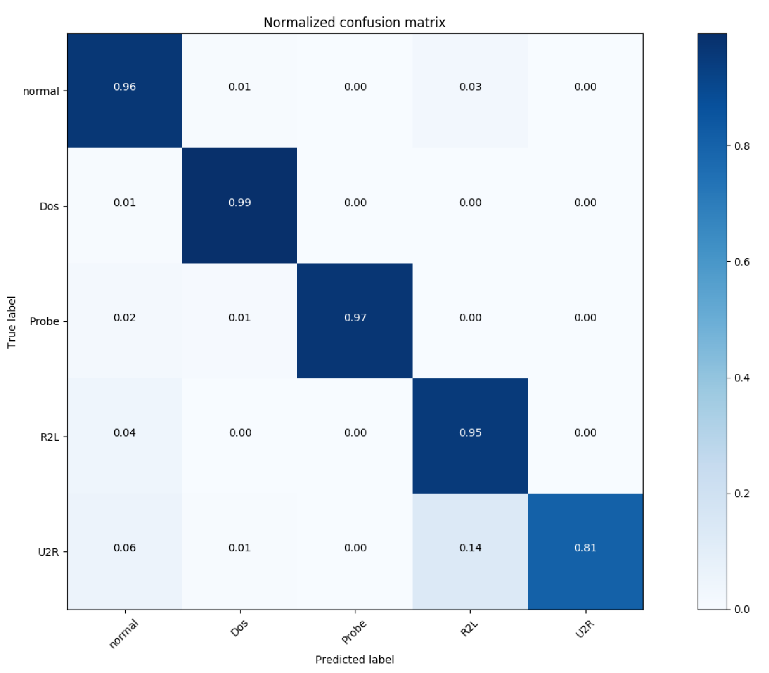

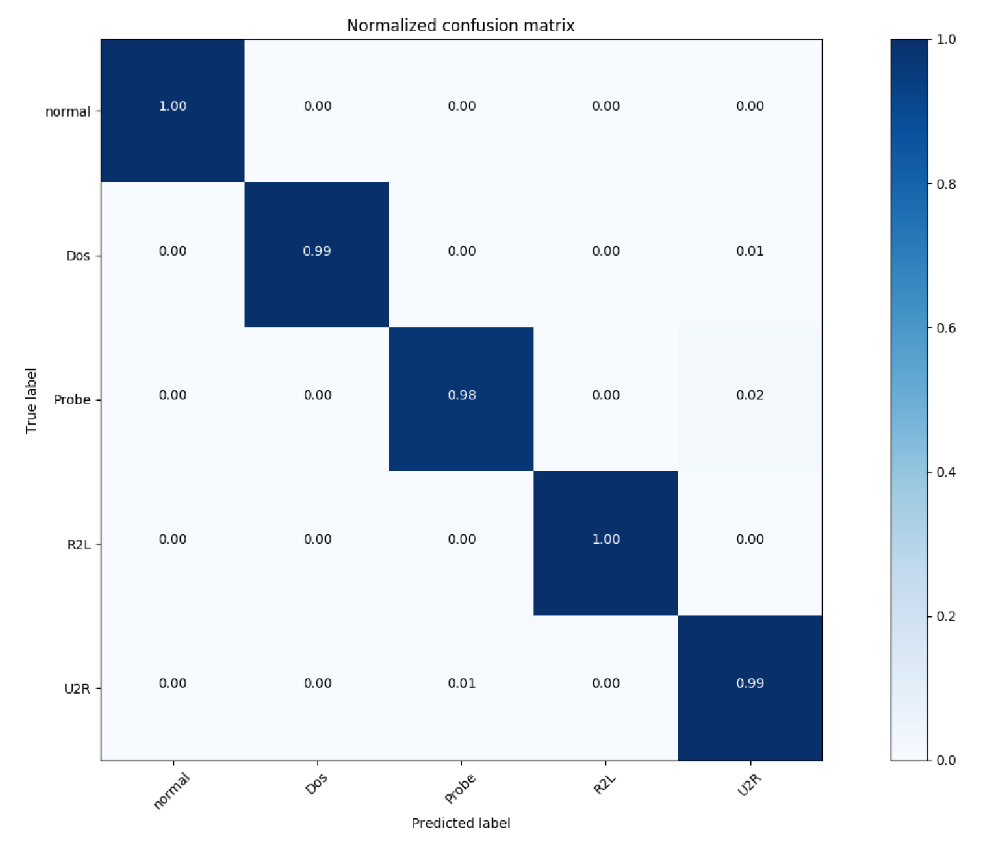

Figure 5: NSL-KDD "Resampled" dataset: Confusion matrix in percentage.

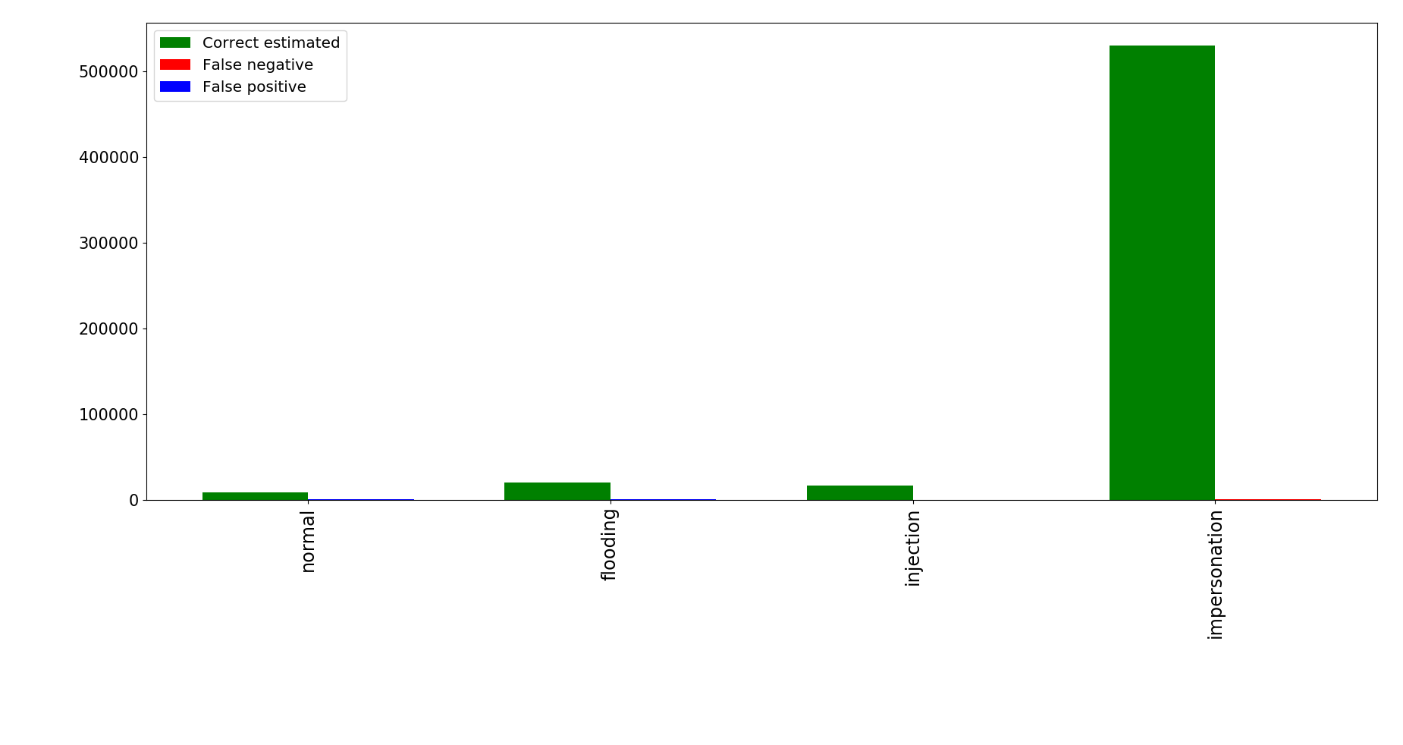

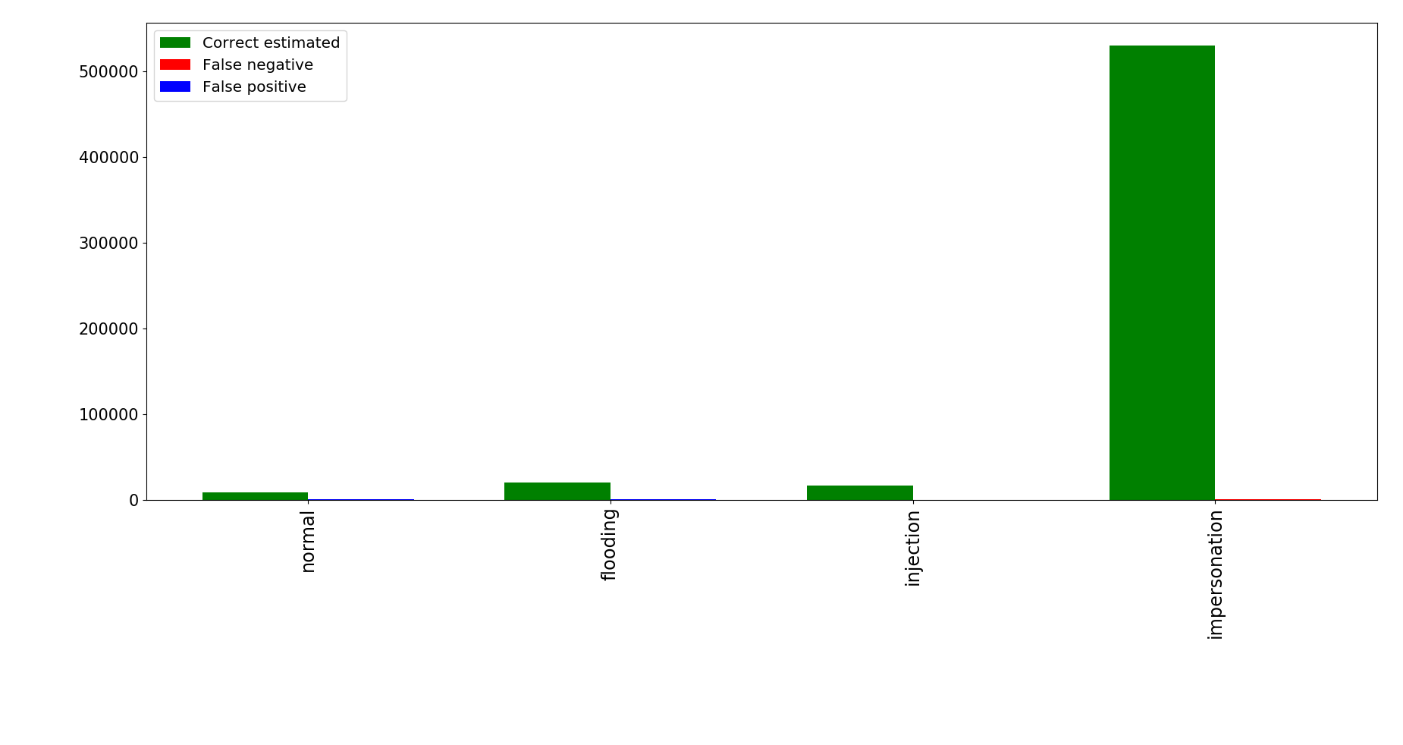

Figure 6: AWID "Resampled" dataset: Test result for each class.

These results substantiate the claim that SNNs, particularly those using nonleaky neurons, can offer competitive alternatives to traditional networks in terms of both efficacy and computational efficiency.

Conclusion

The investigation into SNNs reaffirms their potential in network intrusion detection tasks, challenging assumptions about their inferiority to DNNs. By adopting nonleaky neuron models, the paper shows that SNNs can be efficiently trained and deployed, marking a significant step forward in practical applications of bio-inspired networks in cybersecurity. Continued exploration and refinement may further enhance these models, increasing their viability for broader deployment in real-time intrusion detection systems and potentially other fields requiring rapid, efficient data processing.