- The paper introduces Pixel-Face, a large-scale dataset of high-resolution 3D face scans covering diverse subjects and expressions, aimed at improving 3D face reconstruction.

- The methodology uses a two-stage registration, rigid ICP followed by spatially varying non-rigid ICP (NICP), to achieve more accurate alignment on complex facial structures.

- Experiments show that finetuning on Pixel-Face notably improves NME and ARMSE compared with relying on traditional synthetic training datasets.

Pixel-Face: A Large-Scale, High-Resolution Benchmark for 3D Face Reconstruction

Introduction

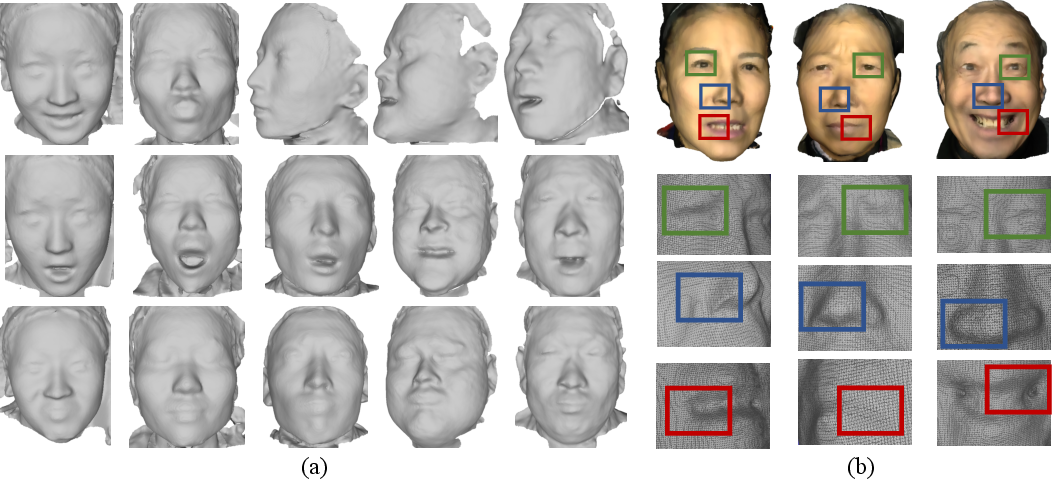

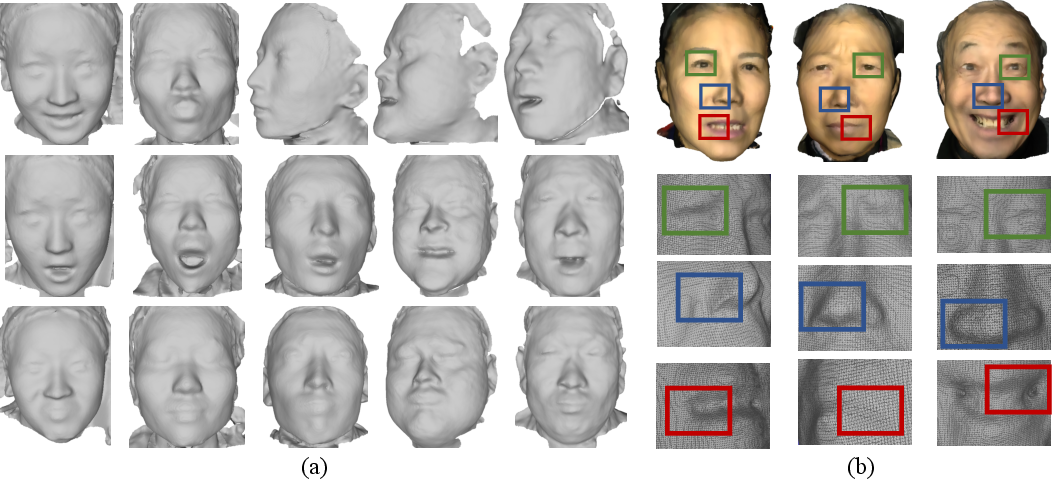

The paper introduces Pixel-Face, a comprehensive dataset designed to address open challenges in 3D face reconstruction. It provides a diverse collection of high-resolution 3D meshes and multi-view RGB images of subjects aged 18 to 80. Each subject has more than 20 samples with varying expressions, along with precise landmark annotations and 3D registration results. Pixel-Face helps improve 3D face reconstruction models, and the authors also re-parametrize it into Pixel-3DM, a more expressive 3D Morphable Model (3DMM).

Figure 1: 3D samples of Pixel-Face.

3D Face Datasets

Previous datasets for 3D face reconstruction, such as Bosphorus or BFM, fell short in precision, diversity, and quality. Many relied on synthetic data, limiting the generalizability of models trained with them. In contrast, Pixel-Face offers a broader range of expressions, extensive annotations, and more realistic high-resolution meshes.

3D Face Models

Traditional 3DMMs often suffer from low precision and restrictive expression libraries. The Basel Face Model (BFM) and similar models have limited real-world applicability because they were built from small-scale datasets. Pixel-3DM leverages the high-quality Pixel-Face data to provide a more accurate, flexible, and diverse morphable model.

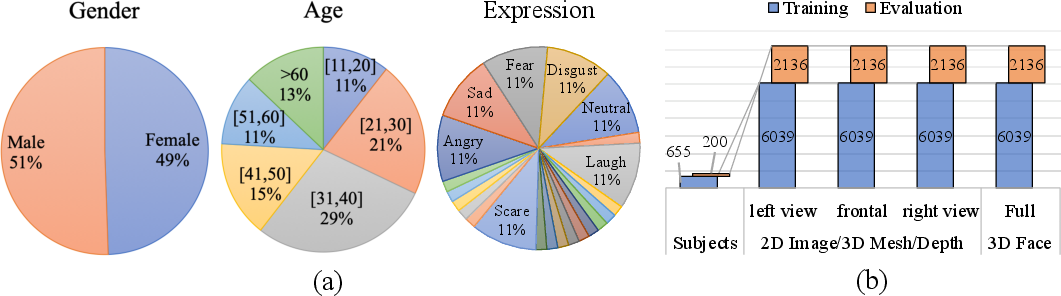

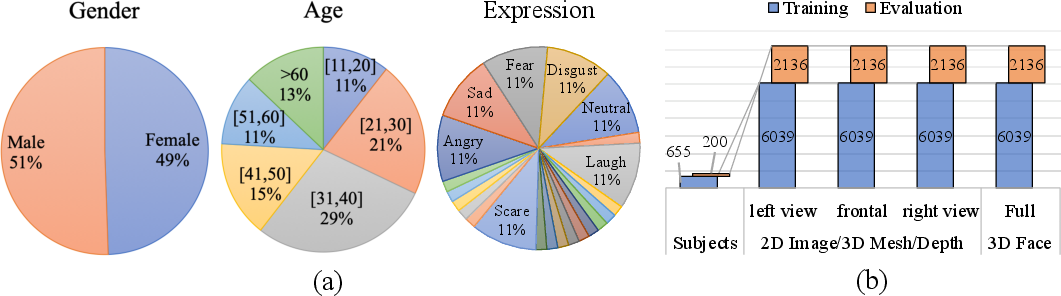

Figure 2: Overview of Pixel-Face.

The Pixel-Face Dataset

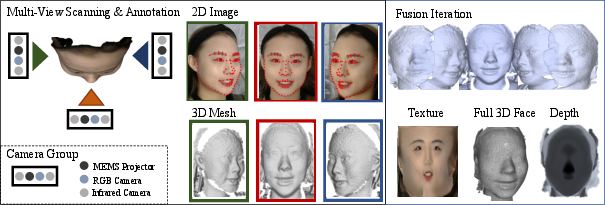

Pixel-Face stands out in both scale and quality, with over 24,525 samples and extensive metadata. The data are captured with a high-fidelity trinocular structured-light system that produces 3D point clouds. The pipeline then adds detailed landmark annotations, multi-view fusion, and semantic annotations such as gender, age, and expression.

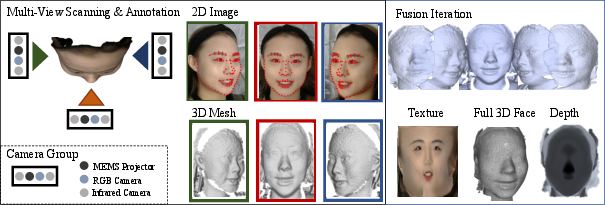

Figure 3: Data collection pipeline. The pipeline is composed of multi-view scanning, facial landmark annotation, texture mapping, multi-view fusion, surface mesh generation, and depth generation.
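Figure 3 lists depth generation as the final pipeline step but does not say how it is done. One common approach is to project the fused point cloud through a pinhole camera and keep the nearest point per pixel (a z-buffer). This approach is an assumption here, not a detail taken from the paper. The intrinsics `fx`, `fy`, `cx`, `cy` and the function name `render_depth` are hypothetical.

```python
import numpy as np


def render_depth(points, fx, fy, cx, cy, width, height):
    """Rasterise camera-space points (N, 3) into a (height, width) depth map.

    Hypothetical helper: assumes a pinhole camera looking down +Z.
    Pixels that receive no point are set to 0.
    """
    pts = points[points[:, 2] > 0]  # drop points behind the camera
    x, y, z = pts[:, 0], pts[:, 1], pts[:, 2]
    u = np.round(fx * x / z + cx).astype(int)  # column index
    v = np.round(fy * y / z + cy).astype(int)  # row index
    keep = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    depth = np.full((height, width), np.inf)
    # Z-buffer: np.minimum.at is unbuffered, so when several points hit
    # the same pixel the nearest one (smallest z) wins.
    np.minimum.at(depth, (v[keep], u[keep]), z[keep])
    depth[np.isinf(depth)] = 0.0
    return depth
```

A production pipeline would more likely rasterize the generated surface mesh, so that depth is interpolated across triangles. Splatting individual points simply keeps the sketch short.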

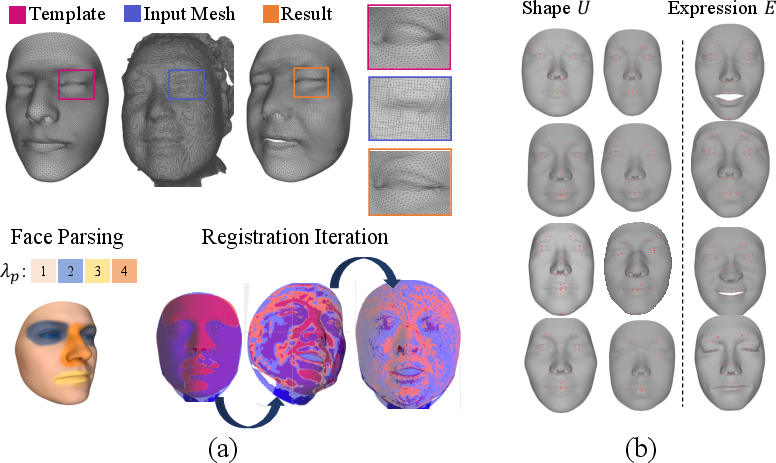

Constructing Pixel-3DM

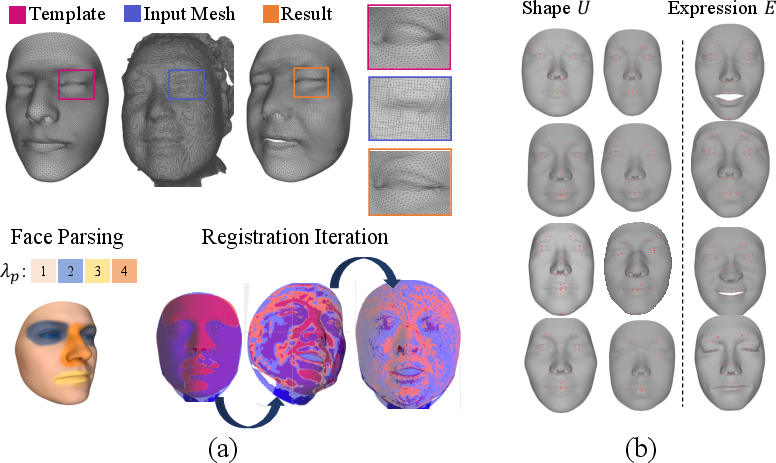

3D Face Registration: A two-stage algorithm first aligns each scan rigidly with ICP, then refines the fit with spatially varying non-rigid ICP (NICP). This registration gives Pixel-3DM accurate alignment and high model precision. The spatially varying stage also provides the flexibility needed for complex facial expressions and challenging cases such as exaggerated poses.
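The summary gives no implementation details for the two registration stages, so the sketch below relies on stated assumptions:

- **Stage 1:** standard point-to-point rigid ICP with a Kabsch (SVD) update.
- **Stage 2:** a simplified stand-in for the paper's spatially varying NICP. It solves for per-vertex translations, regularized by a graph Laplacian whose edge weights come from a per-vertex `stiffness` array. Low stiffness lets a region such as the mouth deform freely; high stiffness keeps it smooth.

Classic NICP solves for per-vertex affine transforms instead, so treat stage 2 only as an illustration of spatially varying stiffness. All function names are hypothetical. Nearest-neighbour search is brute force only to keep the example dependency-free.

```python
import numpy as np


def nearest(src, dst):
    """Index of the nearest dst point for every src point (brute force)."""
    d2 = ((src[:, None, :] - dst[None, :, :]) ** 2).sum(-1)
    return d2.argmin(axis=1)


def best_rigid(a, b):
    """Kabsch: rotation r and translation t minimising sum ||r a_i + t - b_i||^2."""
    ca, cb = a.mean(0), b.mean(0)
    h = (a - ca).T @ (b - cb)
    u, _, vt = np.linalg.svd(h)
    d = np.sign(np.linalg.det(vt.T @ u.T))  # guard against reflections
    r = vt.T @ np.diag([1.0, 1.0, d]) @ u.T
    return r, cb - r @ ca


def rigid_icp(src, dst, iters=30):
    """Stage 1 (assumed): point-to-point ICP; returns src rigidly aligned to dst."""
    cur = src.copy()
    for _ in range(iters):
        r, t = best_rigid(cur, dst[nearest(cur, dst)])
        cur = cur @ r.T + t
    return cur


def nonrigid_refine(src, dst, edges, stiffness, iters=5):
    """Stage 2 (simplified stand-in, not the paper's NICP).

    Finds per-vertex displacements D minimising
        sum_i ||x_i + d_i - y_i||^2 + sum_(i,j) a_ij ||d_i - d_j||^2,
    with a_ij = (s_i + s_j) / 2 from the per-vertex stiffness s.
    Setting the gradient to zero gives (I + L) D = Y - X.
    """
    n = len(src)
    lap = np.zeros((n, n))  # weighted graph Laplacian L
    for i, j in edges:
        a = 0.5 * (stiffness[i] + stiffness[j])
        lap[i, i] += a
        lap[j, j] += a
        lap[i, j] -= a
        lap[j, i] -= a
    cur = src.copy()
    for _ in range(iters):
        target = dst[nearest(cur, dst)]  # re-estimate correspondences
        disp = np.linalg.solve(np.eye(n) + lap, target - src)
        cur = src + disp
    return cur
```

Because a uniform displacement has zero Laplacian energy, a pure translation is recovered exactly at any stiffness. Varying `stiffness` per vertex only changes how strongly neighbouring vertices are tied together.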

Pixel-3DM Development: Pixel-3DM is built by applying PCA to a large collection of registered face meshes. It captures a wider range of facial shape and expression variation than earlier 3DMMs.
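Below is a minimal sketch of a PCA-based linear 3DMM. It assumes registered meshes that share one vertex ordering, which is what the registration stage provides. A face is synthesized as S = S_mean + U (sigma * alpha), where:

- S_mean is the mean shape.
- U holds the principal directions.
- sigma holds the per-component standard deviations.
- alpha holds the model coefficients.

The summary does not state whether Pixel-3DM uses separate identity and expression bases, so the sketch uses a single combined basis. The names `build_pca_model` and `synthesize` are hypothetical.

```python
import numpy as np


def build_pca_model(meshes, n_components):
    """meshes: (M, V, 3) registered meshes sharing one topology.

    Returns the mean shape (V*3,), an orthonormal basis (V*3, k),
    and the standard deviation of the data along each basis vector (k,).
    """
    m = meshes.shape[0]
    x = meshes.reshape(m, -1)  # one flattened shape vector per sample
    mean = x.mean(axis=0)
    # SVD of the centred data: rows of vt are the principal directions.
    _, s, vt = np.linalg.svd(x - mean, full_matrices=False)
    k = min(n_components, len(s))
    basis = vt[:k].T
    sigma = s[:k] / np.sqrt(max(m - 1, 1))
    return mean, basis, sigma


def synthesize(mean, basis, sigma, coeffs):
    """Shape = mean + basis @ (sigma * coeffs), returned as (V, 3)."""
    return (mean + basis @ (sigma * coeffs)).reshape(-1, 3)
```

Scaling coefficients by sigma means alpha drawn from a standard normal yields plausible faces. This convention is common for 3DMMs but is assumed here rather than taken from the paper.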

Figure 4: Overview of Pixel-3DM.

Experiments

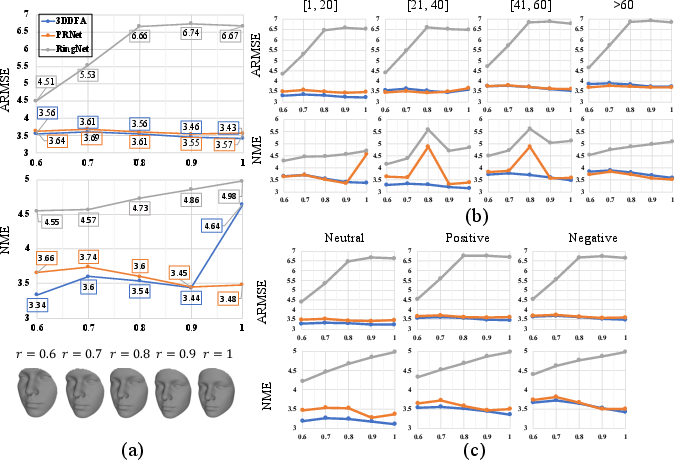

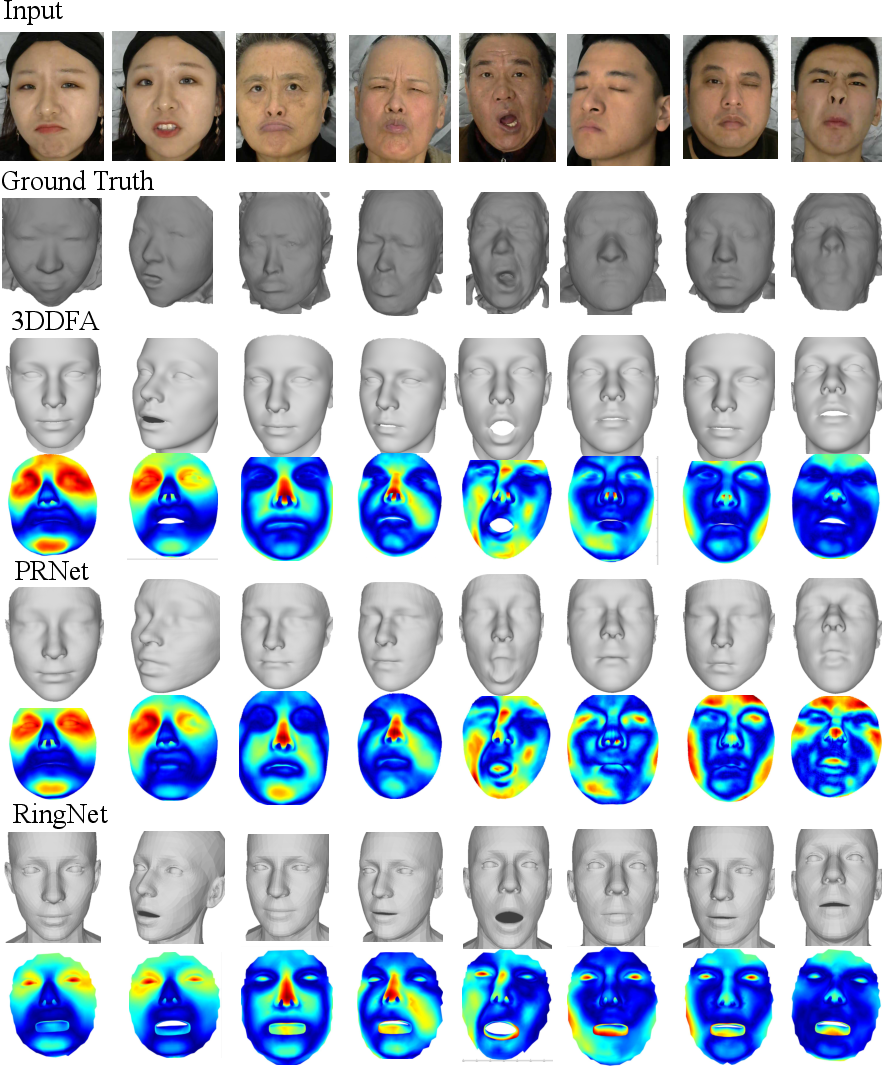

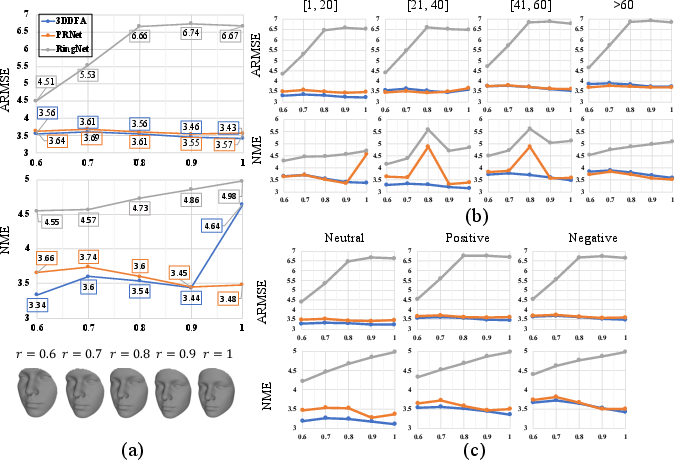

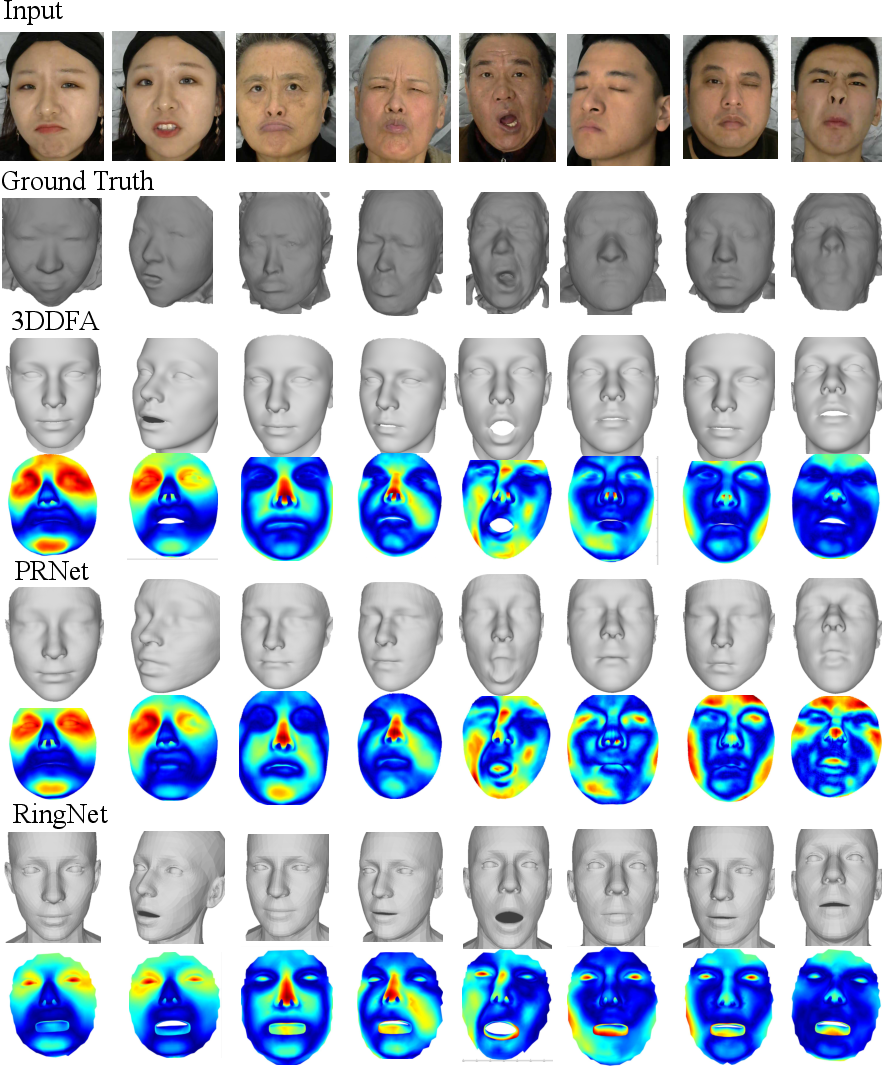

Benchmarks: Evaluating 3DDFA, PRNet, and RingNet on Pixel-Face exposes the limitations of synthetic training datasets compared with Pixel-Face's real-world data.

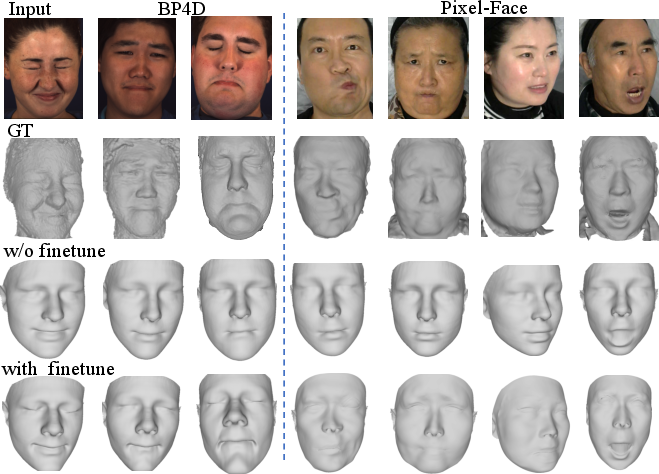

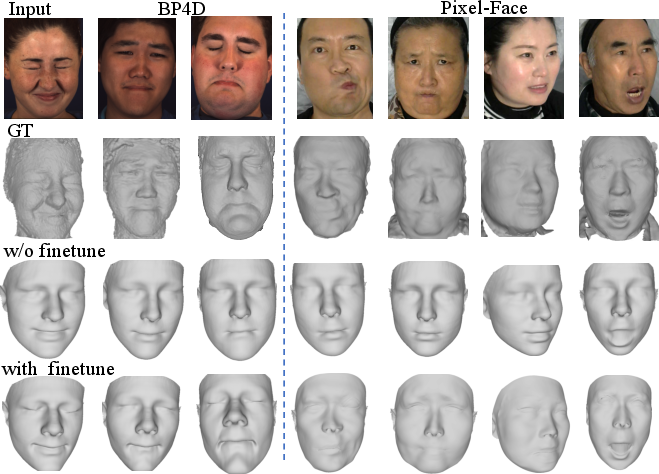

Performance Improvement: Finetuning models on Pixel-Face markedly improves reconstruction accuracy. It lowers NME and ARMSE both on Pixel-Face and on other datasets such as BP4D.

Figure 5: ARMSE and NME scores of 3DDFA, PRNet, and RingNet.
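The summary reports NME and ARMSE without defining them. The sketch below uses two common formulations, both assumptions rather than the paper's exact protocol:

- **NME:** the mean landmark L2 error divided by sqrt(w * h) of the ground-truth landmarks' 2D bounding box.
- **ARMSE:** the average of the two directed nearest-neighbour RMSEs between the predicted and ground-truth point sets.

Evaluations often also crop the face region and rigidly align the prediction first; that step is omitted here. Lower is better for both metrics, and the function names are hypothetical.

```python
import numpy as np


def nme(pred_lmk, gt_lmk):
    """Mean landmark L2 error normalised by sqrt(w * h) of the GT 2D bounding box."""
    err = np.linalg.norm(pred_lmk - gt_lmk, axis=1).mean()
    w, h = gt_lmk[:, :2].max(axis=0) - gt_lmk[:, :2].min(axis=0)
    return err / np.sqrt(w * h)


def _nn_rmse(a, b):
    """RMSE of the distances from each point in a to its nearest point in b."""
    d2 = ((a[:, None, :] - b[None, :, :]) ** 2).sum(-1).min(axis=1)
    return np.sqrt(d2.mean())


def armse(pred_pts, gt_pts):
    """Symmetric error: average of the two directed nearest-neighbour RMSEs."""
    return 0.5 * (_nn_rmse(pred_pts, gt_pts) + _nn_rmse(gt_pts, pred_pts))
```

Averaging both directions penalizes a prediction that covers only part of the ground-truth surface, which a single directed RMSE would miss.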

Conclusion

Pixel-Face represents a pivotal step toward robust 3D face analysis models. Its extensive, high-fidelity data supports accurate modeling, richer expression representation, and better generalization. As a publicly available dataset, it promises to advance 3D face reconstruction and its applications in facial animation and recognition.

Figure 6: Qualitative comparison of 3DDFA, PRNet, and RingNet performance.

Figure 7: PRNet performance with and without Pixel-Face finetuning.