- The paper presents the APR model, which combines active perception with actively controlled camera viewpoints and outperforms passive setups on robotic grasping tasks.

- It uses a bimodal encoder to fuse visual and proprioceptive data and a Generative Query Network (GQN) for multi-view representation learning, supporting robust 6-DoF control.

- Experimental results demonstrate an 85% success rate and highlight the importance of log-polar sampling and representation learning in enhancing efficiency.

Active Perception and Representation for Robotic Manipulation

The paper "Active Perception and Representation for Robotic Manipulation" explores the use of active perception in robotic systems to enhance manipulation tasks. By drawing inspiration from the human visual-motor system, it introduces a novel framework—termed the Active Perception and Representation (APR) model—which leverages actively controlled viewpoints to improve the effectiveness of robotic grasping tasks.

Introduction to Active Perception

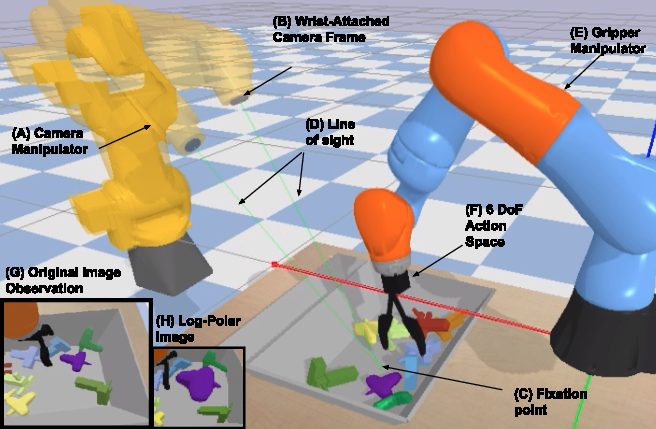

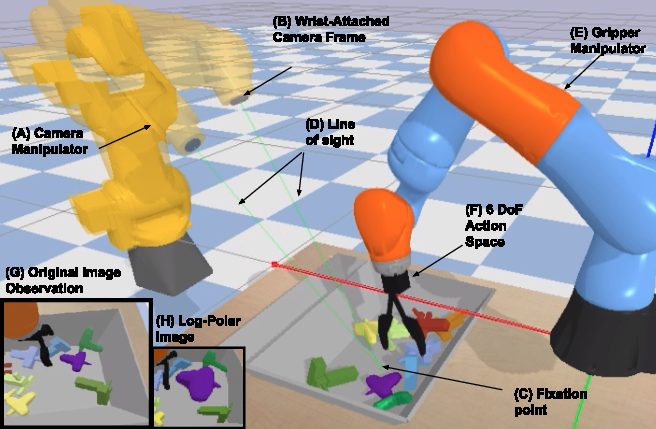

Active perception differs from passive visual setups in that the agent interacts with its environment by deliberately changing its camera viewpoint. The APR model draws on principles of biological vision, such as foveation and log-polar sampling, to obtain high resolution on task-relevant regions while retaining context from the periphery. In robotics, this translates into improved localization, representation learning, and action planning in complex environments.
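Changing the viewpoint to fixate a target can be illustrated with a standard look-at computation: given a camera position and a fixation point, build a camera orientation whose optical axis passes through that point. The sketch below is an illustrative assumption, not the paper's method; `look_at` is a hypothetical helper, the z-up world frame and OpenCV-style camera axes are chosen for convenience, and the paper's fixation policy may parameterize camera motion differently.

```python
import numpy as np


def look_at(camera_pos, fixation_point, world_up=(0.0, 0.0, 1.0)):
    """Camera-to-world rotation that aims the optical axis at a fixation point.

    Hypothetical helper. Columns follow the OpenCV camera convention:
    x = right, y = down, z = forward (the viewing direction).
    """
    camera_pos = np.asarray(camera_pos, dtype=float)
    forward = np.asarray(fixation_point, dtype=float) - camera_pos
    dist = np.linalg.norm(forward)
    if dist == 0.0:
        raise ValueError("camera and fixation point coincide")
    forward /= dist
    right = np.cross(forward, np.asarray(world_up, dtype=float))
    if np.linalg.norm(right) < 1e-8:
        raise ValueError("viewing direction is parallel to world_up")
    right /= np.linalg.norm(right)
    down = np.cross(forward, right)  # completes a right-handed orthonormal frame
    return np.stack([right, down, forward], axis=1)


# Re-aim a camera mounted at (0.5, 0.0, 0.6) at an object at the origin.
camera = np.array([0.5, 0.0, 0.6])
R = look_at(camera, [0.0, 0.0, 0.0])
# Expressed in camera coordinates, the fixation point lies on the optical axis.
p_cam = R.T @ (np.zeros(3) - camera)
```

Because the returned matrix is a proper rotation, `p_cam` has zero x and y components: the object appears at the image center, which is where a foveated sensor has its highest resolution.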

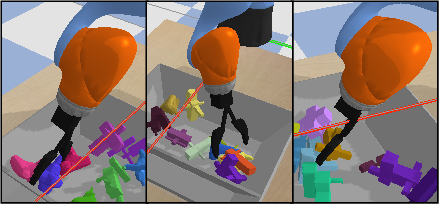

Figure 1: Our active perception setup, showing the interaction between two manipulators (A, E).

Methodology and Architecture

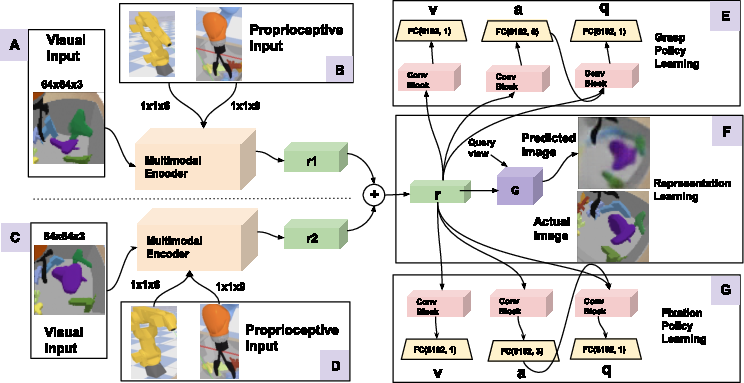

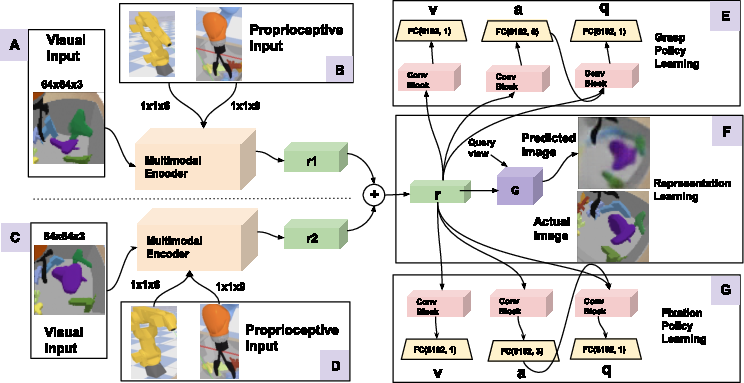

The APR model takes bimodal input, visual and proprioceptive, and passes it through a multimodal encoder that produces a scene representation. The grasp and fixation policies use this representation to select actions in a 6-DoF space. A Generative Query Network (GQN) provides multi-view representation learning, which makes perception and decision-making more robust.
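The data flow described above can be sketched in NumPy under several assumptions: the layer sizes, the single-layer encoder, the tanh-bounded action head, and the use of summation to combine views (as in the original GQN, which sums per-view representations) are illustrative choices rather than the paper's architecture. `encode_view`, `scene_representation`, and `grasp_policy` are hypothetical names, and the fixation policy and the GQN rendering decoder are omitted.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: a 64-d image feature vector (e.g. from a CNN) and a
# 7-d proprioceptive vector (e.g. camera pose and gripper state).
IMG_FEAT, PROPRIO, REPR_DIM, ACTION_DIM = 64, 7, 32, 6

# Random weights stand in for trained layers.
W_img = rng.normal(scale=0.1, size=(IMG_FEAT, REPR_DIM))
W_prop = rng.normal(scale=0.1, size=(PROPRIO, REPR_DIM))
b_enc = np.zeros(REPR_DIM)
W_pol = rng.normal(scale=0.1, size=(REPR_DIM, ACTION_DIM))


def encode_view(image_features, proprio):
    """Fuse one view's visual features with proprioception (ReLU layer).

    Adding two linear projections is equivalent to a single linear layer
    applied to the concatenation [image_features, proprio].
    """
    return np.maximum(0.0, image_features @ W_img + proprio @ W_prop + b_enc)


def scene_representation(views):
    """GQN-style aggregation: sum per-view representations (order-invariant)."""
    return np.sum([encode_view(f, p) for f, p in views], axis=0)


def grasp_policy(r):
    """Map the scene representation to a bounded 6-DoF action
    (dx, dy, dz, droll, dpitch, dyaw), each component in [-1, 1]."""
    return np.tanh(r @ W_pol)


# Three hypothetical views of the same scene.
views = [(rng.normal(size=IMG_FEAT), rng.normal(size=PROPRIO)) for _ in range(3)]
r = scene_representation(views)
action = grasp_policy(r)
```

Summing makes the representation independent of the order in which views arrive and lets any number of views be folded in, which suits an agent that keeps moving its camera.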

Figure 2: The APR Model, illustrating the architecture of the multimodal encoder and policy networks.

Log-polar sampling focuses perception on relevant objects and improves data efficiency: it reduces the number of pixels the model must process while preserving fine detail at the center of the image.
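A minimal NumPy sketch of log-polar resampling, assuming a grayscale image and a fixation point at `center`. `log_polar_sample`, the 32×64 output grid, `r_min`, and nearest-neighbour interpolation are illustrative choices, not the paper's settings. With these defaults, a 128×128 image (16,384 pixels) is reduced to 2,048 samples, and half of them fall within 10 pixels of the fixation point.

```python
import numpy as np


def log_polar_sample(image, center, out_shape=(32, 64), r_min=1.0):
    """Resample a 2-D image onto a log-polar grid around `center` (row, col).

    Output rows index log-spaced radii and columns index angles, so pixels
    near the fixation point are sampled densely and the periphery sparsely.
    Nearest-neighbour lookup keeps the sketch short.
    """
    h, w = image.shape[:2]
    cy, cx = center
    n_rho, n_theta = out_shape
    # Largest radius: distance from the center to the farthest image corner.
    r_max = np.hypot(max(cy, h - 1 - cy), max(cx, w - 1 - cx))
    radii = np.geomspace(r_min, r_max, n_rho)  # log-spaced radii
    thetas = np.linspace(0.0, 2 * np.pi, n_theta, endpoint=False)
    rr, tt = np.meshgrid(radii, thetas, indexing="ij")
    ys = np.clip(np.rint(cy + rr * np.sin(tt)).astype(int), 0, h - 1)
    xs = np.clip(np.rint(cx + rr * np.cos(tt)).astype(int), 0, w - 1)
    return image[ys, xs]


# A 128x128 test image with a bright 5x5 patch at the fixation point.
img = np.zeros((128, 128))
img[62:67, 62:67] = 1.0
lp = log_polar_sample(img, center=(64, 64))
```

The innermost ring of `lp` lies entirely inside the bright patch, while the outermost ring samples only the dark periphery; a uniform grid with the same 2,048 samples would spend most of them on that periphery.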

Experimental Evaluation

Active versus Passive Perception

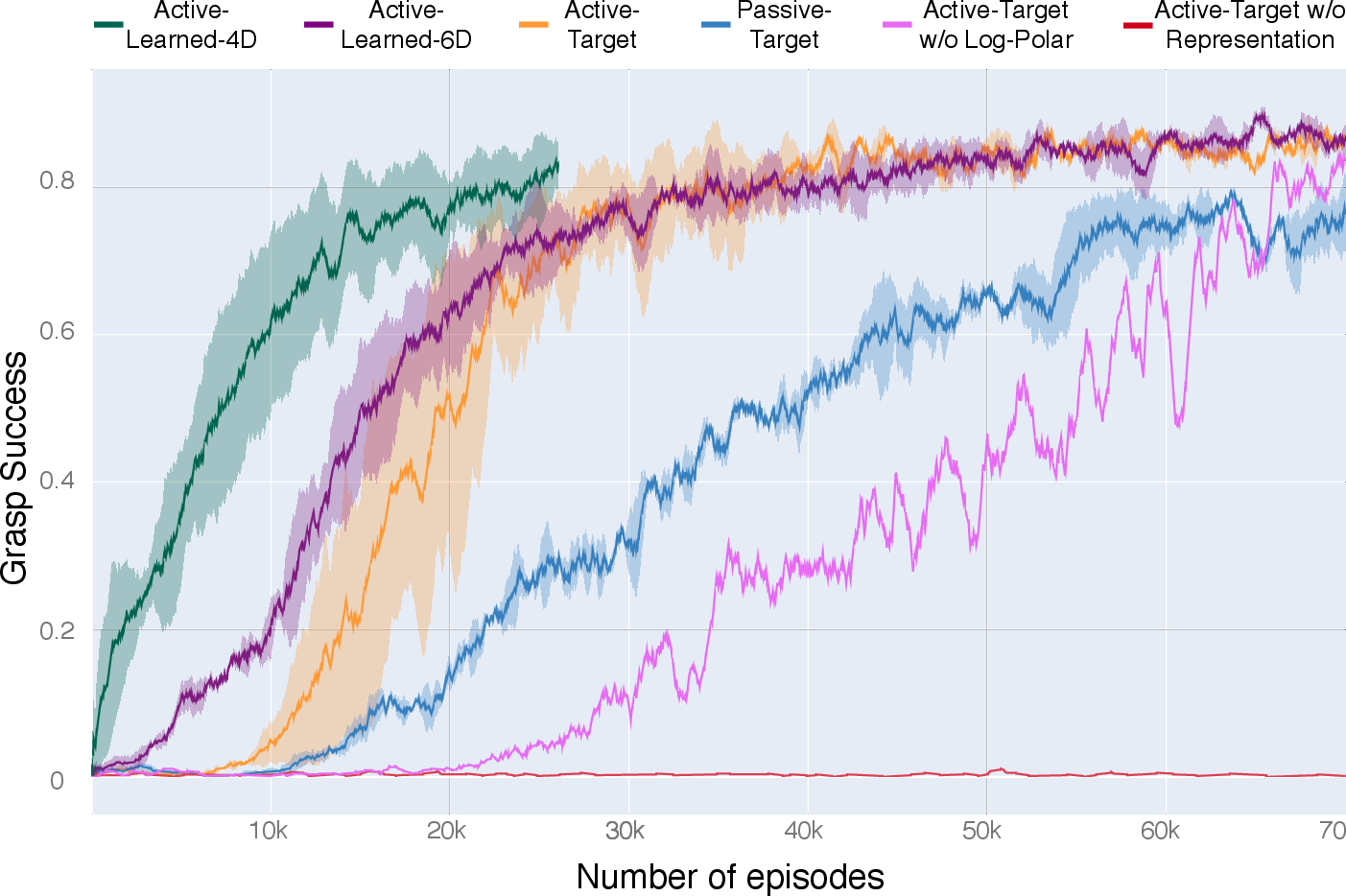

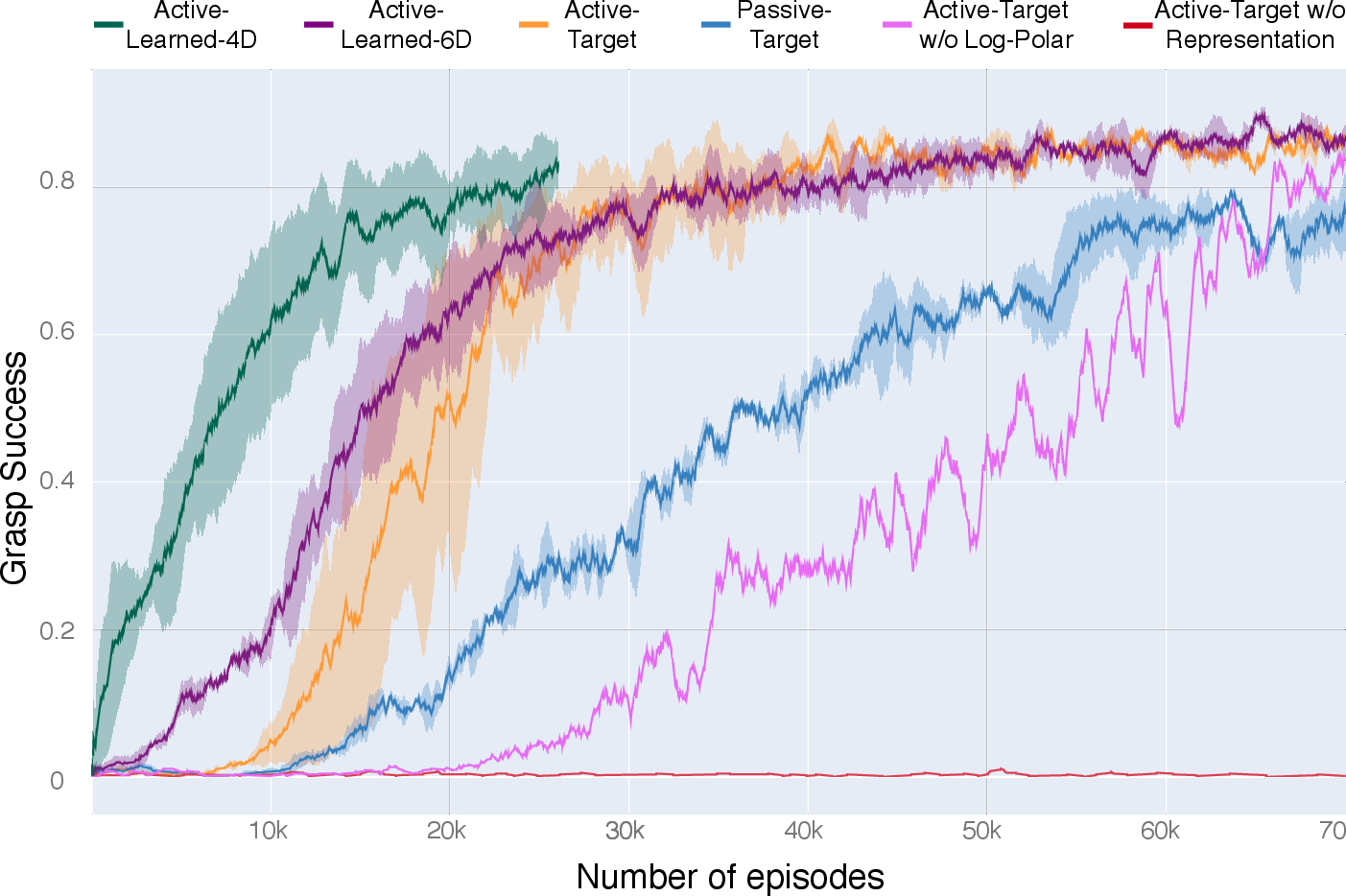

In experiments, the active model outperformed its passive counterparts on target-specific grasping, achieving higher success rates. The active models were 8% more efficient, an advantage attributed to their ability to adjust the viewpoint dynamically. This result underscores the value of active perception for targeted object manipulation.

Figure 3: Comparing visual inputs of active and passive models during a multi-step episode.

Learning Dynamics

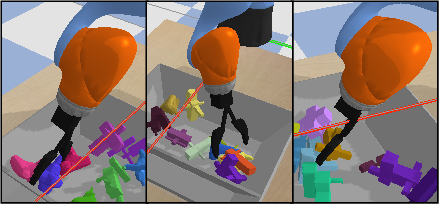

The full APR implementation, in which the robot learns both where to look and how to act, learns more efficiently and requires fewer samples. Notably, the model reaches an 85% success rate on a 6-DoF grasping task after 70,000 grasps, a substantial improvement over existing static approaches.
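Learning curves such as those in Figure 4 are commonly drawn as a success rate averaged over recent attempts. A minimal sketch, assuming one binary outcome per grasp attempt; `rolling_success_rate` and its default window of 1,000 attempts are hypothetical, and the paper's actual smoothing may differ. Under this convention, an 85% success rate corresponds to 850 successes in a window of 1,000 attempts.

```python
import numpy as np


def rolling_success_rate(outcomes, window=1000):
    """Success rate over a sliding window of grasp attempts (1 = success, 0 = failure)."""
    outcomes = np.asarray(outcomes, dtype=float)
    if outcomes.size < window:
        raise ValueError("need at least `window` outcomes")
    # Prefix sums make each window's total an O(1) difference.
    csum = np.concatenate(([0.0], np.cumsum(outcomes)))
    return (csum[window:] - csum[:-window]) / window


toy = rolling_success_rate([0, 1, 1, 1], window=2)  # rates: 0.5, 1.0, 1.0
```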

Figure 4: Learning curves for various experimental conditions, indicating the benefits of active perception.

Ablation Studies

Ablation studies examine the effects of log-polar images and representation learning and show that both play a critical role in grasp efficiency. Models lacking these features showed a significant drop in performance, confirming that they are integral to the APR model's success.

Discussion and Future Implications

The results confirm the utility of the biologically inspired active perception model for robotic manipulation: it is sample-efficient and benefits from learning multi-view representations. The authors present the framework as a scalable approach for other robotic tasks that require goal-directed perception.

Figure 5: Examples of pre-grasp orienting behaviors due to the policy's 6-DoF action space.

Future work could extend APR beyond grasping to other dexterous tasks, including real-world perception and possibly force sensing for collision avoidance. The paper also advocates further integration of biological perception mechanisms into robotics, opening prospects for cross-disciplinary research.

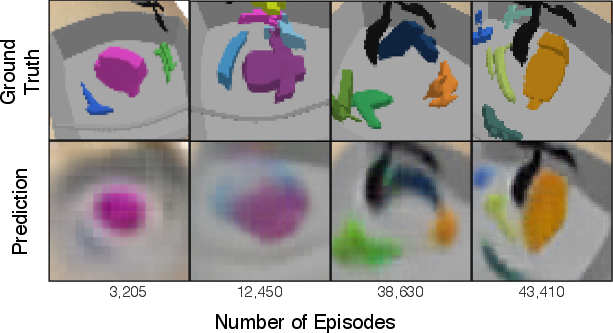

Figure 6: Scene renderings from query views at different snapshots during active model training.

Conclusion

By simulating active perception mechanisms akin to biological systems, the APR model paves the way for more intelligent, efficient robotic agents. This research elucidates the benefits of bridging perception and action through advanced neural architectures within the field of reinforcement learning, pushing towards the design of autonomous systems with enhanced environmental understanding and interaction capabilities.