- The paper introduces the Temporal Fusion Transformer, an innovative model integrating recurrent processing and self-attention to capture local and long-term dependencies.

- It employs gating mechanisms, variable selection networks, and static covariate encoders to enhance performance and filter noisy inputs.

- Experiments on diverse real-world datasets show an average of 7% lower P50 and 9% lower P90 losses than the next-best model, and case studies show how the model's components can be used for interpretation.

The paper introduces the Temporal Fusion Transformer (TFT), a novel attention-based architecture designed for multi-horizon time series forecasting. TFT distinguishes itself by combining high forecasting performance with interpretable insights into temporal dynamics. The architecture uses recurrent layers for local processing and self-attention layers for capturing long-term dependencies, along with specialized components that select relevant features and gating layers that suppress unnecessary parts of the network.

Addressing the Challenges of Multi-horizon Forecasting

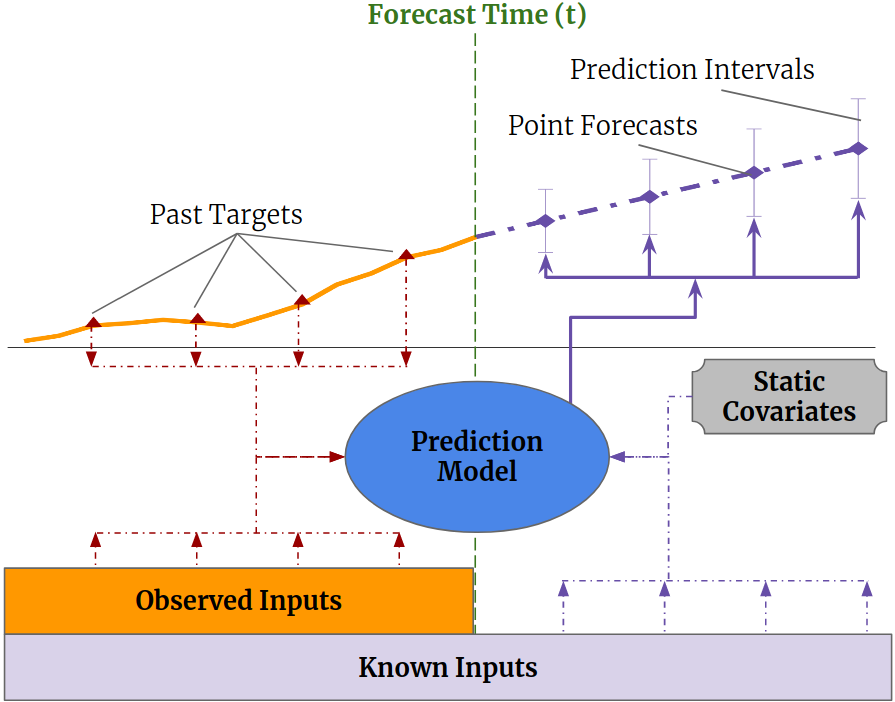

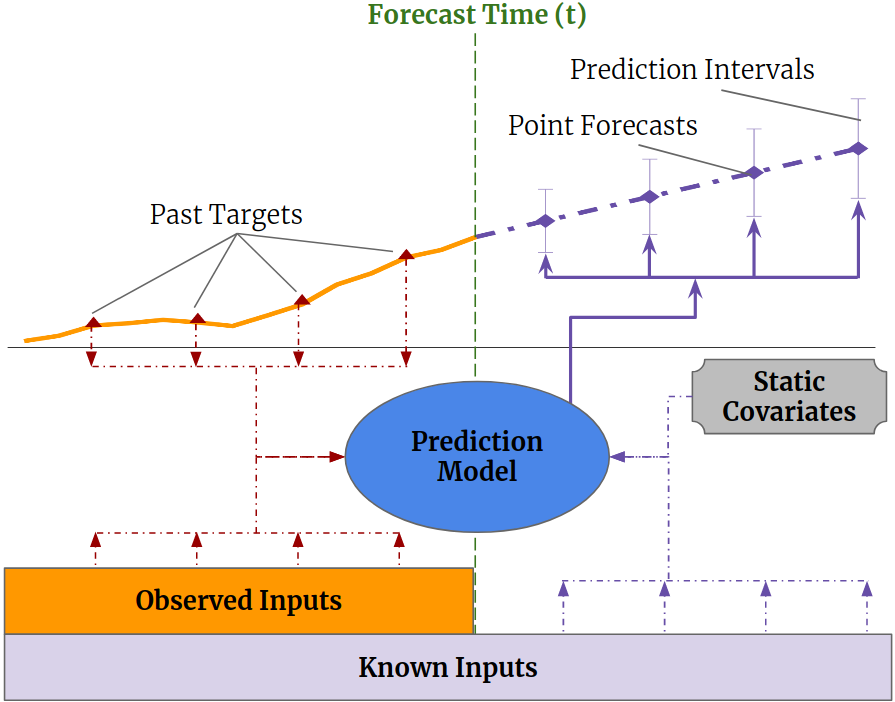

Multi-horizon forecasting is essential for optimizing actions across multiple future time steps, such as retailers managing inventory or clinicians planning patient treatment. Practical applications often involve diverse data sources, including static covariates, known future inputs, and exogenous time series observed only in the past (Figure 1). This heterogeneity presents significant challenges for traditional forecasting methods and many existing deep neural networks (DNNs).

Figure 1: Illustration of multi-horizon forecasting with static covariates, past-observed inputs, and a priori known future time-dependent inputs.
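The three input types in Figure 1 differ in how far into the future they are available. The sketch below slices one training example at forecast time t, assuming a lookback of k steps and a horizon of tau_max steps; the names `ForecastWindow` and `make_window` and the list-of-lists layout are hypothetical illustrations, not the paper's data pipeline.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class ForecastWindow:
    """One example for a forecast made at time t (hypothetical layout)."""
    static: List[float]               # time-invariant covariates, e.g. store location
    past_observed: List[List[float]]  # only known up to t, e.g. past target values
    known_inputs: List[List[float]]   # known in advance up to t + tau_max, e.g. day-of-week
    targets: List[float]              # values to forecast at t+1 .. t+tau_max


def make_window(static: Sequence[float],
                observed: Sequence[Sequence[float]],
                known: Sequence[Sequence[float]],
                y: Sequence[float],
                t: int, k: int, tau_max: int) -> ForecastWindow:
    """Slice full series into one window; lookback is t-k..t, horizon is t+1..t+tau_max."""
    if t - k < 0 or t + tau_max >= len(y):
        raise ValueError("window falls outside the series")
    return ForecastWindow(
        static=list(static),
        # Past-observed inputs stop at the forecast time t.
        past_observed=[list(v) for v in observed[t - k: t + 1]],
        # Known inputs extend across the whole forecast horizon.
        known_inputs=[list(v) for v in known[t - k: t + tau_max + 1]],
        targets=list(y[t + 1: t + tau_max + 1]),
    )


# Toy usage: 10 steps, the past target doubles as an observed input.
y = [float(i) for i in range(10)]
observed = [[v] for v in y]
known = [[float(i % 7)] for i in range(10)]  # day-of-week style feature
w = make_window([1.0], observed, known, y, t=5, k=2, tau_max=3)
```

The asymmetry in slice lengths (3 observed steps versus 6 known steps here) is exactly the heterogeneity that models assuming all inputs are known in advance cannot represent.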

Many current DNN models do not adequately address this heterogeneity: they either assume that all exogenous inputs are known in advance or neglect important static covariates. Moreover, many architectures operate as "black boxes," making it difficult to understand how predictions are derived. The paper argues that designing architectures around the specific characteristics of the data, as earlier work did with Clockwork RNNs and Phased LSTMs, can lead to substantial performance gains.

TFT Architecture and Components

The TFT architecture (Figure 2) incorporates several novel components to address the challenges of multi-horizon forecasting and enhance interpretability:

- Gating Mechanisms: Gated Residual Networks (GRNs) provide adaptive depth and network complexity, allowing the model to apply non-linear processing only when necessary.

- Variable Selection Networks: These networks select relevant input variables at each time step, improving performance by focusing on salient features and mitigating noise.

- Static Covariate Encoders: These encoders integrate static features into the network by producing context vectors that condition temporal dynamics.

- Temporal Processing: This module employs a sequence-to-sequence layer for local processing of known and observed inputs, and an interpretable multi-head attention block for capturing long-term dependencies.

- Quantile Forecasts: The model generates prediction intervals by simultaneously predicting various percentiles, providing insights into the range of likely target values.
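The gating idea behind GRNs can be sketched in a few lines. This is a minimal pure-Python sketch following our reading of the paper's GRN: eta2 = ELU(W2 a + W3 c + b2), eta1 = W1 eta2 + b1, output = LayerNorm(a + sigmoid(W4 eta1 + b4) * (W5 eta1 + b5)), where c is an optional context vector such as a static covariate encoding. It assumes input and output share the same width d (so the residual needs no projection), uses random untrained weights, and omits dropout and the learned LayerNorm scale and shift; all class and helper names are hypothetical.

```python
import math
import random
from typing import List, Optional

Vector = List[float]
Matrix = List[List[float]]


def matvec(W: Matrix, x: Vector) -> Vector:
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def add(*vs: Vector) -> Vector:
    return [sum(xs) for xs in zip(*vs)]


def elu(x: Vector) -> Vector:
    return [xi if xi > 0 else math.exp(xi) - 1.0 for xi in x]


def sigmoid(x: Vector) -> Vector:
    return [1.0 / (1.0 + math.exp(-xi)) for xi in x]


def layer_norm(x: Vector, eps: float = 1e-5) -> Vector:
    # Plain normalization without learned gain/bias (simplifying assumption).
    mu = sum(x) / len(x)
    var = sum((xi - mu) ** 2 for xi in x) / len(x)
    return [(xi - mu) / math.sqrt(var + eps) for xi in x]


def rand_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]


class GRN:
    """Gated residual network sketch with untrained random weights."""

    def __init__(self, d: int, d_context: int = 0, seed: int = 0):
        rng = random.Random(seed)
        self.W2, self.b2 = rand_matrix(d, d, rng), [0.0] * d
        self.W3 = rand_matrix(d, d_context, rng) if d_context else None
        self.W1, self.b1 = rand_matrix(d, d, rng), [0.0] * d
        self.W4, self.b4 = rand_matrix(d, d, rng), [0.0] * d  # gate branch
        self.W5, self.b5 = rand_matrix(d, d, rng), [0.0] * d  # value branch

    def __call__(self, a: Vector, c: Optional[Vector] = None) -> Vector:
        pre = add(matvec(self.W2, a), self.b2)
        if c is not None and self.W3 is not None:
            pre = add(pre, matvec(self.W3, c))  # condition on context
        eta2 = elu(pre)
        eta1 = add(matvec(self.W1, eta2), self.b1)
        gate = sigmoid(add(matvec(self.W4, eta1), self.b4))
        value = add(matvec(self.W5, eta1), self.b5)
        glu = [g * v for g, v in zip(gate, value)]  # gated linear unit
        return layer_norm(add(a, glu))  # residual connection + normalization


grn = GRN(d=4, d_context=2, seed=0)
a, c = [1.0, -0.5, 2.0, 0.3], [0.2, -1.0]
out = grn(a, c)        # gated non-linear update of a
grn.b4 = [-50.0] * 4   # force the gate (nearly) closed
closed = grn(a, c)     # approximately layer_norm(a): non-linear branch skipped
```

Closing the gate collapses the block to a normalized identity on its input, which is the sense in which a GRN can apply non-linear processing only when the data call for it.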

Interpretable Multi-Head Attention Mechanism

To enhance explainability, the paper modifies the multi-head attention mechanism by sharing values across heads and employing additive aggregation. This approach allows different heads to learn different temporal patterns while attending to a common set of input features. The interpretable multi-head attention mechanism can be described by the following equations:

$$\tilde{H} = \tilde{A}(Q, K)\, V W_V$$

$$\tilde{A}(Q, K) = \frac{1}{m_H} \sum_{h=1}^{m_H} A\left(Q W_Q^{(h)},\, K W_K^{(h)}\right)$$

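where $A(Q, K) = \mathrm{Softmax}(Q K^\top / \sqrt{d_{attn}})$ denotes scaled dot-product attention weights, $W_Q^{(h)}$ and $W_K^{(h)}$ are head-specific query and key projections, $W_V$ is a single value projection shared by all $m_H$ heads, and $\tilde{A}(Q, K)$ is the combined attention matrix. Because every head attends over the same projected values, $\tilde{A}(Q, K)$ can be read as one set of attention weights, while the ensemble of heads still increases representation capacity compared to a single head.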
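A small pure-Python sketch of the averaged attention follows, under stated simplifications: toy hand-picked weights, no causal masking (TFT masks future positions in its decoder), and no final output projection after $\tilde{H}$. The function names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(A: Matrix, B: Matrix) -> Matrix:
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A: Matrix) -> Matrix:
    return [list(r) for r in zip(*A)]


def softmax_rows(S: Matrix) -> Matrix:
    out = []
    for row in S:
        m = max(row)  # subtract max for numerical stability
        e = [math.exp(x - m) for x in row]
        z = sum(e)
        out.append([x / z for x in e])
    return out


def attention_weights(Q: Matrix, K: Matrix) -> Matrix:
    """A(Q, K) = softmax(Q K^T / sqrt(d_attn)), applied row-wise."""
    d_attn = len(K[0])
    scores = matmul(Q, transpose(K))
    return softmax_rows([[s / math.sqrt(d_attn) for s in row] for row in scores])


def interpretable_mha(Q, K, V, WQ_heads, WK_heads, WV):
    """Average per-head attention weights, then apply them to shared values V W_V."""
    m_H = len(WQ_heads)
    per_head = [attention_weights(matmul(Q, WQ), matmul(K, WK))
                for WQ, WK in zip(WQ_heads, WK_heads)]
    A_tilde = [[sum(A_h[i][j] for A_h in per_head) / m_H for j in range(len(K))]
               for i in range(len(Q))]
    H_tilde = matmul(A_tilde, matmul(V, WV))  # shared value projection
    return H_tilde, A_tilde


# Toy usage: 3 time steps, width 2, two heads.
Q = K = V = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
WQ_heads = [[[1.0, 0.0], [0.0, 1.0]], [[0.5, -1.0], [1.0, 0.5]]]
WK_heads = [[[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.5], [-0.5, 1.0]]]
WV = [[1.0, 0.0], [0.0, 1.0]]
H, A = interpretable_mha(Q, K, V, WQ_heads, WK_heads, WV)
```

Because $W_V$ is shared, averaging the heads' outputs gives the same result as applying the averaged weights $\tilde{A}$ once, so each row of `A` directly states how much every past step contributes to the output at that position.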

Experimental Results and Analysis

The performance of TFT was evaluated on a variety of real-world datasets, including Electricity, Traffic, Retail, and Volatility datasets. The results demonstrate that TFT outperforms existing benchmarks across these datasets. Specifically, TFT achieved an average of 7% lower P50 and 9% lower P90 losses compared to the next best model, highlighting the benefits of aligning the architecture with the multi-horizon forecasting problem.
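As we understand the paper's evaluation, P50 and P90 losses are normalized quantile losses (q-Risk) at q = 0.5 and q = 0.9, built from the pinball loss QL(y, ŷ, q) = q·max(y − ŷ, 0) + (1 − q)·max(ŷ − y, 0). The sketch below assumes that definition, q-Risk = 2·Σ QL / Σ|y| over all targets and horizons, flattened into one list here; the function names are hypothetical.

```python
from typing import Sequence


def quantile_loss(y: float, y_hat: float, q: float) -> float:
    """Pinball loss: under-prediction weighted by q, over-prediction by 1 - q."""
    diff = y - y_hat
    return q * max(diff, 0.0) + (1.0 - q) * max(-diff, 0.0)


def q_risk(y_true: Sequence[float], y_pred: Sequence[float], q: float) -> float:
    """Normalized quantile loss: 2 * sum of QL divided by sum of |y|."""
    num = 2.0 * sum(quantile_loss(y, yh, q) for y, yh in zip(y_true, y_pred))
    den = sum(abs(y) for y in y_true)
    return num / den


y_true = [10.0, 20.0, 30.0]
y_pred = [12.0, 18.0, 30.0]
p50 = q_risk(y_true, y_pred, 0.5)
p90 = q_risk(y_true, y_pred, 0.9)  # penalizes under-prediction more heavily
```

The same pinball loss, summed over a set of quantiles, is what lets one network emit several percentiles at once; the "7% lower" figures compare these normalized losses against the next-best model.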

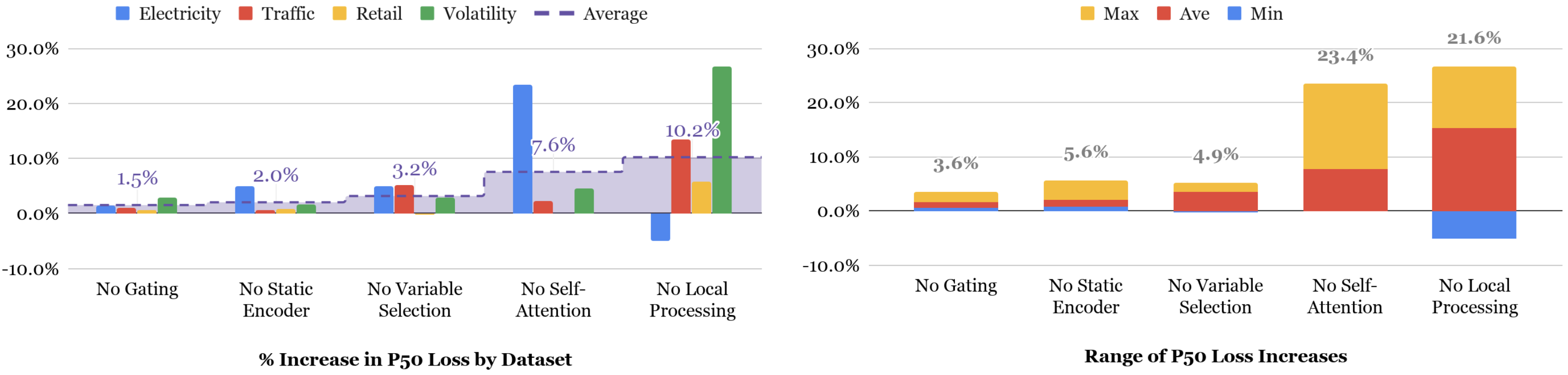

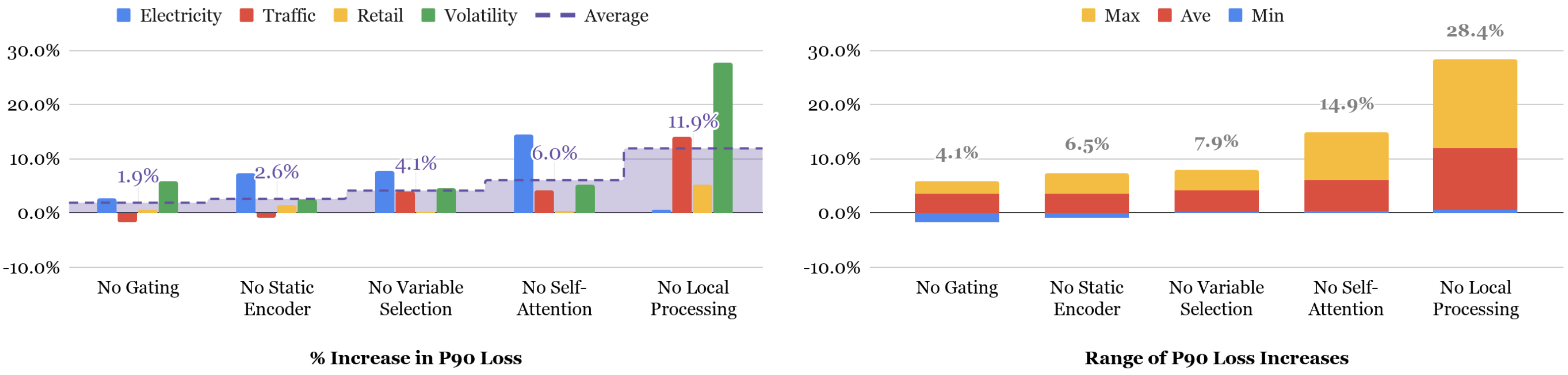

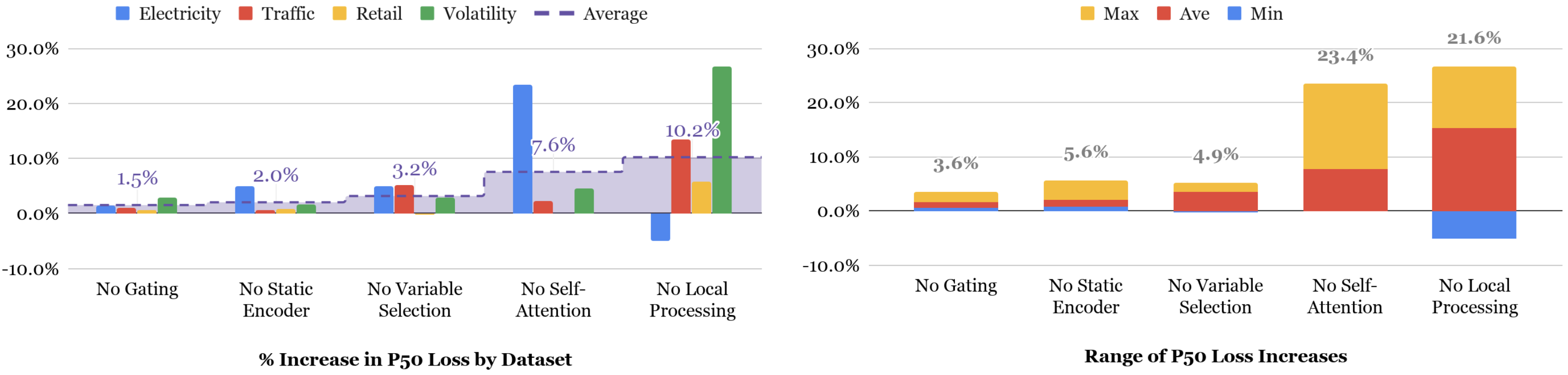

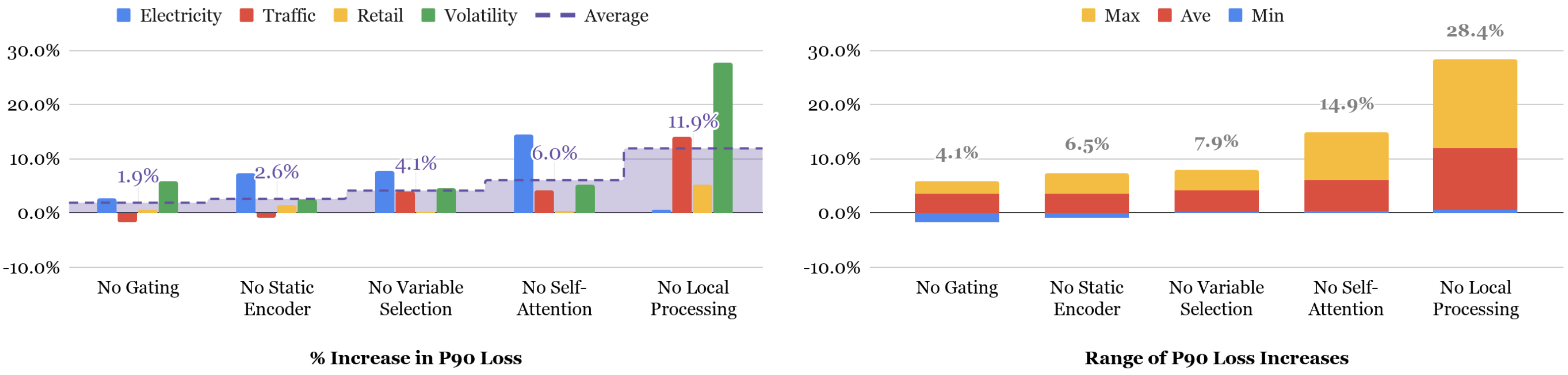

Ablation studies were conducted to assess the contribution of each architectural component (Figure 3). The results indicate that the temporal processing components (the sequence-to-sequence layer and the self-attention layer) have the largest impact on performance, followed by static covariate encoders and instance-wise variable selection networks. Gating layers also contribute to improved performance, particularly for smaller and noisier datasets.

Figure 3: Changes in P50 losses across ablation tests

Interpretability Use Cases

The paper demonstrates three practical interpretability use cases of TFT:

- Variable Importance Analysis: By analyzing variable selection weights, users can identify globally important variables for the prediction problem (Table 1).

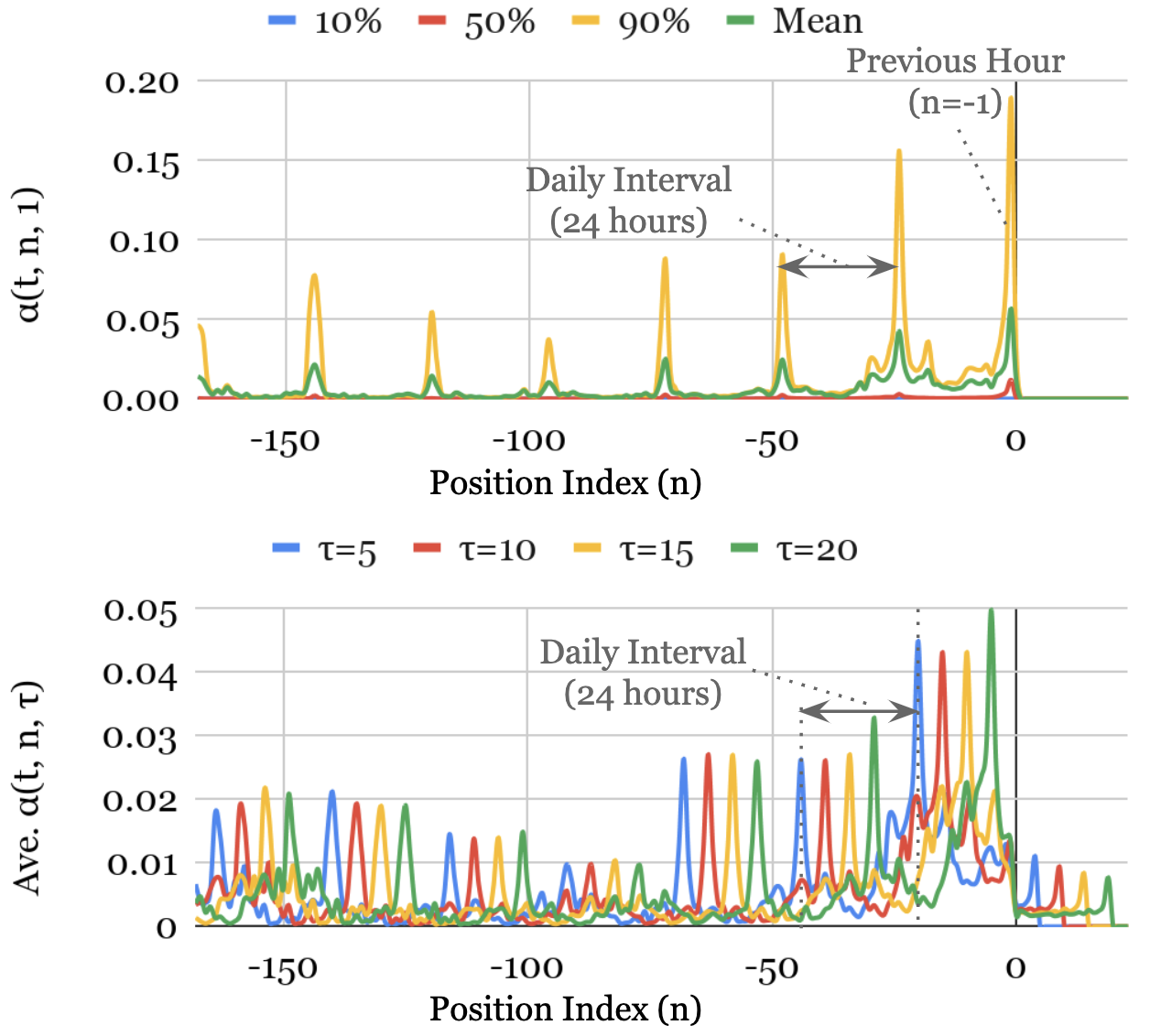

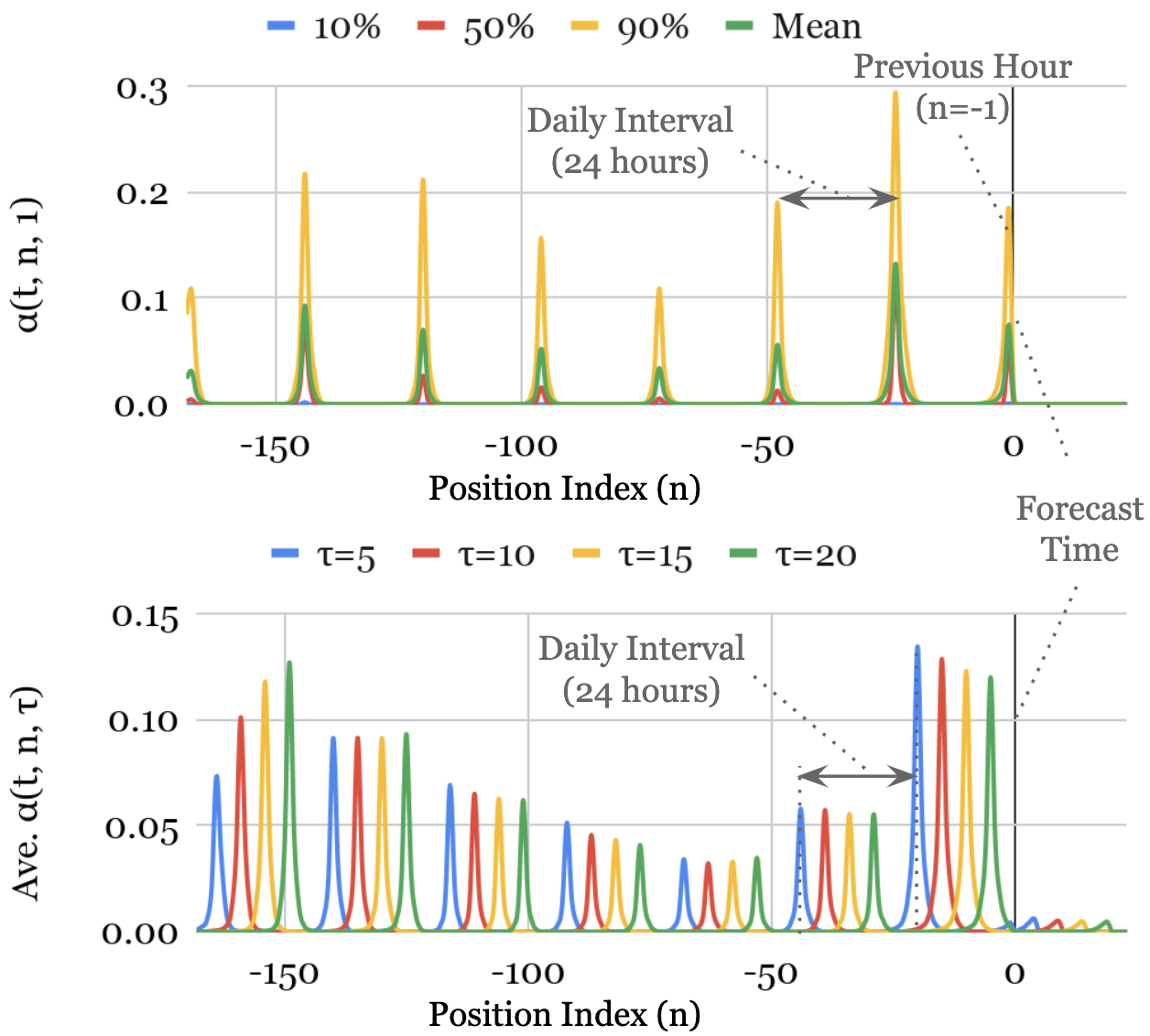

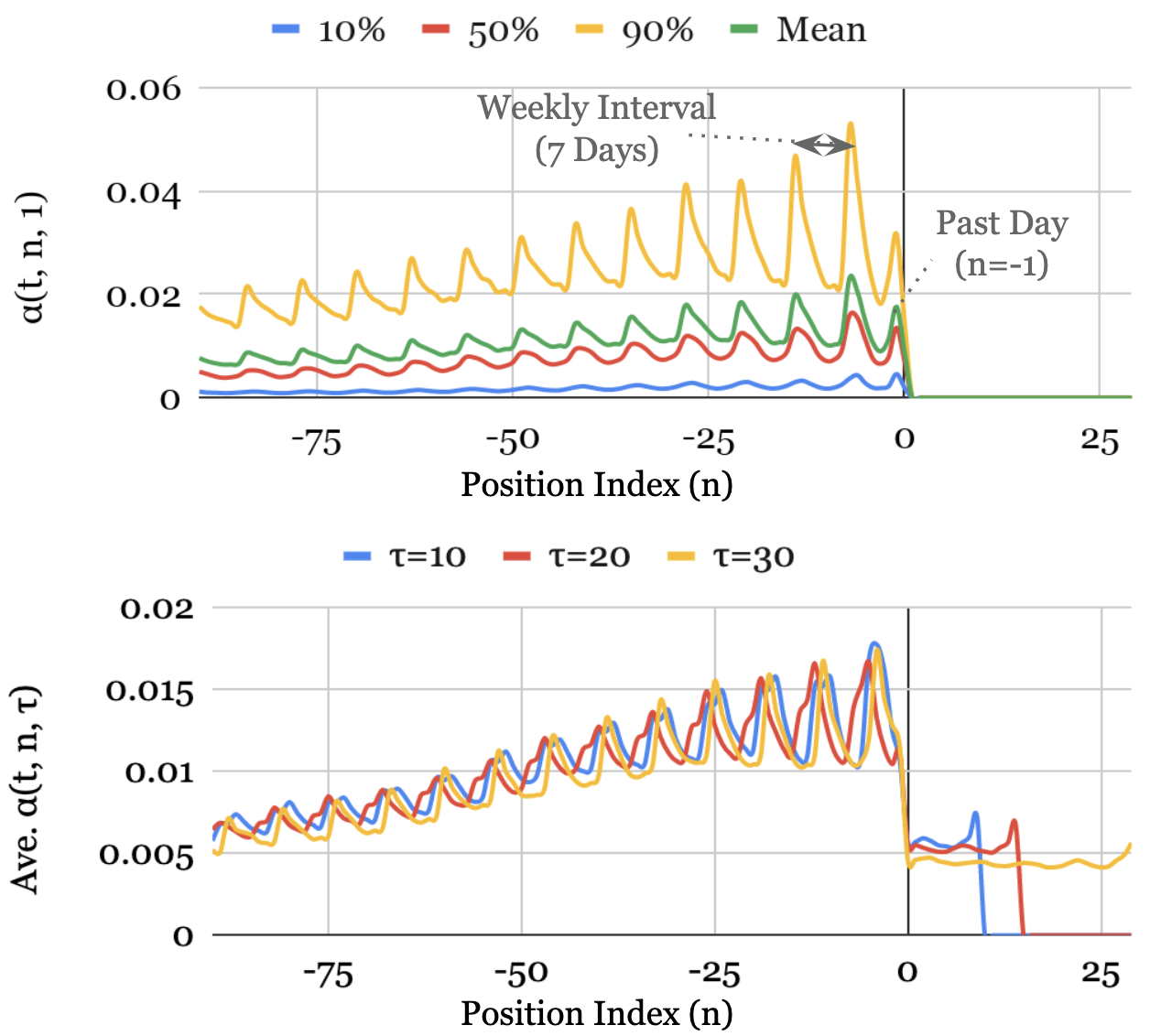

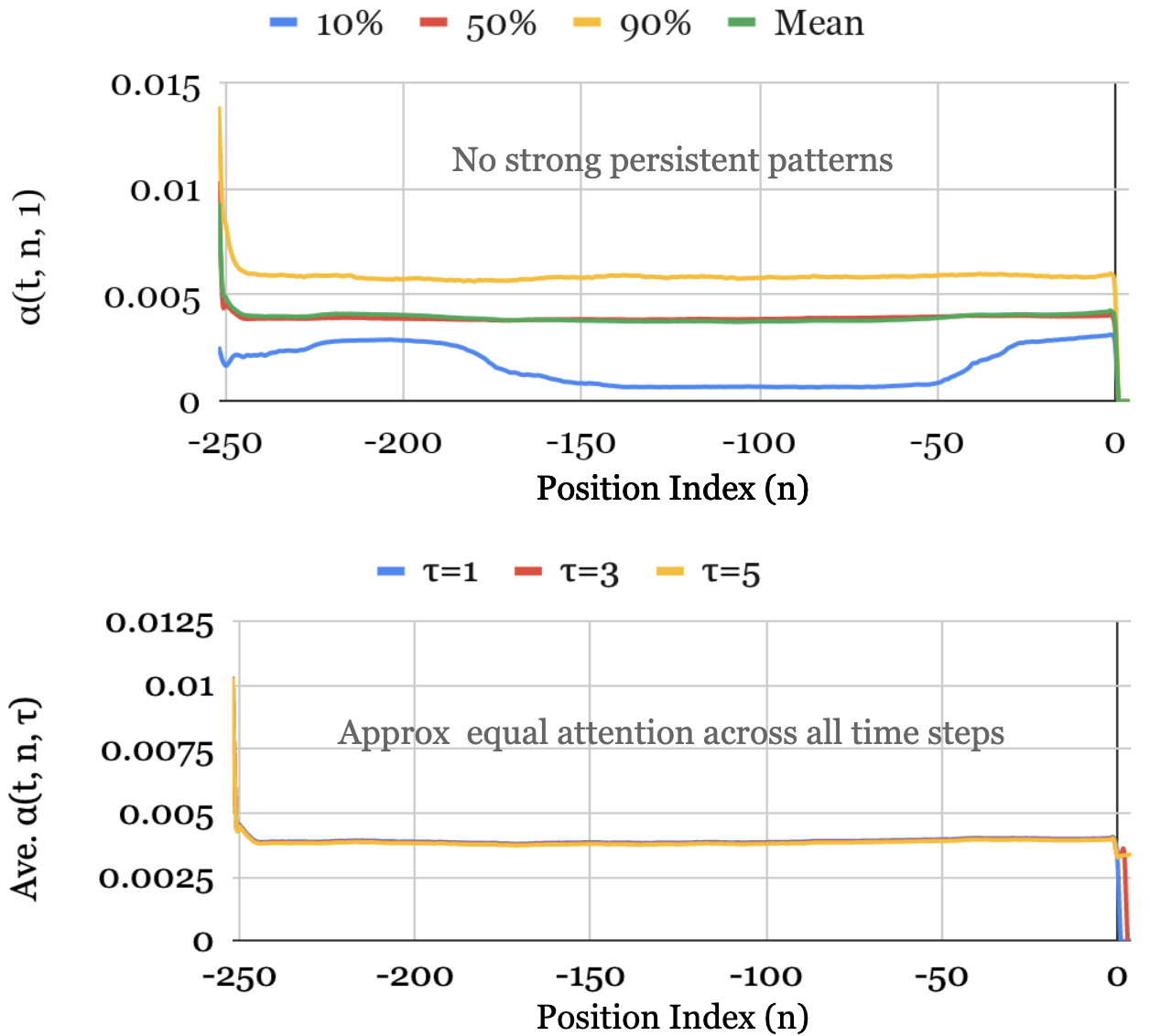

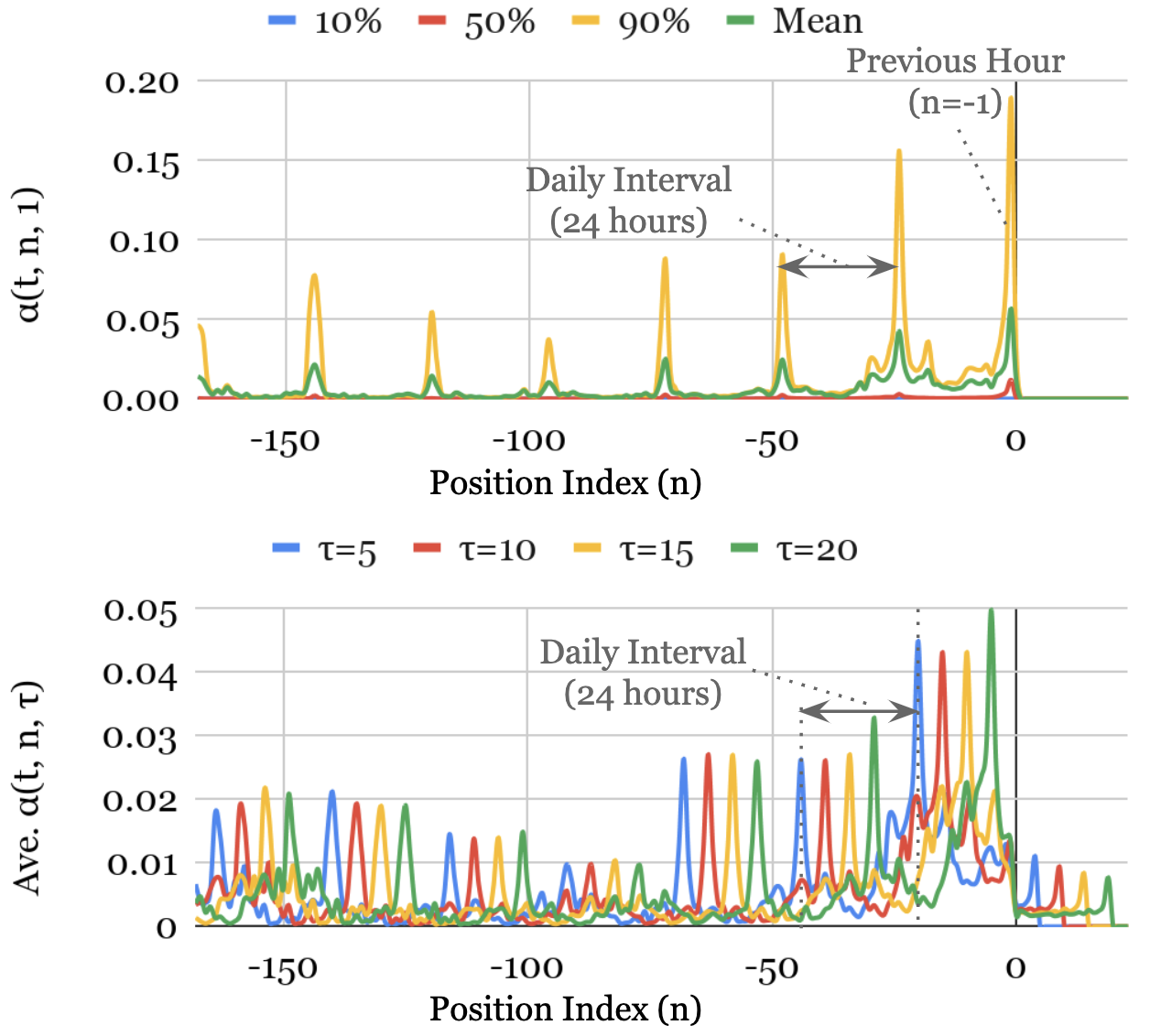

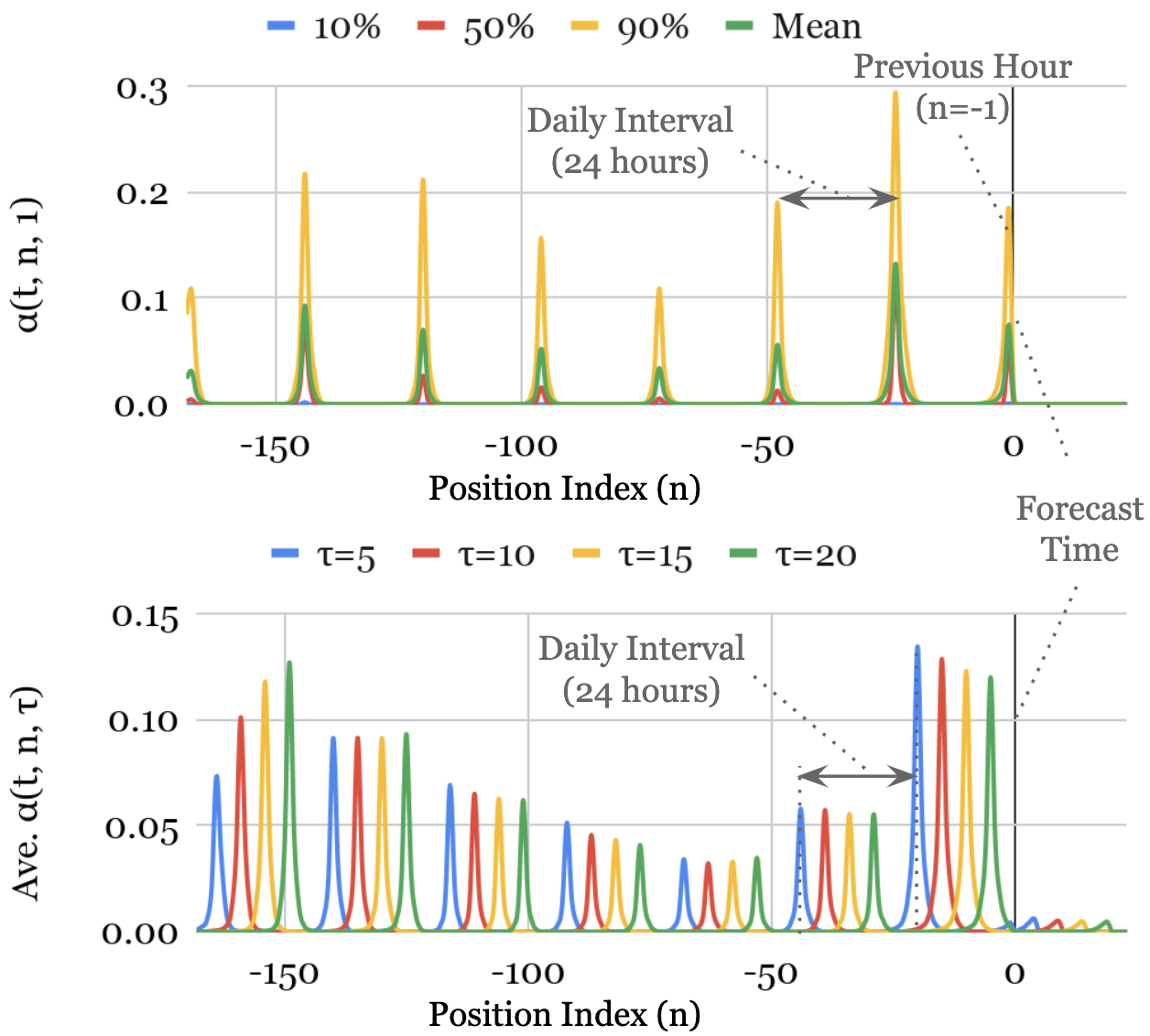

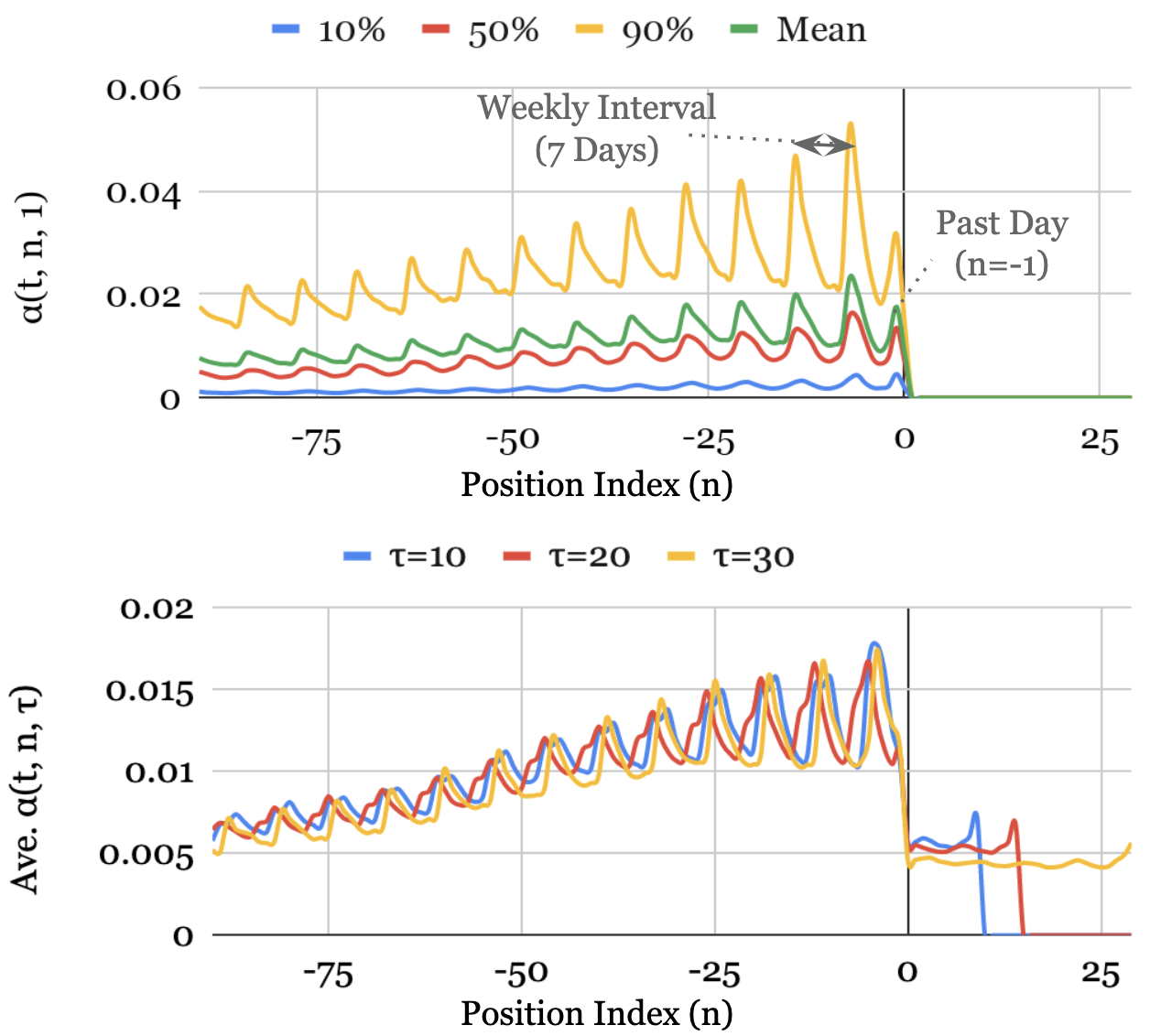

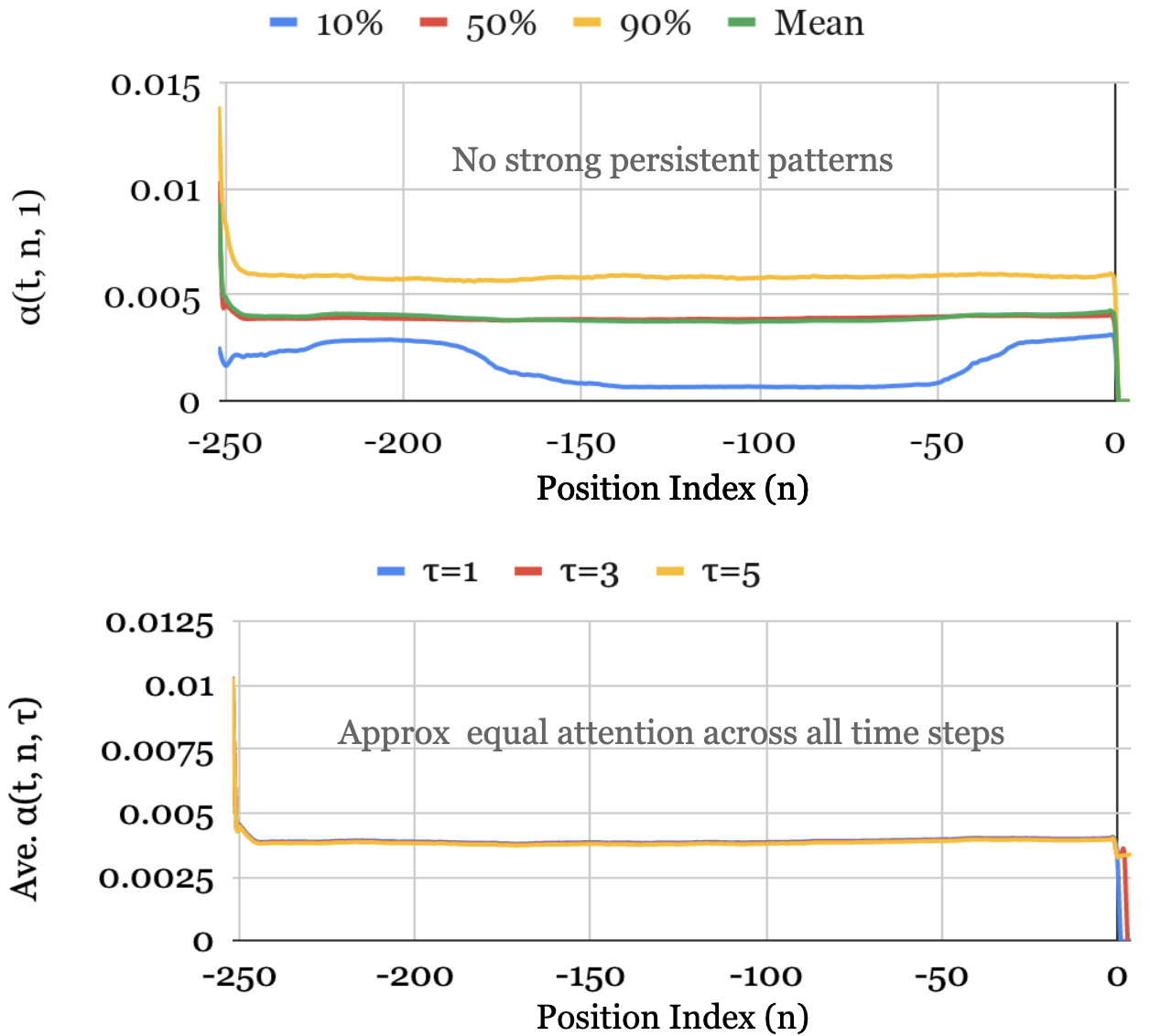

- Visualization of Persistent Temporal Patterns: Attention weights in the self-attention layer can be used to identify persistent temporal patterns, such as seasonality and lag effects (Figure 4).

- Identification of Significant Events: The model can identify regimes or events that lead to significant changes in temporal dynamics by measuring, at each point in time, the distance between the current attention vector and the average attention pattern.

Figure 4: Electricity
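The event-detection idea can be sketched as follows, assuming a Bhattacharyya-coefficient distance dist(p, q) = sqrt(1 − Σ sqrt(p_j q_j)) between attention distributions and a simple average over forecast horizons; both the averaging scheme and the function names are our assumptions, not a verified reproduction of the paper.

```python
import math
from typing import List, Sequence

Attn = List[List[List[float]]]  # attn[t][tau] = attention over lookback positions


def bhattacharyya_distance(p: Sequence[float], q: Sequence[float]) -> float:
    kappa = sum(math.sqrt(pi * qi) for pi, qi in zip(p, q))
    return math.sqrt(max(0.0, 1.0 - kappa))  # guard against rounding above 1


def regime_scores(attn: Attn) -> List[float]:
    """Distance of each time step's attention from the average pattern."""
    T, n_tau = len(attn), len(attn[0])
    mean = []
    for tau in range(n_tau):  # average pattern per horizon
        n = len(attn[0][tau])
        mean.append([sum(attn[t][tau][j] for t in range(T)) / T for j in range(n)])
    return [sum(bhattacharyya_distance(mean[tau], attn[t][tau]) for tau in range(n_tau)) / n_tau
            for t in range(T)]


# Toy usage: one horizon, two lookback positions; step 4 breaks the pattern.
attn = [[[0.5, 0.5]]] * 4 + [[[0.9, 0.1]]]
scores = regime_scores(attn)
```

Steps whose attention departs most from the typical pattern get the largest scores, which is how a shift in regime shows up without any labels.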

Conclusion

The paper presents a compelling case for the TFT architecture as a high-performance and interpretable solution for multi-horizon time series forecasting. By incorporating specialized components and an interpretable attention mechanism, TFT addresses the challenges of diverse input types and provides valuable insights into temporal dynamics. The experimental results and interpretability use cases demonstrate the practical applicability and benefits of TFT for a wide range of real-world forecasting problems.