- The paper introduces Co-DQL, a scalable MARL algorithm that combines double Q-learning, UCB exploration, and mean field approximation to enhance traffic signal coordination.

- It employs reward allocation and local state sharing, ensuring stable and robust learning across diverse traffic conditions.

- Experimental results show that Co-DQL significantly reduces vehicle delays and improves throughput compared to conventional control methods.

Multi-Agent Reinforcement Learning for Large-Scale Traffic Signal Control

Introduction and Motivation

The paper "Large-Scale Traffic Signal Control Using a Novel Multi-Agent Reinforcement Learning" (1908.03761) applies decentralized multi-agent reinforcement learning (MARL) to urban Traffic Signal Control (TSC). Conventional methods such as pre-timed and actuated control adapt poorly to non-stationary flows in highly dynamic, large-scale networks, while centralized approaches suffer from the curse of dimensionality as the joint state-action space grows. Previous distributed intelligence schemes (e.g., genetic algorithms, fuzzy logic, swarm intelligence) suffer from slow convergence and limited scalability. This work proposes Cooperative Double Q-Learning (Co-DQL), a scalable MARL formulation that combines double Q estimators, UCB-based exploration, a mean field approximation for modeling the other agents, and local reward and state sharing.

Methodology: Cooperative Double Q-Learning

Co-DQL operates on a decentralized MARL paradigm, treating each intersection as an agent controlling its local signal. The principal innovations in Co-DQL are:

- Independent Double Q-learning: Each agent $k$ maintains two Q-value estimators ($Q_k^a$, $Q_k^b$) updated in alternation to counter the value overestimation inherent to standard Q-learning, yielding more stable value estimates in non-stationary environments.

- Upper Confidence Bound (UCB) Exploration: Actions are selected using the UCB policy, ensuring balanced exploration by evaluating action visitation frequencies and Q-value optimism.

- Mean Field Approximation for Agent Interaction: To model the joint action space in large-scale multi-agent domains, Co-DQL approximates the effect of neighbors via mean field, reducing dimensionality and allowing agent policies to account for local population statistics, which is critical for learning cooperative behaviors at scale.

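- Reward Allocation and Local State Sharing: Each agent's reward combines its local outcome with those of its neighbors, the latter weighted by a discount factor, which eases credit assignment in MARL. Each agent observes the shared state $\langle s_k, \frac{1}{N_k} \sum_{i \in N(k)} s_i \rangle$, i.e. its own state $s_k$ together with the mean state of its $N_k$ neighbors in $N(k)$, which improves robustness and stability in learning.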
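The four components above can be sketched together in a few lines. This is a minimal illustration, not the paper's implementation: it assumes a tabular agent, scalar states, a standard UCB bonus of the form c·sqrt(ln N / n), and neighbor rewards that are summed before weighting. The class name `DoubleQUCBAgent`, the helper names, and all hyperparameter values are hypothetical.

```python
import math
from collections import defaultdict


class DoubleQUCBAgent:
    """Tabular double Q-learning with UCB action selection (illustrative only)."""

    def __init__(self, n_actions, lr=0.1, gamma=0.95, ucb_c=1.0):
        self.n_actions = n_actions
        self.lr = lr          # learning rate (assumed value)
        self.gamma = gamma    # return discount (assumed value)
        self.ucb_c = ucb_c    # exploration weight (assumed value)
        # Two estimators and visit counts, keyed by (shared_state, mean_action).
        self.qa = defaultdict(lambda: [0.0] * n_actions)
        self.qb = defaultdict(lambda: [0.0] * n_actions)
        self.counts = defaultdict(lambda: [0] * n_actions)
        self._update_a = True  # which estimator learns on the next update

    def select_action(self, key):
        counts = self.counts[key]
        # Try every action once before applying the confidence bonus.
        for a in range(self.n_actions):
            if counts[a] == 0:
                return a
        total = sum(counts)

        def score(a):
            mean_q = 0.5 * (self.qa[key][a] + self.qb[key][a])
            return mean_q + self.ucb_c * math.sqrt(math.log(total) / counts[a])

        return max(range(self.n_actions), key=score)

    def update(self, key, action, reward, next_key):
        self.counts[key][action] += 1
        # Alternate estimators: one picks the greedy next action, the other evaluates it.
        learn, evaluate = (self.qa, self.qb) if self._update_a else (self.qb, self.qa)
        self._update_a = not self._update_a
        next_vals = learn[next_key]
        best_next = max(range(self.n_actions), key=lambda a: next_vals[a])
        target = reward + self.gamma * evaluate[next_key][best_next]
        learn[key][action] += self.lr * (target - learn[key][action])


def mean_neighbor_action(neighbor_actions, n_actions):
    """Empirical action distribution of the neighbors (mean of one-hot actions)."""
    dist = [0.0] * n_actions
    for a in neighbor_actions:
        dist[a] += 1.0 / len(neighbor_actions)
    return tuple(round(p, 3) for p in dist)  # rounded so it can key a table


def shared_state(own_state, neighbor_states):
    """Own state paired with the mean neighbor state, as in <s_k, mean of s_i>."""
    return (own_state, sum(neighbor_states) / len(neighbor_states))


def allocated_reward(own_reward, neighbor_rewards, alpha=0.5):
    """Local reward plus discounted neighbor rewards (summation is an assumption)."""
    return own_reward + alpha * sum(neighbor_rewards)


# One interaction step for a single intersection with two neighbors.
agent = DoubleQUCBAgent(n_actions=2)
key = (shared_state(3.0, [1.0, 5.0]), mean_neighbor_action([0, 1], 2))
action = agent.select_action(key)
reward = allocated_reward(-3.0, [-1.0, -5.0], alpha=0.5)
agent.update(key, action, reward, key)
```

Keying the table on both the shared state and the mean neighbor action is what keeps the per-agent input size independent of the number of intersections in this sketch; a function approximator would take the same pair as input.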

Theoretical guarantees are provided for convergence under mild assumptions, using stochastic process analysis extending results from the mean field RL literature.
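To make concrete what such a convergence statement is about, the update can be written in a generic form that combines the standard double Q-learning rule with a mean field Q-function. The notation below is an assumption for illustration (learning rate $\eta$, discount $\gamma$, allocated reward $\hat{r}_k$, mean neighbor action $\bar{a}_k$) and is not necessarily the paper's exact formulation.

```latex
% Mean action of agent k's neighbors (actions a_i one-hot encoded), N_k = |N(k)|
\bar{a}_k = \frac{1}{N_k} \sum_{i \in N(k)} a_i

% Greedy next action chosen by estimator Q_k^a
a^{*} = \arg\max_{a} Q_k^{a}(s', a, \bar{a}'_k)

% Update of Q_k^a, with the target evaluated by Q_k^b;
% Q_k^b is updated symmetrically with the roles of a and b swapped
Q_k^{a}(s, a_k, \bar{a}_k) \leftarrow Q_k^{a}(s, a_k, \bar{a}_k)
  + \eta \left[ \hat{r}_k + \gamma \, Q_k^{b}(s', a^{*}, \bar{a}'_k)
  - Q_k^{a}(s, a_k, \bar{a}_k) \right]
```

Convergence here means that repeated application of this update drives both estimators toward a fixed point, which is why conditions such as finite state-action spaces matter.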

Experimental Setup and Simulator Design

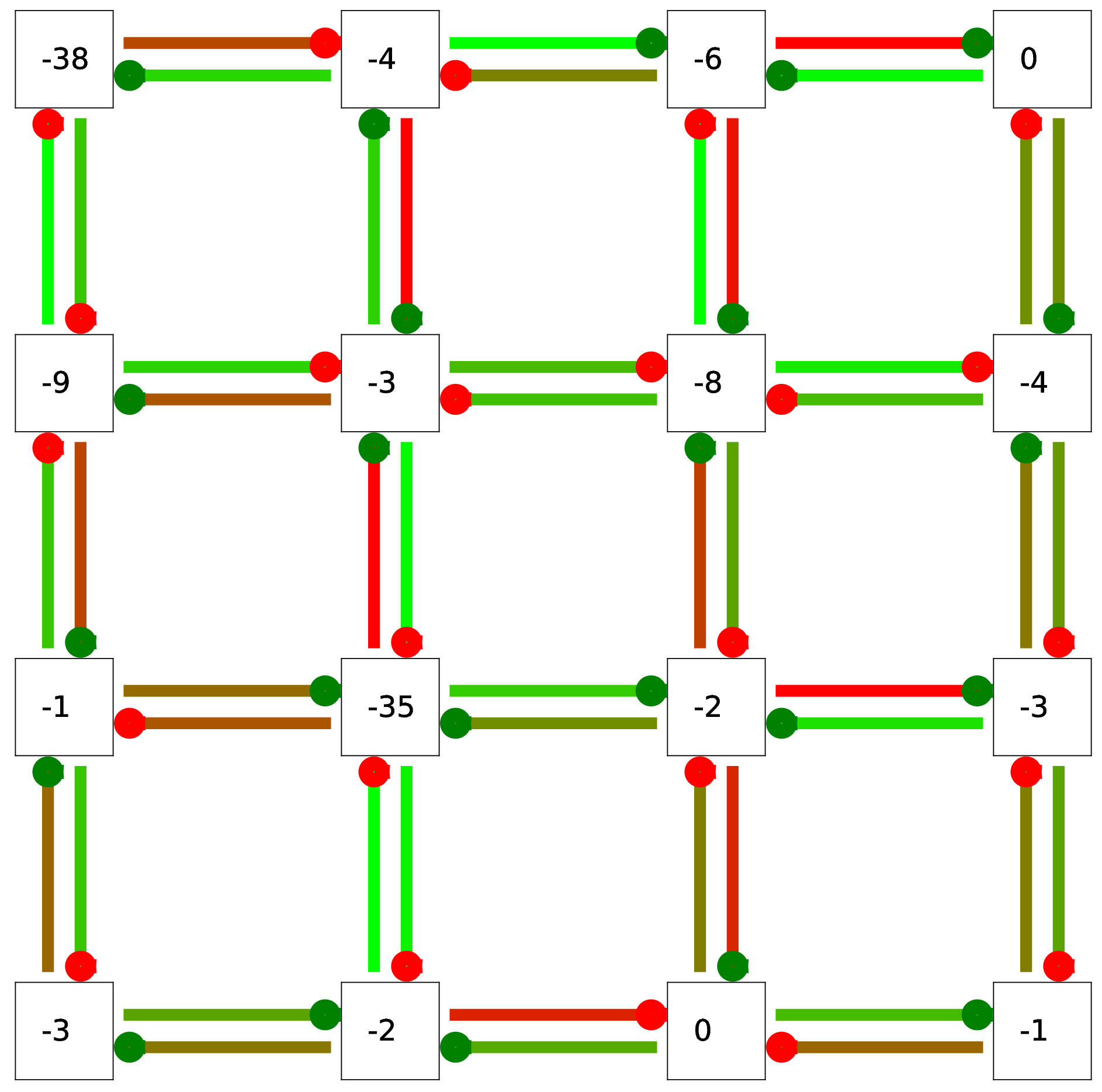

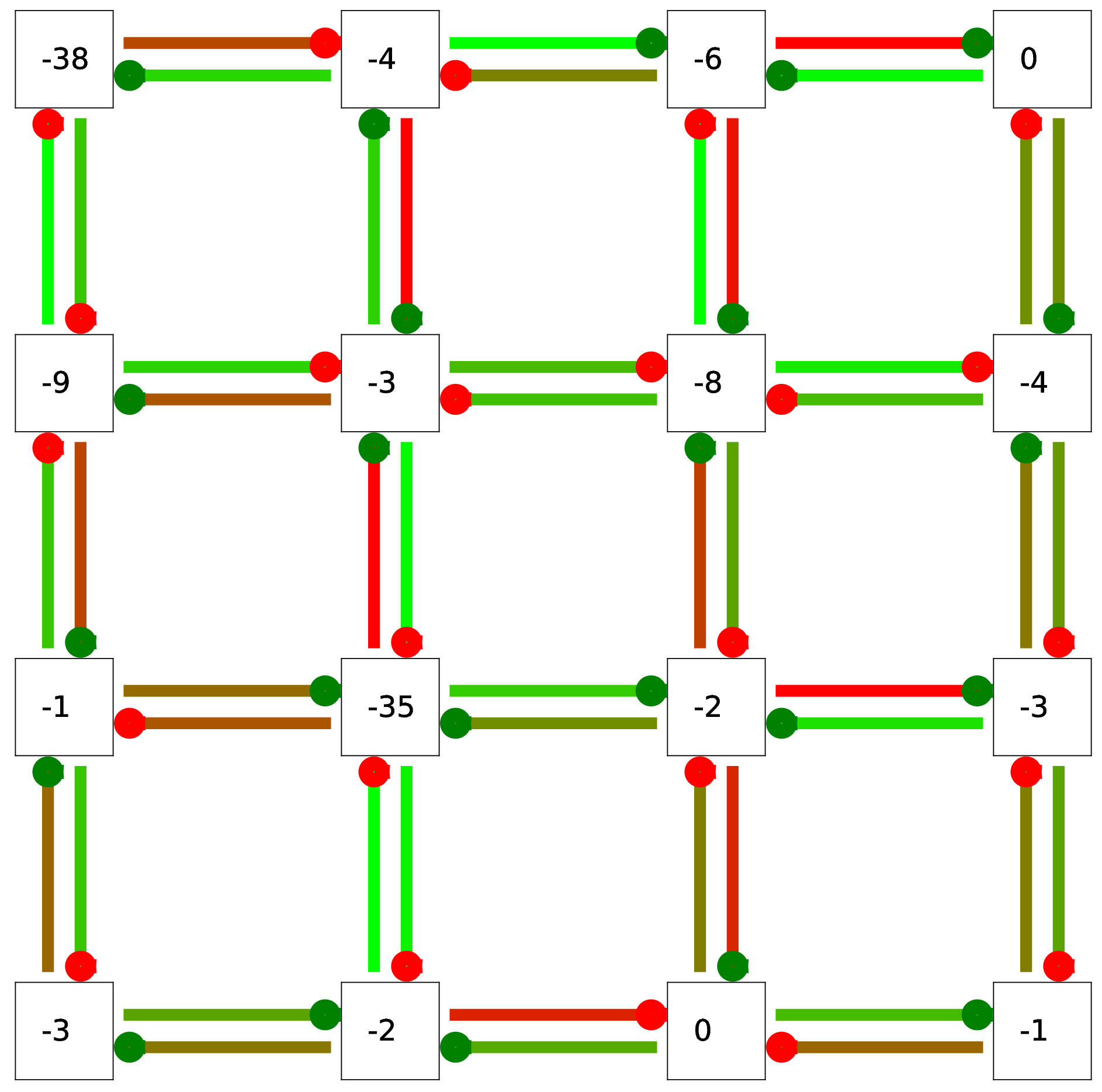

Experiments are conducted on both simplified grid-based and realistic city-scale traffic simulators. The simplified settings employ grid networks with several traffic flow regimes: global random, double-ring, and four-ring scenarios. Congestion levels are visualized by reward heatmaps and lane colors to indicate delays and vehicle queues.

Figure 1: Visualization of multiple grid traffic scenarios, enabling comparative analysis on agent cooperation under diverse flow patterns.

In the city-scale evaluation, a SUMO-based simulator models the traffic of Xi’an with 49 signalized intersections and stochastic, time-varying, high-intensity vehicle flows representative of peak-hour congestion. Agent states and actions follow common MDP formalizations in TSC, built from local queue density, phase selection, and cumulative delay, which are used both for training and for the reported metrics.

Results and Numerical Analysis

Across all environments, Co-DQL consistently achieves superior numerical performance in both standard episode rewards and secondary metrics such as average vehicle delay, queue length, and arrival rate. Notable numerical highlights include:

- Global Random Traffic Flow: Co-DQL reduces average delay time to 36.98 time units, well below IQL (148.50), IDQL (131.85), DDPG (111.05), and MA2C (71.55).

- Double-Ring and Four-Ring Flows: Co-DQL maintains the lowest mean delay (26.05 and 37.17 units respectively), with stable convergence and improved robustness over the alternatives.

- Large-Scale City Network: On SUMO, Co-DQL provides the best average vehicle speed (5.35 m/s), lowest intersection delay (20.31 s/veh), shortest trip delay (177.73 s), and highest arrived rate (0.91), confirming scalability and practical viability.
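To put the global random traffic flow figures on a common scale, the relative delay reduction against each baseline can be computed directly from the reported averages. The snippet below only rearranges numbers already quoted above; the variable and function names are illustrative.

```python
# Average delay (time units) reported for the global random traffic flow scenario.
co_dql_delay = 36.98
baseline_delays = {"IQL": 148.50, "IDQL": 131.85, "DDPG": 111.05, "MA2C": 71.55}


def relative_reduction(baseline, value):
    """Percentage by which `value` is lower than `baseline`."""
    return 100.0 * (baseline - value) / baseline


reductions = {
    name: relative_reduction(delay, co_dql_delay)
    for name, delay in baseline_delays.items()
}

for name, pct in reductions.items():
    print(f"{name}: Co-DQL average delay is {pct:.1f}% lower")
```

On these figures the reduction ranges from roughly 48% against MA2C, the strongest baseline, to roughly 75% against IQL.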

The empirical evidence contradicts the notion that decentralized MARL algorithms cannot coordinate effectively in large-scale TSC.

Implications and Future Prospects

The results indicate that mean field MARL, coupled with double Q estimators and cooperation mechanisms, is an effective paradigm for large-scale adaptive TSC. The theoretical convergence results apply to environments with finite state-action domains and stationary strategies, which bounds where the guarantees hold. Practically, integrating reward and state sharing directly into agent inputs and feedback loops yields robust learning, better global throughput, and resilience against the inherent dynamics of urban systems.

Further research directions include:

- Extending mean field theory for nonlinear reward aggregation and highly asymmetric networks.

- Hierarchical MARL architectures for even larger metropolitan deployments.

- Automated hyperparameter optimization for reward allocation factors and state sharing mechanisms.

- Comparative studies with classical optimization protocols (max pressure, cell transmission) and hybrid models.

- Sim2Real transfer learning and robustness investigations for real-world deployment.

Conclusion

This study extends MARL for urban traffic signal control and presents Co-DQL as a technically sound, scalable, and robust approach. The combination of mean field approximation, double Q-learning, and shared reward/state signals forms a foundation for future research in autonomous transportation networks. Its lower delays relative to the RL baselines tested (IQL, IDQL, DDPG, MA2C) encourage practical adoption and further theoretical work on multi-agent systems for complex real-world domains.