- The paper introduces a novel dataset with 60 million state-action pairs from Minecraft demonstrations to boost reinforcement learning research.

- The paper details a scalable data collection pipeline using a public server, client plugin, and packet-level processing to capture complex gameplay trajectories.

- The paper demonstrates that integrating human demonstrations into RL, for example by pretraining DQN on human data or using Behavioral Cloning, improves performance over RL baselines such as DQN and A2C on the evaluated tasks.

MineRL: A Large-Scale Dataset of Minecraft Demonstrations

Introduction to MineRL

The paper "MineRL: A Large-Scale Dataset of Minecraft Demonstrations" introduces a dataset of human Minecraft demonstrations designed to advance reinforcement learning (RL) research. Standard deep reinforcement learning (DRL) methods are notoriously sample-inefficient; MineRL addresses this by supplying human demonstrations that can make learning more efficient. The paper describes how the dataset was built and how it can help tackle key challenges in RL research, particularly in complex, open-world environments.

Environment: Minecraft

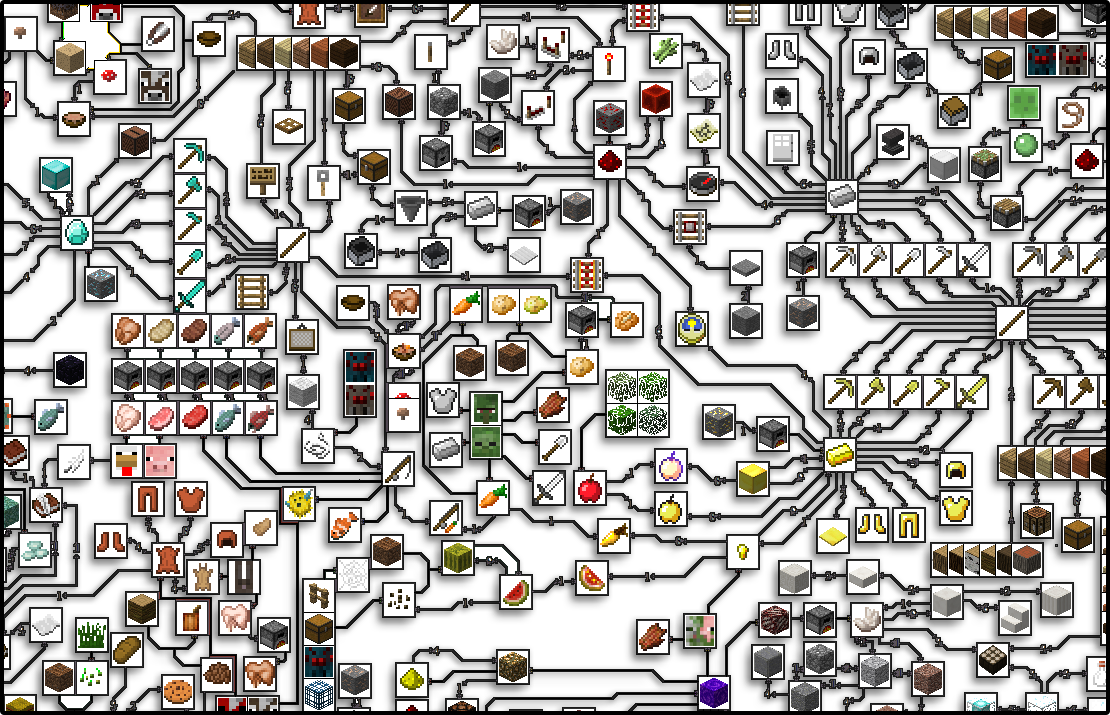

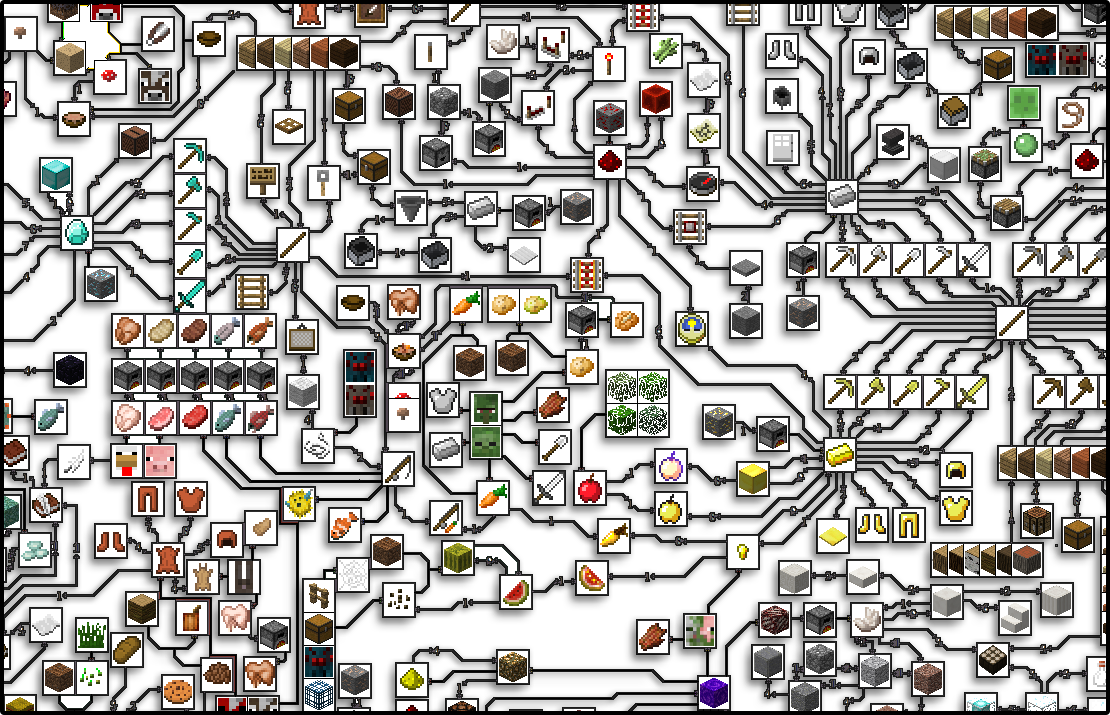

Minecraft, with its open-world, block-based structure and lack of a single objective, is a unique setting for testing RL techniques. Its complex item hierarchy, wide variety of possible tasks, and need for long-term planning make it a rich environment for developing and evaluating RL methods.

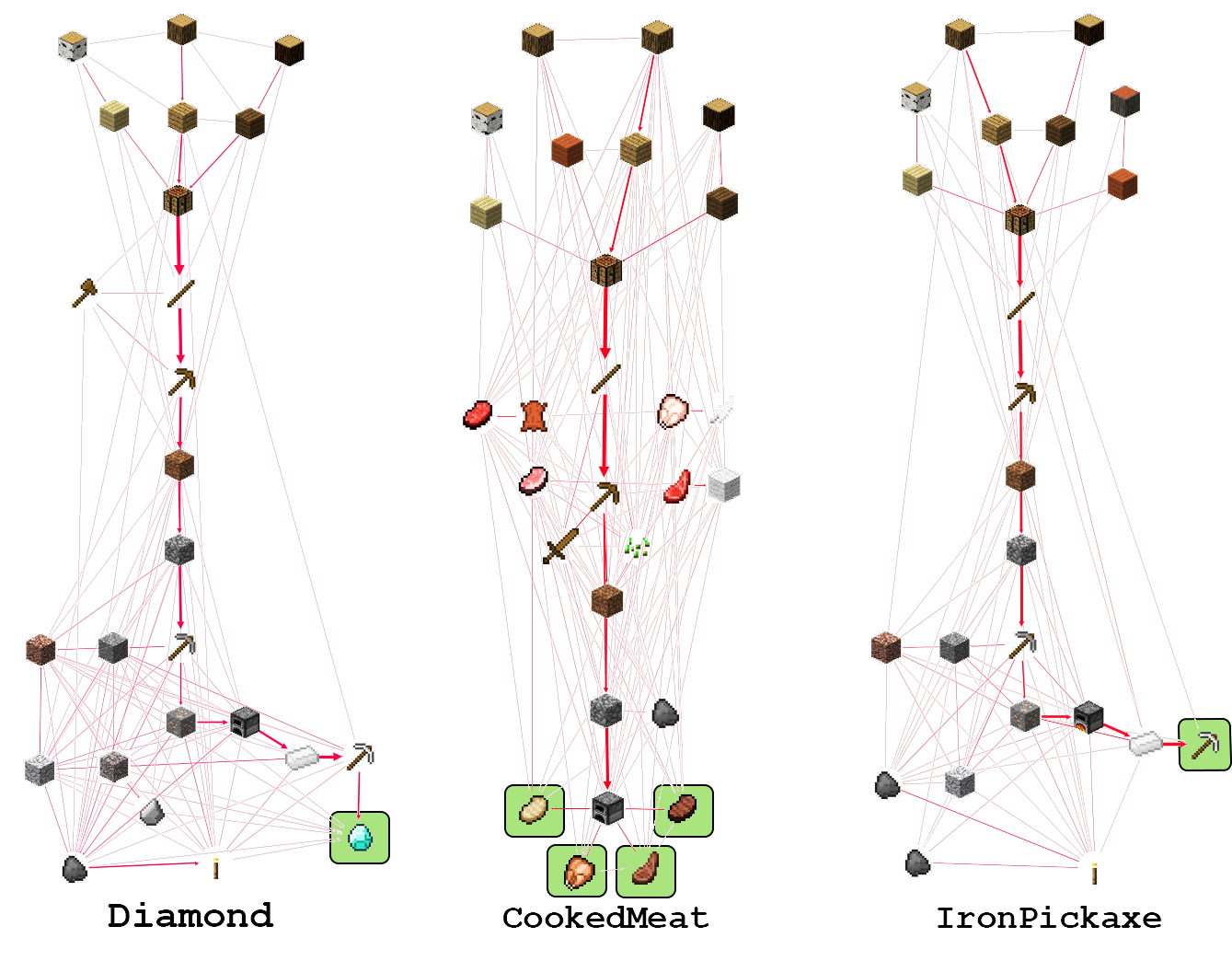

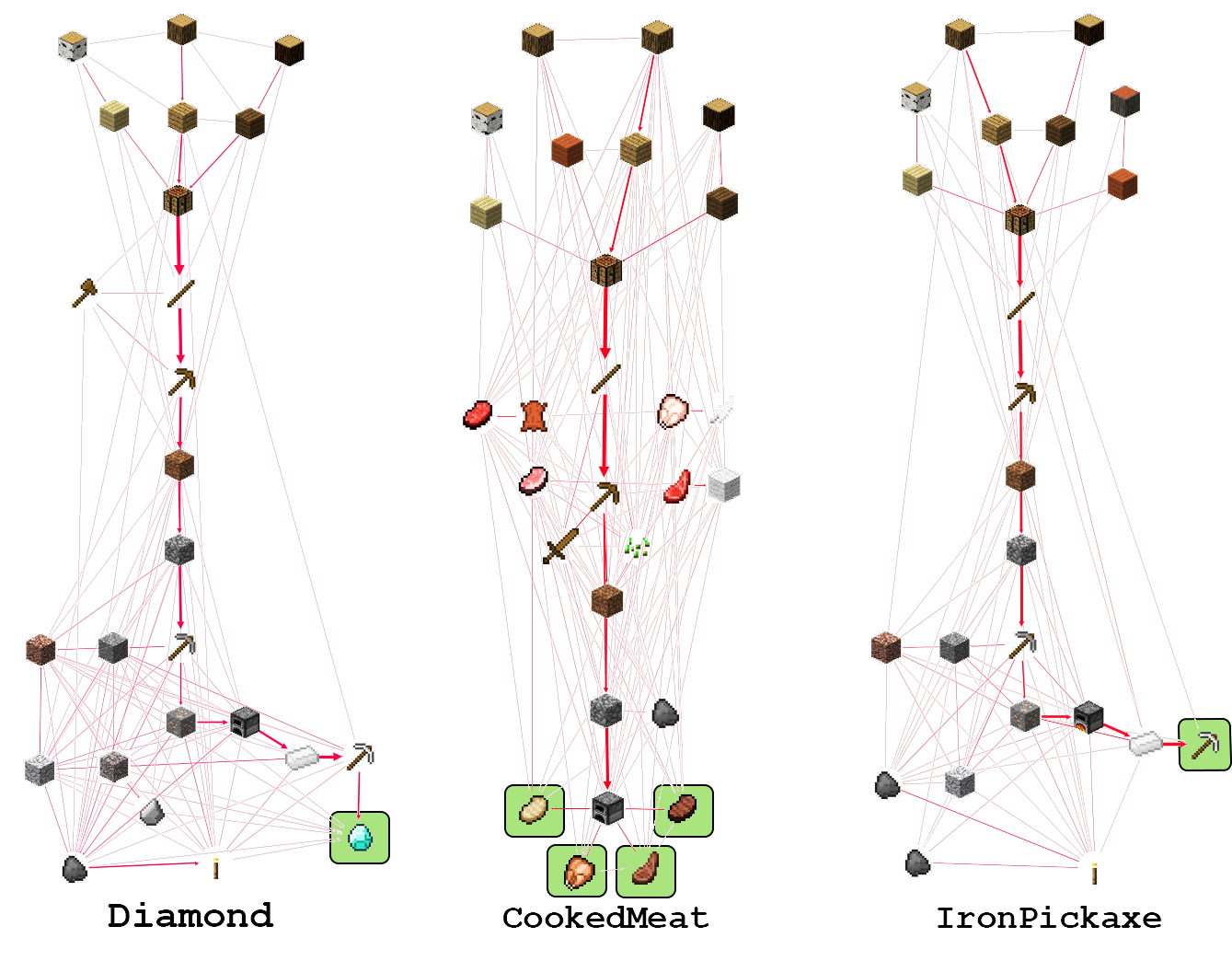

Figure 1: A subset of the Minecraft item hierarchy, showing the complex dependencies among tools and resources.

These challenges map naturally onto well-defined RL problems. Obtaining a diamond, for instance, requires gathering resources and planning a long chain of crafting steps, and defeating enemies likewise demands strategic planning.
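To make the item hierarchy concrete, the sketch below encodes a simplified, hand-written subset of the dependencies on the path to a diamond and orders them with a topological sort. The graph `PREREQUISITES` and the helpers `ancestors` and `plan` are illustrative assumptions based on standard Minecraft crafting rules, not the exact hierarchy shown in Figure 1 or code from the paper.

```python
from graphlib import TopologicalSorter

# Hypothetical, simplified subset of the item hierarchy (not from the paper):
# each item maps to the set of items that must be obtained before it.
PREREQUISITES = {
    "log": set(),
    "planks": {"log"},
    "stick": {"planks"},
    "crafting_table": {"planks"},
    "wooden_pickaxe": {"planks", "stick", "crafting_table"},
    "cobblestone": {"wooden_pickaxe"},
    "stone_pickaxe": {"cobblestone", "stick", "crafting_table"},
    "furnace": {"cobblestone", "crafting_table"},
    "iron_ore": {"stone_pickaxe"},
    "iron_ingot": {"iron_ore", "furnace"},
    "iron_pickaxe": {"iron_ingot", "stick", "crafting_table"},
    "diamond": {"iron_pickaxe"},
}


def ancestors(item, graph):
    """Return every item that must be obtained (transitively) before `item`."""
    seen = set()
    stack = list(graph[item])
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(graph[current])
    return seen


def plan(goal, graph=PREREQUISITES):
    """Return one valid acquisition order that ends with `goal`."""
    needed = ancestors(goal, graph) | {goal}
    # Restrict the graph to the items actually needed for this goal.
    subgraph = {item: graph[item] & needed for item in needed}
    return list(TopologicalSorter(subgraph).static_order())


order = plan("diamond")
print(len(order), order[0], order[-1])
```

Even this toy subset needs twelve items in a strict partial order before a diamond can be mined, which illustrates why the task calls for long-horizon planning.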

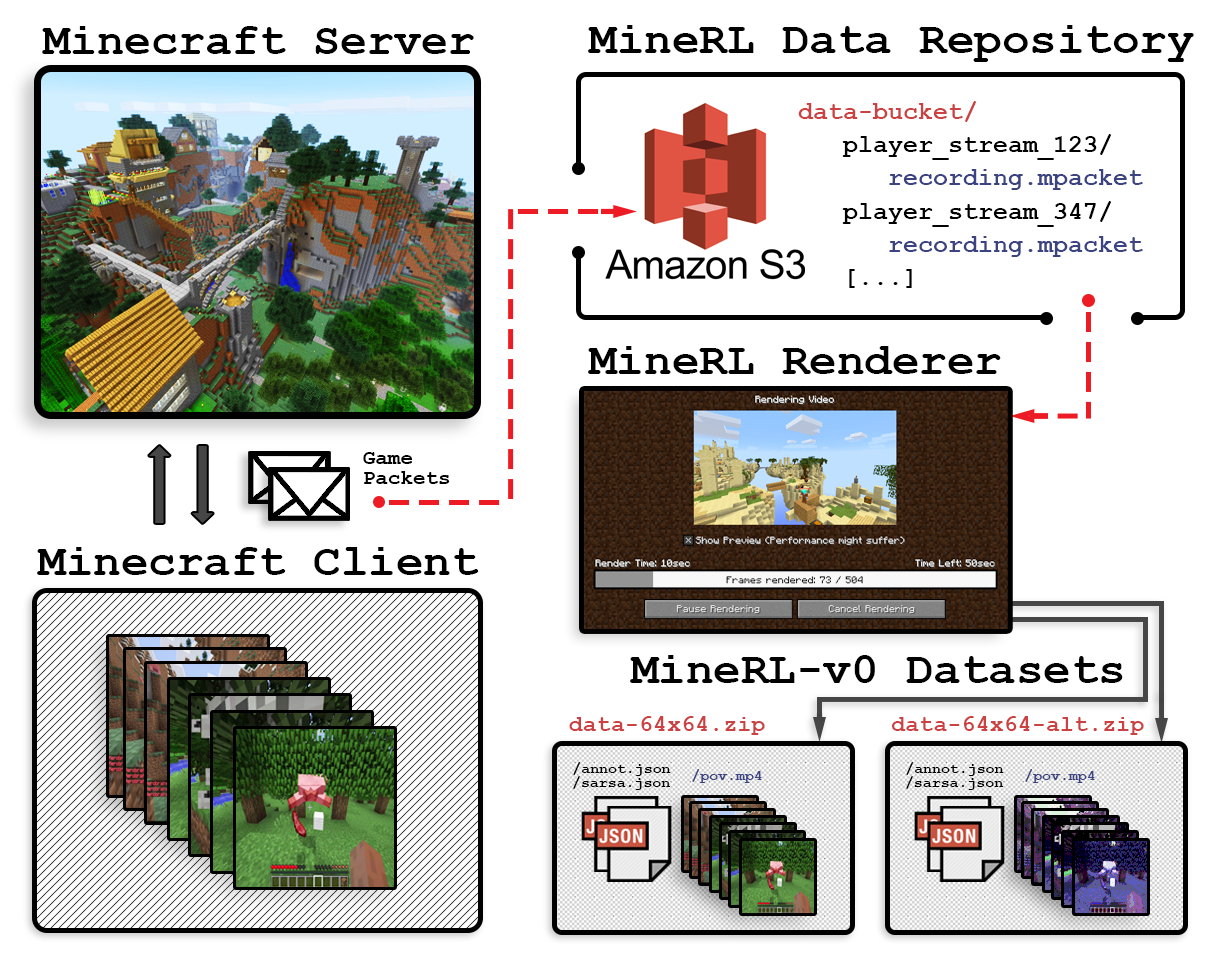

The MineRL dataset is made possible by a data collection platform that captured 60 million state-action pairs from human Minecraft gameplay. The platform combines a public game server, a client plugin, and packet-level processing to record complex gameplay trajectories.

Because the pipeline is scalable and adaptable, it supports ongoing data collection and the generation of new tasks.
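The pipeline records gameplay at the packet level and later turns it into state-action pairs. The sketch below illustrates only that alignment step, under loudly stated assumptions: recorded events are hypothetical `(tick, kind, payload)` tuples where `kind` is `"obs"` or `"action"`, and all actions within one game tick are merged into the action taken from that tick's observation. The event format, the function `to_state_action_pairs`, and the merge rule are illustrative, not MineRL's actual format or code.

```python
from collections import defaultdict


def to_state_action_pairs(events):
    """Pair each tick's observation with the merged actions from that tick.

    `events` uses a hypothetical (tick, kind, payload) format. Ticks with an
    observation but no action get an empty ("no-op") action dict; ticks with
    actions but no observation are dropped.
    """
    observations = {}
    actions = defaultdict(dict)
    for tick, kind, payload in events:
        if kind == "obs":
            observations[tick] = payload
        elif kind == "action":
            # Later events in the same tick overwrite earlier keys.
            actions[tick].update(payload)
        else:
            raise ValueError(f"unknown event kind: {kind!r}")
    return [(observations[t], dict(actions.get(t, {}))) for t in sorted(observations)]


# Made-up event stream for illustration.
events = [
    (0, "obs", {"pos": (0, 64, 0)}),
    (0, "action", {"forward": 1}),
    (0, "action", {"jump": 1}),
    (1, "obs", {"pos": (0, 64, 1)}),
    (2, "action", {"attack": 1}),  # no observation at tick 2, so dropped
]
pairs = to_state_action_pairs(events)
print(pairs)
```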

Results: MineRL-v0 Dataset

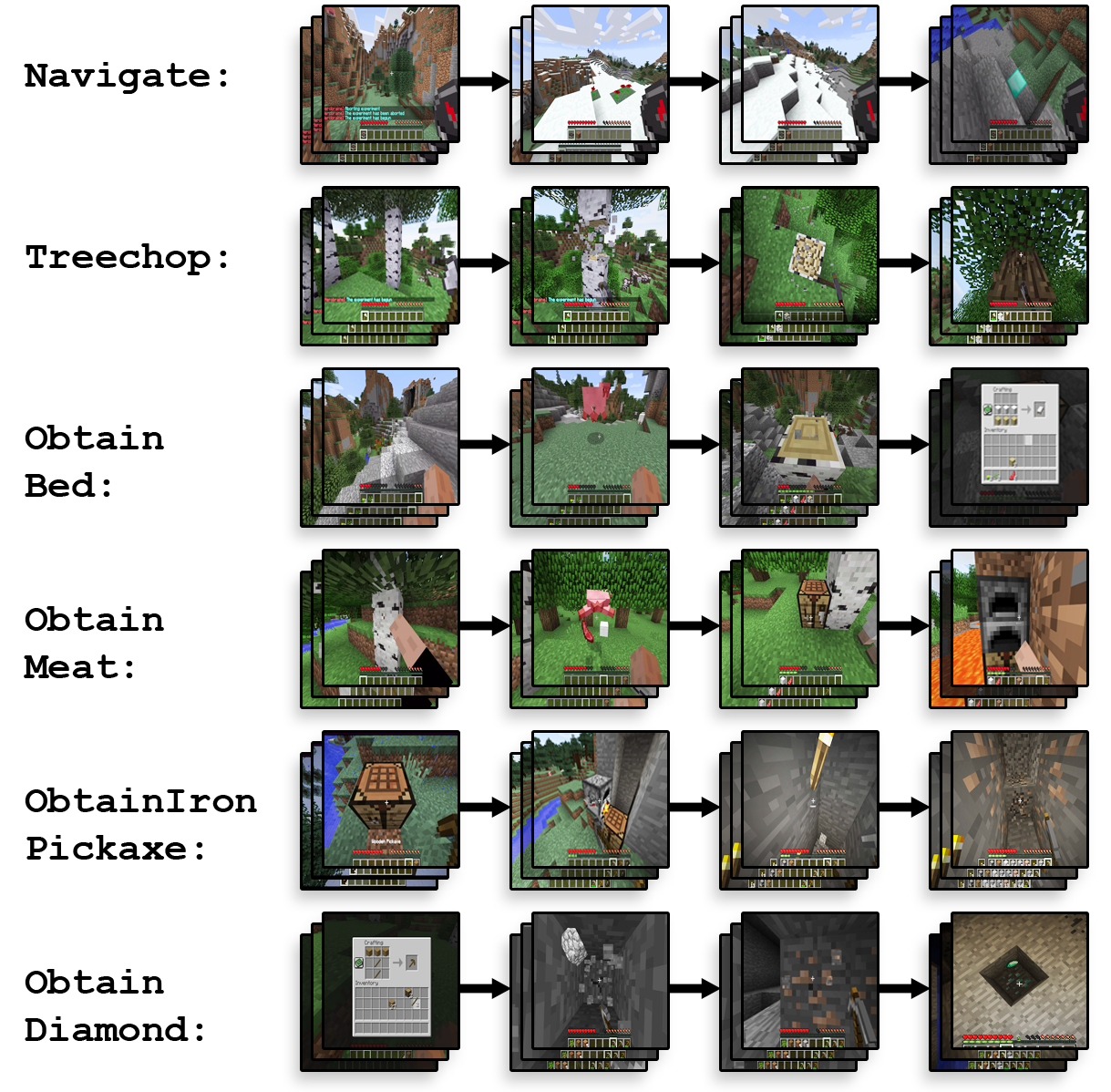

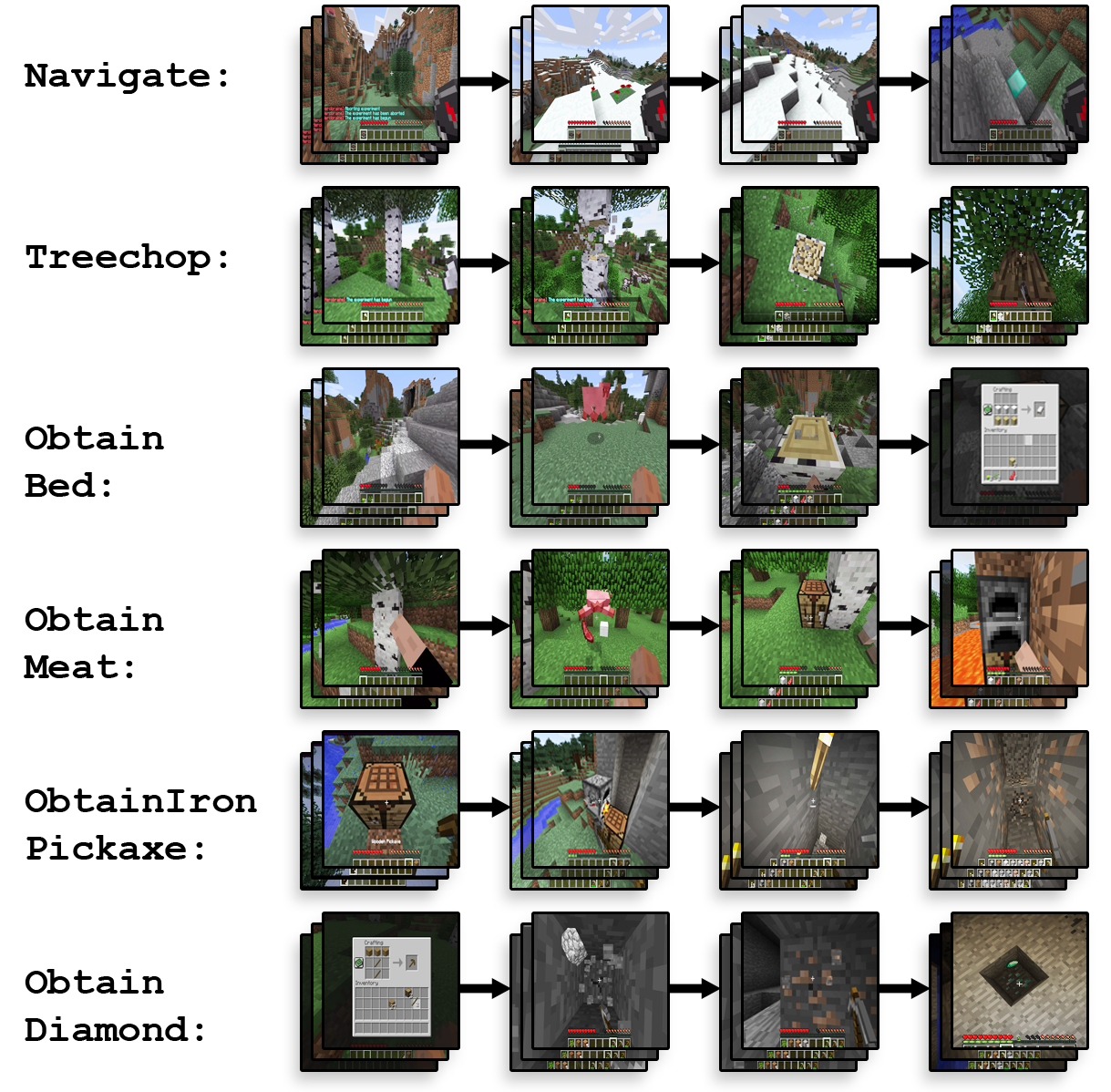

The initial release, MineRL-v0, comprises six distinct tasks that together cover a broad slice of what Minecraft offers. This coverage supports the evaluation of hierarchical, imitation, and transfer learning techniques.

Figure 3: Images of various stages within the six stand-alone tasks, illustrating the diversity of gameplay challenges.

A significant portion of the dataset consists of expert-level gameplay, which supports imitation learning. The dataset also includes intermediate and novice demonstrations, enabling research on learning from imperfect demonstrations.

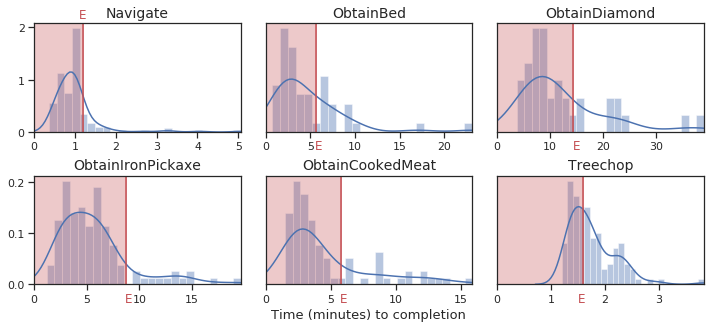

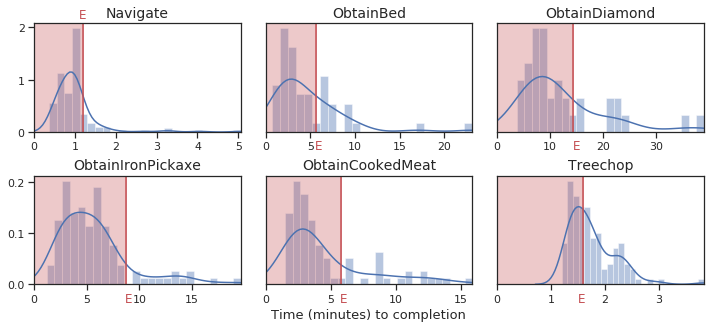

Figure 4: Normalized histograms of human demonstration lengths across various MineRL tasks.
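One common way to normalize such a histogram is to divide each bin's count by the total number of demonstrations, so the bars for one task sum to 1 and tasks with different numbers of demonstrations become comparable. A minimal sketch, assuming fixed-width bins and made-up lengths; the paper may normalize differently (for example, as a density that also divides by bin width).

```python
from collections import Counter


def normalized_histogram(lengths, bin_width):
    """Map each bin's lower edge to the fraction of demonstrations in that bin."""
    if not lengths:
        return {}
    # Integer division assigns each length to the bin starting at a multiple of bin_width.
    counts = Counter((n // bin_width) * bin_width for n in lengths)
    total = len(lengths)
    return {edge: counts[edge] / total for edge in sorted(counts)}


# Made-up demonstration lengths (in frames), for illustration only.
hist = normalized_histogram([120, 340, 410, 980, 1020, 1500], bin_width=500)
print(hist)
```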

Coverage and Hierarchality

The dataset spans a wide range of Minecraft tasks, from navigation to complex item crafting, making it possible to study hierarchical action structure and to extract subpolicies. Item precedence frequency graphs illustrate the different strategies humans use to progress through the item hierarchy.

Figure 5: Item precedence frequency graphs for key tasks highlight how players progress through the item hierarchy.
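One plausible way to build such a graph, assuming an edge A → B is weighted by how often a player obtains item B immediately after item A, is to count consecutive pairs in each demonstration's item-acquisition sequence and normalize per source item. The helpers `precedence_counts` and `edge_frequencies` and the sequences below are invented for illustration; the paper's exact construction may differ.

```python
from collections import Counter


def precedence_counts(sequences):
    """Count how often each item is obtained directly before another."""
    edges = Counter()
    for seq in sequences:
        # zip pairs each acquisition with the one that immediately follows it.
        edges.update(zip(seq, seq[1:]))
    return edges


def edge_frequencies(edges):
    """Normalize counts so each item's outgoing edge weights sum to 1."""
    totals = Counter()
    for (src, _dst), n in edges.items():
        totals[src] += n
    return {(src, dst): n / totals[src] for (src, dst), n in edges.items()}


# Invented item-acquisition sequences from three hypothetical demonstrations.
demos = [
    ["log", "planks", "crafting_table", "wooden_pickaxe"],
    ["log", "planks", "stick", "crafting_table", "wooden_pickaxe"],
    ["log", "planks", "crafting_table", "stick", "wooden_pickaxe"],
]
freqs = edge_frequencies(precedence_counts(demos))
print(freqs[("planks", "crafting_table")])
```

Here two of the three players crafted a crafting table right after planks, so that edge carries two thirds of the weight leaving `planks`.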

Experiments and Evaluation

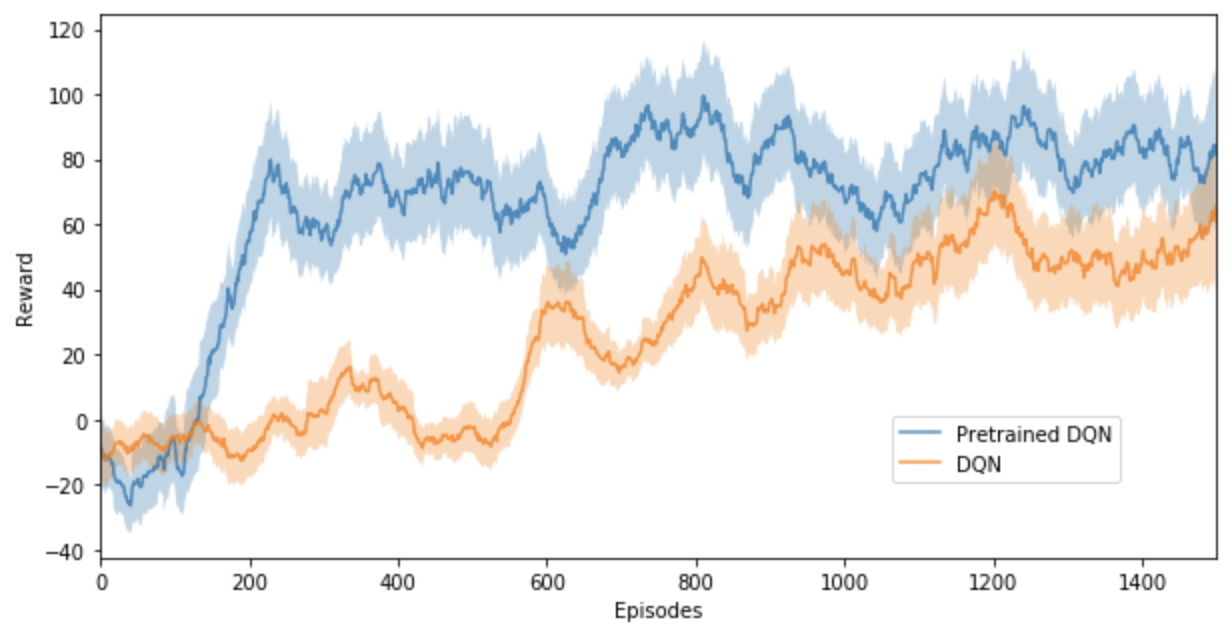

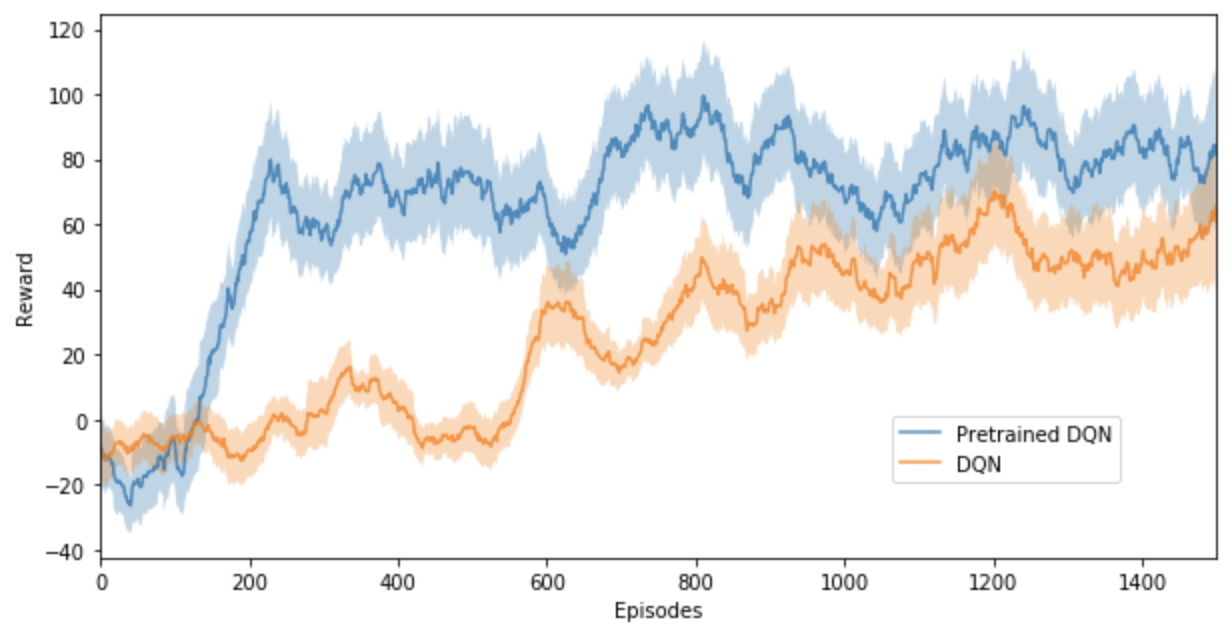

Experiments confirm that Minecraft tasks are inherently difficult for RL algorithms and that human demonstrations help. Three RL methods (DQN, A2C, and a DQN pretrained on human data) were tested alongside a Behavioral Cloning method; the methods that draw on MineRL demonstrations showed improved performance.
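Behavioral Cloning treats imitation as supervised learning: fit a policy that predicts the demonstrator's action from the observed state. The agents in the paper learn from Minecraft observations with neural networks; the toy sketch below swaps in a tabular policy over hypothetical discrete states, where the maximum-likelihood estimate is simply the most frequent demonstrated action in each state. The state and action names, and the helpers `behavioral_cloning` and `act`, are illustrative assumptions.

```python
from collections import Counter, defaultdict


def behavioral_cloning(pairs):
    """Fit a tabular policy: each state maps to its most common demo action."""
    counts = defaultdict(Counter)
    for state, action in pairs:
        counts[state][action] += 1
    return {state: c.most_common(1)[0][0] for state, c in counts.items()}


def act(policy, state, default="noop"):
    """Follow the cloned policy; fall back to a default in unseen states."""
    return policy.get(state, default)


# Hypothetical discretized (state, action) pairs from demonstrations.
demo_pairs = [
    ("facing_tree", "attack"), ("facing_tree", "attack"), ("facing_tree", "jump"),
    ("open_field", "forward"), ("open_field", "forward"),
]
policy = behavioral_cloning(demo_pairs)
print(act(policy, "facing_tree"), act(policy, "in_cave"))
```

The `default` fallback highlights a known limitation of behavioral cloning: the policy has no guidance in states the demonstrators never visited.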

Figure 6: Performance graphs over time for DQN and Pretrained DQN on the Navigate (Dense) task highlight the impact of human data.
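The idea behind pretraining a value-based agent on human data can be illustrated with a much simpler stand-in: run Q-learning updates over demonstration transitions before any environment interaction, so the agent starts with useful value estimates instead of arbitrary ones. The sketch below is tabular Q-learning on a hypothetical three-state corridor; it is not the paper's DQN architecture or pretraining objective, and `pretrain_q`, `greedy`, and all hyperparameters are illustrative assumptions.

```python
from collections import defaultdict

ACTIONS = ("left", "right")


def pretrain_q(transitions, sweeps=50, alpha=0.5, gamma=0.9):
    """Run tabular Q-learning updates over fixed demonstration transitions."""
    q = defaultdict(float)  # (state, action) -> value, defaults to 0.0
    for _ in range(sweeps):
        for s, a, r, s_next, done in transitions:
            # One-step TD target; no bootstrapping from terminal states.
            target = r
            if not done:
                target += gamma * max(q[(s_next, b)] for b in ACTIONS)
            q[(s, a)] += alpha * (target - q[(s, a)])
    return q


def greedy(q, state):
    """Pick the action with the highest pretrained value."""
    return max(ACTIONS, key=lambda a: q[(state, a)])


# Hypothetical corridor 0 -> 1 -> 2 (goal); the demonstrator always moves right.
demo = [(0, "right", 0.0, 1, False), (1, "right", 1.0, 2, True)]
q = pretrain_q(demo)
print(greedy(q, 0), round(q[(1, "right")], 3), round(q[(0, "right")], 3))
```

Actions the demonstrator never took keep their initial values here, which is why practical demonstration-pretraining methods (for example, DQfD with its supervised margin loss) usually add extra terms beyond plain Q-learning.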

MineRL joins a long line of influential datasets in computer vision and natural language processing, contributing a large-scale collection of human demonstrations paired with a simulator for an open-world game. Its detailed annotations and hierarchical complexity distinguish it from existing benchmarks and make it fertile ground for developing hierarchical and imitation-based learning methods.

Conclusion

MineRL is a valuable tool for sequential decision-making research, providing an extensive, diverse dataset along with a robust infrastructure for ongoing data collection. It contributes to hierarchical RL and imitation learning, and it could help shape future AI methods capable of tackling complex real-world problems.