- The paper introduces a two-stage attention-based framework that classifies prostate whole slide images and localizes suspicious regions within them using weakly supervised learning.

- It combines a CNN with an attention module in a multiple instance learning (MIL) setting to select informative tiles, which are then analyzed at a higher resolution for grading.

- The model reached 85.11% accuracy in distinguishing benign, low-grade, and high-grade prostate cancer, supporting its use in automated histological analysis.

An Attention-Based Multi-Resolution Model for Prostate Whole Slide Image Classification and Localization

Introduction

The study introduces a two-stage framework that uses attention mechanisms for classification and localization in prostate whole slide images (WSIs). Histology is often regarded as the gold standard in disease diagnosis. Automating it through computer-aided diagnosis (CAD) reduces the exhaustive manual review required of pathologists and limits interobserver variability.

WSIs are challenging because of their very large size, the variability of their tissue content, and the presence of staining artifacts. Previous models mostly operated on regions of interest (ROIs), which require fine-grained labels and therefore intensive annotation effort. This model instead uses weakly supervised learning with slide-level labels only, which reduces the labeling burden considerably. It predicts the slide-level cancer grade and also detects ROIs under weak supervision: salient regions are selected from the attention maps and passed on for fine-grained classification.

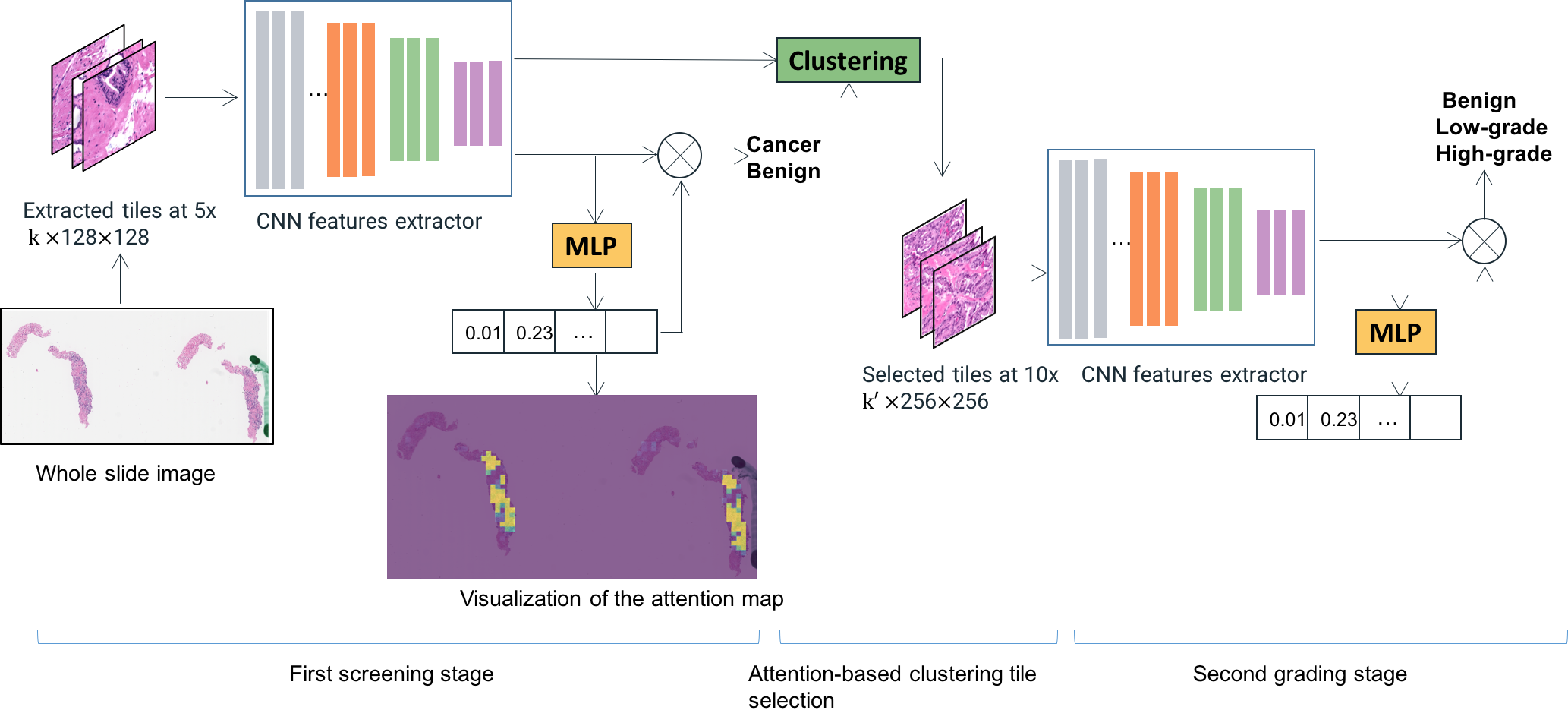

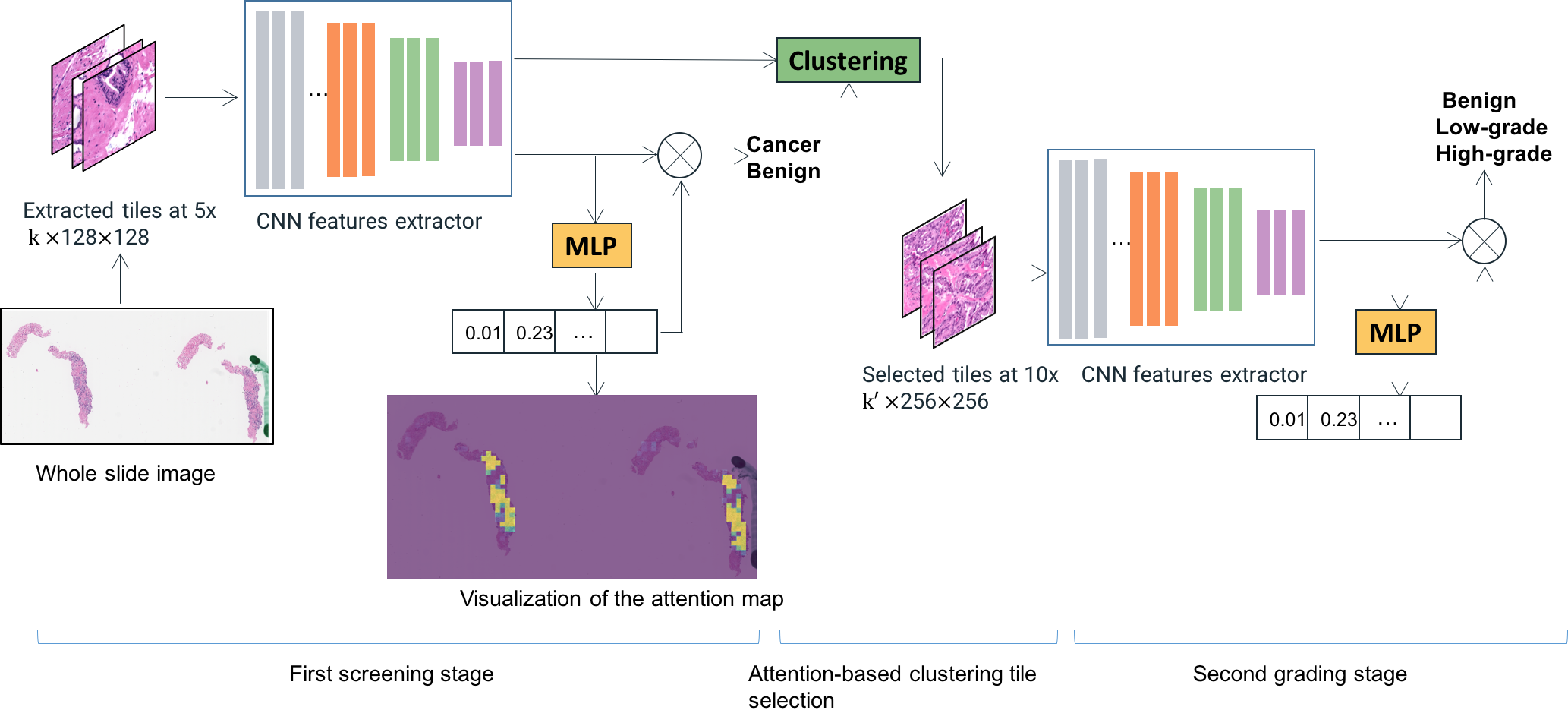

Figure 1: The overview of our two-stage attention-based whole slide image classification model. The first stage is trained with tiles at 5x for cancer versus non-cancer classification. Informative tiles identified using instance features and attention maps from the first stage are selected to be analyzed in the second stage at a higher resolution for cancer grading.

Methodology

Attention-Based Multiple Instance Learning

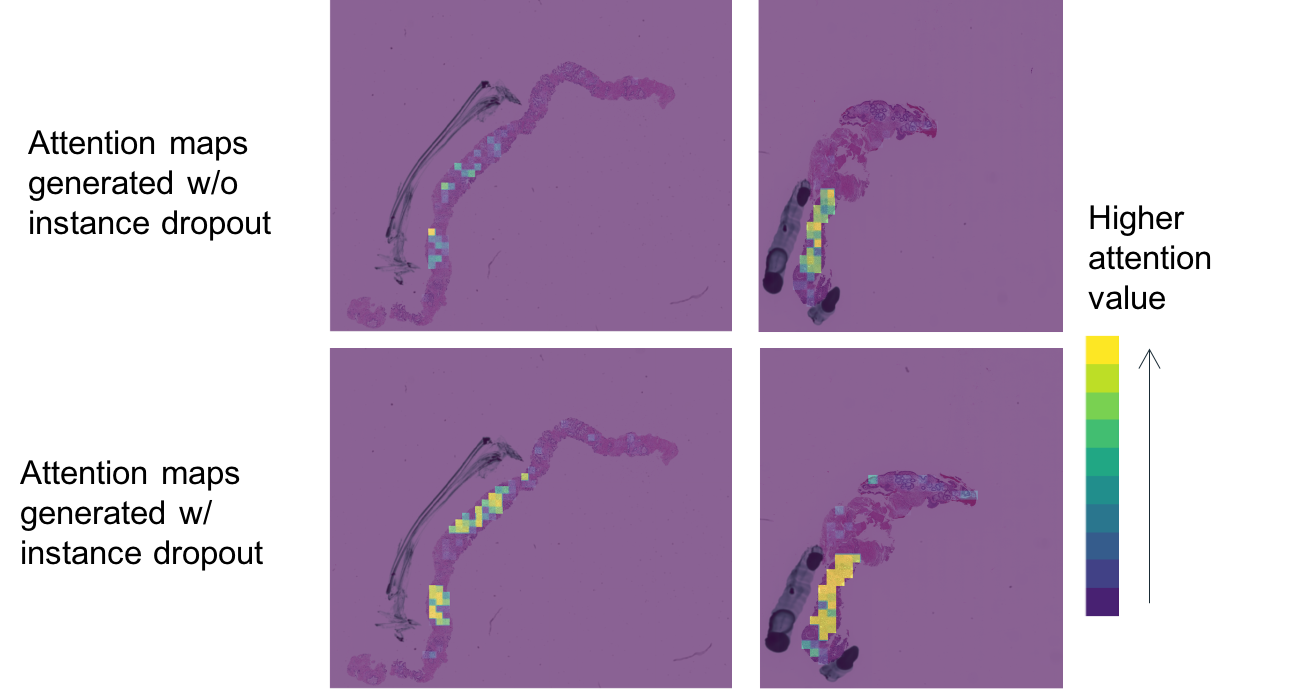

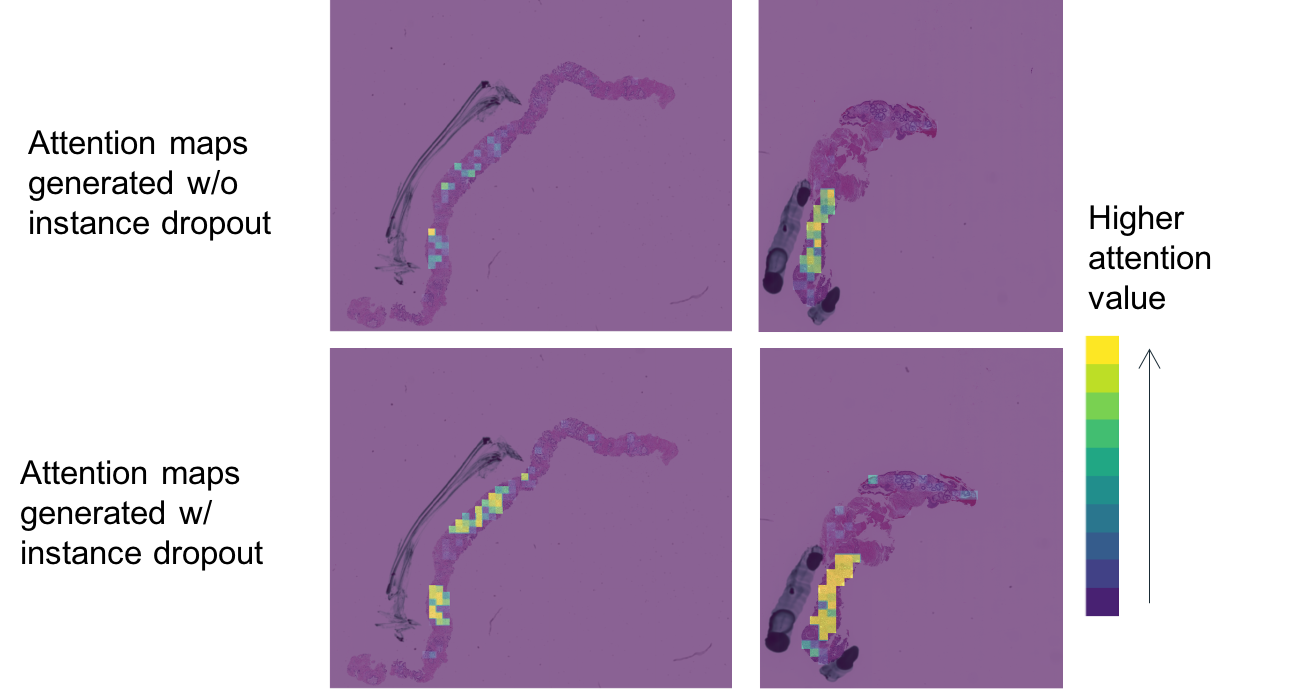

The backbone of the model is a convolutional neural network (CNN) combined with an attention module, which produces instance-level predictions inside a bag-level classification framework, the setting known as multiple instance learning (MIL). The attention module computes a weight distribution over the tiles (instances) of a slide, indicating how much each tile contributes to the prediction of the bag's label. The model also applies instance dropout during training, which makes the attention mechanism more robust by preventing over-reliance on a few highly discriminative tiles.
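The idea behind instance dropout can be illustrated with a minimal sketch. It assumes that dropping a tile simply means removing it from the bag for one training step; the function name `instance_dropout`, the `drop_prob` parameter, and the rule of always keeping at least one tile are hypothetical choices for illustration, not details taken from the paper.

```python
import random


def instance_dropout(tiles, drop_prob=0.5, rng=None):
    """Randomly drop tiles from a bag during training (illustrative sketch).

    tiles: list of tile identifiers or feature vectors for one slide.
    drop_prob: probability of dropping each tile (hypothetical parameter).
    rng: optional random.Random instance for reproducibility.
    """
    rng = rng or random.Random()
    # Keep each tile independently with probability 1 - drop_prob.
    kept = [t for t in tiles if rng.random() >= drop_prob]
    # Assumption: never return an empty bag, so the slide can still be classified.
    if not kept:
        kept = [rng.choice(tiles)]
    return kept
```

Because a different subset of tiles is visible at each step, the classifier cannot depend on a single tile always being present, which is the effect the paragraph above describes.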

The MIL framework represents each WSI as a bag of tiles, and the attention weights are computed by a multilayer perceptron (MLP) applied to the tile features. Because every tile receives a weight, the weights can be displayed as an attention map over the slide. These maps are interpretable and serve as the input for tile selection in the second-stage model.
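A small sketch of attention-based MIL pooling may help make this concrete. It assumes a two-layer attention MLP with a tanh hidden layer followed by a softmax over tiles, which is a common formulation but is not confirmed by the text above; the names `attention_pool`, `V`, and `w` are illustrative.

```python
import math


def attention_pool(features, V, w):
    """Attention-weighted pooling of tile features (illustrative sketch).

    features: list of K tile embeddings, each a list of D floats.
    V: hidden-layer weights of the attention MLP, H rows of D floats.
    w: output-layer weights of the attention MLP, H floats.
    Returns (attention weights over tiles, bag embedding of length D).
    """
    scores = []
    for h in features:
        # Hidden layer: tanh(V h)
        hidden = [math.tanh(sum(v * x for v, x in zip(row, h))) for row in V]
        # Output layer: one scalar score per tile
        scores.append(sum(wi * zi for wi, zi in zip(w, hidden)))
    # Numerically stable softmax so the weights sum to 1 over the bag
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    attn = [e / total for e in exps]
    # Bag embedding: attention-weighted sum of tile embeddings
    dim = len(features[0])
    bag = [sum(a * h[d] for a, h in zip(attn, features)) for d in range(dim)]
    return attn, bag
```

The bag embedding would then be passed to a slide-level classifier, while the attention weights can be plotted over the tile positions to obtain an attention map.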

Two-Stage Model Integration

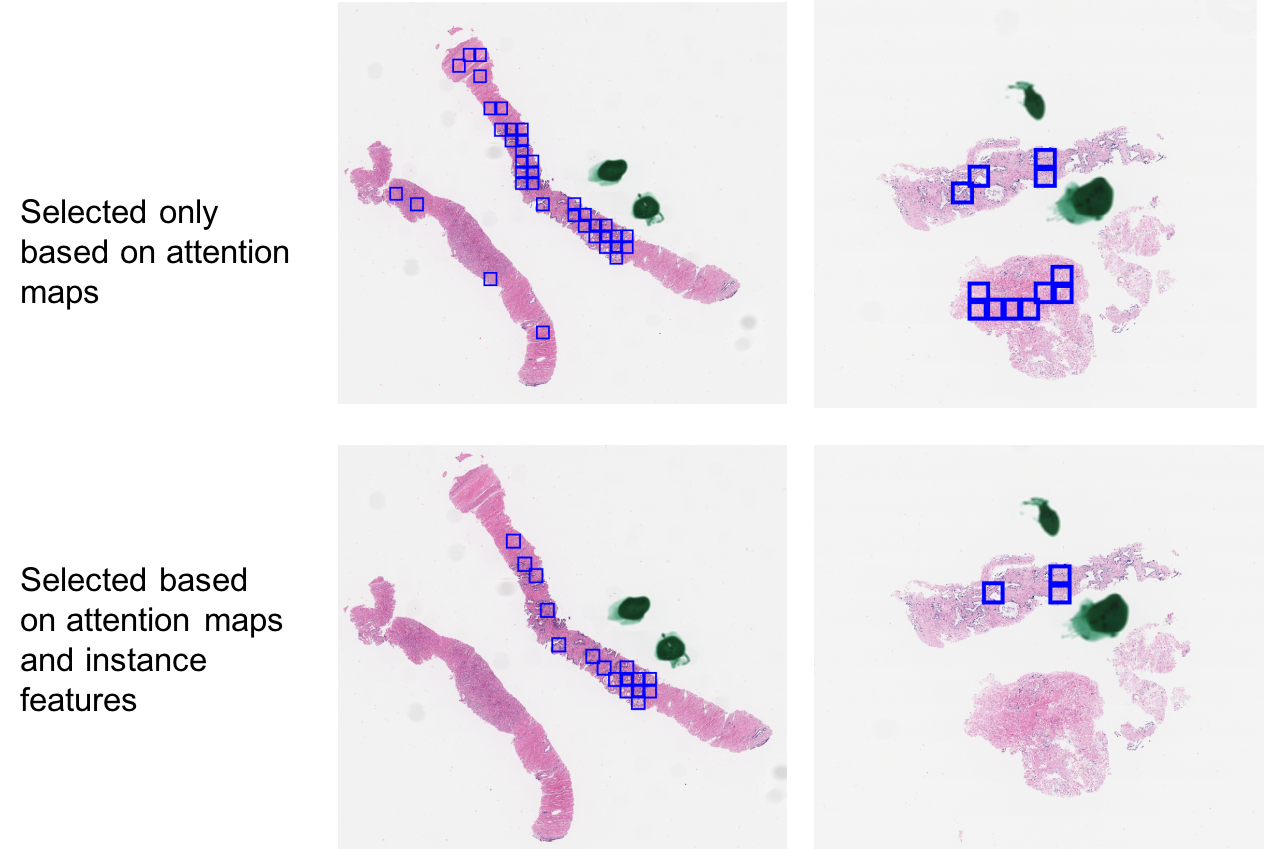

Inspired by the way pathologists work, the model analyzes slides at two resolutions: low (5x) for initial screening and high (10x) for detailed grading. The first-stage model uses attention maps to identify suspicious regions, which are then examined at the higher resolution to determine the cancer grade more precisely.
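The step from the low to the high resolution involves simple coordinate arithmetic, sketched below. The sketch assumes that tile positions are pixel coordinates at each magnification level and that the same tile size is used at both levels; the function name and parameters are hypothetical and the paper's actual tiling scheme may differ.

```python
def tiles_at_higher_resolution(x, y, tile_size, low_mag=5, high_mag=10):
    """Top-left corners of the high-magnification tiles covering one low-magnification tile.

    (x, y): top-left corner of the tile in pixel coordinates at low_mag.
    tile_size: tile edge length in pixels (assumed equal at both magnifications).
    """
    if high_mag % low_mag != 0:
        raise ValueError("high_mag must be an integer multiple of low_mag")
    scale = high_mag // low_mag
    # The same tissue region is `scale` times larger in pixels at high_mag,
    # so it is covered by a scale x scale grid of tiles.
    return [
        (x * scale + i * tile_size, y * scale + j * tile_size)
        for j in range(scale)
        for i in range(scale)
    ]
```

Under these assumptions, one selected 5x tile corresponds to a 2 x 2 grid of 10x tiles covering the same tissue.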

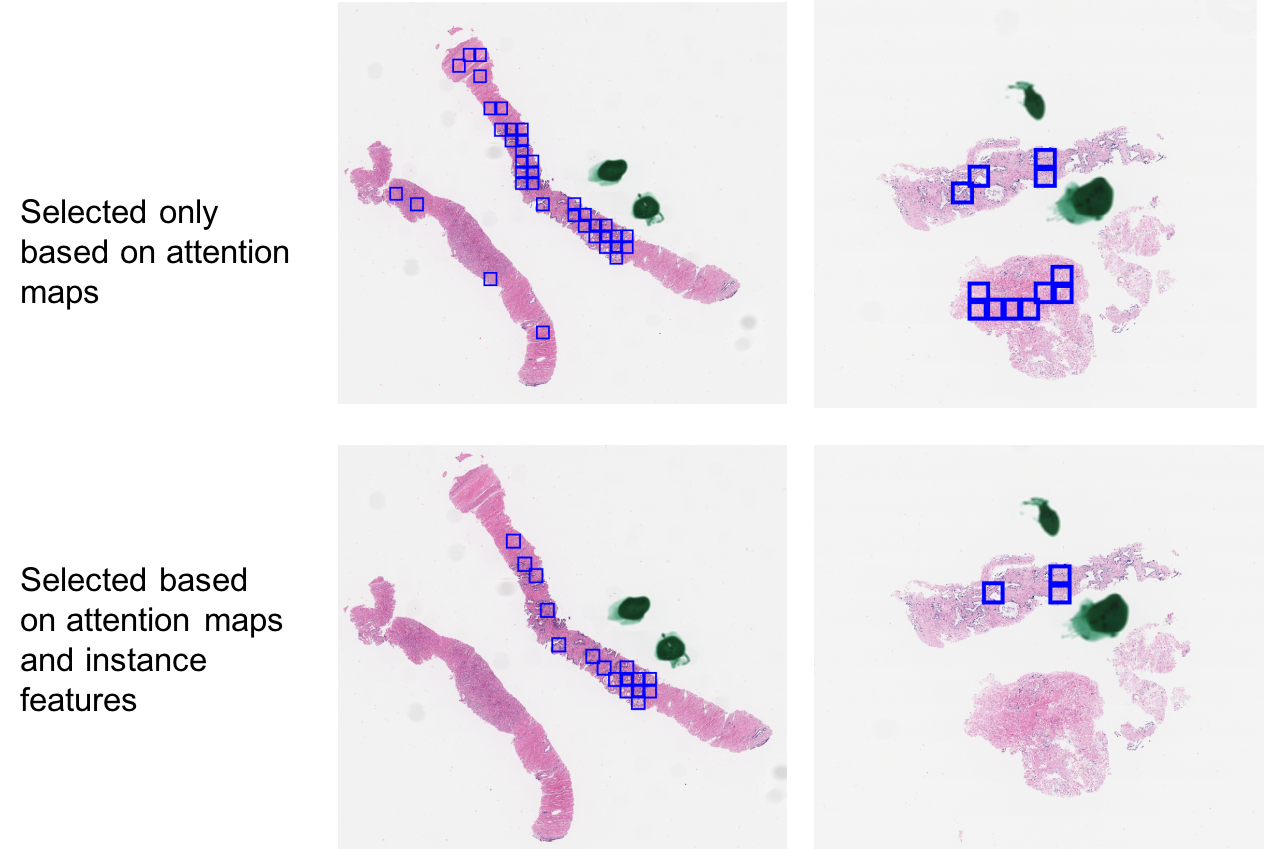

Tile selection in this two-stage model is driven by the attention maps together with clustering of the instance features, which mirrors how a pathologist first scans a slide and then examines suspicious areas more closely. Detection in the first stage therefore determines which regions the second stage analyzes.
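One plausible way to combine attention with clustering is sketched below. It assumes that cluster labels for the tiles have already been computed from the instance features (for example with k-means) and that whole clusters are ranked by their mean attention; this selection rule and the name `select_tiles_by_cluster` are assumptions for illustration and may not match the paper's exact procedure.

```python
from collections import defaultdict


def select_tiles_by_cluster(attention, cluster_ids, top_clusters=1):
    """Return indices of tiles in the clusters with the highest mean attention.

    attention: attention weight of each tile.
    cluster_ids: cluster label of each tile, computed elsewhere (assumed given).
    top_clusters: number of clusters to keep (hypothetical parameter).
    """
    groups = defaultdict(list)
    for idx, cid in enumerate(cluster_ids):
        groups[cid].append(idx)
    # Mean attention per cluster
    mean_attn = {
        cid: sum(attention[i] for i in idxs) / len(idxs)
        for cid, idxs in groups.items()
    }
    # Rank clusters from highest to lowest mean attention
    ranked = sorted(mean_attn, key=mean_attn.get, reverse=True)
    chosen = ranked[:top_clusters]
    return sorted(i for cid in chosen for i in groups[cid])
```

Selecting by cluster rather than by individual attention value tends to pick up tiles that look similar to the most attended ones even when their own weights are lower, which is one way to obtain a more complete set of tiles.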

Experimentation and Evaluation

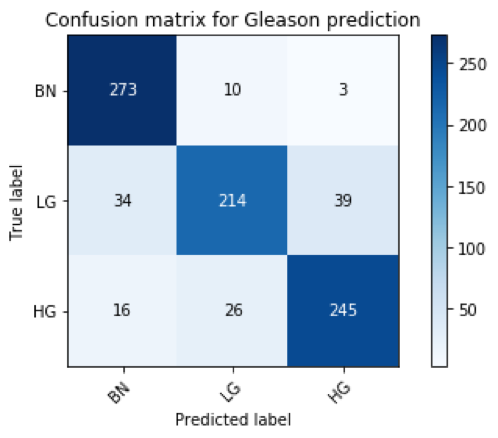

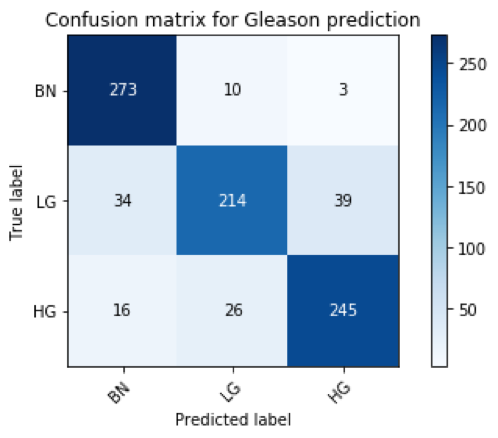

The model was evaluated on a large dataset of biopsy slides and reached a state-of-the-art accuracy of 85.11% in distinguishing benign, low-grade, and high-grade prostate cancer. This result supports the use of clustering-based attention and multi-resolution imagery for improving grading accuracy. The model outperformed unmodified attention models as well as previous approaches relying on RCNN and image segregation methodologies.

Figure 2: Confusion matrix for Gleason grade classification on the test set.
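For readers who want to relate a confusion matrix to an overall accuracy figure, the arithmetic can be sketched as follows. The counts in the usage note are made-up toy values for illustration only; they are not the paper's results and do not reproduce the reported 85.11%.

```python
def accuracy_from_confusion(matrix):
    """Overall accuracy from a square confusion matrix.

    matrix[i][j] is the number of slides with true class i predicted as class j.
    """
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    if total == 0:
        raise ValueError("confusion matrix is empty")
    return correct / total
```

With a hypothetical three-class matrix `[[8, 1, 1], [2, 6, 2], [0, 1, 9]]` (rows and columns ordered benign, low-grade, high-grade), 23 of 30 slides lie on the diagonal, so the function returns 23/30.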

The attention maps improved with the use of clustering and instance dropout, which led to more complete tile selection and higher predictive accuracy. In the experiments, this refinement yielded considerable improvements over one-stage models.

Figure 3: Visualization of selected tiles based on different methods. Each blue box indicates one selected tile.

Figure 4: Visualization of the model trained with or without instance dropout.

Discussion

This paper shows that attention-based MIL models can deliver high-performance WSI analysis with minimal labeling requirements, while mirroring clinical practice through multi-resolution assessment. The qualitative results suggest that the approach is applicable in clinical settings, where it could simplify workflows that usually require labor-intensive procedures.

Future work could refine the instance dropout method and incorporate higher-resolution imagery to capture subtle features, such as the nucleoli common in aggressive cancers, which may further improve grading precision. In addition, explicit validation of the attention maps through pathologist feedback could strengthen interpretability and reduce reliance on implicit evaluation. Transfer learning from pre-trained models is another direction that could improve performance.

In conclusion, this model advances automated histological analysis and shows promise for deployment in cancer diagnostic workflows.