nuScenes: A multimodal dataset for autonomous driving

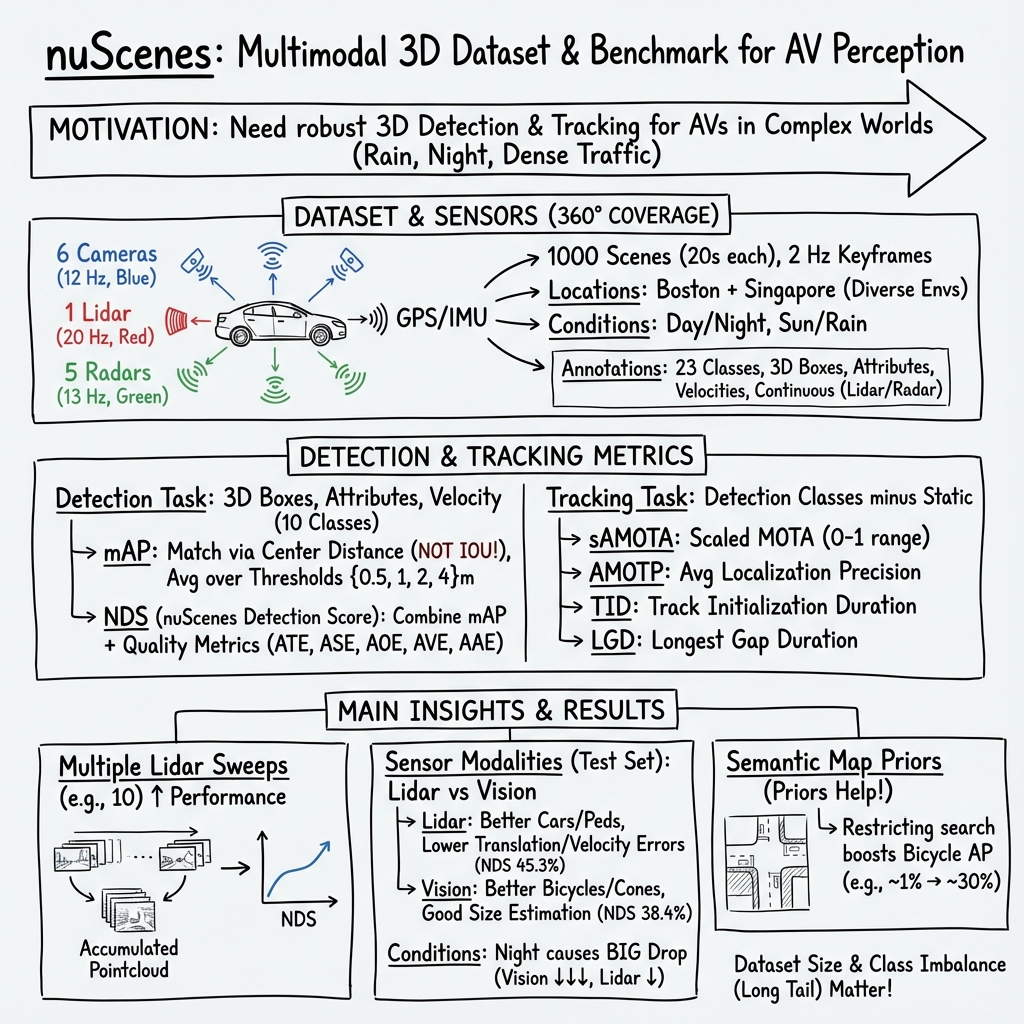

Abstract: Robust detection and tracking of objects is crucial for the deployment of autonomous vehicle technology. Image based benchmark datasets have driven development in computer vision tasks such as object detection, tracking and segmentation of agents in the environment. Most autonomous vehicles, however, carry a combination of cameras and range sensors such as lidar and radar. As machine learning based methods for detection and tracking become more prevalent, there is a need to train and evaluate such methods on datasets containing range sensor data along with images. In this work we present nuTonomy scenes (nuScenes), the first dataset to carry the full autonomous vehicle sensor suite: 6 cameras, 5 radars and 1 lidar, all with full 360 degree field of view. nuScenes comprises 1000 scenes, each 20s long and fully annotated with 3D bounding boxes for 23 classes and 8 attributes. It has 7x as many annotations and 100x as many images as the pioneering KITTI dataset. We define novel 3D detection and tracking metrics. We also provide careful dataset analysis as well as baselines for lidar and image based detection and tracking. Data, development kit and more information are available online.


Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a concise, actionable list of what the paper leaves unaddressed, uncertain, or unexplored, to guide future research.

- Geographic and ODD coverage: Data is limited to urban Boston and Singapore. Rural, suburban, and highway driving are not covered, and neither are driving cultures beyond these two cities.

- Weather/lighting diversity: Absent or underrepresented conditions include snow, fog, heavy glare, low sun, sand/dust, and seasonal variations.

- Scene duration: 20-second scenes limit evaluation of long-horizon tracking and forecasting; longer annotated sequences are needed.

- Annotation frequency: 3D boxes are labeled at 2 Hz; the impact on fast-moving objects, velocity estimation, and tracking fidelity is not quantified. A high-frequency-labeled subset could benchmark this.

- Label completeness bias: Objects are annotated only if covered by lidar or radar; objects visible only in images may be unlabeled, potentially biasing evaluation of camera-based methods.

- Ground-truth velocities: Methods for computing per-object velocities and their uncertainties are not described or validated; release of uncertainty estimates and error analysis is needed.

- Attribute annotations: Attribute coverage is limited and uneven (e.g., none for barriers/cones); utility and reliability of attributes are not benchmarked.

- Long-tail imbalance: Class imbalance is extreme (1:10k), yet there are no standardized rare-class splits, few-shot/zero-shot protocols, or official reweighting baselines.

- 2D and point-level labels: No 2D instance masks, 2D boxes, or point-level semantic labels at release; these are needed for segmentation, depth, and multi-task learning.

- Human-centric labels: No pedestrian keypoints, gaze, gestures, or full articulated pose; these would enable intent and interaction modeling.

- Shape fidelity: Cuboids poorly approximate certain classes (e.g., bicyclists, pedestrians); richer geometry (meshes, instance masks) is missing.

- Traffic signal/sign states: Dynamic states (traffic lights, signs) are not labeled; this limits reasoning about compliance and scene intent.

- Map priors: Although maps are provided, there is no standardized map-aware benchmark (e.g., lane-level detection, map-consistent localization, map-based forecasting) or evaluation under map errors.

- Map generalization: Generalization to unmapped areas, outdated maps, or map misalignment is not studied; benchmarks with map perturbations are needed.

- Cross-dataset generalization: No standardized protocols for training on nuScenes and testing on other datasets (and vice versa); cross-dataset baselines would quantify domain transfer.

- Sensor synchronization/calibration: Quantitative statistics on time sync errors and extrinsic drift are not provided; per-frame quality flags and robustness evaluations are needed.

- Ego-pose uncertainty: Perception robustness to localization errors is not evaluated; benchmarks with perturbed ego-pose or uncertainty metadata would be valuable.

- Sensor rig diversity: Only one LiDAR model (32-beam), one camera setup, and one vehicle platform are used; robustness across different rigs (e.g., 64/128-beam LiDARs, varied FOVs/placements) is untested.

- Radar exploitation: No strong radar-only or principled radar–camera–lidar fusion baselines; raw radar representations (e.g., range–Doppler/IQ) and labeled associations are not provided.

- Asynchronous fusion: Practical strategies and baselines for fusing 20 Hz LiDAR, 12 Hz cameras, and 13 Hz radars under asynchrony are not established.

- Privacy blurring impact: The effects of face/plate blurring (false positives/negatives) on downstream perception are unquantified; a curated unblurred or synthetic alternative subset for analysis is missing.

- Metric design sensitivity: Center-distance thresholds are fixed across classes, and operating points with precision or recall below 10% are excluded; both choices may bias conclusions, so size-adaptive thresholds and sensitivity studies are needed.

- Tracking metrics validation: New metrics (TID, LGD) are not validated for safety relevance; correlation with planning risk and scenario criticality remains unproven.

- Runtime and efficiency: Benchmarks do not include latency/throughput/energy constraints; real-time tracks and standardized resource reporting are absent.

- Split design and leakage: Spatial/temporal separation between train/val/test is not fully documented; strict geographic disjointness and per-scene metadata are needed to avoid leakage.

- Open-set and novelty: No protocols for detecting unseen classes or out-of-distribution objects; open-set detection benchmarks would address this.

- Interaction/intent labels: No standardized annotations for interactions (e.g., yielding, cut-ins, jaywalking intent); behavior/intent benchmarks are needed.

- Safety-critical scenarios: Although “interesting” scenes are curated, there is no standardized set of labeled near-misses or corner cases with scenario-specific evaluation.

- Dense depth: No dense depth or stereo disparity ground truth; releasing LiDAR-projected depth (and stereo pairs) would enable depth estimation tasks.

- Re-identification: Track IDs are scene-local; cross-scene identity continuity to support re-ID is absent.

- Annotation quality quantification: Inter-annotator agreement, label uncertainty, and error rates are not reported; releasing such statistics would enable uncertainty-aware learning/evaluation.

- Calibration robustness: Calibrations updated biweekly may drift between updates; robustness studies to small miscalibrations and per-frame validity indicators are missing.

- License constraints: Non-commercial license limits industry comparability; pathways for reproducible public evaluation bridging academia and industry remain unclear.
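To make the asynchronous-fusion gap concrete, the sketch below pairs each lidar sweep with the nearest camera frame and radar sweep and reports the worst-case time offset. It is a minimal illustration under loud assumptions: sensors run at exactly the nominal rates cited above (20 Hz lidar, 12 Hz cameras, 13 Hz radars), start together, and have no jitter. Real nuScenes timestamps are not uniform, and the helper names `sensor_timestamps` and `nearest_offsets` are hypothetical.

```python
import numpy as np

def sensor_timestamps(rate_hz: float, duration_s: float) -> np.ndarray:
    """Idealized, jitter-free capture times (s) for a sensor running at rate_hz (assumption)."""
    n = int(np.floor(duration_s * rate_hz)) + 1
    return np.arange(n) / rate_hz

def nearest_offsets(ref: np.ndarray, other: np.ndarray) -> np.ndarray:
    """For each reference time, the absolute offset to the closest time in sorted `other` (len >= 2)."""
    idx = np.clip(np.searchsorted(other, ref), 1, len(other) - 1)
    left, right = other[idx - 1], other[idx]
    return np.minimum(np.abs(ref - left), np.abs(right - ref))

duration = 20.0  # one scene is about 20 s long
lidar = sensor_timestamps(20.0, duration)
camera = sensor_timestamps(12.0, duration)
radar = sensor_timestamps(13.0, duration)

cam_off = nearest_offsets(lidar, camera)  # lidar-to-nearest-camera offsets
rad_off = nearest_offsets(lidar, radar)   # lidar-to-nearest-radar offsets
print(f"max lidar-camera offset: {cam_off.max() * 1000:.1f} ms")
print(f"max lidar-radar offset:  {rad_off.max() * 1000:.1f} ms")
```

Even in this idealized case, nearest-neighbor pairing leaves residual offsets of tens of milliseconds (at most 1/30 s for cameras and 1/26 s for radars). At urban speeds, that is enough to displace a moving object noticeably between modalities, which is why motion compensation or explicit temporal modeling matters for fusion.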

Practical Applications

The following applications translate the paper’s findings, methods, and innovations into practical, real-world uses across industry, academia, policy, and daily life. Each item notes relevant sectors, potential tools/products/workflows, and key assumptions or dependencies.

Immediate Applications

- Benchmarking and training of multimodal AV perception models; sectors: automotive/AV, robotics, software

- Tools/products/workflows: use the nuScenes devkit, dataset splits, and public leaderboards to train and evaluate 3D detectors and trackers (e.g., PointPillars, MonoDIS, AB3DMOT). Adopt the nuScenes detection score (NDS), mAP (center-distance), sAMOTA, TID/LGD in internal QA dashboards.

- Assumptions/dependencies: access to compute for training; familiarity with nuScenes dataset licensing (CC BY-NC-SA for non-commercial use); integration of provided evaluation code.

- Rapid prototyping of sensor fusion pipelines (camera-lidar-radar); sectors: automotive/AV, robotics

- Tools/products/workflows: design fusion models using synchronized 6-camera, lidar, and radar streams; leverage temporal accumulation (multi-sweep lidar) and radar Doppler velocity; run ablation studies to select sensor combinations per operational design domain (ODD).

- Assumptions/dependencies: compatible sensor configurations or domain adaptation if production sensors differ (beam count, FOV, capture rates).

- Upgrade existing lidar pipelines with multi-sweep temporal accumulation; sectors: automotive/AV, robotics, software

- Tools/products/workflows: incorporate 0.5 s of prior sweeps, append per-point time delta as a feature, retrain velocity heads; adopt the simple accumulation strategy shown to boost NDS/mAP and reduce mAVE.

- Assumptions/dependencies: accurate ego-motion compensation and timestamp alignment; sufficient memory/compute.

- Map-prior integration to reduce false positives and simplify trajectory prediction; sectors: automotive/AV, mapping/GIS, software

- Tools/products/workflows: constrain detections by semantic map layers (e.g., road vs. sidewalk), use baseline routes to reduce trajectory search space; integrate HD localization for strong priors.

- Assumptions/dependencies: access to accurate vectorized maps (11 layers) for target areas; robust localization to HD maps.

- Adopt center-distance matching for small/thin objects and fair cross-modality comparisons; sectors: automotive/AV, robotics, software

- Tools/products/workflows: switch detection evaluation from IoU to center-distance thresholds on the ground plane (0.5, 1, 2, and 4 m in nuScenes) for classes like pedestrians and bicycles; update internal metrics to reflect NDS’s balanced error composition.

- Assumptions/dependencies: alignment of internal KPIs with revised metrics; retraining or retuning thresholds.

- Radar algorithm development and validation; sectors: automotive/AV, robotics, semiconductor

- Tools/products/workflows: build and test radar-only and radar-fusion modules using nuScenes radar returns and Doppler; evaluate velocity estimation improvements (mAVE) in adverse conditions (rain/night).

- Assumptions/dependencies: expertise in radar signal processing; handling sparse radar returns and clutter.

- Tracking evaluation under realistic constraints using sAMOTA, TID, LGD; sectors: automotive/AV, robotics

- Tools/products/workflows: quantify tracking robustness (initialization time, longest gaps) to guide tracker improvements and safety triggers; implement early-warning for prolonged tracking loss.

- Assumptions/dependencies: detector quality directly influences tracking KPIs; careful threshold selection across recall.

- Standardize dataset schemas and tooling across organizations; sectors: automotive/AV, software/MLOps

- Tools/products/workflows: adopt nuScenes database schema and devkit to unify ingestion across datasets (e.g., Lyft L5), reducing data engineering overhead; build conversion tools and cross-dataset evaluation pipelines.

- Assumptions/dependencies: organizational buy-in to standardized formats; mapping of proprietary fields to the nuScenes taxonomy.

- Address class imbalance with importance sampling and curriculum design; sectors: automotive/AV, academia

- Tools/products/workflows: implement class-balanced sampling and reweighting strategies in training; establish long-tail curricula for rare-but-critical classes (ambulances, animals, cones).

- Assumptions/dependencies: monitoring of per-class metrics; robust augmentation policies.

- Privacy protection workflows for AV data; sectors: automotive/AV, legal/compliance, software

- Tools/products/workflows: deploy automated face and plate detection/blurring (e.g., Faster R-CNN, MTCNN) to comply with privacy regulations in large-scale image pipelines.

- Assumptions/dependencies: acceptable false-positive/false-negative rates; region-specific privacy requirements.
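The benchmarking item above relies on the nuScenes detection score (NDS). NDS combines mAP with five true-positive error metrics: translation (mATE), scale (mASE), orientation (mAOE), velocity (mAVE), and attribute (mAAE). As defined in the paper, NDS = 1/10 [5 mAP + Σ (1 − min(1, mTP))], summed over the five TP metrics. The sketch below computes only that composition. The function name is hypothetical, and the input values are illustrative rather than any reported baseline.

```python
from typing import Mapping

TP_METRICS = ("mATE", "mASE", "mAOE", "mAVE", "mAAE")

def nuscenes_detection_score(m_ap: float, tp_errors: Mapping[str, float]) -> float:
    """NDS = 1/10 * [5 * mAP + sum over the five TP errors of (1 - min(1, error))]."""
    missing = set(TP_METRICS) - set(tp_errors)
    if missing:
        raise ValueError(f"missing TP metrics: {sorted(missing)}")
    # Each error is clipped at 1, so one very poor metric contributes 0 instead of going negative.
    tp_scores = [1.0 - min(1.0, tp_errors[name]) for name in TP_METRICS]
    return (5.0 * m_ap + sum(tp_scores)) / 10.0

# Illustrative numbers only.
example = nuscenes_detection_score(
    0.30, {"mATE": 0.5, "mASE": 0.3, "mAOE": 0.6, "mAVE": 1.2, "mAAE": 0.2}
)
print(f"NDS = {example:.2f}")
```

mAP carries half the weight. The other half is spread evenly over the TP errors, so a detector with poor velocity estimates (mAVE at or above 1) loses a fixed 0.1 of NDS no matter how large the error is.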
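Center-distance matching, from the evaluation item above, replaces IoU with the distance between box centers on the ground plane. The minimal sketch below shows greedy matching for a single class and a single threshold. Predictions are processed in descending confidence, and each one claims the closest still-unmatched ground-truth box within the threshold. This is an illustrative reimplementation, not the official devkit: tie handling and whether the threshold is inclusive are assumptions, and the AP integration over the precision/recall curve is omitted.

```python
import numpy as np

def center_distance_matches(pred_xy, pred_scores, gt_xy, threshold_m):
    """Greedy ground-plane matching for one class and one distance threshold.

    Returns (order, is_tp): prediction indices sorted by descending score, and a
    boolean per ranked prediction marking it as a true positive.
    """
    pred_xy = np.asarray(pred_xy, dtype=float).reshape(-1, 2)
    gt_xy = np.asarray(gt_xy, dtype=float).reshape(-1, 2)
    order = np.argsort(-np.asarray(pred_scores, dtype=float), kind="stable")
    taken = np.zeros(len(gt_xy), dtype=bool)
    is_tp = np.zeros(len(order), dtype=bool)
    if len(gt_xy) == 0:
        return order, is_tp
    for rank, i in enumerate(order):
        dists = np.linalg.norm(gt_xy - pred_xy[i], axis=1)  # 2D center distances
        dists[taken] = np.inf                                # each GT box matches once
        j = int(np.argmin(dists))
        if dists[j] < threshold_m:                           # strict threshold (assumption)
            taken[j] = True
            is_tp[rank] = True
    return order, is_tp

# Two ground-truth objects; the second prediction duplicates the first detection.
order, is_tp = center_distance_matches(
    pred_xy=[[0.3, 0.0], [0.2, 0.0], [10.5, 0.0]],
    pred_scores=[0.9, 0.8, 0.5],
    gt_xy=[[0.0, 0.0], [10.0, 0.0]],
    threshold_m=1.0,
)
print(order.tolist(), is_tp.tolist())
```

A pedestrian box offset by 0.3 m would often fail a strict IoU test because the box is so small, but it matches here. The duplicate detection becomes a false positive because its nearest ground-truth box is already taken.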
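The multi-sweep item above describes accumulating about 0.5 s of prior lidar sweeps and appending each point's time lag as a feature. The sketch below illustrates that idea under stated assumptions. Each sweep comes with a 4x4 sensor-to-world pose, which in practice combines the ego pose and the lidar extrinsics, plus a timestamp in seconds. Points are transformed into the keyframe's sensor frame, and sweeps older than the window are dropped. The function name and input layout are hypothetical, and this is not the devkit's own loader.

```python
import numpy as np

def accumulate_sweeps(sweeps, key_pose, key_time, window_s=0.5):
    """Merge lidar sweeps into the keyframe sensor frame with a per-point time-lag feature.

    sweeps: iterable of (points (N, 3) in that sweep's sensor frame,
                         pose (4, 4) sensor-to-world, timestamp in s).
    Returns an (M, 4) array: x, y, z in the keyframe frame and lag = key_time - timestamp.
    """
    world_to_key = np.linalg.inv(key_pose)
    merged = []
    for points, pose, t in sweeps:
        lag = key_time - t
        if lag < 0 or lag > window_s:
            continue  # keep the keyframe and sweeps from the preceding window only
        homo = np.hstack([points, np.ones((len(points), 1))])   # homogeneous coords (N, 4)
        in_key = (world_to_key @ pose @ homo.T).T[:, :3]         # ego-motion compensated xyz
        merged.append(np.hstack([in_key, np.full((len(points), 1), lag)]))
    return np.vstack(merged) if merged else np.zeros((0, 4))

key_pose = np.eye(4)                            # keyframe sensor at the world origin
prev_pose = np.eye(4)
prev_pose[0, 3] = -1.0                          # sensor was 1 m behind, 0.05 s earlier
sweeps = [
    (np.array([[1.0, 2.0, 3.0]]), key_pose, 1.00),
    (np.array([[5.0, 0.0, 0.0]]), prev_pose, 0.95),
    (np.array([[9.0, 9.0, 9.0]]), prev_pose, 0.20),  # 0.8 s old: outside the window
]
merged = accumulate_sweeps(sweeps, key_pose, key_time=1.0)
print(merged)
```

The lag column lets a network distinguish fresh points from stale ones. Without it, moving objects appear smeared across sweeps with no cue about which part is current, and that cue is what helps velocity estimation (mAVE).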
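For the class-imbalance item above, one common starting point is inverse-frequency sampling. Each training sample is weighted by the rarest class it contains, so frames with ambulances or animals are drawn more often. The sketch below is a generic illustration of that idea, not a method from the paper. The function name, the "rarest class" rule, and the `power` smoothing parameter are assumptions.

```python
import random
from collections import Counter

def sample_weights(sample_labels, power=1.0):
    """Normalized sampling weights from inverse class frequency.

    sample_labels: one list of class names per training sample. Class frequency counts
    how many samples contain the class; a sample takes the weight of its rarest class.
    Samples with no labels get weight 0 and are never drawn.
    """
    counts = Counter(c for labels in sample_labels for c in set(labels))
    class_w = {c: (1.0 / n) ** power for c, n in counts.items()}
    weights = [max((class_w[c] for c in set(labels)), default=0.0) for labels in sample_labels]
    total = sum(weights)
    return [w / total for w in weights] if total > 0 else weights

labels = [["car", "car", "pedestrian"], ["car"], ["car", "ambulance"], ["car"]]
w = sample_weights(labels)
random.seed(0)
batch = random.choices(range(len(labels)), weights=w, k=8)  # indices of samples to load
print(w, batch)
```

Setting `power` below 1 softens the reweighting. Pushing rare classes too hard can overfit to a handful of frames, so per-class metrics should be monitored, as the item's assumptions note.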

Long-Term Applications

- Performance certification and regulatory standards based on NDS/sAMOTA; sectors: policy/regulation, automotive/AV, safety certification

- Tools/products/workflows: develop certification protocols that require minimum NDS and tracking metrics across scenario suites (day/night/rain/intersections). Publish transparent performance reports aligned with nuScenes-style metrics.

- Assumptions/dependencies: regulatory acceptance; coverage of local ODDs beyond Boston/Singapore; scenario representativeness.

- Robust multimodal sensor fusion with redundancy against failures/adverse weather; sectors: automotive/AV, robotics

- Tools/products/workflows: design principled fusion architectures exploiting complementary failure modes (camera, lidar, radar) to maintain detection/tracking under sabotage, occlusion, or heavy rain.

- Assumptions/dependencies: sustained research in fusion strategies; validation across broader environments and sensors.

- Cost-optimized sensor stacks and edge compute co-design; sectors: automotive/AV, semiconductor, hardware

- Tools/products/workflows: use per-class performance analyses to tailor lower-cost combinations (e.g., radar + camera for velocity and thin-object detection); co-optimize model complexity using sAMOTA/TID/LGD constraints.

- Assumptions/dependencies: rigorous trade-off studies; procurement constraints; real-time budgets on embedded platforms.

- Scalable trajectory prediction and behavior modeling benchmarks; sectors: automotive/AV, smart mobility, software

- Tools/products/workflows: extend nuScenes to prediction tasks using baseline routes, maps, and multi-agent histories; standardize forecasting metrics; integrate with planners for closed-loop evaluation.

- Assumptions/dependencies: availability of expanded labels (planned by authors); joint evaluation frameworks for perception-prediction-planning.

- Semi-supervised/active learning and auto-labeling pipelines; sectors: software/MLOps, automotive/AV, academia

- Tools/products/workflows: pretrain on nuScenes, fine-tune on proprietary data with active selection (long-tail focus); leverage temporal/lidar sweeps and map priors for pseudo-labeling.

- Assumptions/dependencies: careful domain adaptation; scalable annotation review; legal constraints on dataset mixing.

- Privacy-preserving AV data ecosystems and tooling; sectors: legal/compliance, software, automotive/AV

- Tools/products/workflows: build standardized anonymization toolkits, audits, and documentation for large-scale multimodal capture; integrate with continuous data collection and MLOps pipelines.

- Assumptions/dependencies: evolving privacy laws; cross-region policy harmonization; acceptable impact on perception performance.

- Smart city safety and infrastructure planning; sectors: urban planning/public sector, smart cities, policy

- Tools/products/workflows: analyze dataset hotspots (intersections) to inform infrastructure improvements, traffic calming, and sensorized corridors; use performance deltas under rain/night to prioritize lighting/drainage upgrades.

- Assumptions/dependencies: transferability from AV dataset to broader traffic dynamics; collaboration with municipalities.

- Insurance and risk scoring for AV/ADAS fleets; sectors: insurance/finance, automotive/AV

- Tools/products/workflows: derive risk KPIs from detection/tracking quality (e.g., LGD thresholds) to price policies or set safety incentives; monitor fleet-level NDS trends over routes and weather.

- Assumptions/dependencies: access to production logs; correlation between perception KPIs and claim risk; regulatory approval for usage.

- Interoperable data marketplaces and research commons; sectors: software/MLOps, academia, industry consortia

- Tools/products/workflows: use nuScenes schema as a base for cross-dataset interoperability, cataloging, and standardized benchmarks; enable plug-and-play evaluation across datasets.

- Assumptions/dependencies: consortium governance; IP/licensing harmonization; robust converters.

- Human–vehicle interaction via natural language scene grounding; sectors: automotive/AV, HCI, accessibility

- Tools/products/workflows: combine scene descriptions and natural language referral annotations (extensions like Talk2Car) to build voice interfaces that reference the environment (e.g., “watch the bicycle crossing ahead”).

- Assumptions/dependencies: additional multimodal annotations; safety validation of language-grounded actions.

- Digital twins and dynamic point-cloud forecasting; sectors: software, smart cities, robotics

- Tools/products/workflows: train models to predict future point clouds/agent states (e.g., PointRNN) for digital twin simulations and proactive hazard detection; integrate with city-scale twins.

- Assumptions/dependencies: scalable simulation frameworks; broader coverage of urban environments.

- Global generalization and domain adaptation across countries and weather; sectors: automotive/AV, academia

- Tools/products/workflows: research transfer learning from Boston/Singapore to new geographies (lane markings, vegetation, left/right traffic); build robust augmentation (rain/night) and adaptation pipelines.

- Assumptions/dependencies: additional datasets for target regions; evaluation under local ODDs; continual learning safeguards.

- Fallback and teleoperation strategies informed by TID/LGD; sectors: automotive/AV, operations

- Tools/products/workflows: detect prolonged tracking gaps to trigger safe fallback or remote assistance; design operational policies around tracker recovery metrics.

- Assumptions/dependencies: reliable detection of metric thresholds in real time; integration with operations centers.
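The tracking-based items above (fallback triggers, early warning on prolonged tracking loss) build on TID and LGD. TID is the time from the start of a track until the object is first tracked. LGD is the longest period during which a tracked object is lost. The sketch below computes both for one ground-truth track from per-keyframe match flags at the 2 Hz annotation rate. Several conventions here are assumptions rather than the official devkit's behavior: gaps are counted in whole keyframe intervals, LGD considers only gaps after the first detection, and a never-tracked object counts its whole lifetime for both metrics. The function name is hypothetical.

```python
from itertools import groupby
from typing import Sequence, Tuple

def tid_lgd(tracked: Sequence[bool], dt: float = 0.5) -> Tuple[float, float]:
    """Return (TID, LGD) in seconds for one ground-truth track.

    tracked[k] is True if a tracker output was matched to the object at its k-th
    annotated keyframe; dt is the keyframe interval (0.5 s for 2 Hz labels).
    """
    flags = list(tracked)
    if not flags:
        raise ValueError("track has no annotated keyframes")
    if not any(flags):
        whole = len(flags) * dt  # assumption: never-tracked objects count their full lifetime
        return whole, whole
    first = flags.index(True)
    tid = first * dt
    # Longest run of consecutive misses after the first detection (assumption: the
    # initial undetected period is reflected in TID rather than LGD).
    runs = [len(list(group)) for hit, group in groupby(flags[first:]) if not hit]
    lgd = max(runs, default=0) * dt
    return tid, lgd

tid, lgd = tid_lgd([False, False, True, True, False, False, False, True])
print(tid, lgd)
```

An operations policy could apply the same run-length logic online to the current miss streak of each object, for example requesting assistance once a safety-relevant object has been lost for longer than a chosen threshold. Any such threshold would need its own validation.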

Notes on feasibility:

- Licensing: nuScenes is CC BY-NC-SA (non-commercial); commercial deployments generally require proprietary data or licensing arrangements, but tools/metrics/devkit can still be adopted.

- Sensor and environment differences: production fleets may use different sensors or drive in dissimilar ODDs; domain adaptation and calibration are required.

- HD maps and localization: map priors depend on accurate, up-to-date semantic maps and robust localization; availability varies by geography.

- Compute budgets: multi-sweep accumulation and multimodal fusion increase resource needs; edge constraints must be considered for deployment.
