- The paper introduces an adversarial framework combining TANet and BiNet to augment noisy document images and achieve superior binarization.

- The methodology uses content and style encoders along with adversarial and L2 loss functions to merge textual content with realistic noise textures.

- Experimental evaluations on DIBCO benchmarks demonstrate improved metrics, including an F-measure of 97.8 and a PSNR of 24.3, compared to state-of-the-art baselines.

Improving Document Binarization via Adversarial Noise-Texture Augmentation

Introduction

The paper "Improving Document Binarization via Adversarial Noise-Texture Augmentation" introduces an adversarial framework to enhance document binarization by employing noise-texture augmentation. Traditional methods based on fixed thresholding often fail under document degradations such as faded ink or variable lighting. This work utilizes a Generative Adversarial Network (GAN) framework to address these challenges by generating augmented training data that mimic real-world noisy conditions.
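To make the failure mode of fixed thresholding concrete, here is a minimal sketch on a synthetic scanline. It is not taken from the paper. The threshold value, the ink contrast, and the linear lighting gradient are all illustrative assumptions.

```python
import numpy as np

def global_threshold(gray: np.ndarray, t: float) -> np.ndarray:
    """Fixed global thresholding: pixels darker than t become ink (1), others background (0)."""
    return (gray < t).astype(np.uint8)

# Synthetic 1 x 8 scanline (assumed values): background brightness fades from
# 0.9 to 0.3 left to right, simulating uneven lighting, and each ink pixel is
# 0.2 darker than the background at its position.
background = np.linspace(0.9, 0.3, 8)
ink_mask = np.array([0, 1, 0, 0, 0, 1, 0, 0], dtype=np.uint8)
gray = background - 0.2 * ink_mask

pred = global_threshold(gray, t=0.5)
```

With a single threshold of 0.5, the ink pixel in the bright region (index 1) is missed, and the dark background at the right end (indices 6 and 7) is labeled as ink. A learned binarizer trained on varied degradations aims to avoid exactly this kind of error.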

Proposed Framework

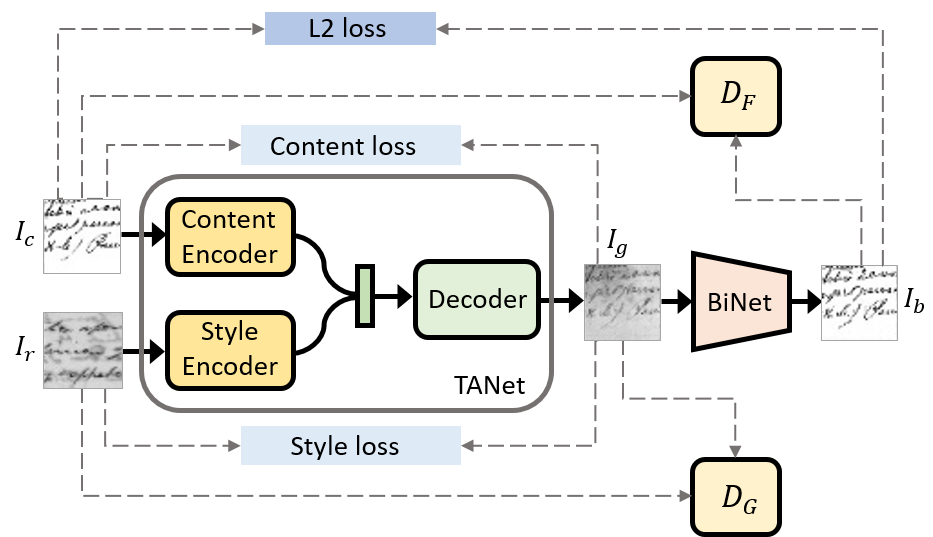

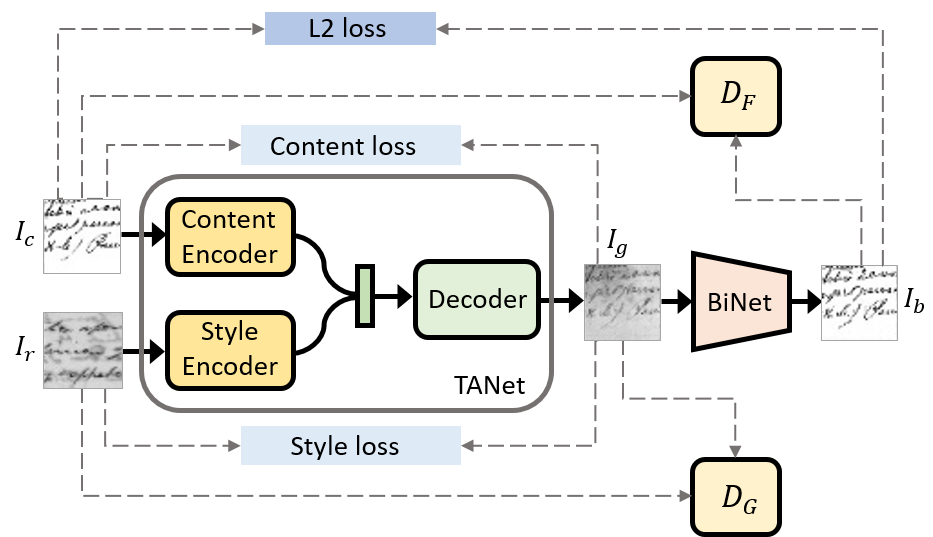

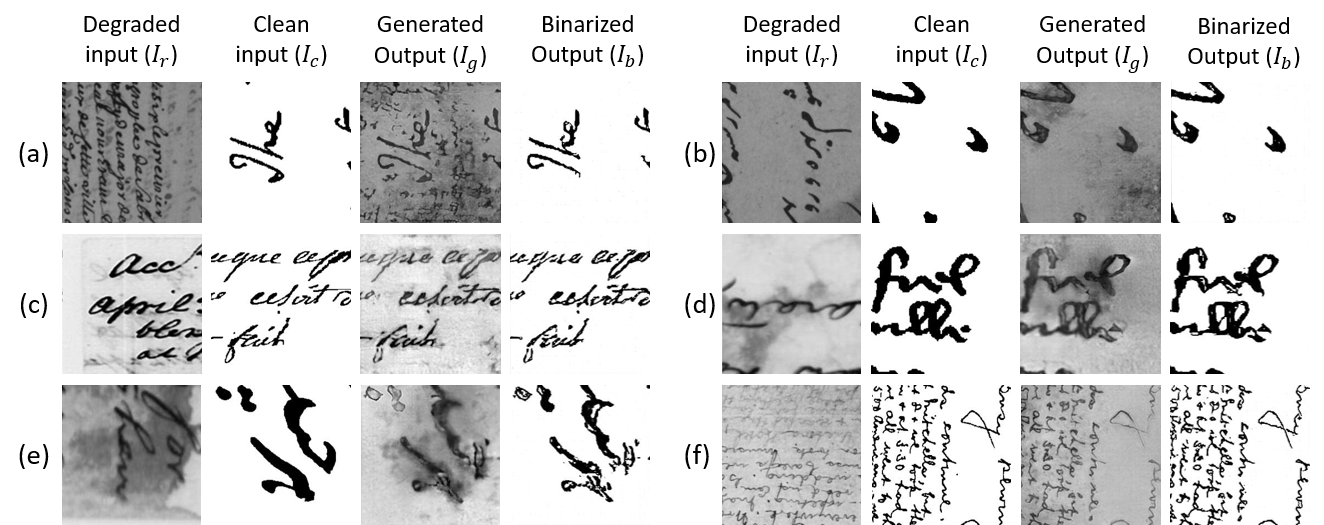

The proposed method integrates two neural networks: the Texture Augmentation Network (TANet) and the Binarization Network (BiNet). TANet is responsible for augmenting clean document images with noise textures derived from degraded reference images. This augmentation enlarges the effective training dataset by creating variations with realistic noise patterns. BiNet then processes these augmented images to return a clean, binarized version.

Figure 1: Illustration of the proposed framework. It consists of two networks, TANet and BiNet. TANet takes a clean document image $I_c$ and a degraded reference image $I_r$ and tries to generate an image $I_g$ with the same textual content as $I_c$ while retaining the noisy texture of $I_r$.
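The data flow in Figure 1 can be sketched as a dataset-enlargement step. The sketch assumes that each generated image reuses the binarized clean image as its training target, which follows from $I_g$ keeping the text of $I_c$. The function names, the 0.5 ink threshold, and `toy_tanet` are hypothetical placeholders, not the authors' code.

```python
import random
from typing import Callable, List, Tuple

import numpy as np

def build_augmented_pairs(
    clean_images: List[np.ndarray],
    reference_images: List[np.ndarray],
    tanet: Callable[[np.ndarray, np.ndarray], np.ndarray],
    copies_per_image: int = 2,
    seed: int = 0,
) -> List[Tuple[np.ndarray, np.ndarray]]:
    """Build (augmented input, binary target) pairs for training BiNet.

    Each clean image I_c is combined with `copies_per_image` randomly drawn
    degraded references I_r. Because the generated image I_g keeps the text
    of I_c, the binarized clean image is reused as its target.
    """
    rng = random.Random(seed)
    pairs = []
    for clean in clean_images:
        # Assumption: intensities lie in [0, 1] and ink is darker than 0.5.
        target = (clean < 0.5).astype(np.uint8)
        for _ in range(copies_per_image):
            reference = rng.choice(reference_images)
            pairs.append((tanet(clean, reference), target))
    return pairs

def toy_tanet(clean: np.ndarray, reference: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for TANet: modulate the clean page by the reference texture."""
    return np.clip(clean * reference, 0.0, 1.0)
```

With three clean pages and `copies_per_image=2`, this yields six training pairs. This is the sense in which augmentation enlarges the effective training set without any new manual labeling.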

Methodology

Texture Augmentation Network (TANet): TANet uses a content encoder to extract the textual content of the clean image and a style encoder to extract the noise texture of the reference image, then merges the two representations. The generated output preserves the textual content of the clean image while adopting the noise characteristics of the reference image. Training combines three losses: an adversarial loss for realism, a style loss for accurate texture transfer, and a content loss for textual fidelity.
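The three TANet loss terms can be sketched on encoder feature maps of shape (C, H, W). The paper summary does not define the exact formulas, so several choices here are assumptions:

- a Gram-matrix style loss, a common texture-transfer choice;
- a mean-squared content loss;
- a non-saturating generator adversarial loss;
- hypothetical weights `w_adv`, `w_style`, and `w_content`.

```python
import numpy as np

def gram_matrix(feat: np.ndarray) -> np.ndarray:
    """Channel-correlation (Gram) matrix of a (C, H, W) feature map, normalized by C*H*W."""
    c, h, w = feat.shape
    f = feat.reshape(c, h * w)
    return f @ f.T / (c * h * w)

def style_loss(feat_gen: np.ndarray, feat_ref: np.ndarray) -> float:
    # Texture statistics of I_g should match those of the degraded reference I_r.
    return float(np.mean((gram_matrix(feat_gen) - gram_matrix(feat_ref)) ** 2))

def content_loss(feat_gen: np.ndarray, feat_clean: np.ndarray) -> float:
    # Spatially aligned features of I_g should match those of the clean image I_c.
    return float(np.mean((feat_gen - feat_clean) ** 2))

def generator_adv_loss(d_fake_prob: np.ndarray, eps: float = 1e-7) -> float:
    # Non-saturating GAN loss: push the discriminator's output on I_g toward "real" (1).
    return float(-np.mean(np.log(np.clip(d_fake_prob, eps, 1.0))))

def tanet_loss(feat_gen, feat_clean, feat_ref, d_fake_prob,
               w_adv=1.0, w_style=10.0, w_content=1.0) -> float:
    """Weighted sum of the three terms; the weights are illustrative, not from the paper."""
    return (w_adv * generator_adv_loss(d_fake_prob)
            + w_style * style_loss(feat_gen, feat_ref)
            + w_content * content_loss(feat_gen, feat_clean))
```

The Gram matrix discards spatial layout. Shuffling the pixel positions of a feature map leaves its style loss unchanged but raises its content loss. This is why the two terms can separately control texture and text placement.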

Binarization Network (BiNet): BiNet uses an image-to-image translation framework to remove the noise textures introduced by TANet and produce a clean, binarized image. It is optimized with an adversarial loss, which encourages sharp outputs, and an L2 loss, which preserves content.
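A minimal sketch of the BiNet objective pairs an adversarial term with an L2 term, alongside the matching discriminator loss. The non-saturating binary cross-entropy form and the weight `lam=100.0` are assumptions borrowed from common image-to-image GAN practice, not values reported for this paper.

```python
import numpy as np

def binet_generator_loss(pred, target, d_fake_prob, lam=100.0, eps=1e-7) -> float:
    """BiNet generator objective: adversarial term + lam * L2 term.

    pred, target: predicted and ground-truth binary maps with values in [0, 1].
    d_fake_prob: discriminator probabilities that the predictions are real.
    """
    # Adversarial term: reward outputs the discriminator accepts as real.
    adv = -np.mean(np.log(np.clip(d_fake_prob, eps, 1.0)))
    # L2 (mean squared error) between prediction and ground truth preserves content.
    l2 = np.mean((pred - target) ** 2)
    return float(adv + lam * l2)

def discriminator_loss(d_real_prob, d_fake_prob, eps=1e-7) -> float:
    """Binary cross-entropy: ground-truth maps should score 1, BiNet predictions 0."""
    real = -np.mean(np.log(np.clip(d_real_prob, eps, 1.0)))
    fake = -np.mean(np.log(np.clip(1.0 - d_fake_prob, eps, 1.0)))
    return float(real + fake)
```

The L2 term anchors each output to its pixel-aligned ground truth. The adversarial term penalizes the blurry, averaged strokes that a pure L2 objective tends to produce.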

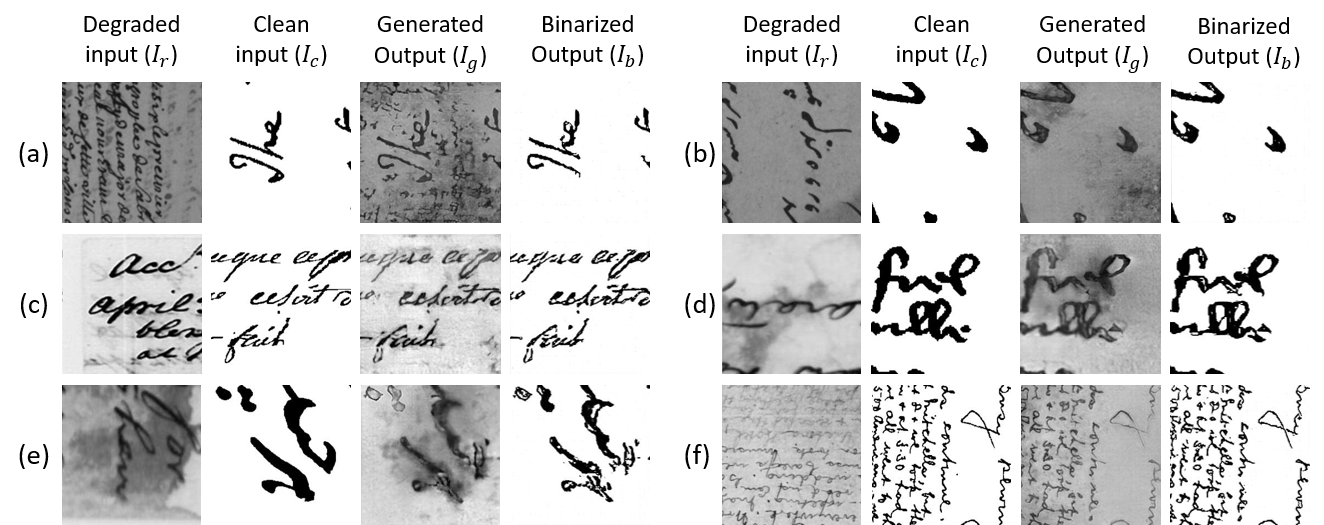

Figure 2: Qualitative evaluation of texture transfer process and binarization technique on the evaluation set.

Experimental Evaluation

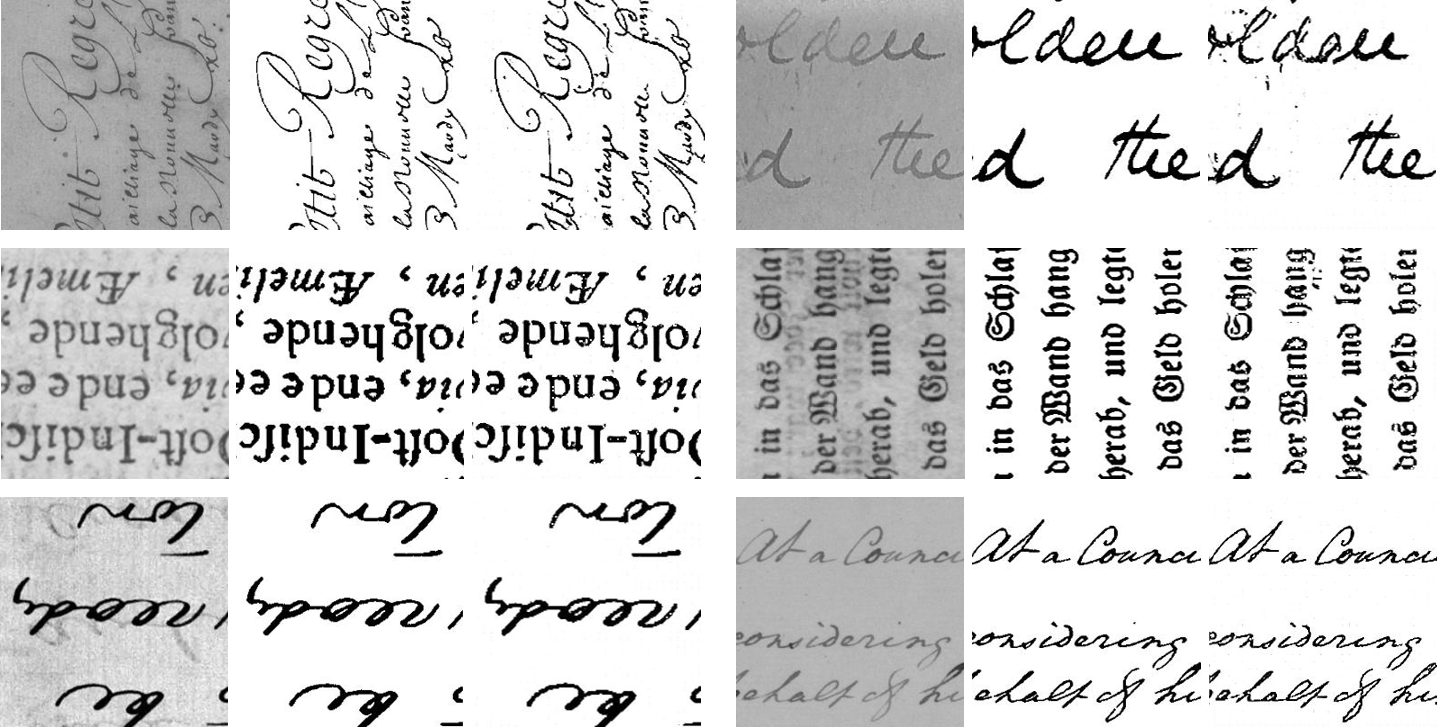

The experiments used several datasets, including the DIBCO benchmarks, to validate the model, with training on a mixture of image patches and augmentations. The proposed framework was compared against traditional binarization techniques and deep-learning-based methods such as U-Net, Pix2Pix, and CycleGAN.
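Patch-based training of this kind can be sketched as random aligned crops from an image and its ground truth. The patch size, the number of patches, and the horizontal-flip augmentation are hypothetical choices, because the exact augmentations used are not specified.

```python
import numpy as np

def sample_patches(image, gt, patch=64, n=4, flip_prob=0.5, rng=None):
    """Crop n aligned (image, ground-truth) patches at random positions.

    A random horizontal flip (an assumed augmentation) is applied identically
    to both crops, so pixel-wise correspondence with the label is preserved.
    """
    rng = np.random.default_rng(0) if rng is None else rng
    h, w = image.shape[:2]
    if h < patch or w < patch:
        raise ValueError("image is smaller than the patch size")
    patches = []
    for _ in range(n):
        # integers(low, high) excludes high, so the crop always fits inside the image.
        y = int(rng.integers(0, h - patch + 1))
        x = int(rng.integers(0, w - patch + 1))
        img_crop = image[y:y + patch, x:x + patch]
        gt_crop = gt[y:y + patch, x:x + patch]
        if rng.random() < flip_prob:
            img_crop, gt_crop = img_crop[:, ::-1], gt_crop[:, ::-1]
        patches.append((img_crop, gt_crop))
    return patches
```

Using the same crop window and flip for both arrays is the key design point. Any transform applied to the input must be mirrored on the label.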

The proposed method outperformed the state-of-the-art baselines across F-measure, pseudo-F-measure (F_ps), Distance Reciprocal Distortion (DRD), and PSNR (see the table below).

| Method | F-measure (%) | Pseudo-F-measure, F_ps (%) | DRD (lower is better) | PSNR (dB) |
|-------------|-----------|---------|------|------|
| Baseline A | XX.X | XX.X | XX.X | XX.X |
| Baseline B | XX.X | XX.X | XX.X | XX.X |
| Ours | 97.8 | 98.7 | 1.1 | 24.3 |
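Two of the reported metrics can be sketched for 0/1 masks where ink is 1. F-measure is the harmonic mean of precision and recall over ink pixels. PSNR is 10·log10(C²/MSE), with C = 1 as the foreground/background difference for binary images. Pseudo-F-measure and DRD are omitted because they need additional weight maps defined by the DIBCO evaluation protocol.

```python
import numpy as np

def f_measure(pred: np.ndarray, gt: np.ndarray) -> float:
    """F-measure (%) with ink pixels (value 1) as the positive class."""
    pred, gt = pred.astype(bool), gt.astype(bool)
    tp = np.sum(pred & gt)    # ink correctly detected
    fp = np.sum(pred & ~gt)   # background wrongly marked as ink
    fn = np.sum(~pred & gt)   # ink that was missed
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return float(100 * 2 * precision * recall / (precision + recall))

def psnr(pred: np.ndarray, gt: np.ndarray, c: float = 1.0) -> float:
    """PSNR (dB) = 10 * log10(c^2 / MSE); c = 1 for 0/1 binary masks."""
    mse = np.mean((pred.astype(float) - gt.astype(float)) ** 2)
    return float("inf") if mse == 0 else float(10 * np.log10(c ** 2 / mse))
```

As a quick check, a 10 x 10 prediction with a single wrong pixel has an MSE of 0.01 and therefore a PSNR of 20 dB.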

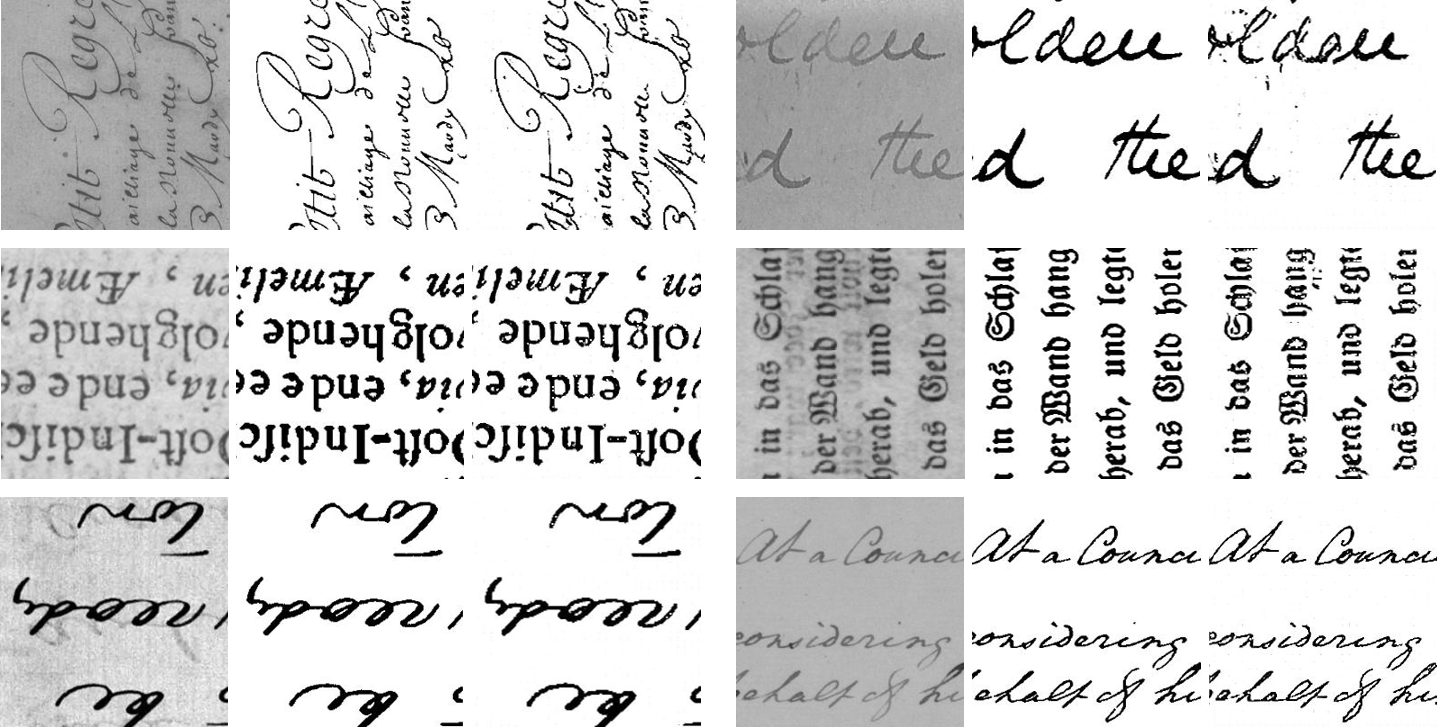

Figure 3: Binarization results of the trained BiNet model on the test set. For each sample, the images are shown from left to right: input, prediction, and ground truth.

Conclusion

The adversarial noise-texture augmentation method improves the robustness and accuracy of document binarization. By using unpaired data to generate realistic degraded training images, the framework addresses the challenges posed by document degradation. Future work could further refine the adversarial training strategy and apply it to other document processing tasks.