- The paper presents a framework that efficiently trains visuomotor navigation policies by combining simulation data with limited real-world fine-tuning.

- It employs adversarial domain adaptation and a stochastic forward dynamics model to effectively handle real-world uncertainties.

- Experimental results in lane-following tasks demonstrate robust performance and significantly reduced reliance on extensive real-world training.

A Data-Efficient Framework for Training and Sim-to-Real Transfer of Navigation Policies

Introduction

The paper introduces a framework for efficiently training visuomotor policies for robotic navigation by combining simulation and real-world data. Its main goal is to reduce the high cost and risk of training on real robots. Policies are learned mostly in simulation and then transferred to the real world using meta-learning and adversarial domain adaptation.

Methodology

General Framework

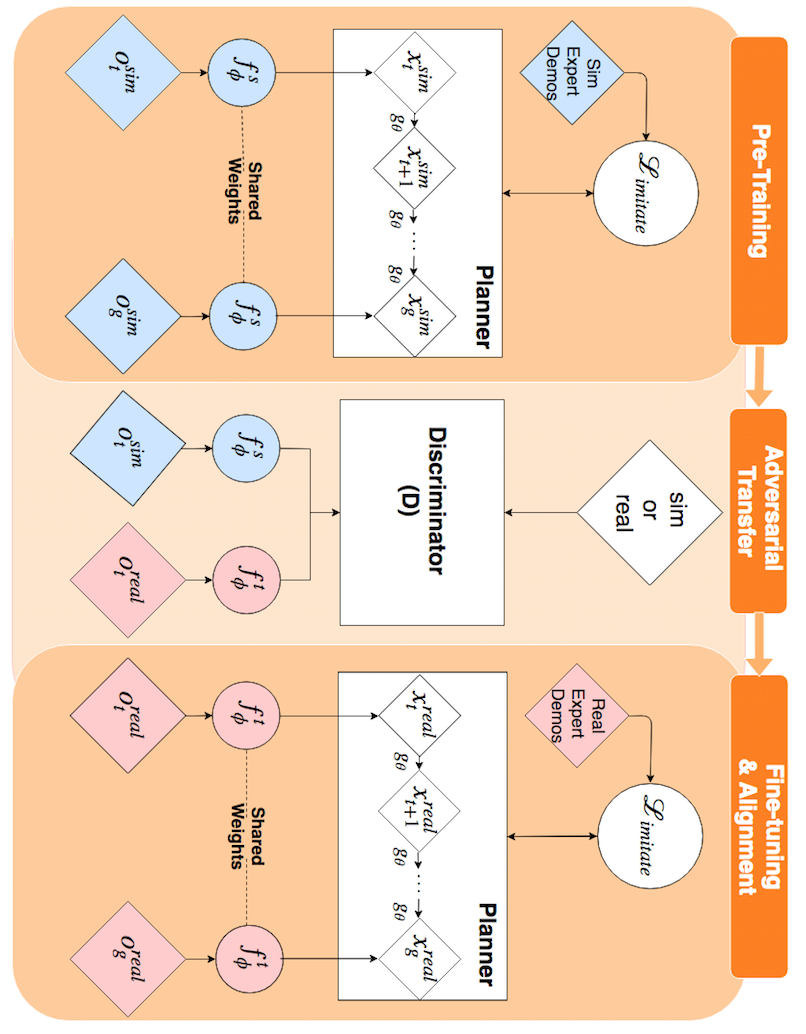

The proposed method involves training a planner in a simulated environment, transferring the learned policy to a real setup using adversarial domain adaptation, and fine-tuning with a small number of real-world examples.

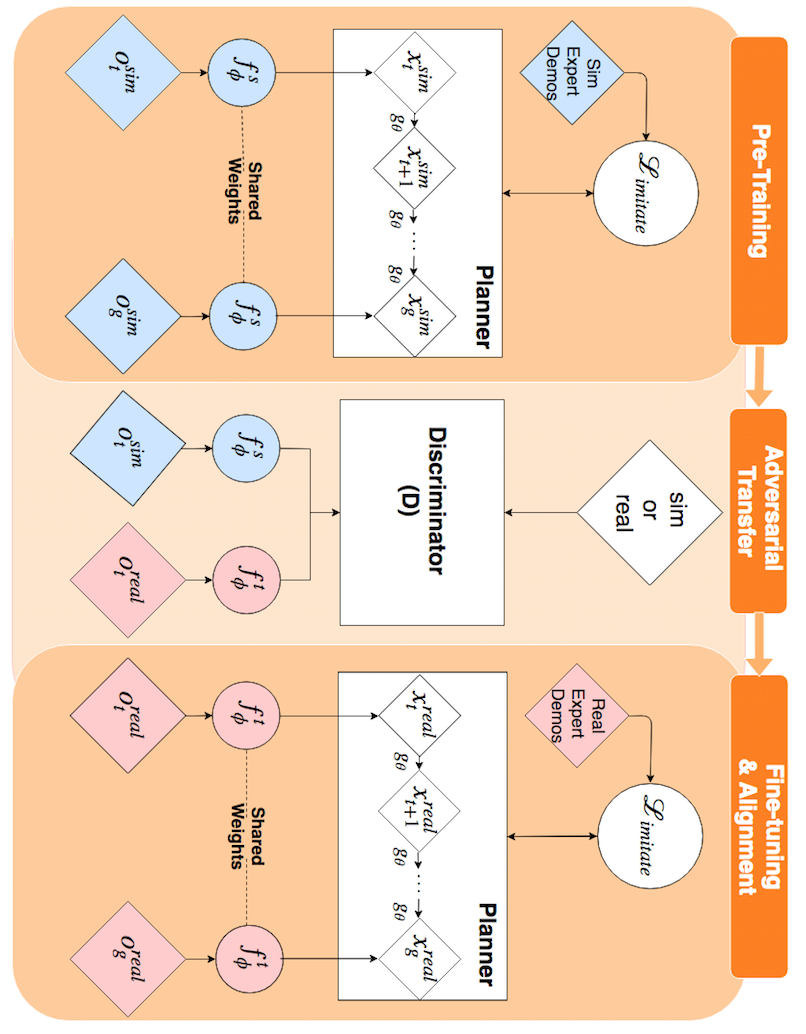

Figure 1: Sim-to-real transfer of navigation policies, illustrating the main elements such as simulation-based training, adversarial discriminative transfer, and real environment fine-tuning.

Stochastic Forward Dynamics Model

Adding a stochastic component to the forward dynamics model accounts for real-world randomness that a deterministic model cannot capture. State transitions are modeled probabilistically rather than as single point predictions, which makes the policy more robust when deployed on real robots.
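A minimal sketch of what a stochastic forward model can look like, assuming a diagonal Gaussian over the next latent state. The class name `GaussianForwardModel`, the linear mean `z + dt * u`, and the fixed noise scale `sigma` are hypothetical choices for illustration. The summary does not specify the model's form, and a learned network would normally predict both the mean and the variance.

```python
import math
import random


class GaussianForwardModel:
    """Toy stochastic forward dynamics in a latent space (hypothetical).

    The next latent state is Gaussian: z_next ~ N(mean(z, u), sigma^2 I).
    The mean is a fixed linear map, z + dt * u, standing in for a learned
    network; sigma is a fixed noise scale rather than a predicted one.
    """

    def __init__(self, dt=0.1, sigma=0.05):
        self.dt = dt
        self.sigma = sigma

    def mean(self, z, u):
        # Deterministic part of the transition.
        return [zi + self.dt * ui for zi, ui in zip(z, u)]

    def sample(self, z, u, rng):
        # Reparameterized sample: mean + sigma * eps, with eps ~ N(0, 1).
        return [m + self.sigma * rng.gauss(0.0, 1.0) for m in self.mean(z, u)]

    def log_likelihood(self, z, u, z_next):
        # Log-density of an observed next state under the diagonal Gaussian.
        # Maximizing this over recorded transitions fits the model to data.
        var = self.sigma ** 2
        ll = 0.0
        for m, x in zip(self.mean(z, u), z_next):
            ll += -0.5 * math.log(2.0 * math.pi * var) - (x - m) ** 2 / (2.0 * var)
        return ll


# Usage: three plausible next states for the same (state, action) pair.
rng = random.Random(0)
model = GaussianForwardModel()
z, u = [0.0, 1.0], [1.0, -1.0]
futures = [model.sample(z, u, rng) for _ in range(3)]
```

Drawing several samples from the same `(z, u)` pair yields a spread of possible futures, which lets a planner account for uncertainty instead of trusting a single prediction.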

Learning the Planning Loss Function

To improve adaptability, the authors parameterize the planning loss function with a multi-layer perceptron (MLP) and learn it. The planner minimizes this loss in an inner loop, while an outer imitation-learning loop updates the MLP so that the resulting plans match expert behavior, adapting the loss to the task at hand.
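The bi-level structure can be sketched with a deliberately tiny example. As a simplification, the MLP is replaced by a single learnable weight `w = exp(theta)`, the dynamics are a hypothetical one-step model `z_next = z + u`, and the outer gradient is taken by finite differences rather than by backpropagating through the inner loop. All names and constants are illustrative assumptions, not the paper's implementation.

```python
import math


def planned_action(theta, z=0.0, goal=1.0, steps=50, lr=0.05):
    """Inner loop: plan an action by gradient descent on a learned loss.

    Hypothetical stand-in for the MLP planning loss: one learnable weight
    w = exp(theta) trades off reaching the goal against action effort,
        L_w(u) = w * (z + u - goal) ** 2 + u ** 2,
    under the toy one-step dynamics z_next = z + u.
    """
    w = math.exp(theta)
    u = 0.0
    for _ in range(steps):
        grad = 2.0 * w * (z + u - goal) + 2.0 * u  # dL_w / du
        u -= lr * grad
    return u


def imitation_loss(theta, expert_u):
    # Outer objective: squared gap between the planned and expert actions.
    return (planned_action(theta) - expert_u) ** 2


def learn_planning_loss(expert_u, theta=0.0, outer_steps=200, outer_lr=5.0, eps=1e-5):
    """Outer loop: tune the loss parameter so that planning imitates the expert.

    The outer gradient is a central finite difference through the whole
    inner loop, a simplification standing in for backpropagation.
    """
    for _ in range(outer_steps):
        g = (imitation_loss(theta + eps, expert_u)
             - imitation_loss(theta - eps, expert_u)) / (2.0 * eps)
        theta -= outer_lr * g
    return theta


theta = learn_planning_loss(expert_u=0.8)
print(math.exp(theta), planned_action(theta))
```

From `z = 0` toward `goal = 1`, the inner-loop optimum of `L_w` is `u = w / (w + 1)`, so an expert action of 0.8 should drive the learned weight toward 4. Parameterizing `w` through `exp` keeps it positive throughout the outer updates.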

Regularization Strategies

The paper uses two regularization terms to speed up convergence. Smoothness regularization keeps consecutive latent states close to each other in latent space, while consistency regularization requires the direct encoding of each image to agree with the latent state obtained by propagating earlier states through the dynamics model.
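A minimal sketch of the two regularizers on plain Python lists, assuming squared Euclidean distance and equal weighting over time steps; the summary states neither. The helper names (`smoothness_loss`, `consistency_loss`, `rollout`) and the toy dynamics in the example are hypothetical.

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def smoothness_loss(latents):
    """Mean squared distance between consecutive latent states z_t and z_{t+1}."""
    pairs = list(zip(latents[:-1], latents[1:]))
    return sum(squared_distance(a, b) for a, b in pairs) / len(pairs)


def rollout(z0, actions, dynamics):
    # Propagate the first encoding through the dynamics model, one action at a time.
    states = [z0]
    for u in actions:
        states.append(dynamics(states[-1], u))
    return states


def consistency_loss(encoded, propagated):
    """Mean squared distance between each image's direct encoding and the
    latent state predicted by propagating earlier states."""
    return sum(squared_distance(e, p) for e, p in zip(encoded, propagated)) / len(encoded)


def toy_dynamics(z, u):
    # Hypothetical linear latent dynamics used only for this example.
    return [zi + 0.1 * ui for zi, ui in zip(z, u)]


# Example with hypothetical 2-D encodings of three consecutive images.
encoded = [[0.0, 0.0], [0.1, 0.0], [0.2, 0.1]]
actions = [[1.0, 0.0], [1.0, 0.0]]
propagated = rollout(encoded[0], actions, toy_dynamics)
print(smoothness_loss(encoded), consistency_loss(encoded, propagated))
```

In training, these terms would be added to the main objective with weighting coefficients (for example `lambda_s * smoothness + lambda_c * consistency`), whose values are not given here.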

Sim-to-Real Transfer Approach

The sim-to-real transfer leverages a generative adversarial network (GAN) architecture to match the latent encodings of real and simulated images. This adversarial training aligns the image embeddings such that the dynamics model, trained in simulation, can generalize effectively to real-world conditions.
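One common way to set up this kind of adversarial alignment, in the style of adversarial discriminative domain adaptation, is sketched below. A discriminator learns to tell simulated embeddings from real ones, and the real-image encoder is trained with inverted labels so that its embeddings become indistinguishable from simulated ones and can be consumed by the simulation-trained dynamics model. The linear logistic discriminator and the function names are hypothetical simplifications; in practice both networks are deep and are updated in alternation.

```python
import math


def discriminator(z, w, b):
    """Linear logistic discriminator (hypothetical): estimated probability
    that embedding z came from the simulator."""
    s = sum(wi * zi for wi, zi in zip(w, z)) + b
    return 1.0 / (1.0 + math.exp(-s))


def discriminator_loss(sim_batch, real_batch, w, b):
    # Binary cross-entropy with simulated embeddings labeled 1 and real ones 0.
    loss_sim = -sum(math.log(discriminator(z, w, b)) for z in sim_batch) / len(sim_batch)
    loss_real = -sum(math.log(1.0 - discriminator(z, w, b)) for z in real_batch) / len(real_batch)
    return loss_sim + loss_real


def real_encoder_loss(real_batch, w, b):
    # Inverted-label objective for the real-image encoder: the loss is low
    # when the discriminator mistakes real embeddings for simulated ones.
    return -sum(math.log(discriminator(z, w, b)) for z in real_batch) / len(real_batch)
```

With an all-zero discriminator every output is 0.5, so the discriminator loss is `2 * log(2)` and the encoder loss is `log(2)`. As the discriminator improves, its loss falls and the encoder loss rises, which is the signal that pushes real embeddings toward the simulated distribution.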

Experimental Setup

Environment

Experiments are conducted in the Duckietown simulator and on the physical Duckietown testbed, evaluating policy performance in lane-following and left-turn scenarios. Episodes start from a diverse set of initial conditions, which allows a comprehensive assessment of both the trained policy and the transfer strategy.

Results

Performance in Simulation

The planner performs strongly in simulation, converging rapidly and achieving high average rewards in both the lane-following and left-turn tasks. Model A, the variant that integrates all of the components described above, significantly outperformed the baseline methods.

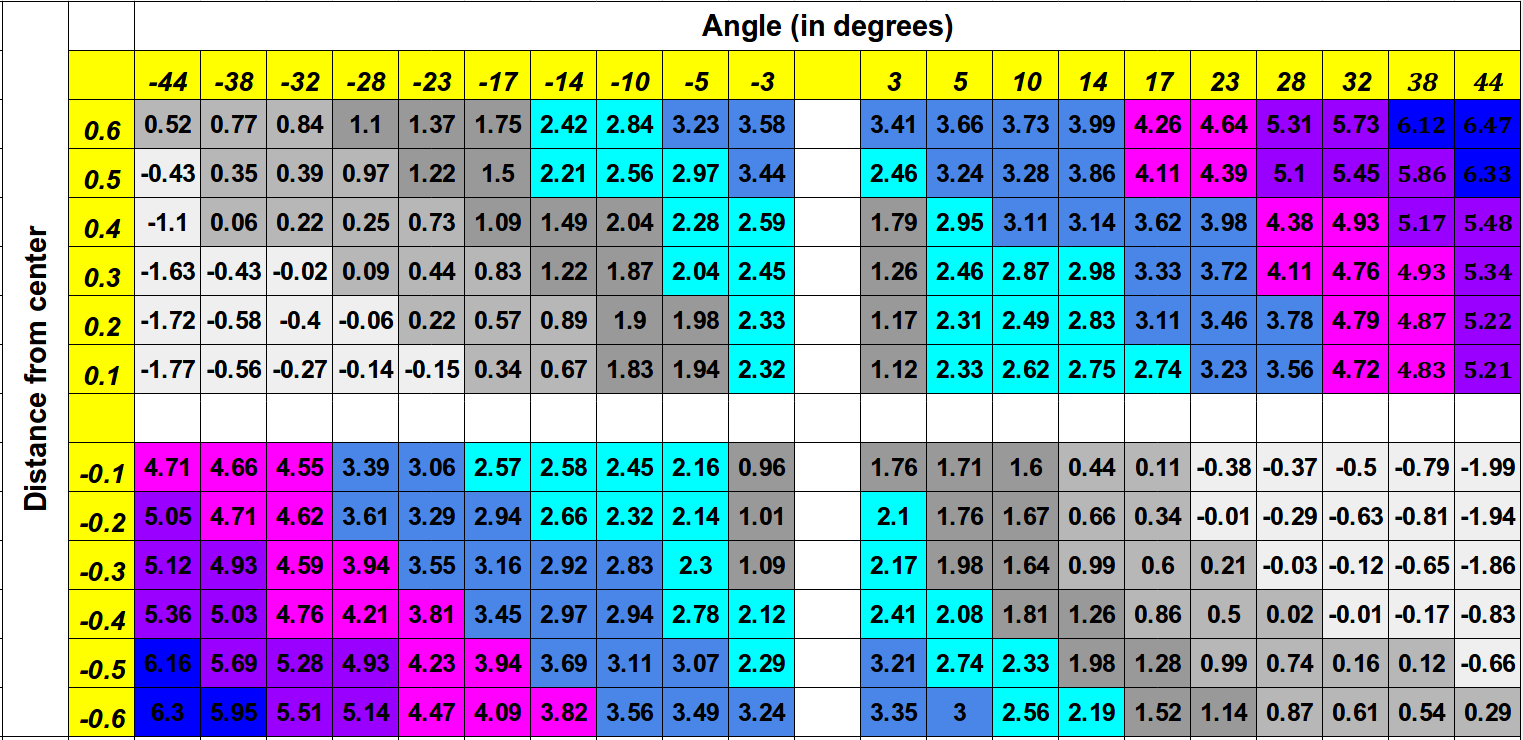

Figure 2: Evaluation of the inner-loop loss function in the Duckietown simulator, demonstrating robustness across various horizon lengths when trained with curriculum learning.
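The caption does not describe the curriculum itself. As a purely hypothetical illustration, a horizon curriculum might start with short planning horizons and lengthen them as training progresses, for example on a fixed epoch schedule like the one below. The function name and all constants are assumptions.

```python
def horizon_schedule(epoch, start=1, max_horizon=10, epochs_per_step=5):
    """Planning horizon to use at a given training epoch (hypothetical schedule).

    The horizon grows by one step every `epochs_per_step` epochs, starting
    at `start` and capped at `max_horizon`.
    """
    return min(max_horizon, start + epoch // epochs_per_step)


# Horizons used at epochs 0, 5, 10, ..., 50.
print([horizon_schedule(e) for e in range(0, 55, 5)])
```

A common motivation for this ordering is that short horizons give the planner easier problems first, while longer horizons compound model errors and are introduced only after shorter ones are handled well. A performance-gated schedule (advancing only once a validation loss drops below a threshold) is an equally plausible alternative.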

Real Robot Deployment

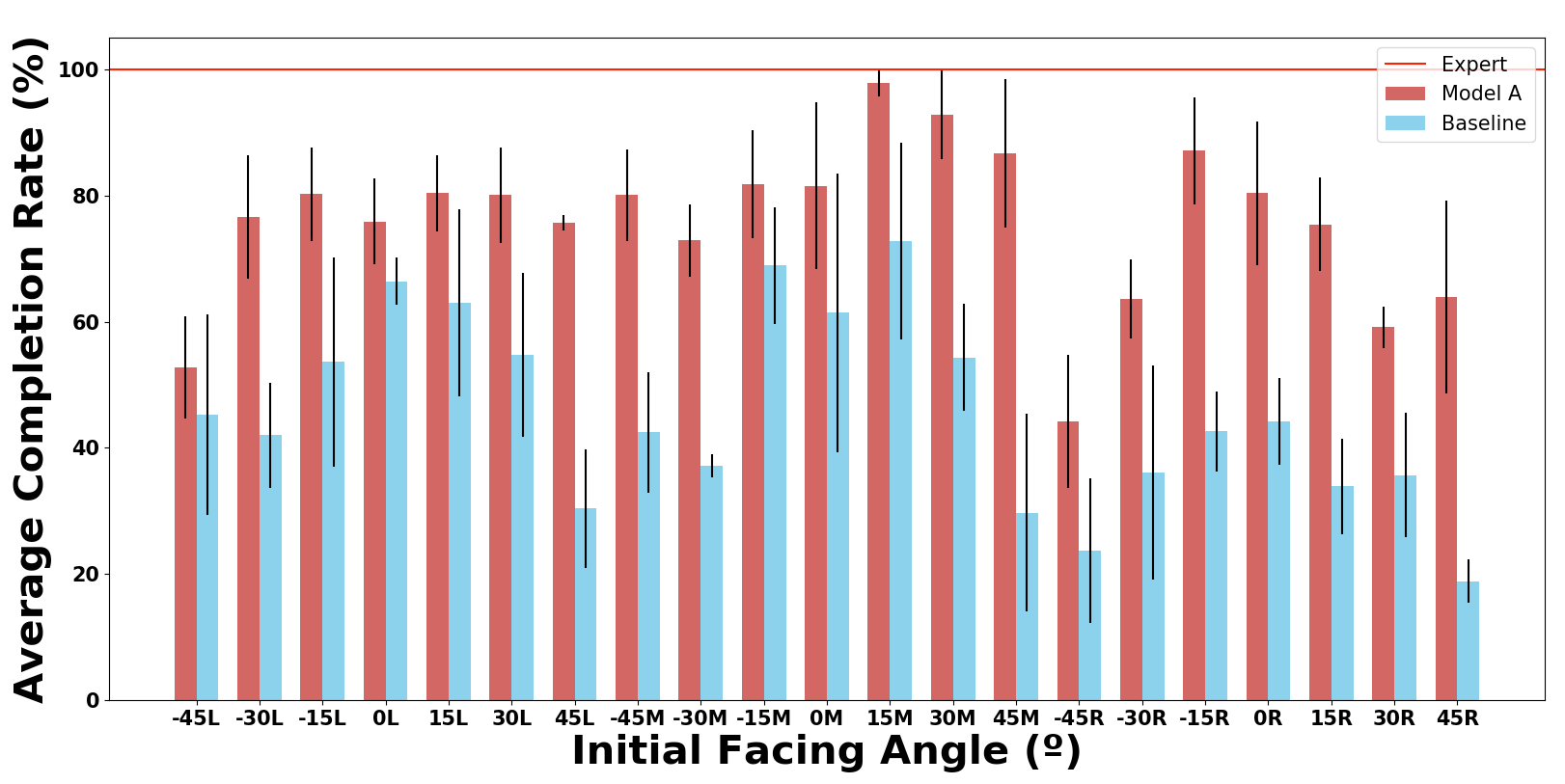

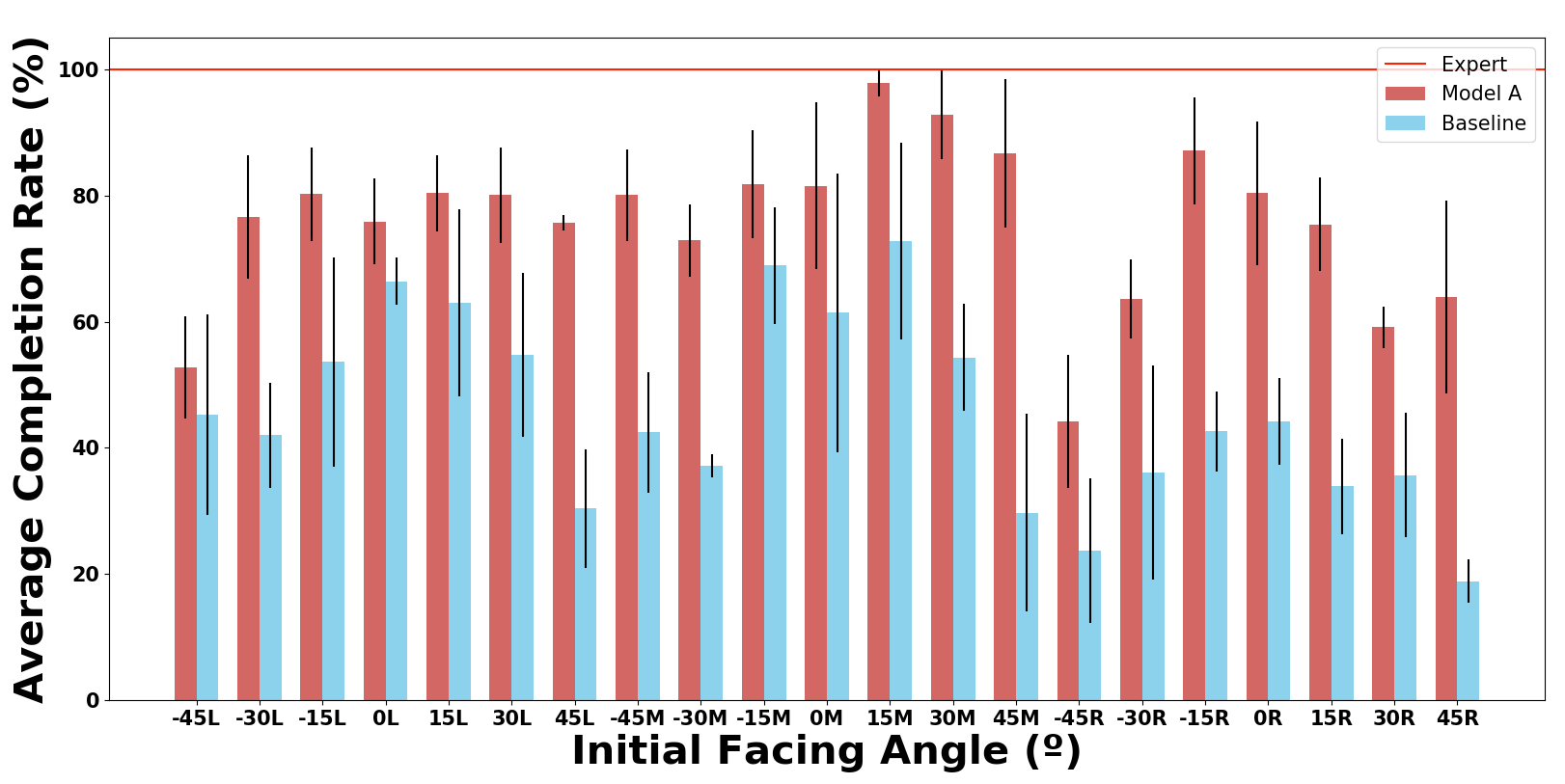

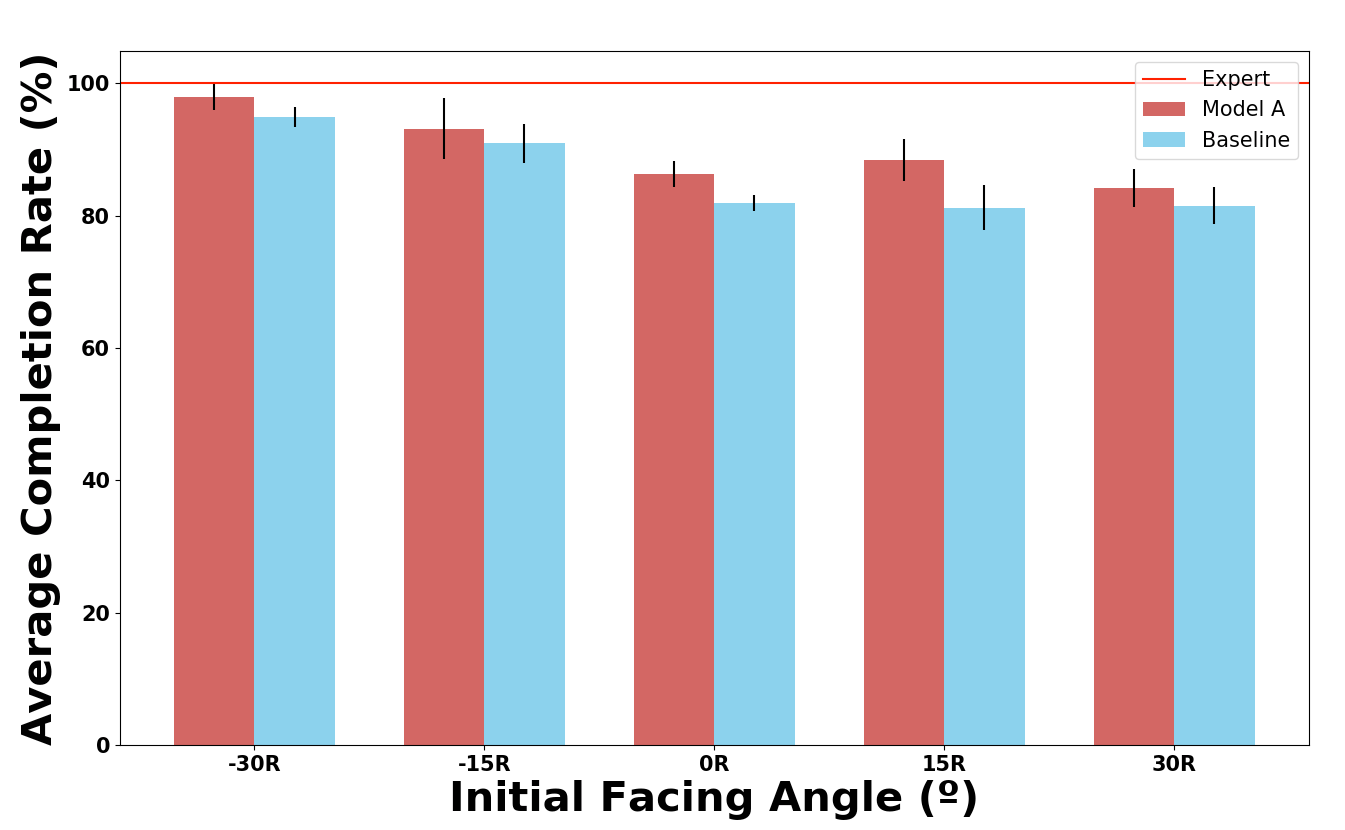

Real-world tests confirm successful sim-to-real transfer: the model achieves completion rates above 50% even in challenging configurations. The adversarial adaptation technique proved effective, requiring fewer real-world iterations than direct training.

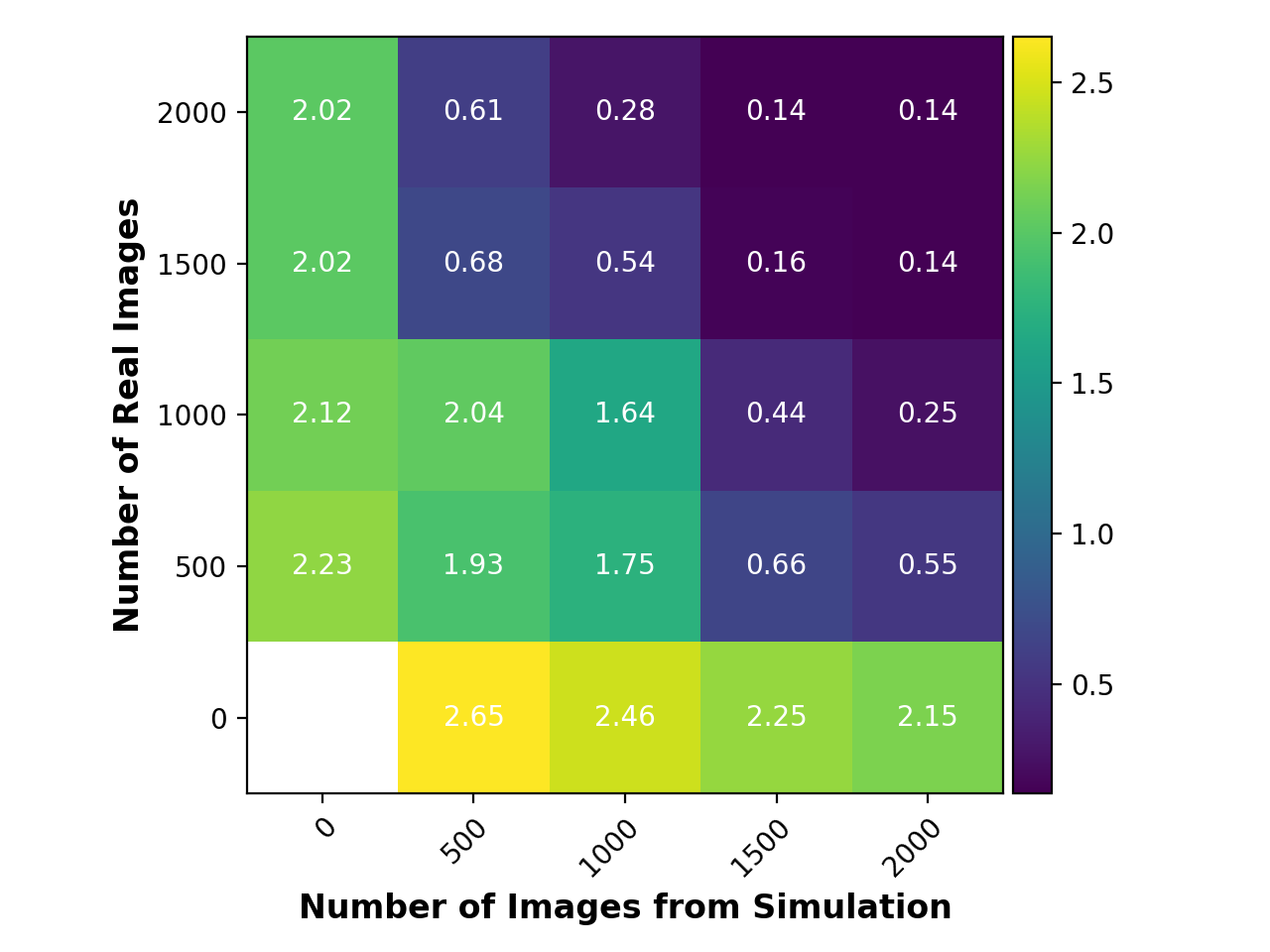

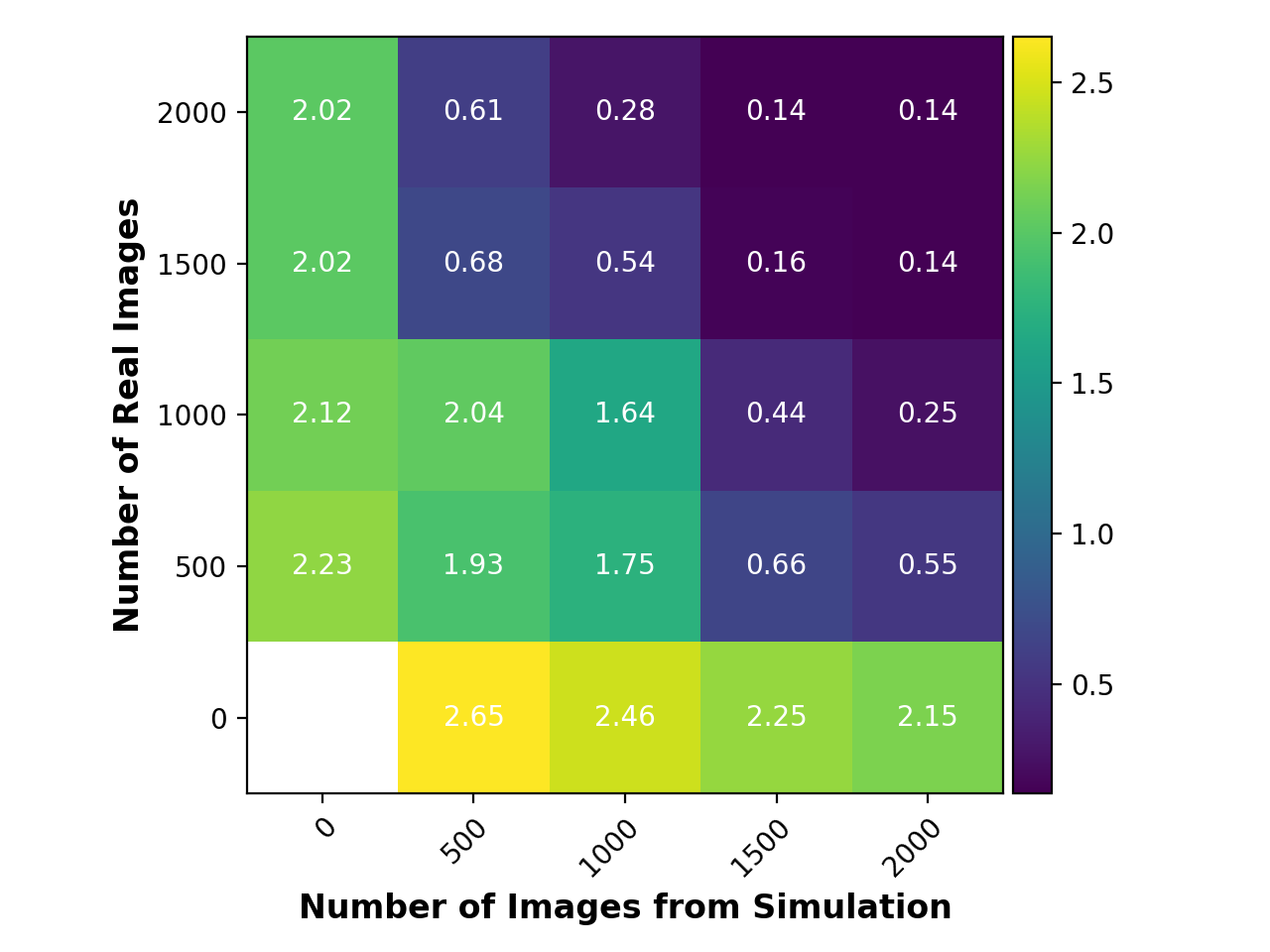

Figure 3: Performance of adversarial transfer, measured as loss, as a function of the number of real and simulated images used in training, after pre-training in simulation with 2000 expert demonstrations.

Conclusion

The paper presents a robust sim-to-real transfer framework that significantly reduces the number of real-world demonstrations required. The methodology makes training robotic navigation policies easier and more efficient, paving the way for broader applicability to complex robotic tasks. Future work may refine the adversarial transfer process to further reduce real-world data requirements and explore additional applications in mobile robotics.

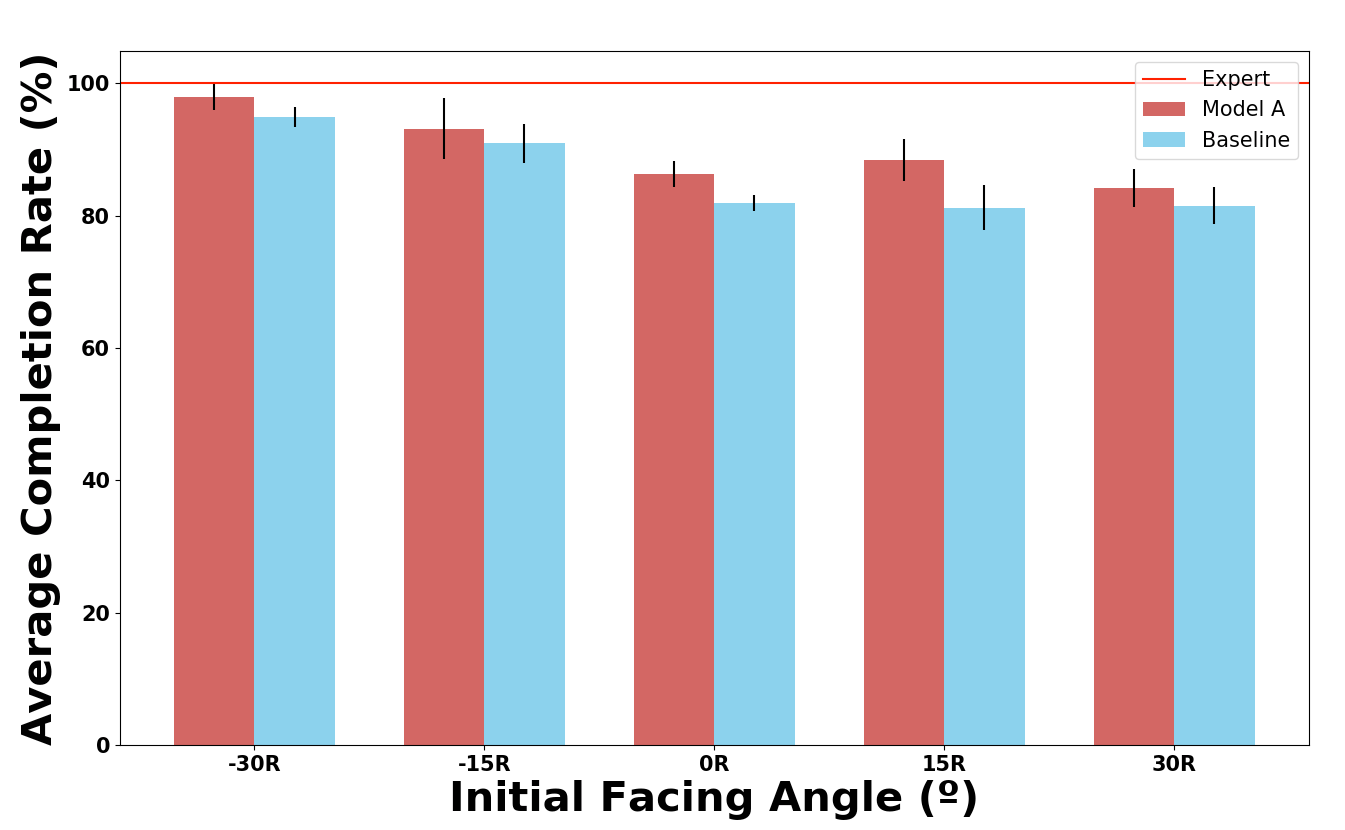

Figure 4: Average completion rate on the real-robot lane-following test, showing the efficiency of the sim-to-real transfer methodology.