- The paper introduces a hybrid generative framework that synthesizes 3D scenes by integrating arrangement and image-based representations using a feed-forward network.

- It employs a novel matrix encoding of scene elements that is invariant to rigid transformations and object permutations, ensuring robust scene alignment.

- The approach demonstrates effective scene interpolation and completion, outperforming baselines through a combined VAE-GAN and CNN discriminator strategy.

Deep Generative Modeling for Scene Synthesis via Hybrid Representations

Introduction

The challenge of constructing realistic 3D indoor scenes involves learning effective parametric models from heterogeneous data. This paper presents a novel approach that uses a feed-forward neural network to generate 3D scenes from low-dimensional latent vectors. This differs from prior iterative object addition methods and volumetric grid-focused neural network techniques. The core innovation is the employment of a configurational arrangement representation for 3D scenes, combined with a hybrid training scheme using both 3D arrangements and image-based discriminators.

Scene Representation

The 3D scene is encoded using a matrix representation wherein each column corresponds to an object's status vector. This encodes various parameters including existence, location, orientation, size, and shape descriptor. A unique aspect of this encoding is its invariance to global rigid transformations and permutations of object categories. This invariance is tackled by introducing permutation variables and latent matrix encoding for each scene, facilitating effective training through novel loss functions and alignment strategies.

Generator Model

Key challenges such as overfitting are addressed through sparsely connected layers in the feed-forward network. These layers limit interactions to small groups of nodes, aligning with typical object correlations observed in indoor scenes. Combined with fully connected layers, this architecture ensures robustness and expressiveness. The generator is trained using VAE-GAN techniques, integrating arrangement and image-based discriminator losses to maintain both global coherence and local compatibility.

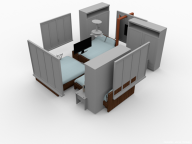

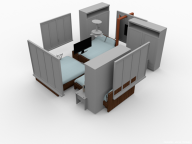

Figure 1: Visual comparisons between synthesized scenes using different generators.

Image-Based Module

The image-based discriminator captures finer local object interactions via top-view image projections. By leveraging CNN-based discriminator losses on these projections, the model effectively learns spatial relations that are difficult to encode with arrangement-only representations. The projection operator is designed to provide smooth gradients for efficient training back to the generator network.

Scene Alignment

A crucial preprocessing step involves aligning training scenes using map synchronization techniques. This sequential optimization for orientations, translations, and permutations significantly improves learning quality by providing consistent reference frames across training sets. Pairwise matching followed by a global refinement addresses potential noise, ensuring high-quality data input for model training.

Applications

Scene Interpolation: The approach enables smooth transitions between different scenes by interpolating latent parameters. This results in semantically meaningful transformations across intermediate states, illustrating versatile generation capabilities.

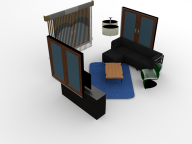

Figure 2: Scene interpolation results between different pairs of source and target scenes.

Scene Completion: The generator effectively completes partial scenes, outperforming baseline methods in terms of semantic coherence and generation speed. This application is particularly useful in scenarios requiring rapid prototyping for interior design.

Figure 3: Scene completion results, displaying the effectiveness of our method compared to existing techniques.

Conclusion

The paper contributes significantly to 3D scene generation by introducing a hybrid model that leverages both arrangement and image-based representations. The feed-forward architecture offers an integrated approach to generating complex scenes while addressing typical challenges like local compatibility and efficient training. Future research directions include extending hybrid representations to encode physical properties and handling unsegmented scenes from raw data inputs. Further exploration of integrating diverse 3D representations, such as multi-view and texture-based models, could enhance the richness and applicability of generated scenes.