Generative Adversarial Networks: An Overview

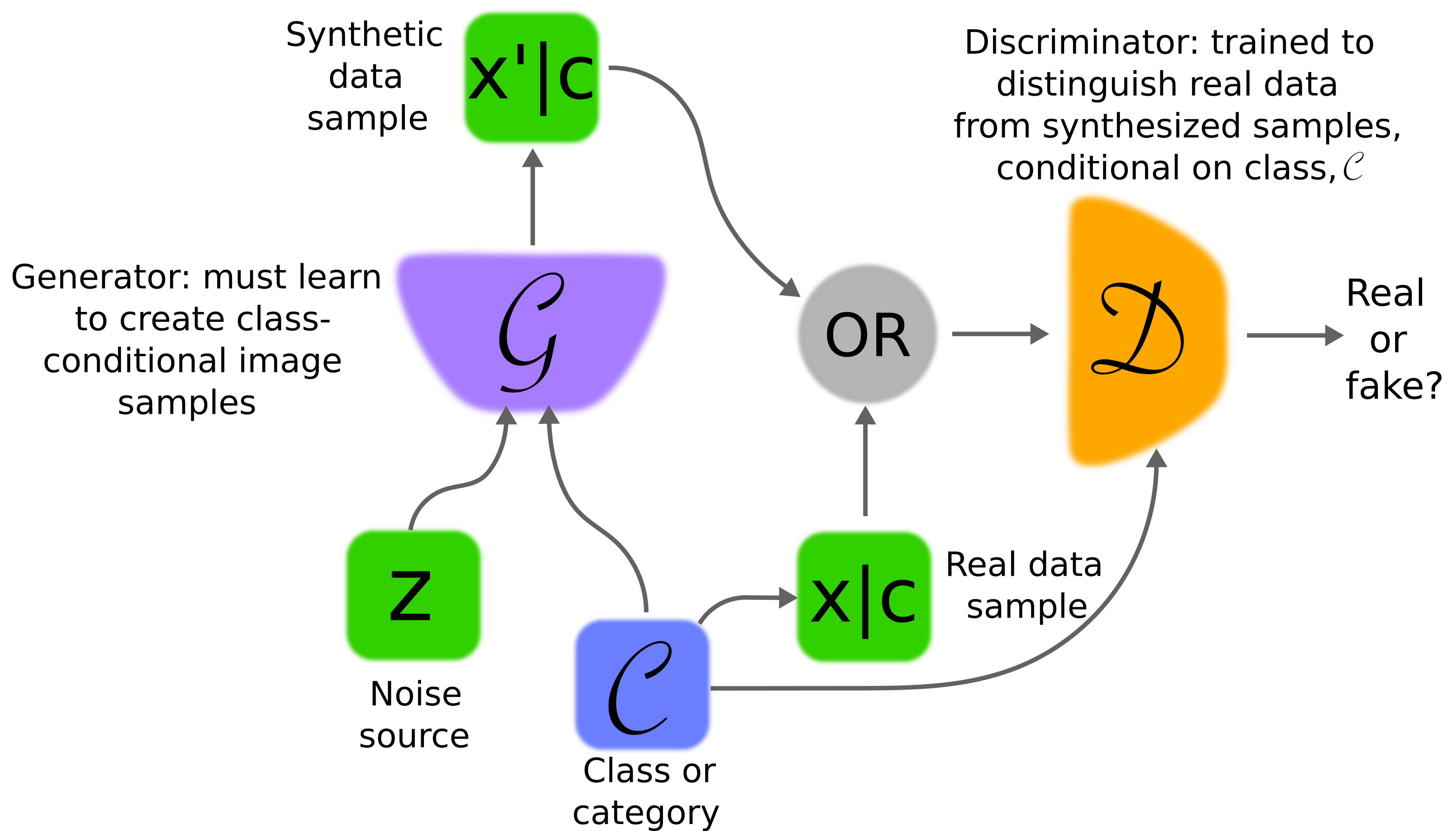

Abstract: Generative adversarial networks (GANs) provide a way to learn deep representations without extensively annotated training data. They achieve this through deriving backpropagation signals through a competitive process involving a pair of networks. The representations that can be learned by GANs may be used in a variety of applications, including image synthesis, semantic image editing, style transfer, image super-resolution and classification. The aim of this review paper is to provide an overview of GANs for the signal processing community, drawing on familiar analogies and concepts where possible. In addition to identifying different methods for training and constructing GANs, we also point to remaining challenges in their theory and application.

Paper Prompts

Sign up for free to create and run prompts on this paper using GPT-5.

Top Community Prompts

Practical Applications

Immediate Applications

The following applications can be deployed now by leveraging the paper’s methods (e.g., DCGAN, Conditional GANs, CycleGAN, SRGAN, ALI/BiGAN/AAE, WGAN) and training practices (feature matching, mini-batch discrimination, gradient penalty, label smoothing, instance noise).

- Semi-supervised feature extraction for classification

- Sectors: software, e-commerce, security, academia

- Tools/products/workflows: reuse trained discriminator or encoder features from DCGAN/ALI as generic visual feature extractors; add a lightweight classifier (e.g., linear SVM or a small MLP) for downstream tasks with limited labeled data

- Assumptions/dependencies: stable GAN training; modest labeled set available; domain similarity between pretraining and target task to avoid domain shift

- Synthetic data refinement for training with preserved labels

- Sectors: robotics, autonomous vehicles, AR/VR, manufacturing inspection

- Tools/products/workflows: use GAN-based refinement (e.g., Shrivastava et al.) to make simulator images look realistic while retaining ground-truth annotations; plug refined images into existing supervised training pipelines

- Assumptions/dependencies: synthetic data must carry accurate labels; refined images must not corrupt annotation semantics; careful tuning to avoid mode collapse

- Domain adaptation from synthetic to real imagery

- Sectors: robotics (sim2real), industrial vision, autonomous driving

- Tools/products/workflows: train domain-adaptive GANs to translate source-domain (simulator) images to target-domain (real) appearance (e.g., Bousmalis et al.); deploy models trained on adapted images to the real domain

- Assumptions/dependencies: sufficient target-domain samples; alignment of task-relevant content across domains; monitor for artifacts that harm control or perception

- Semantic image editing for creative tooling

- Sectors: creative industries, consumer photo apps, marketing

- Tools/products/workflows: conditional GANs and latent-space manipulation (InfoGAN, ALI/encoder) to toggle attributes (hair style, eyeglasses, age); integrate sliders/buttons for attribute vectors in a photo editor

- Assumptions/dependencies: encoder quality (ALI/BiGAN/AAE) affects edit fidelity; attribute labels or disentangled codes are needed; manage ethical considerations (e.g., identity manipulation)

- Text-to-image prototyping

- Sectors: design, advertising, digital content studios

- Tools/products/workflows: conditional GANs with text conditioning (Reed et al.) to rapidly visualize product concepts or ad mockups from natural language; extend with layout conditioning (GAWWN) for “what-where” placement

- Assumptions/dependencies: domain-specific paired text–image data; clear prompts; potential bias in training data affecting outputs

- Super-resolution for image enhancement

- Sectors: consumer imaging, media restoration, satellite imaging; caution in healthcare

- Tools/products/workflows: SRGAN (adversarial + perceptual loss) to upscale low-res images (e.g., 4×); embed into camera pipelines, photo apps, and remote sensing workflows

- Assumptions/dependencies: domain-specific training improves fidelity; adversarial upscales can hallucinate details—use care in medical/legal settings; robust evaluation beyond pixel metrics

- Image-to-image translation with paired data

- Sectors: computer vision, GIS, media, education

- Tools/products/workflows: pix2pix for tasks like B/W colorization, semantic-to-photo synthesis, aerial-to-map; build general-purpose “translator” services that learn both mapping and loss

- Assumptions/dependencies: requires paired datasets; output quality depends on label alignment and diversity; computational resources for training

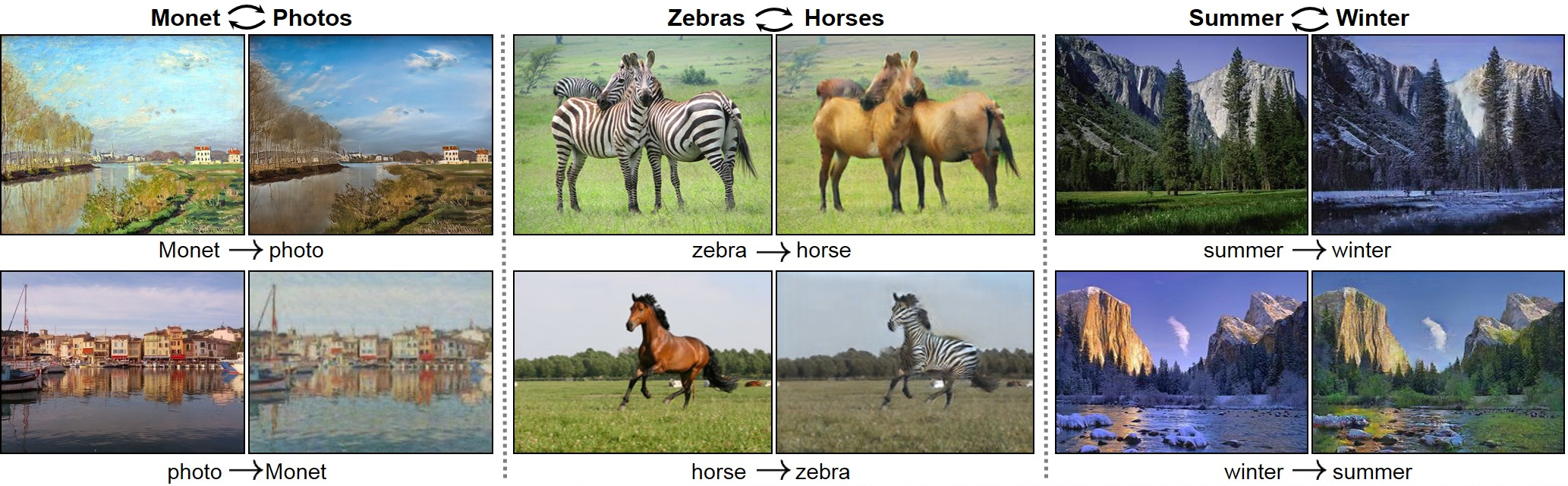

- Unpaired image translation and style transfer

- Sectors: creative apps, film post-production, social media

- Tools/products/workflows: CycleGAN for unpaired domain translation (e.g., Monet→photo, horse↔zebra); integrate style filters that preserve content via cycle consistency

- Assumptions/dependencies: unpaired collections with representative diversity; cycle consistency may still introduce artifacts or content drift; monitor for mis-translations

- Visual search and image retrieval from learned representations

- Sectors: e-commerce, digital asset management

- Tools/products/workflows: use discriminator/encoder feature maps as compact embeddings; build nearest-neighbor search, re-identification, and similarity-based recommendation

- Assumptions/dependencies: scalable indexing infrastructure; embedding quality depends on GAN stability and domain coverage; periodic retuning for catalog drift

- 3D object synthesis and 2D-to-3D assist

- Sectors: AR/VR, CAD, product design, gaming

- Tools/products/workflows: volumetric convolutional GANs to synthesize 3D shapes (chairs, tables, cars) and infer 3D from single images; rapid prototyping toolchains for concept models

- Assumptions/dependencies: curated 3D datasets; compute and memory overhead for volumetric models; resolution limits may require post-processing/refinement

- Forensic detectors and authenticity screening

- Sectors: policy, platform trust & safety, media forensics

- Tools/products/workflows: train discriminators/critics to flag synthetic/manipulated images; use instance noise and diverse manipulation datasets to improve generalization; triage pipelines for moderation

- Assumptions/dependencies: adversarial dynamics (detectors vs. generators) create an arms race; need continual retraining; false positives/negatives have policy implications

- Education and pedagogy for signal processing and ML

- Sectors: academia, workforce upskilling

- Tools/products/workflows: classroom labs using DCGAN/AAE/InfoGAN to teach density estimation, transforms, latent spaces, and training stability; visual demos of concept vectors and cycle consistency

- Assumptions/dependencies: access to compute and datasets; ethical guidance on synthetic media; assessment beyond visual inspection

Long-Term Applications

These applications are promising but likely require further research, robustness, scaling, or validation (e.g., stability, disentanglement, safety, or regulatory approvals).

- Controllable, disentangled generation at scale

- Sectors: software tooling, creative industries, robotics

- Tools/products/workflows: InfoGAN-style latent codes for high-level controls (pose, lighting, identity); standardized “concept knobs” in content pipelines and simulation

- Assumptions/dependencies: reliable disentanglement across complex domains; large, diverse datasets; metrics for control fidelity and attribute independence

- Bidirectional, high-fidelity editing workflows

- Sectors: professional photo/video editing, design

- Tools/products/workflows: ALI/BiGAN/AAE/AVB-based encoders for faithful image reconstruction and reversible edits; “non-destructive” GAN layers in editors

- Assumptions/dependencies: reconstruction quality must match professional standards; stable training objectives (e.g., adversarial + reconstruction terms); user trust and auditability

- Medical imaging: cross-modality translation and super-resolution

- Sectors: healthcare (radiology, pathology)

- Tools/products/workflows: CycleGAN-like translation (e.g., MRI↔CT) to reduce scanning burdens; SRGAN-style upscaling for low-dose scans with perceptual constraints

- Assumptions/dependencies: rigorous clinical validation and bias assessment; regulatory approvals; safeguards against hallucinated pathology; clear provenance and disclaimers

- Scalable sim2real for robotic control

- Sectors: robotics, logistics, manufacturing, autonomous systems

- Tools/products/workflows: multi-domain GANs with tied weights (e.g., Liu et al.) to co-train corresponding domains; full-stack pipelines from simulation assets to deployable policies

- Assumptions/dependencies: robust stability and safety; closed-loop evaluation; real-time deployment constraints; failure mode monitoring

- Privacy-preserving synthetic data release

- Sectors: healthcare, finance, public policy, research

- Tools/products/workflows: AAE/WGAN-based generators to produce utility-preserving synthetic datasets for sharing and benchmarking

- Assumptions/dependencies: strong privacy guarantees (resistance to membership inference and re-identification); utility–privacy trade-off quantification; governance and consent frameworks

- Content authenticity ecosystem (watermarking and verification)

- Sectors: policy, media platforms, standards bodies

- Tools/products/workflows: integrate watermarking into generators; standardized verification APIs; discriminator-based certification services to flag unmarked synthetic media

- Assumptions/dependencies: multi-stakeholder standardization; broad adoption by tool vendors; resilience against removal attacks and adversarial countermeasures

- Real-time, edge-deployed GANs

- Sectors: mobile devices, AR glasses, embedded vision

- Tools/products/workflows: model compression and quantization to run SRGAN/CycleGAN variants on-device for enhancement and translation without cloud latency

- Assumptions/dependencies: hardware acceleration; robust performance under tight power budgets; safety and privacy on edge devices

- Cross-domain extension beyond images (e.g., sequential data)

- Sectors: finance (anomaly detection), IoT, cybersecurity

- Tools/products/workflows: adapt critic-based training (WGAN with gradient penalty) to time-series for anomaly detection and synthetic data; integrate into monitoring systems

- Assumptions/dependencies: domain-specific architectures for sequences; evaluation under concept drift; careful handling to avoid harmful synthetic anomalies

- 3D scene generation for autonomous simulation

- Sectors: autonomous driving, AR/VR training environments

- Tools/products/workflows: extend volumetric GANs to dynamic, interactive scenes; data engines to generate diverse edge cases for testing

- Assumptions/dependencies: scaling to high fidelity and temporal coherence; standardized quality metrics; substantial compute and storage

- Environmental monitoring via enhanced remote sensing

- Sectors: energy, climate, conservation, urban planning

- Tools/products/workflows: SRGAN and unpaired translation to enhance satellite/aerial imagery for asset mapping, deforestation tracking, and seasonal analysis

- Assumptions/dependencies: validation against ground truth; bias and artifact audits; cautious use in policy decisions to avoid misinterpretation

Each application’s feasibility depends on stable training (e.g., WGAN with gradient penalty, instance noise), careful hyperparameter tuning, domain-appropriate datasets, and rigorous evaluation to manage common GAN risks (mode collapse, vanishing gradients, artifacts, bias).

Collections

Sign up for free to add this paper to one or more collections.