A Brief Survey of Deep Reinforcement Learning

Overview

Deep Reinforcement Learning (DRL) combines Reinforcement Learning (RL) with deep learning. It has advanced the development of autonomous agents capable of complex tasks, such as playing video games directly from pixel data and performing sensorimotor control in robotics. This survey covers the main facets of DRL: foundational concepts, algorithmic advances, and application domains.

Foundations of Reinforcement Learning

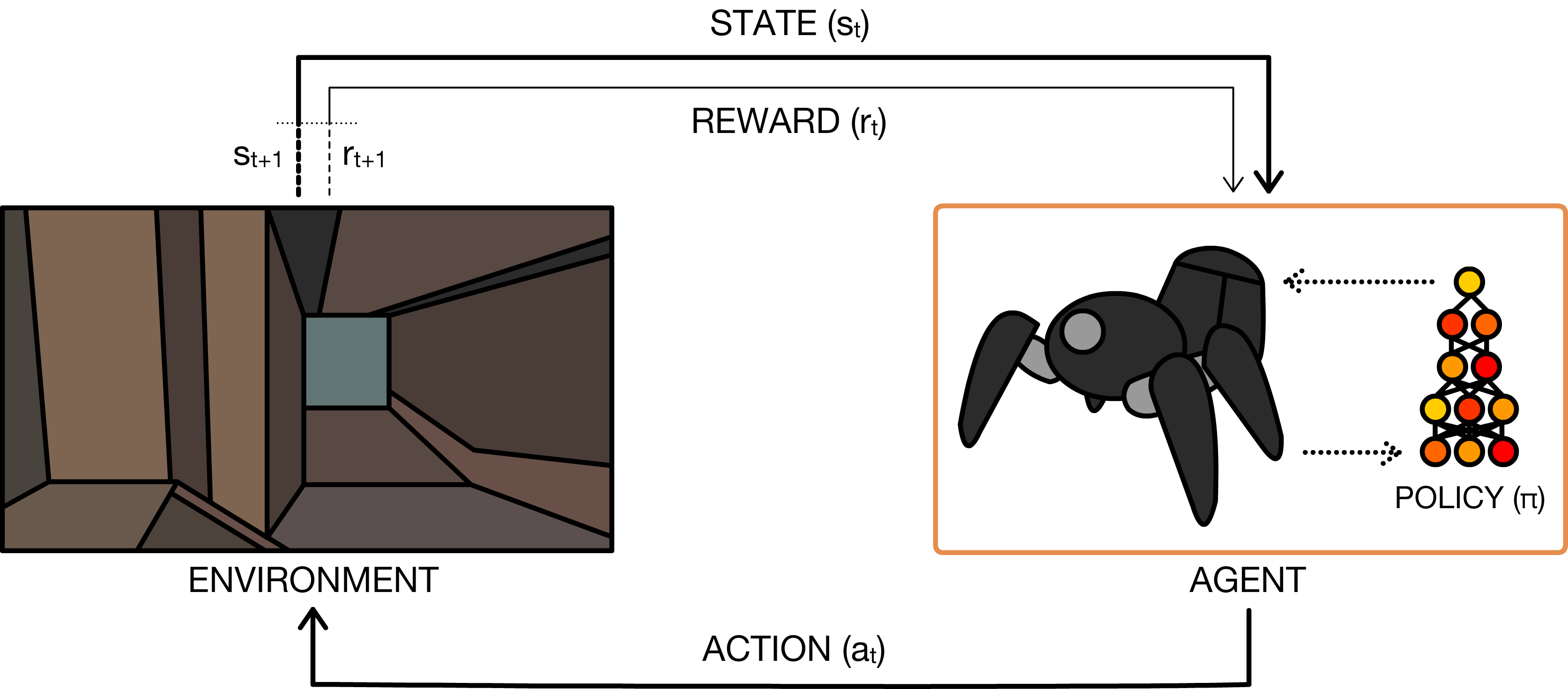

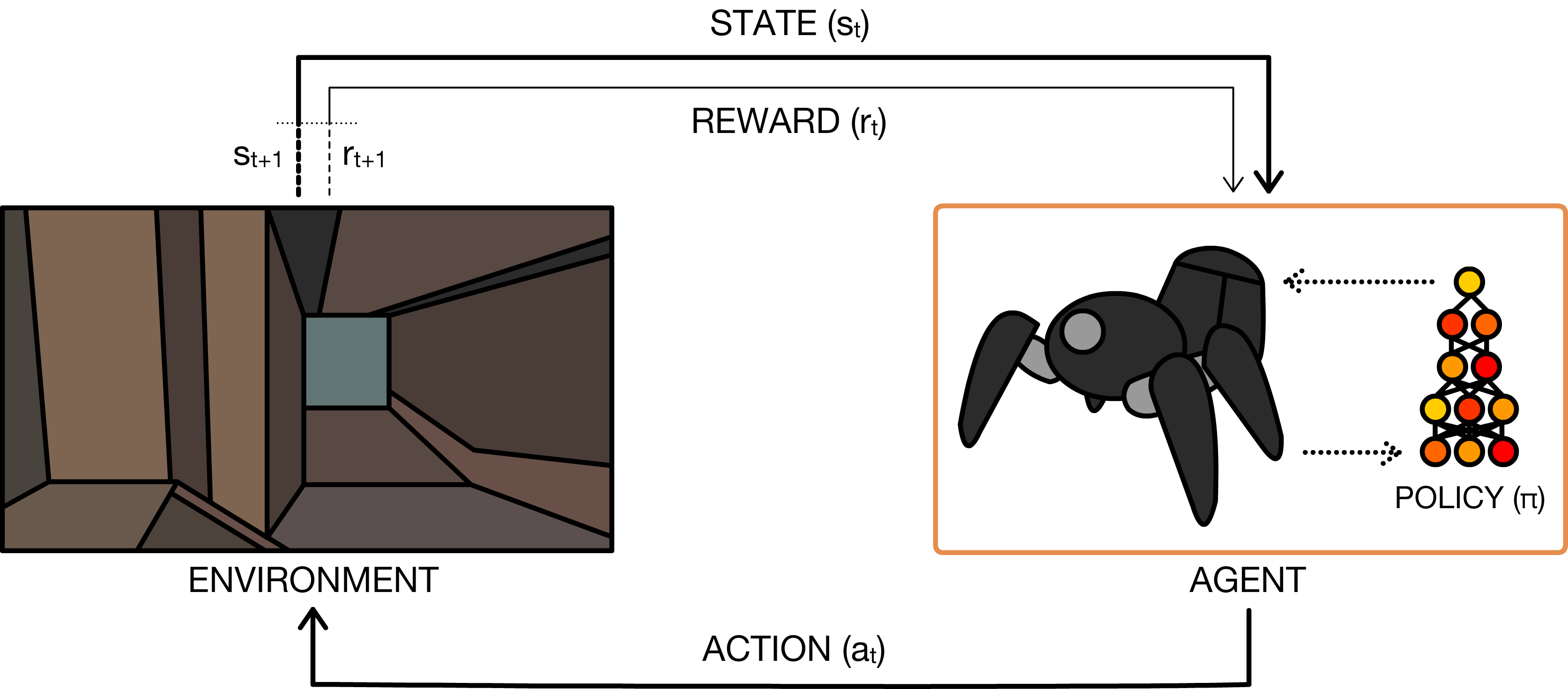

Reinforcement Learning provides a mathematical framework for autonomous agents that learn from experience. It is often formalized as a Markov Decision Process (MDP), in which an agent interacts with an environment and makes decisions to maximize cumulative long-term reward. The MDP comprises states, actions, transition dynamics, rewards, and a discount factor. Given these, the agent seeks an optimal policy, one that maximizes the expected discounted return.

Figure 1: The perception-action-learning loop. At time $t$, the agent receives state $s_t$ from the environment, executes an action $a_t$, and receives reward $r_{t+1}$ and new state $s_{t+1}$.
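As a rough illustration of the quantity the agent maximizes, the discounted return of a single trajectory can be sketched as below. The function name `discounted_return` and the example rewards are illustrative assumptions, not taken from the survey.

```python
def discounted_return(rewards, gamma):
    """Return sum_k gamma**k * rewards[k] for one trajectory.

    Iterating backwards avoids computing explicit powers of gamma.
    """
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


# Hypothetical rewards r_1, r_2, r_3 collected along one episode.
example_return = discounted_return([1.0, 0.0, 2.0], gamma=0.5)
```

With a discount factor below 1, rewards that arrive later contribute less to the return, which is what makes the sum well behaved over long horizons.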

RL requires balancing exploration (discovering new knowledge about the environment) against exploitation (using known information to maximize reward). Together with temporal credit assignment, where the consequences of an action may only become apparent many steps later, this trade-off calls for algorithms that remain robust in highly complex environments.
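One common and simple way to trade exploration against exploitation is an epsilon-greedy rule. The sketch below is a minimal version under assumed names (`epsilon_greedy`, `q_values`); the survey itself does not prescribe this particular strategy.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick a uniformly random action with probability epsilon (explore),
    otherwise the action with the highest estimated value (exploit)."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


# With epsilon = 0 the choice is purely greedy.
greedy_action = epsilon_greedy([0.1, 0.9, 0.3], epsilon=0.0)
```

In practice, epsilon is often decayed over training so that the agent explores heavily at first and exploits more as its value estimates improve.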

Value Functions and Q-learning

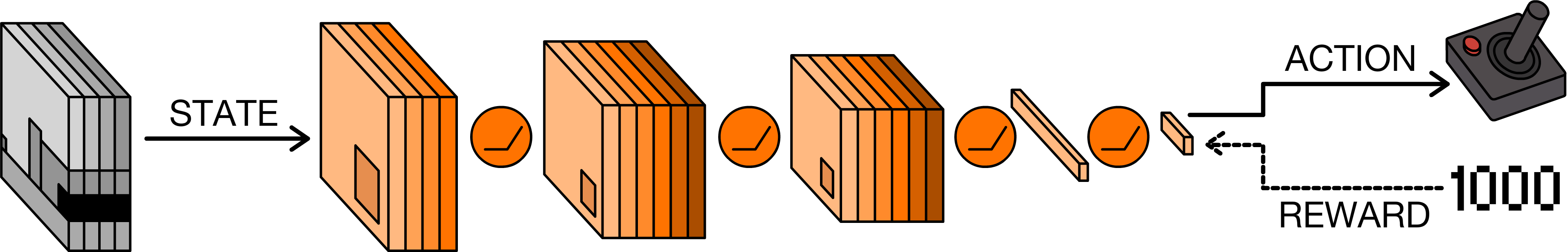

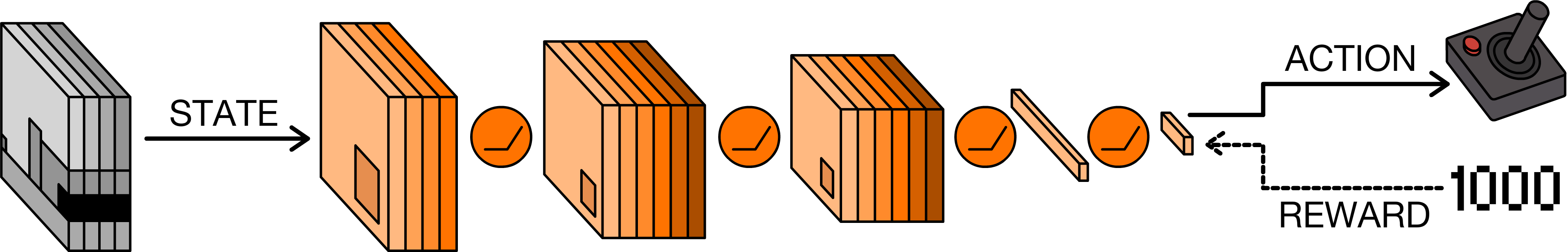

One of the seminal algorithms in DRL is the Deep Q-Network (DQN), which leverages a neural network to estimate a Q-value function, enabling the agent to choose actions without requiring a model of the environment's dynamics.

Figure 2: The deep Q-network architecture, which uses convolutional layers for feature extraction and fully connected layers for decision making based on visual inputs.
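The Q-learning rule that DQN builds on can be illustrated in tabular form, where a lookup table stands in for the neural network. This is a sketch only: `q_learning_update`, the learning rate `alpha`, and the toy transition are assumptions made for illustration.

```python
from collections import defaultdict


def q_learning_update(q, state, action, reward, next_state, done,
                      n_actions, alpha=0.1, gamma=0.99):
    """Move Q(state, action) toward the one-step bootstrapped target."""
    # No bootstrapping from terminal states.
    best_next = 0.0 if done else max(q[(next_state, a)] for a in range(n_actions))
    target = reward + gamma * best_next
    td_error = target - q[(state, action)]
    q[(state, action)] += alpha * td_error
    return td_error


# Hypothetical single transition; all Q-values start at zero.
q = defaultdict(float)
td = q_learning_update(q, state=0, action=1, reward=1.0,
                       next_state=1, done=False, n_actions=2)
```

The update uses only sampled transitions, never the transition dynamics themselves, which is why no model of the environment is needed.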

DQN's key innovations are experience replay and target networks, both designed to stabilize learning. Experience replay lets the network learn from past experience by storing transitions and randomly sampling mini-batches, thereby reducing temporal correlations between data samples. Target networks mitigate the problem of non-stationary targets in Q-learning: the targets are computed from a separate network that is held fixed and only periodically updated with the policy network's weights.
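The two stabilization mechanisms can be sketched without any deep learning library. The `ReplayBuffer` class and the `sync_target` helper below are hypothetical names, and the parameters are plain dictionaries of lists standing in for real network weights.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-capacity store of transitions; the oldest entries are evicted first."""

    def __init__(self, capacity, seed=None):
        self.storage = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def add(self, state, action, reward, next_state, done):
        self.storage.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform sampling without replacement breaks up temporal ordering.
        return self.rng.sample(list(self.storage), batch_size)

    def __len__(self):
        return len(self.storage)


def sync_target(online_params, target_params):
    """Hard update: copy the online (policy) weights into the target network."""
    target_params.clear()
    target_params.update({name: list(w) for name, w in online_params.items()})


buffer = ReplayBuffer(capacity=3, seed=0)
for t in range(5):
    buffer.add(t, 0, 1.0, t + 1, False)
batch = buffer.sample(2)

online = {"w": [1.0, 2.0]}
target = {"w": [0.0, 0.0]}
sync_target(online, target)
```

A training loop would typically call `sync_target` only every fixed number of steps, so the regression targets stay constant in between.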

Algorithmic Variants and Trade-offs

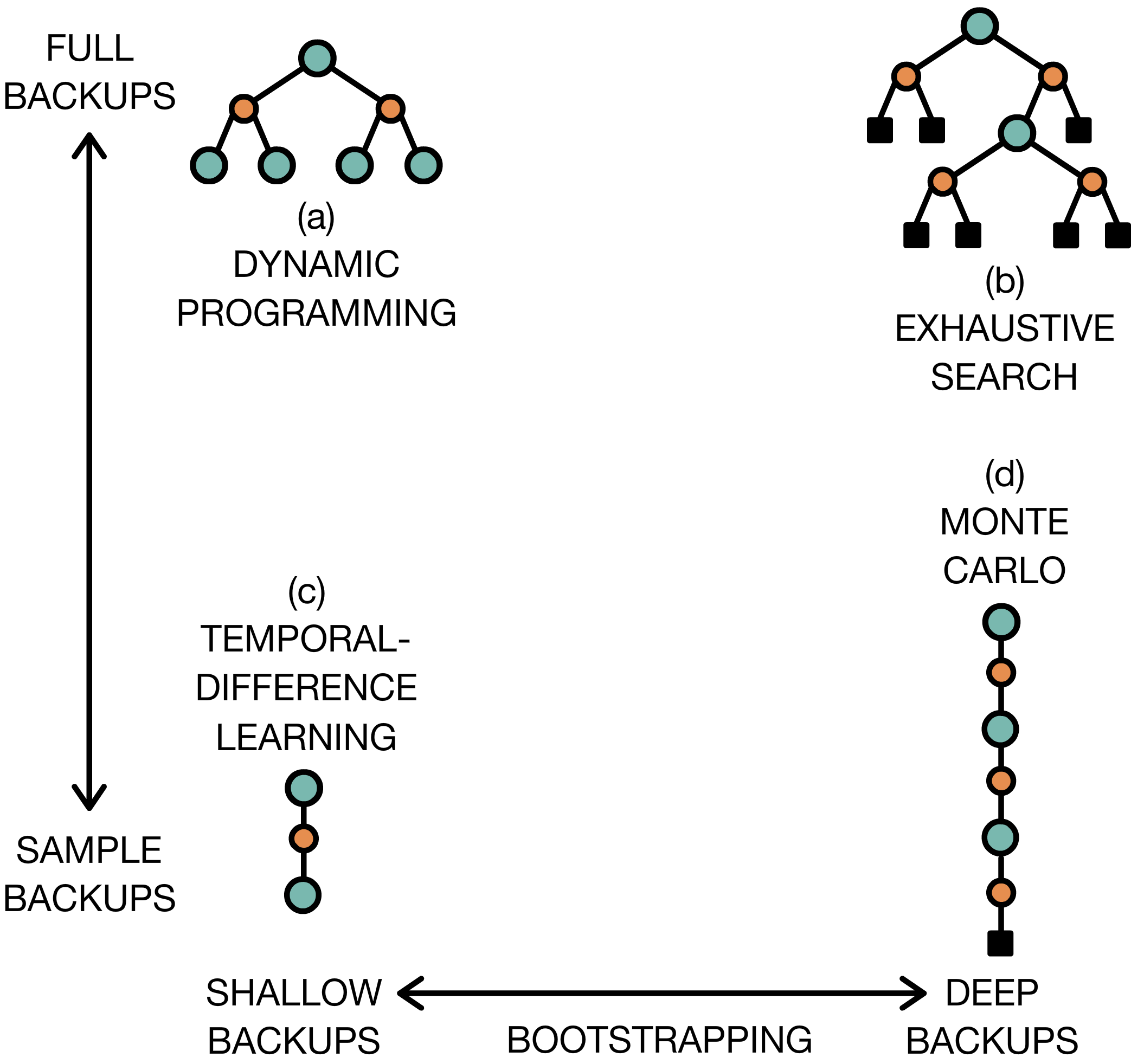

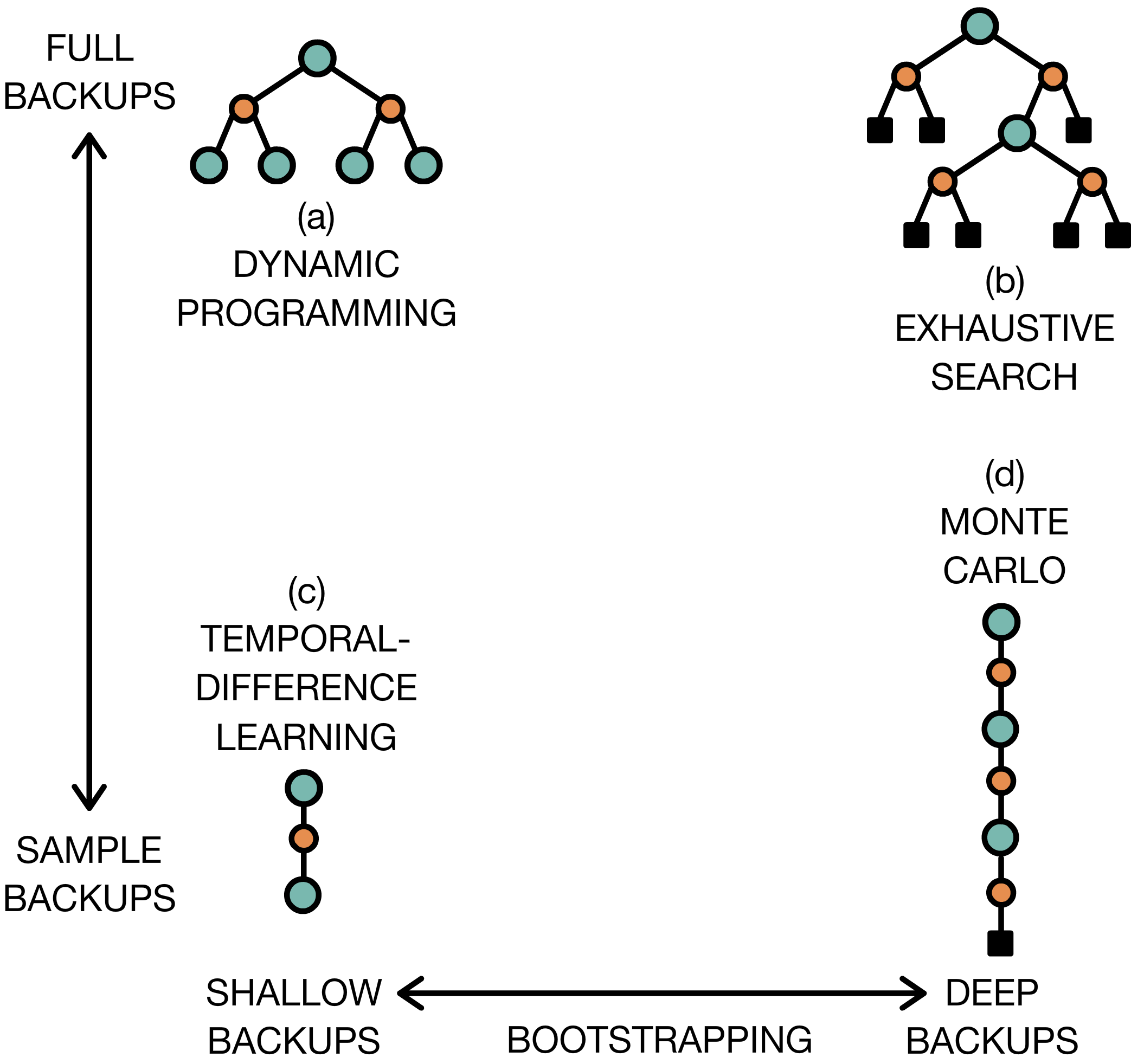

DRL includes a range of algorithms beyond DQN. Double DQN (DDQN) corrects the overestimation of Q-values that arises when the same network both selects and evaluates the next action, and advantage-based methods such as the dueling architecture decompose Q-values into a state-value function and an advantage function. These variants improve the accuracy and stability of value estimates across a variety of environments.
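The difference between the standard and the double Q-learning target, and the value/advantage decomposition, can be sketched as follows. The function names and the example numbers are illustrative assumptions; the lists stand in for network outputs over the actions available in the next state.

```python
def argmax(values):
    return max(range(len(values)), key=lambda i: values[i])


def dqn_target(reward, gamma, target_q_next, done):
    """Standard target: the target network both selects and evaluates."""
    return reward if done else reward + gamma * max(target_q_next)


def double_dqn_target(reward, gamma, online_q_next, target_q_next, done):
    """Double DQN: the online network selects the action,
    the target network evaluates it."""
    if done:
        return reward
    best_action = argmax(online_q_next)
    return reward + gamma * target_q_next[best_action]


def dueling_q(value, advantages):
    """Combine V(s) and A(s, a) into Q(s, a), subtracting the mean
    advantage so that the decomposition is identifiable."""
    mean_adv = sum(advantages) / len(advantages)
    return [value + a - mean_adv for a in advantages]


# Hypothetical next-state estimates from the two networks.
y_dqn = dqn_target(0.0, 0.9, target_q_next=[3.0, 0.5], done=False)
y_ddqn = double_dqn_target(0.0, 0.9, online_q_next=[1.0, 2.0],
                           target_q_next=[3.0, 0.5], done=False)
q_dueling = dueling_q(1.0, [1.0, -1.0])
```

In this toy case the double target is much smaller than the standard one, because the action preferred by the online network is not the one that the target network happens to overvalue.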

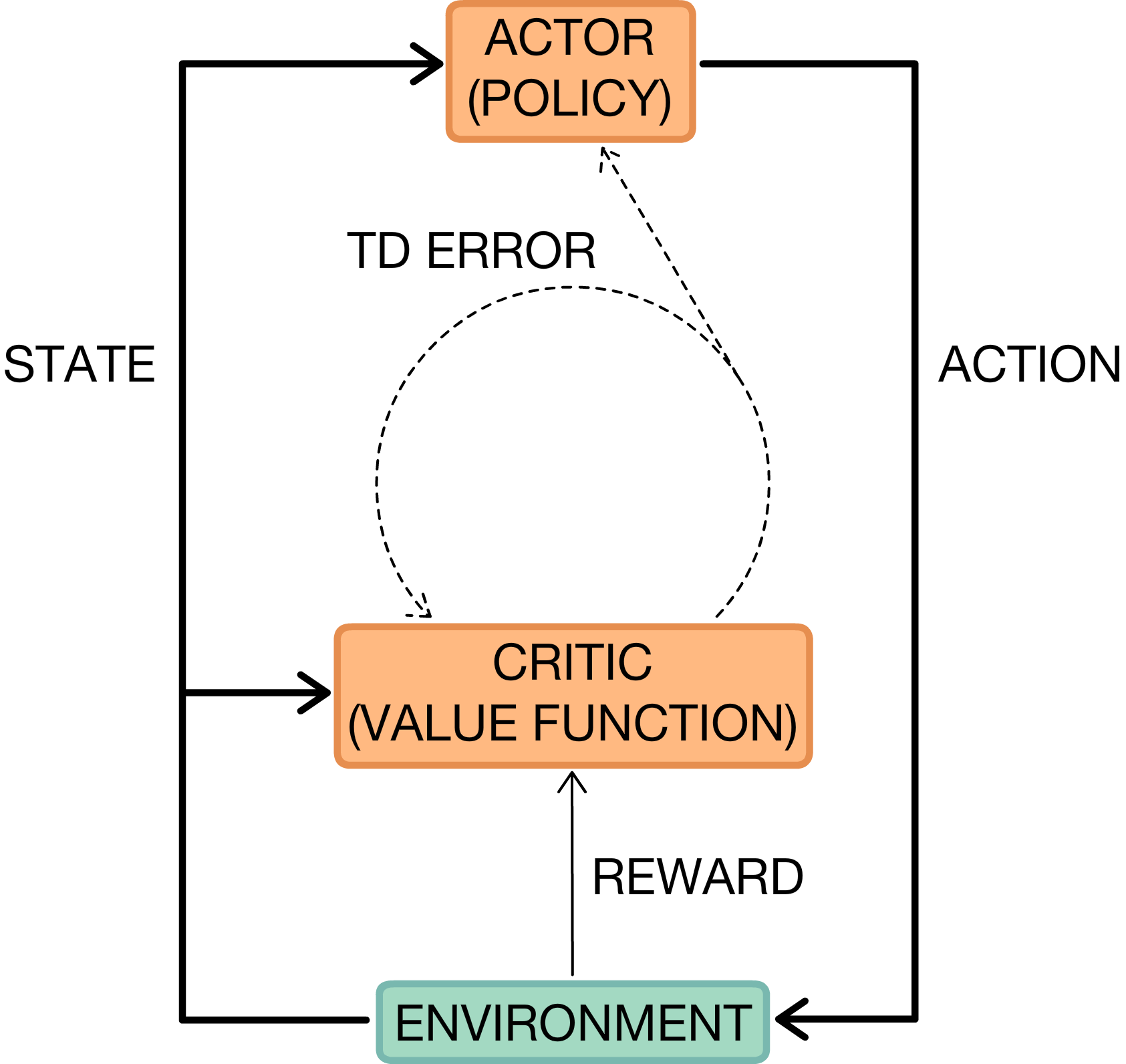

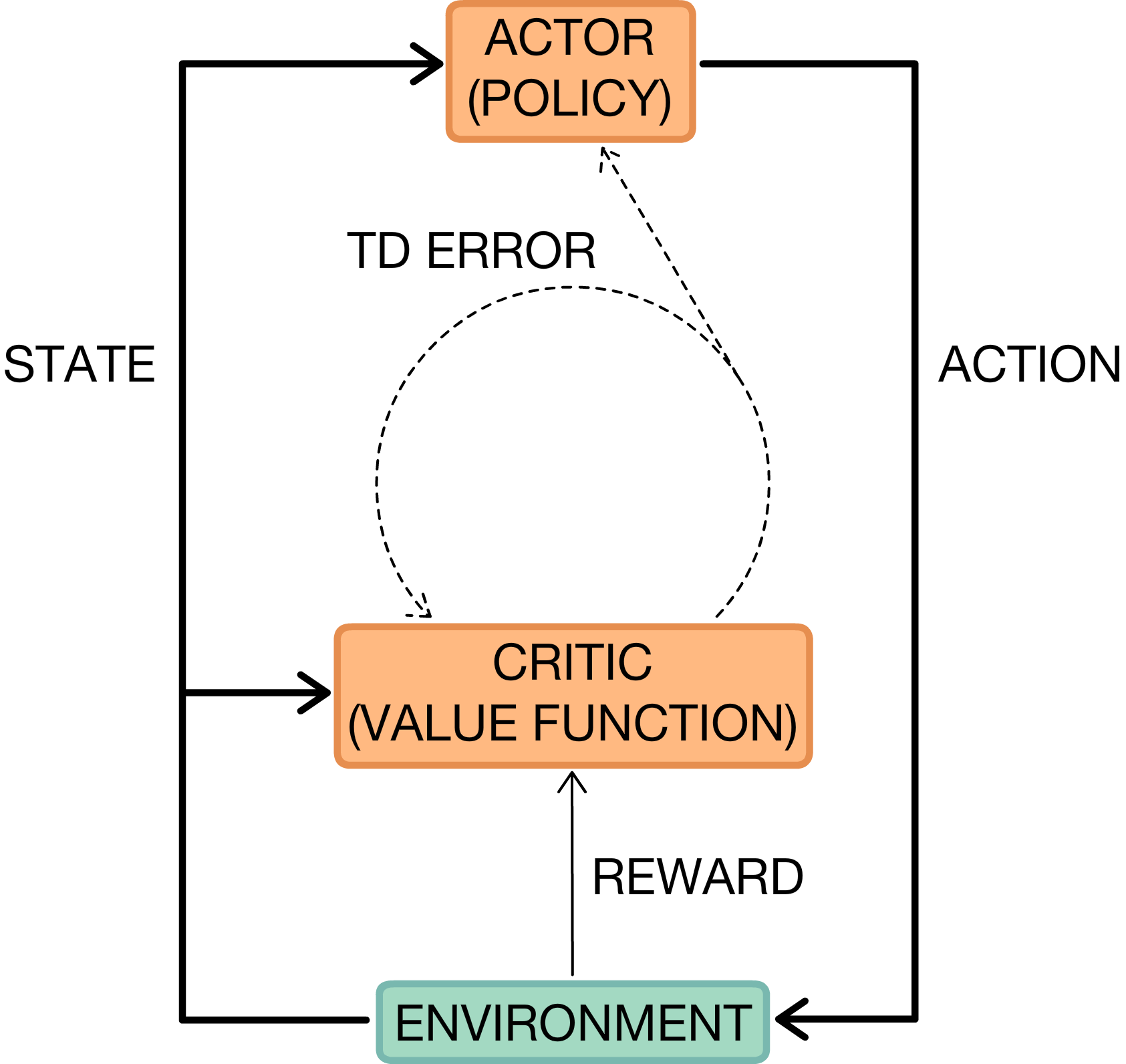

Policy search methods such as Trust Region Policy Optimization (TRPO) and Proximal Policy Optimization (PPO) are gradient-based: TRPO constrains each policy update to a trust region, while PPO approximates this constraint with a clipped objective, in both cases to keep updates stable and robust. Actor-critic architectures combine value function approximation with direct policy learning: the critic's value estimates reduce the variance of the actor's policy gradient, at the cost of introducing some bias.
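To make the idea of limiting the size of a policy update concrete, here is a minimal sketch of PPO's clipped surrogate objective for a batch of samples. The function name, the default `clip_eps=0.2`, and the inputs are assumptions for illustration; a real implementation would compute this on tensors and differentiate through it.

```python
import math


def ppo_clip_objective(new_log_probs, old_log_probs, advantages, clip_eps=0.2):
    """Mean clipped surrogate objective (to be maximized)."""
    terms = []
    for new_lp, old_lp, adv in zip(new_log_probs, old_log_probs, advantages):
        # Probability ratio between the new and the old policy.
        ratio = math.exp(new_lp - old_lp)
        clipped = max(1.0 - clip_eps, min(ratio, 1.0 + clip_eps))
        # Taking the minimum removes the incentive to move the ratio
        # outside [1 - clip_eps, 1 + clip_eps].
        terms.append(min(ratio * adv, clipped * adv))
    return sum(terms) / len(terms)


# Hypothetical sample: the new policy doubles the action's probability.
objective = ppo_clip_objective([math.log(2.0)], [0.0], [1.0])
```

With a positive advantage, the objective stops growing once the ratio exceeds `1 + clip_eps`, so a single batch cannot push the policy arbitrarily far from the one that collected the data.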

Figure 3: Illustration of the spectrum of RL algorithms, from dynamic programming to pure Monte Carlo methods.

Applications in Robotics and Autonomous Systems

DRL algorithms adapt to a wide array of applications. In robotics, algorithms such as Guided Policy Search (GPS) and Asynchronous Advantage Actor-Critic (A3C) are used to learn control policies directly from visual and proprioceptive data. These applications highlight the ability of DRL to learn abstract representations from complex data and to optimize control in high-dimensional spaces.

Implications and Future Directions

Deep Reinforcement Learning