- The paper introduces a recurrent autoencoder with hidden-state priming and spatially adaptive bit rates to enhance lossy image compression.

- It employs a pixel-wise SSIM-weighted loss and a progressive encoding strategy that allocates bits based on local image complexity.

- Experimental results on Kodak and Tecnick datasets show significant rate savings and improved perceptual image quality over conventional codecs.

Improved Lossy Image Compression with Priming and Spatially Adaptive Bit Rates for Recurrent Networks

The paper presents a novel approach to lossy image compression using recurrent convolutional neural networks (RCNNs) that outperforms traditional codecs as measured by the MS-SSIM metric. It also improves on prior neural network-based image compression methods.

Methodology and Innovations

Recurrent Autoencoder Architecture

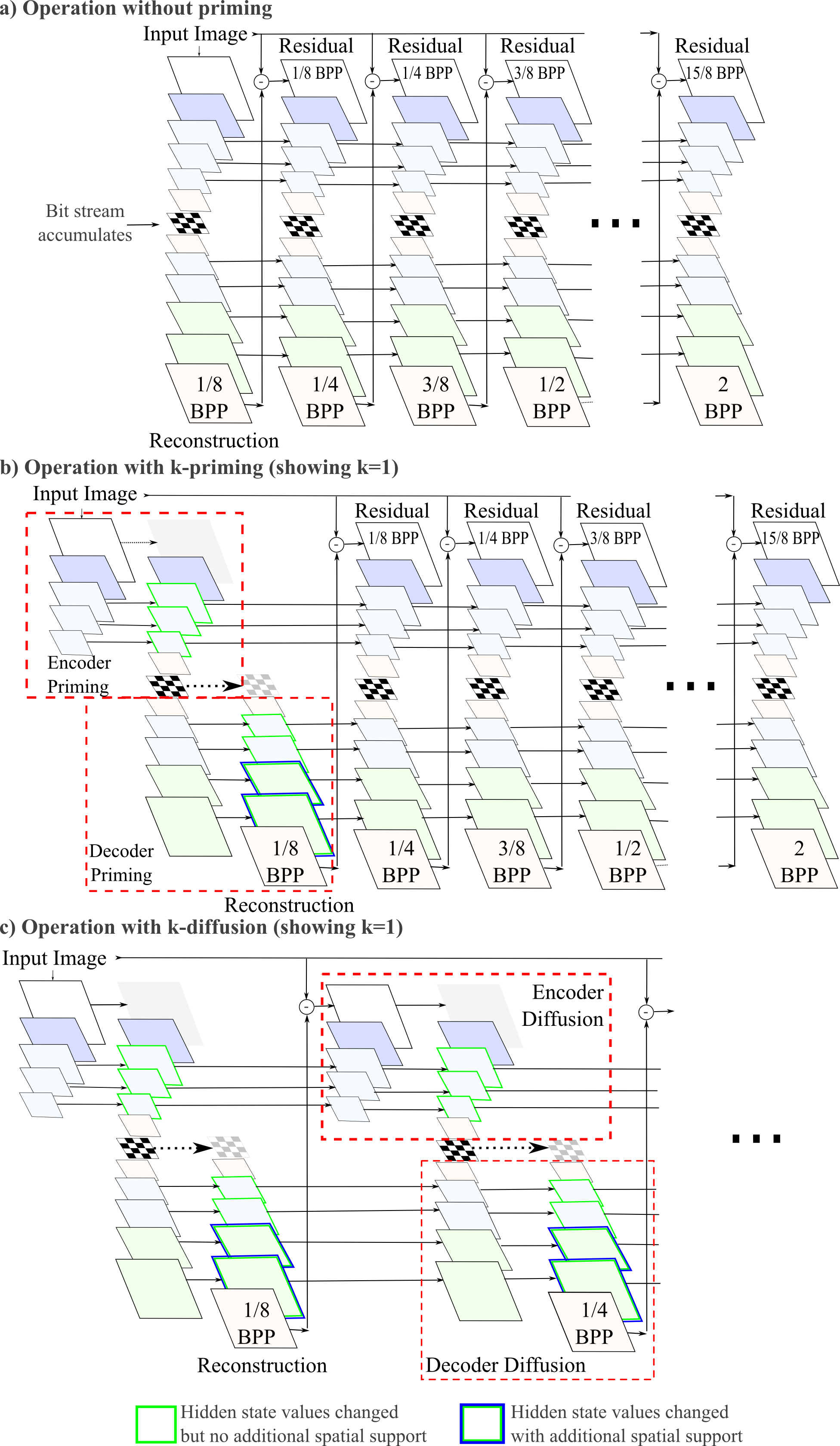

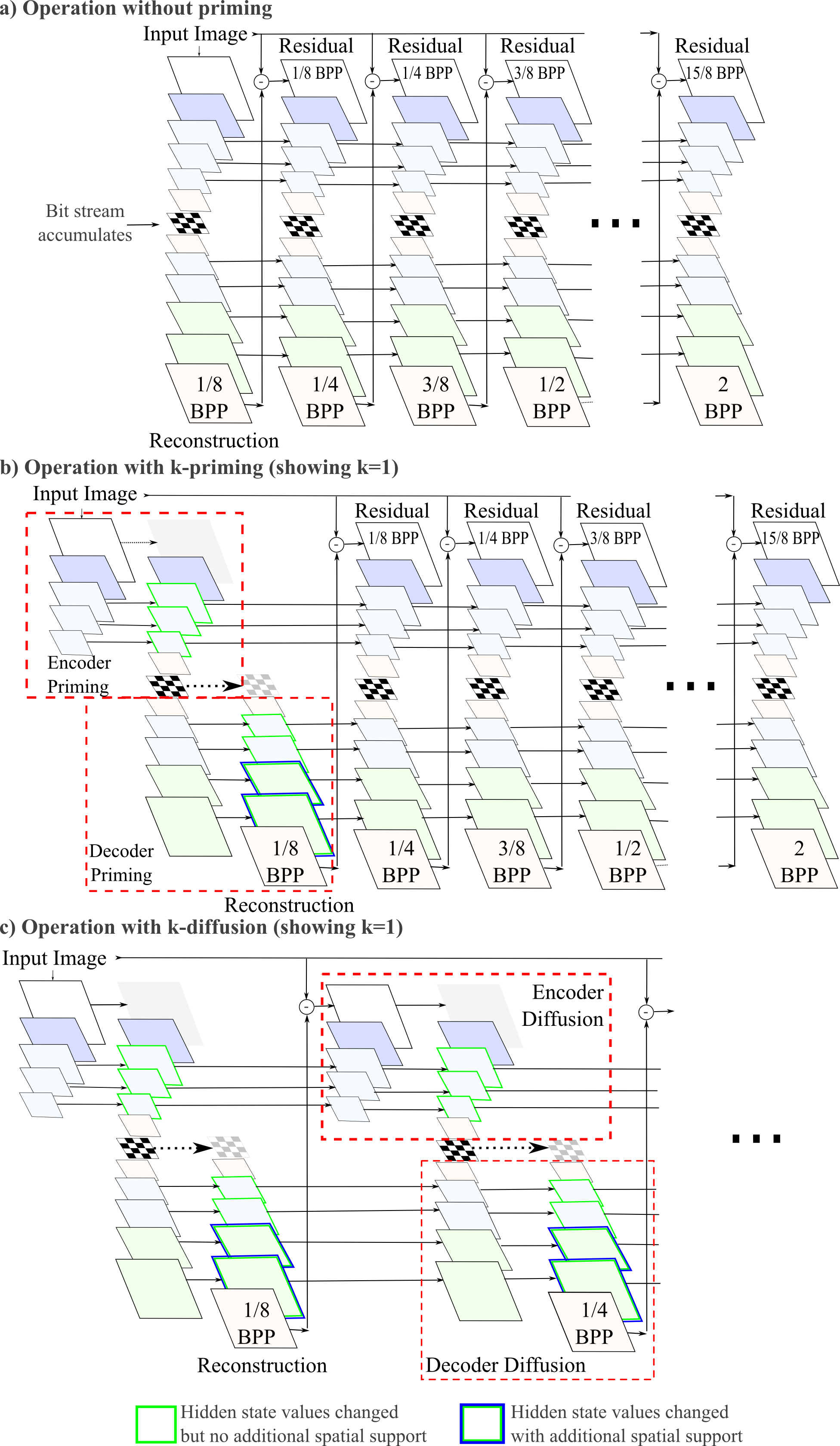

The method employs a recurrent autoencoder that, at each iteration, encodes the residual image: the difference between the original image and the reconstruction from the previous iteration. This makes the encoding progressive: the first iterations yield a low-bit-rate reconstruction, and each subsequent iteration spends more bits to improve quality.

Figure 1: The layers in our compression network, showing the encoder (E_i), binarizer, and decoder (D_j).
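The residual loop described above can be sketched with a toy stand-in for the learned networks. This is only an illustration under stated assumptions: `toy_encode`, `toy_decode`, and `progressive_reconstruct` are hypothetical names, the sign-based one-bit code replaces the paper's encoder, binarizer, and decoder, and the additive update of the reconstruction is an assumption rather than a confirmed detail of the architecture.

```python
import numpy as np


def toy_encode(residual):
    """Stand-in for encoder + binarizer: one code value (+1 or -1) per pixel."""
    return np.where(residual >= 0, 1.0, -1.0)


def toy_decode(bits, step):
    """Stand-in for the decoder: turn the binary code into a residual estimate."""
    return bits * step


def progressive_reconstruct(image, iterations=8):
    """Refine a reconstruction of an image in [0, 1] one iteration at a time.

    Returns the final reconstruction and the mean absolute error after
    each iteration, so the quality gain per iteration can be inspected.
    """
    image = np.asarray(image, dtype=float)
    recon = np.full_like(image, 0.5)      # neutral starting point (assumption)
    step = 0.25                           # halved every iteration
    errors = []
    for _ in range(iterations):
        residual = image - recon          # what the reconstruction still lacks
        bits = toy_encode(residual)       # bits emitted in this iteration
        recon = recon + toy_decode(bits, step)
        step /= 2.0
        errors.append(float(np.mean(np.abs(image - recon))))
    return recon, errors
```

Stopping the loop early corresponds to decoding at a lower bit rate; running more iterations spends more bits and shrinks the remaining residual.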

Enhancements in Image Compression

- Loss Weighted by SSIM: The paper introduces a pixel-wise training loss weighted by SSIM, which aligns better with perceived image quality than standard L1 or L2 losses.

- Hidden-State Priming: Running extra iterations ("priming") to initialize the hidden states before any binary codes are generated gives the network more spatial context, which improves image reconstruction without using additional bits.

- Spatially Adaptive Bit Rates (SABR): SABR dynamically assigns bit rates according to local image complexity, so that more bits go to perceptually significant areas and overall quality improves for the same bit budget.
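To make the idea of spatially adaptive bit rates concrete, the sketch below assigns each tile the smallest number of coding iterations that meets a target error. It is a simplified illustration, not the paper's SABR procedure: the tile size, the mean-absolute-error target, the toy sign-based coder, and the names `iterations_needed` and `allocate_iterations` are all assumptions made for this example.

```python
import numpy as np


def iterations_needed(tile, target_err, max_iters):
    """Smallest iteration count whose toy reconstruction meets target_err."""
    recon = np.full_like(tile, tile.mean())       # start from the tile mean
    step = np.abs(tile - recon).max() / 2.0       # scaled to the tile's range
    for k in range(max_iters):
        if np.abs(tile - recon).mean() <= target_err:
            return k                              # quality target already met
        # One toy coding iteration: move each pixel toward its true value.
        recon = recon + np.where(tile >= recon, step, -step)
        step /= 2.0
    return max_iters


def allocate_iterations(image, tile=16, target_err=0.01, max_iters=8):
    """Return a (rows, cols) map of iteration counts, one entry per tile."""
    image = np.asarray(image, dtype=float)
    rows, cols = image.shape[0] // tile, image.shape[1] // tile
    alloc = np.zeros((rows, cols), dtype=int)
    for r in range(rows):
        for c in range(cols):
            patch = image[r * tile:(r + 1) * tile, c * tile:(c + 1) * tile]
            alloc[r, c] = iterations_needed(patch, target_err, max_iters)
    return alloc
```

In this toy setting, flat tiles reach the target with zero iterations while textured tiles receive more, which is the qualitative behavior the SABR description implies.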

Figure 2: Left: Original image crop. Center: Reconstruction using DSSIM network. Right: Reconstruction using Prime network.
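The pixel-wise SSIM-weighted loss listed among the enhancements can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's exact formulation: it assumes the weight is the structural dissimilarity DSSIM = (1 - SSIM) / 2, computes SSIM on non-overlapping 8x8 blocks of a single-channel image scaled to [0, 1], and applies the weight to an L1 error. The names `block_ssim` and `dssim_weighted_l1` are hypothetical.

```python
import numpy as np


def block_ssim(x, y, block=8, c1=0.01 ** 2, c2=0.03 ** 2):
    """SSIM per non-overlapping block for images scaled to [0, 1]."""
    hb, wb = x.shape[0] // block, x.shape[1] // block

    def to_blocks(a):
        # Crop to a multiple of the block size, then group pixels per block.
        a = a[:hb * block, :wb * block]
        a = a.reshape(hb, block, wb, block).transpose(0, 2, 1, 3)
        return a.reshape(hb, wb, -1)

    xb, yb = to_blocks(x), to_blocks(y)
    mx, my = xb.mean(-1), yb.mean(-1)
    vx, vy = xb.var(-1), yb.var(-1)
    cov = ((xb - mx[..., None]) * (yb - my[..., None])).mean(-1)
    num = (2 * mx * my + c1) * (2 * cov + c2)
    den = (mx ** 2 + my ** 2 + c1) * (vx + vy + c2)
    return num / den


def dssim_weighted_l1(x, y, block=8):
    """Mean L1 error with each pixel weighted by its block's DSSIM."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    dssim = (1.0 - block_ssim(x, y, block)) / 2.0        # 0 means identical
    weights = np.kron(dssim, np.ones((block, block)))    # expand to pixels
    h, w = weights.shape
    return float(np.mean(weights * np.abs(x[:h, :w] - y[:h, :w])))
```

Blocks that already look structurally similar contribute little to the loss, so training pressure concentrates on regions where the reconstruction is perceptually worse.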

The proposed method outperforms traditional codecs such as BPG, WebP, and JPEG2000, as well as recent neural network-based methods. Evaluations on the Kodak and Tecnick datasets show substantial improvements in compression efficiency.

Figure 3: Comparison of model results with BPG 420, JPEG2000 (OpenJPEG) and WebP.

Results

- Bjøntegaard Delta Analysis: The analysis shows significant rate savings over previous methods and codecs, indicating more efficient compression while maintaining higher MS-SSIM scores.
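For readers unfamiliar with the Bjøntegaard delta rate, the sketch below shows the commonly used calculation: fit a cubic polynomial to log bit rate as a function of quality for each codec, integrate both fits over the shared quality range, and convert the average gap into a percentage. The function name `bd_rate` and the choice of quality scale are assumptions for illustration; the paper's exact evaluation settings are not given here.

```python
import numpy as np


def bd_rate(rate_ref, qual_ref, rate_test, qual_test):
    """Average bit-rate difference (percent) of test vs. reference codec.

    Negative values mean the test codec needs fewer bits at equal quality.
    Each curve needs at least four (rate, quality) points for a cubic fit.
    """
    rate_ref = np.asarray(rate_ref, dtype=float)
    rate_test = np.asarray(rate_test, dtype=float)
    qual_ref = np.asarray(qual_ref, dtype=float)
    qual_test = np.asarray(qual_test, dtype=float)

    # Cubic fit of log-rate as a function of quality for each codec.
    p_ref = np.polyfit(qual_ref, np.log(rate_ref), 3)
    p_test = np.polyfit(qual_test, np.log(rate_test), 3)

    # Compare only over the quality range covered by both curves.
    lo = max(qual_ref.min(), qual_test.min())
    hi = min(qual_ref.max(), qual_test.max())

    # Integrate each fitted polynomial over [lo, hi].
    int_ref = np.polyval(np.polyint(p_ref), hi) - np.polyval(np.polyint(p_ref), lo)
    int_test = np.polyval(np.polyint(p_test), hi) - np.polyval(np.polyint(p_test), lo)

    avg_log_diff = (int_test - int_ref) / (hi - lo)
    return float((np.exp(avg_log_diff) - 1.0) * 100.0)
```

Because the comparison is made in the log-rate domain, a codec that uses a constant fraction of the reference bit rate at every quality level yields that same fraction as its delta.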

Figure 2: Reconstruction comparisons indicating reduced artifacts especially visible in detailed regions.

Implications and Future Directions

The paper's use of perceptual metrics and adaptive techniques in neural network-based compression promises more efficient compression in real-world applications. Future work could further optimize the SABR technique, potentially by integrating real-time adaptive training adjustments or more refined perceptual metrics. Exploring different residual coding strategies and neural architectures could extend these improvements.

Conclusion

The paper establishes a new benchmark in lossy image compression by integrating advanced machine learning techniques. The innovations in perceptual metric weighting, hidden-state initialization, and spatial bit rate adaptation make significant strides over existing methods both quantitatively and in perceived quality, marking a step forward in the application of deep learning to image compression.